ABSTRACT

Although reproducibility is central to the scientific method, its understanding within the research community remains insufficient. We aimed to explore the perceptions of research reproducibility among stakeholders within academia, learn about possible barriers and facilitators to reproducibility-related practices, and gather their suggestions for the Croatian Reproducibility Network website. We conducted four focus groups with researchers, teachers, editors, research managers, and policymakers from Croatia (n = 23). The participants observed a lack of consensus on the core definitions of reproducibility, both generally and between disciplines. They noted that incentivization and recognition of reproducibility-related practices from publishers and institutions, alongside comprehensive education adapted to the researchers’ career stage, could help with implementing reproducibility. Education was considered essential to these efforts, as it could help create a research culture based on good reproducibility-related practices and behavior rather than one driven by mandates or career advancement. This was particularly found to be relevant for growing reproducibility efforts globally. Regarding the Croatian Reproducibility Network website, the participants suggested we adapt the content to users from different disciplines or career stages and offer guidance and tools for reproducibility through which we should present core reproducibility concepts. Our findings could inform other initiatives focused on improving research reproducibility.

Introduction

Research reproducibility – a concept central to the scientific method – has gained significant attention in recent years. In a study recently published as a preprint, Gould et al. (2023) provided 246 ecologists and evolutionary biologists with a single dataset and asked them to investigate the answers to prespecified research questions. Despite using the same data, they came up with varying results and statistical inferences, likely due to applying different statistical approaches, choices, and methods. However, this finding is not an isolated phenomenon, but rather a manifestation of the ongoing reproducibility crisis, which came to prominence following a survey conducted among 1576 scientists in 2016 (Baker 2016).

These issues with research reproducibility first emerged in psychology (Open Science Collaboration 2015), especially in experimental studies (Youyou, Yang, and Uzzi 2023). However, they were also identified in social sciences in general (Camerer et al. 2018), neuroscience (Button et al. 2013), preclinical research (Begley and John 2015; Begley and Lee 2012), cancer biology (Errington et al. 2014), and other disciplines, with researchers likewise reporting an inability to reproduce previous studies.

Although these replication failures are at times the result of research misconduct, such as fabrication or falsification of data in the initial studies, they are also related to questionable or detrimental research practices (Resnik and Shamoo 2017), such as incomplete reporting of research methods, selective reporting and misleading statistical analyses, or non-sharing of data and study materials (Bouter et al. 2016; National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science 2017a; Resnik and Shamoo 2017). Whether intended or not, such practices can impact the integrity of research, leading the academic community and the general population to doubt the trustworthiness of its findings and to suspect more serious breaches of research ethics and integrity (National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science 2017c). Consequently, they have been one of the key targets of initiatives seeking to improve research reproducibility (Munafò et al. 2017).

Issues with core definitions and terminology

Although the importance of reproducibility has been widely acknowledged in science, there is still an ongoing discussion regarding its core terminology, resulting in conflicting uses of various terms in different contexts (Barba 2018; Sven and Schneider 2023). Gundersen (2021) surveyed the current literature and found varying definitions of the main terms – “reproducibility,” “replicability,” and “repeatability.” These were occasionally accompanied by further qualifiers, such as those proposed by Goodman, Fanelli, and Ioannidis (2016), who presented a framework based on the reproducibility of methods, results, and inferences. They defined “methods reproducibility” as the provision of details on study procedures which could “in theory or in actuality” allow for its repetition; “results reproducibility” (“previously described as replicability”) as “obtaining the same results” as a previous study while closely matching its original procedures; and “inferential reproducibility” as “the making of knowledge claims of similar strength from a study replication or reanalysis” (Goodman, Fanelli, and Ioannidis 2016).

The US National Academies of Sciences, Engineering, and Medicine distinguish between reproducibility and replicability, giving the former term a wider definition (Gundersen 2021; National Academies of Sciences, Engineering, and Medicine 2019b). The United Kingdom Reproducibility Network (UKRN), meanwhile, considers research to be reproducible if individuals with expertise can follow how and why it was done; what evidence was generated; the reasoning behind the analysis; and how all these factors led to the findings and conclusions (United Kingdom Reproducibility Network 2024).

At minimum, these definitions mostly agree that “reproducibility” and its related terms encompass “conducting an experiment with the same or similar experimental procedure over again with different degrees of variation of conditions” (Gundersen 2021). In their linguistic analysis of scientific and nonscientific articles on reproducibility, Nelson et al. (2021) observed a “thematic core” focused on the “sources of irreproducibility and associated solutions.” They also noted varying uses of “reproducibility” and “replication” in different disciplines, suggesting they “might be markers of methodological or disciplinary difference.” However, they did not analyze the exact content and meaning of these terms in different contexts.

Such ambiguity points to the need for more research exploring stakeholders’ perceptions and understandings of reproducibility, its terminology, and its effect on science, which is currently lacking. A previous study applied a mixed-methods approach to understanding the discourse on reproducibility, highlighting the value of qualitative analyses in identifying the bounds and features of reproducibility discourse and “distinct vocabularies and constituencies that reformers should engage with to promote change” (Nelson et al. 2021).

Challenges in practice

Aside from ambiguity in terminology, significant barriers to implementing reproducibility remain in practice. In relation to the conduct of research, these include small sample sizes (Button et al. 2013; Ioannidis 2005), lack of data sharing (Gabelica, Bojčić, and Puljak 2022; Miyakawa 2020), selective reporting, as well as practical reasons such as pressure to publish or lack of oversight on early career researchers (Baker 2016), all of which have been labeled as questionable research practices or minor research misbehaviors (Bouter et al. 2016). Similarly, Cole, Reichmann, and Ross-Hellauer (2023) observed that resource intensiveness, insufficient support and recognition from institutions, and unequal access between researchers hinder the implementation of open research practices, such as sharing data, publishing in open access, and others. In response, researchers and institutions can act by implementing structural changes and setting up clear norms for ethical conduct among researchers, offering training for good practices, incentivizing researchers who foster and promote research integrity, and establishing informal and community-level initiatives (Haven et al. 2022).

However, although research misconduct and questionable research practices underlie irreproducibility (Haven et al. 2022; Munafò et al. 2017; National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science 2017c), it is at times difficult to extrapolate from tools and interventions addressing these issues to those aimed at improving reproducibility, which have yet to be systematically mapped and explored (Dudda et al. 2023). In line with the approaches suggested for handling questionable research practices, some ways of improving reproducibility include institutional policy changes, the delivery of training and education for researchers, the establishment of informal communities, and the development of open-access tools for reproducibility (Cole, Reichmann, and Ross-Hellauer 2023; National Academies of Sciences, Engineering, and Medicine 2019a; National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science 2017c).

We were unable to find prior research on how these interventions, including educational ones, should be delivered, what tools might be used, or what stakeholders’ real-world needs are with regard to fostering reproducibility. In the related context of research ethics training, studies have adopted a focus group methodology to explore the development of web-based platforms for promoting responsible research and increasing its uptake (Evans et al. 2021). The resulting platform now provides diverse resources, reports, and training programs related to research integrity (Embassy of Good Science 2024). Likewise, websites of various associations promoting reproducibility contain useful materials and information on relevant events (United Kingdom Reproducibility Network 2023). However, studies have not yet explored what stakeholders might actually require from such platforms or what content they might consider useful.

To address these gaps, we aimed to explore stakeholders’ perceptions of research reproducibility and its related terms, learn about possible barriers and facilitators of reproducibility improvement, and gather input on what a web-based tool for advancing reproducibility should offer to the research community.

Materials and methods

Study design and reporting

This was a qualitative, focus group-based study with stakeholders within the scientific community, including researchers, editors, research managers, and policymakers from Croatia. We specifically selected the focus group approach because it allows for the generation of new ideas and interaction between participants by juxtaposing their perspectives and experiences on a given topic, which would, in turn, allow us to observe nuances in opinions between participants with different backgrounds (Kamberelis and Dimitriadis 2005). We followed the Consolidated Criteria for Reporting Qualitative Research (COREQ) guidelines (Tong, Sainsbury, and Craig 2007) in describing our findings. We also preregistered the study on the Open Science Framework (Buljan et al. 2023).

Sampling

We used a two-step sampling procedure, starting with purposive sampling, to gather participants of varying backgrounds and disciplines. Two researchers (IB, MFŽ) contacted potential participants via e-mail, in which we explained the study’s aim and invited them to participate in the focus groups. In the second step, we employed the snowball sampling method by asking participants to suggest other stakeholders who might be willing to join the discussions. As we invited potential participants from Croatia, which has a small research community, there was a possibility that they would know one or more of the other focus group members.

Research team composition

The study team included three women (NB, LP, MFŽ) and two men (IB, LU) from Croatia. Three researchers – MFŽ, NB, LU – were doctoral students with degrees in biomedicine, dental medicine, and history/anglistics, respectively. IB is an assistant professor of psychology, while LP is a professor of biomedicine. All researchers had previous experience in qualitative research, either through moderating focus groups or conducting analyses and publishing.

Context and data collection

The focus groups were conducted online via Microsoft Teams (Microsoft Corporation, Redmond, WA, USA) and recorded audiovisually. They lasted 60–75 minutes and were conducted in English. To ensure reliability, one of two researchers (MFŽ for one focus group and IB for three) moderated each discussion, while another (LU) acted as an observer and took field notes in all focus groups while also ensuring that the discussions did not diverge from the study aims. We also used a pre-developed focus group guide, which we previously revised iteratively through discussion and then preregistered along with the study protocol (Buljan et al. 2023). We did not adapt it afterward. Based on this topic guide, the participants were presented with the following three definitions of reproducibility, replicability, and repeatability to facilitate the initial discussion (Parsons et al. 2022):

Repeatability: obtaining the same results under the same conditions;

Replicability: obtaining the same results under slightly different conditions;

Reproducibility: obtaining the same results under very different conditions.

We used the internal transcription tool in Microsoft Teams to generate focus group transcripts, after which two researchers (LU, IB) checked them against the original recording for accuracy. Regarding member checking, we did not return the transcripts or the field notes to the participants for confirmation, but we did send the final findings to five participants for their feedback. They did not have additional suggestions or comments, nor did they observe any divergence from their experiences or perspectives.

Website development

An independent design professional developed the website, initially modeling it after the UKRN website (United Kingdom Reproducibility Network 2023), but adapting it based on the input of all Croatian Reproducibility Network members. The website included information on the organization, its members, and its activities; a dedicated questions and answers section where users could send over reproducibility-related queries; links to articles, conferences, and events related to reproducibility; and useful tools for conducting reproducible research, including statistical software, books, and guidelines. We presented its basic, Croatian-only version to the participants in the second half of the focus group (Croatian Reproducibility Network 2023), asking them for suggestions for improvements.

Analysis approach

We used Braun and Clarke’s reflexive thematic analysis approach (Braun and Clarke 2006). After initially reading the transcripts, one researcher (NB) developed the initial codes and themes, revising them through subsequent readings, while another (MFŽ) checked and revised them afterward in consultation with the initial coder (NB). After this step, the full team discussed and reviewed the findings. This led to an additional restructuring of the themes and subthemes and a final round of discussions to ensure the robustness and validity of the thematic framework.

Data saturation was not applicable, as it is incompatible with Braun and Clarke’s methodology (Braun and Clarke 2021). We did not triangulate our findings against other materials, as we did not find any that would be relevant for our focus groups in the Croatian context. However, we did conduct triangulation by including researchers with diverse backgrounds, as well as researchers with different levels of professional experience. We present all themes and subthemes alongside quotes/excerpts from the focus group transcripts in the “Results” section below. We attempted to limit possible bias by developing focus group guides to help keep the discussion focused on the research aims. We conducted the analysis using NVivo software, version 1.7.1 (QSR International, Burlington, MA, USA).

Ethics

The University of Split School of Medicine Ethics Committee approved the study (Approval number: 2181-198-03-04-23-A024). The participants signed an informed consent form and were informed that they would be recorded audiovisually. They were also made aware that the transcripts would be analyzed on a group level and that their data would be anonymized and known only to the research group members.

Results

Participants

We reached out to 25 individuals, of whom 23 consented to participate and were included in the focus groups. All participants were Croatian; 15 were women. Seven had a background in humanities and social sciences, while 16 came from biomedicine or natural sciences. Most (n = 13) were employed at higher education institutions, followed by research (n = 6) and healthcare institutes (n = 4). All participants had experience in conducting research, and they also had other overlapping roles within the academic ecosystem, acting as editors, research managers (individuals who participate in the decision-making process as advisors and staff), policymakers, or teachers. The participants included researchers whose work was affected by the challenges and changes in reproducibility, but was not related to the topic explicitly (labeled as researchers/consumers), as well as those who were researching reproducibility topics directly and were thoroughly familiar with the theme (labeled as researchers with experience/expertise in reproducibility) (Table 1).

Table 1. Descriptive statistics about participants (n = 23), presented as frequencies.

Themes identified

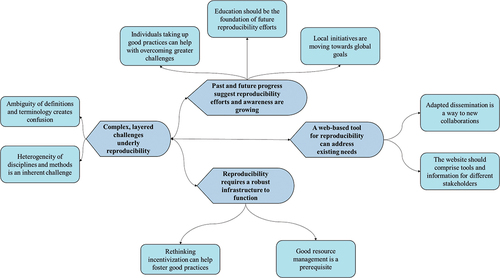

Overall, four themes and nine sub-themes emerged in the analysis, creating a thematic framework reflecting the participants’ perspectives of reproducibility. In this framework, the absence of clear definitions and differences in the extent to which reproducibility is perceived as a problem in some disciplines presents a complex, inherent challenge to any efforts for addressing reproducibility. Yet despite this challenge, past and ongoing progress suggests that general awareness of reproducibility-related issues is growing, and that it can be advanced through education, informal initiatives, and the fostering of good practices in the research community. Likewise, incentivization and good resource management emerge as key components of any infrastructure targeting these challenges. Our web-based tool could, therefore, serve as a platform for researchers, funders, and policymakers to connect, get educated, and find useful information, provided that its structure and content are designed with each of these groups in mind.

Theme 1: Complex, layered challenges underlie reproducibility

Participants described reproducibility as theoretically and practically complex, highlighting its inherent ambiguity and a lack of consensus on its very definition.

I think the conversations […] around replication and reproducibility are kind of a Pandora’s box. – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

Sub-theme: Ambiguity of definitions and terminology creates confusion

Participants expressed mixed levels of personal familiarity with the three abovementioned definitions of reproducibility, replicability, and repeatability and frequently used them interchangeably during the discussion. They observed that this confusion was also present in general discourse on the topic, suggesting that an official classification should be set up to avoid confusion.

I think this distinction between the three terms should be […] more clear to everybody. I think that we are just not using it in the correct way. – Woman; postdoctoral researcher; researcher/consumer

I think most of the time I use the term reproducibility. I didn’t know those particular nuances in language. – Woman; senior researcher; researcher/consumer, teacher, research manager/policymaker/editor

Besides this, they emphasized a lack of standardization and a diversity of perspectives on what exactly replication entails (just repeating methods, obtaining the same results, or something else), which further obscured what exactly a “successful” replication is.

They start with “Can we get the same results as other people in other studies,” and then all these questions arise: Are we transparent enough with the methods? Do we do proper data sharing? Do we share analytical pipelines […]? What does it mean to reproduce a result – is that an inferential statistical criterion [or] is [it] something different? – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

I think that there is actually more talk about what replicability is. So if you collect new data and […] do something slightly differently, should your results actually repeat exactly or, you know, how exactly? They can be a bit different [but] not drastically different, but then where is this point of being drastically different? – Woman; senior researcher; researcher with experience/expertise in reproducibility

Overall, the participants found that ambiguity in terminology and definitions made any advances in reproducibility more challenging to design or implement, opining that it should be resolved before moving forward.

Sub-theme: Heterogeneity of disciplines and methods is an inherent challenge

The participants emphasized that reproducibility is understood differently in different disciplines, in terms of both methods and practical issues. They noted that researchers in some fields were not as deeply concerned with or aware of it as others were, with those primarily relying on quantitative data and experimental methodologies seemingly being more involved.

I also talked with colleagues from other studies and some older teachers, professors of economics, and most of them don’t know about those terms. – Man; postdoctoral researcher; researcher/consumer, teacher

I think that other studies are not involved in [reproducibility as much] as medicine – for example, kinesiology. – Man; postdoctoral researcher; researcher/consumer, teacher

It is easier to measure something in a controlled environment. In psychology and sociology, it’s harder to get the same conditions or pick the same population […] – Woman; PhD student; researcher/consumer

The participants considered that, for quantitative studies, knowing methodology and statistics is critical to interpreting and understanding divergent results (e.g., when drugs have variable effects between studies). In that sense, statistical questions can create confusion – for example, what is statistical, and what is practical significance?

How can you know that the same medication will have the same effect every time? When you are taking pills for a headache, sometimes it’s working, sometimes it’s not working. So when you have a lot of patients, you can’t be sure that the results will be the same. – Man; postdoctoral researcher; researcher/consumer

It’s like a very complex conversation between what is statistical significance versus practical significance and [how] do we decide when do we have a truth claim versus not? – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

These statements suggest that methodological and statistical literacy is a prerequisite for understanding the basic concepts and importance of reproducibility and (sometimes) for conducting replication studies.

Theme 2: Reproducibility requires a robust infrastructure to function

This theme encompasses all structural, practical barriers that make implementing reproducibility difficult, as opposed to the theoretical ones presented above. These include insufficient practical skills, as well as a scarcity of finances and data needed to conduct replication studies. Due to these barriers, as one participant pointed out, people feel excluded and become defensive when discussing reproducibility-related practices, especially data sharing.

I find [it] hard when I discuss with my colleagues [about] open science practices [as] they don’t feel invited. [Another participant] had a great lecture at our student symposium, and she had a sentence that said “don’t judge people.” It resonated with me so hard that people often feel attacked when you talk about open science. […] If they don’t share data, they don’t share code, and they don’t know how to do it, they often feel like they can’t be a part of this community. – Woman; PhD student; researcher/consumer

This suggests that many of these practical barriers are perceived as structural and systemic; resolving them could likely ensure more people willingly engage in reproducibility.

Sub-theme: Good resource management is a prerequisite

Participants who had previous experience with reproducibility found good resource and data management to be crucial for making reproducibility efforts successful. They observed that even in cases where datasets are shared, they are often made incomprehensible and unusable, likely because researchers sometimes feel mandated to share data, leading them to “revolt” against the system in this way. Others pointed out a lack of field-specific repositories for data sharing, which would make finding information easier.

So you have to submit the data – [so that person would say] OK, so I’ll just submit my data, but they’re not going to be useful to anyone because they are not going to be understandable. – Woman; senior researcher; researcher with experience/expertise in reproducibility

[…] we have a lack of exclusive repositories for each scientific branch. If we have one repository with clustered-up data, that’s going to be very difficult […] to dig out what you need. – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility

Meanwhile, both junior and senior researchers observed that reproducibility efforts are often very costly, making them unfeasible. They thus noted that replication studies should not be done just for the sake of replication.

The studies are quite lengthy and costly, so reproducing them [should] not necessarily [be done] just to prove someone else’s hypothesis. – Woman; PhD student; researcher/consumer, teacher

[If] it’s very costly and if you don’t gain much by repeating or not repeating results, then don’t do it. – Woman; senior researcher; researcher with experience/expertise in reproducibility

Our participants found that sensible, well-managed funding; encouragement of transparent reporting and data sharing; education; and the establishment of standardized practices for producing accessible, understandable data could act as practical facilitators to research reproducibility, provided that they are based on a culture that values methodological rigor and reproducibility.

Sub-theme: Rethinking incentivization can help foster good practices

Participants observed that personal motivation is necessary for engaging in reproducibility, irrespective of whether it is based on practical interests or willingness to participate in good practices. They also noted that many researchers refrain from participating in reproducibility until their institutions or policymakers implement the required infrastructure and rules, which is why some can easily ignore their own poor practices or those undertaken by others.

When I talk to people in psychology who are not involved with open science [about] replicability, they often [say] “I’m waiting on the side to see what’s going to fall through”, right? – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

[…] you sometimes encounter [issues with] reproducibility within the lab, between groups […] or between departments and you just […] turn a blind eye and say, oh, it could be because, I don’t know, reagents, air, the way the animals were behaving that day. – Woman; PhD student; researcher/consumer

Likewise, the junior researchers in our focus groups claimed that they felt prevailing pressure from academia to publish novel, innovative studies rather than replications.

Would I conduct a replication or reproducibility study? I would say no because I’m pursued by the publish-or-perish culture, and I need to make novel papers [if I want to] pursue my career in academia. – Woman; PhD student; researcher/consumer

Overall, our participants emphasized the importance of personal motivation, integrity, and ethics for reproducibility practices. However, because of the prevalent publish-or-perish attitude and a lack of incentives, they observed that scientists find it challenging to prioritize replication studies.

Theme 3: Past and future progress suggest reproducibility efforts and awareness are growing

This theme captures the participants’ impressions about ongoing change and improvements in reproducibility and related practices such as open science and preregistration initiatives. They observed that reproducibility has been receiving more attention as more people are engaging in it and in related open science initiatives.

So I think we need more education. I think it’s still really [in its] roots, but it’s much better than it was five years ago when nobody even talked about open data or the importance of reproducible code […]. – Woman; senior researcher; researcher with experience/expertise in reproducibility

They also emphasized the importance of increasing awareness of reproducibility and good research practices.

Sub-theme: Local initiatives are moving towards global goals

Participants with experience in reproducibility stated that the initiative has seemingly been gaining attention over time, with some having previously worked on reproducibility studies and initiatives and remaining interested in the topic. They generally commended open science efforts, offering general, nonspecific examples they encountered in their work.

We are doing also some hackathons on true reproducibility – so not just potential to reproduce, but can you actually, if you have the code and data, reproduce the results […] and can you reproduce without having any analytical code? Can you just retrace the methods and try to still get the result if you have the data available? – Woman; senior researcher; researcher with experience/expertise in reproducibility

I did my PhD in the Netherlands [where] open science is huge, and [reproducibility] was especially growing when I was there. – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

In their opinion, these initiatives seemed to be spreading globally and acted as facilitators in disseminating the core ideas of reproducibility to a wider audience.

Sub-theme: Education should be the foundation of future reproducibility efforts

Education emerged as a panacea for emerging issues in reproducibility throughout the focus groups. At baseline, the junior researchers reported hearing about reproducibility during their studies.

So I think it was something like the definitions for something like this, but they were only mentioned during the lecture, so I don’t know much more about it. – Woman; PhD student; researcher/consumer

I heard a lot about it throughout my studies and also during my PhD, so I’m familiar with the term. – Woman; PhD student; researcher/consumer, teacher

However, all participants agreed that comprehensive education could be a key facilitator of reproducibility in the future, as the field is still novel and changing. They suggested that this does not have to happen through formal education, but rather that students and researchers should be taught how to adapt to change, with such education efforts designed specifically for different generations. Here, senior researchers suggested a focus on changing the mind-set of younger individuals and the newer generations of researchers.

This is because the times [and the problem] have changed since our studies, right? So […] that’s a continuous process. [Even if we begin] to teach these things systematically, [they] are going to change in the future. So maybe just [think about] prepar[ing] for change and to be adaptable to these changes […]. [Think about] ways to research these things, to check what has changed, [or] how to do things. – Woman; senior researcher; researcher/consumer

I think the best way to start is […] with the new generations, with education, with changing the mindset […]. – Woman; postdoctoral researcher; researcher/consumer, research manager/policymaker/editor, teacher

Some participants had previously engaged in reproducibility-related practices, some expressed their personal interest to do so, while others commended existing international initiatives on reproducible research practices. They also consistently stressed the importance of advancing education on reproducibility by teaching flexibility to change, adapting it to different generations, and encouraging a mentality shift, particularly among young people.

Sub-theme: Individuals taking up good practices can help with overcoming greater challenges

The participants provided examples of many positive advances in reproducibility, but noted they are often limited to specific settings. For example, senior researchers in our sample have encountered different open data/open science initiatives before, opining that related practices are becoming more commonplace, with institutions and journals sometimes making them mandatory.

[…] considering these open science policies at [INSTITUTION], I think we are quite encouraged by administrators at our university because our library offers [to] upload our publications on Dabar [collection of Croatian digital archives and repositories], so we don’t have to do it by ourselves. Sometimes, we don’t have to send it to them; they just send us an e-mail that they uploaded it on Dabar. – Woman; senior researcher; researcher/consumer, teacher

[…] but lately when I see the term [reproducibility], it’s [...] when I’m submitting a paper – looking at guidelines in journals and then they ask to describe the method and statistics to ensure replicability or reproducibility. – Woman; senior researcher; researcher/consumer, teacher

Others listed many methodological procedures that could make studies reproducible in the future, such as choosing the appropriate study design, keeping notes, repeating measurements when possible, including a larger sample of participants, making use of reporting checklists, and generally being more detailed in reporting on research procedures.

Yeah, everything should be listed […] Just write every single thing in detail on what you’ve done. Because in a matter of a week, maybe you’ll remember all the details, but in a month or two years, [when] you need to come back to the same thing, you will for sure not know at all what you have done. – Woman; postdoctoral researcher; researcher/consumer

[When] writing the paper, one should […] write all the details. Keep in mind that, okay, if I take [these] methods, if I repeat it and do every single thing that is written, will I be able to reproduce the methodology? – Woman; postdoctoral researcher; researcher/consumer

They also generally agreed on the need for transparency in reporting analytical codes or algorithms used in a study, as well as methodological steps in general.

[…] even if you don’t want to disclose your complete code, your algorithm, it is always honest to write information on functions you use, etc. – Woman; postdoctoral researcher; researcher/consumer, teacher

If you use this approach in visualizing the network and cleaning the data so that they fit in functions that you are planning to use, it is necessary to write in your article how you prepared the data. Which functions did you use? Which package, which program, language, and how did you visualize the data? – Woman; postdoctoral researcher; researcher/consumer, teacher

The increased awareness of open science practices might signify a growing commitment to transparency when conducting scientific research, encouraging the shift to more reproducible research. The participants emphasized that detailing study procedures, transparently reporting on the code and methodology used in the study, and pragmatically selecting studies for replication based on cost-benefit considerations could advance reproducibility.

Theme 4: A web-based tool for reproducibility can address existing needs

Sub-theme: The website should comprise tools and information for different stakeholders

In reviewing the webpage with a web-based tool for research reproducibility, the participants offered several suggestions regarding its practical content, which, during our study period, included links to articles, statistical software, and conferences. For example, they suggested that it could also offer a starter pack for early career and experienced researchers alike. To target both groups, they suggested that the page should be subdivided through panels or further subpages, offering the user easier navigation depending on their prior knowledge of reproducibility.

I think for this kind of page or project, it’s really important to have basic steps [on] how to get started. So you have a lot of materials, and sometimes even I, [who had been] practicing open science for a year or two, get lost in all the materials. So maybe “how to” […] starter pack for PhD students or for senior scientists […]. – Woman; PhD student; researcher/consumer

I went directly to your page […] I would like to suggest for you [to have] four panels […] so users don’t need to scroll on your web page. I often find that people are quite lazy, and they don’t like to scroll to see other content. – Woman; PhD student; researcher/consumer

Likewise, they commended the availability of links to free statistical software but suggested adding other tools that are applicable to different sciences.

If there are some tools available on the site […] for researchers […] that can be used or directions on how to use them. That would be my reason to visit the page. – Man; senior researcher; researcher with experience/expertise in reproducibility, research manager/policymaker/editor, teacher

I think it’s very tricky because it’s all discipline-specific, [as is] software that is used in different disciplines. – Woman; senior researcher; researcher with experience/expertise in reproducibility

However, the participants found the subpage comprising publications on reproducibility confusing, as it was unclear to them what these studies were about. They also suggested that dedicated subpages should be assigned to studies, tools, and other content displayed there, to avoid oversaturation. Others suggested having a section with essential information and a definition of reproducibility, along with links to relevant studies, which could help address the stakeholders’ varying levels of knowledge.

And then [this subpage] is a bit unclear. So what is the evidence [in these publications]? [Is it] evidence that reproducibility is important? Evidence that we have the confidence? – Woman; senior researcher; researcher with experience/expertise in reproducibility

I guess it would be good to have a basic [explanation] so the person who doesn’t want to read an article actually […] just understands generally why these are important, and then there can be links to […] some specific publications […]. – Woman; senior researcher; researcher with experience/expertise in reproducibility

I also find it a bit confusing because when I read [this subpage], I would think maybe about “Tools” and about “Scientific articles,” but I wouldn’t think about “Conferences” and “Participate in research” […] that seems more like events [or] how to get involved. – Woman; senior researcher; researcher with experience/expertise in reproducibility

Sub-theme: Adapted dissemination is a way to new collaborations

Regarding the issue of dissemination, the participants focused on the need to use multiple media to reach diverse audiences, especially younger researchers. This included blogs, videos, photographs, and even podcasts.

If I may suggest something that’s really interesting for me […] a blog tab. So it’s where people who are actually members of your group can write about their experiences with open science, their research, their pathway to open practices. I think it’s quite motivating for potential members to read that […]. – Woman; PhD student; researcher/consumer

In my experience with advocacy, especially in Open Access, videos work much better than texts, so I find it much easier to engage people if they can actually see something for a couple of minutes, especially younger scholars […]. That also works really well, instead of blog-like posts. – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

“Interactivity” came up several times in the discussion. Specifically, allowing participants to send over questions through the website was seen as a way of increasing uptake or awareness. These communication channels could thus be adapted to target different stakeholders, including other researchers and funders.

I would add […] interactive [features]. So that users could write questions and discuss because people have different ideas on what reproducibility is, why it’s important, and how it should be used. So I would put an “interact” window somewhere as well. – Woman; postdoctoral researcher; researcher with experience/expertise in reproducibility

[…] you know, like some kind of tab, “How to” for funders or “Ask questions if you’re a funder” […] because, obviously, reproducibility has to be funded […] – Woman; senior researcher; researcher/consumer

Some participants saw dissemination through social media as inherently positive, while others thought it unprofessional and unreliable. Those who preferred social media recommended using several platforms to increase visibility and include diverse readers, adding that these posts should contain news and planned events with links to the webpage. This could also mean utilizing paid advertising services if funds are available.

Different generations would use different networks, [as would] different professionals versus [the] general public. So, if you do all of these main [social media outlets], you would reach, I think, the highest number of people. – Woman; senior researcher; researcher/consumer

And all this is shared through the central network, so [that] anybody can participate, and you can invite each other. This is a template that really works, in my impression […]. – Man; postdoctoral researcher; researcher with experience/expertise in reproducibility, teacher

Maybe [have the news part] updated on social media. – Woman; postdoctoral researcher; researcher, research manager/policymaker/editor, teacher

You can pay for these things to make your web page more visible in search tools. So, you have good tags on how to find your page, and it depends on what people are looking for, right? – Woman; postdoctoral researcher; researcher/consumer, teacher

Discussion

Through four focus groups with various stakeholders in the Croatian scientific community, we explored the understanding of reproducibility and possible ways of improving it. Specifically, we observed a general consensus across our focus groups that, despite recent growth in related research and the formation of various initiatives, diverse and often disparate definitions of reproducibility and its core terminology persist between disciplines and in different contexts. Regarding implementation, the participants saw a need to support researchers with incentivization, robust technical infrastructure, and comprehensive policies that would address the traditional “publish-or-perish” culture. Finally, education was seen as an important way to advance reproducibility and foster the uptake of related practices among junior and senior researchers alike, especially outside of the purview of “formal” education. Interestingly, both junior and senior researchers in our group saw it as important for their future work, with the former highlighting a special need to implement such education within formal educational programs in greater detail. They, therefore, saw the Croatian Reproducibility Network website as useful, especially if it contained practical tools such as statistical software or “reproducibility starter packs.” However, they also suggested that it should be supplemented with alternative methods of communication, such as vlogs or podcasts, to better attract diverse stakeholders and younger researchers.

The participants’ observations of ambiguity in the various definitions related to reproducibility and heterogeneity between disciplines with regard to core terminology correspond to observations in existing literature (Goodman, Fanelli, and Ioannidis Citation2016; Gundersen Citation2021). While they had at least heard of the term “reproducibility” in some context, they demonstrated varying familiarity with “repeatability” and “replicability,” finding them to either be synonymous or complementary to each other, depending on their background. This is in line with previous results (Gundersen Citation2021), which found varying uses of the terms within scientific articles on reproducibility. Likewise, the participants did not always distinguish between open science practices (such as open data, open methods, and others (Parsons et al. Citation2022)) and reproducibility, suggesting that they found them to be inter-related and co-dependent. Kohrs et al. (Citation2023) likewise noted that “some use these terms interchangeably,” yet highlighted their importance for reproducibility in general – a trend noted in empirical research as well (Hardwicke et al. Citation2020).

The participants who had experience with reproducibility research (primarily postdoctoral or senior researchers) focused on the issue of “what reproducibility entails.” This has previously been discussed by Goodman, Fanelli, and Ioannidis (Citation2016), who proposed a framework of “Methods reproducibility,” “Results reproducibility,” and “Inferential reproducibility” based on “descriptive qualifiers” which are meant to clarify what aspect of reproducibility is being discussed. This framework was supported by Plesser (Citation2018), among others. In a similar way, Gundersen (Citation2021) suggested three degrees of reproducibility, where research is “Outcome reproducible” (the outcome is identical to that of the original experiment), “Analysis reproducible” (the same analysis leads to the same interpretation, but not necessarily the same outcome), and “Interpretation reproducible” (the interpretation is the same, but the outcome and analysis are not necessarily so).

Although these terms theoretically help focus discussions, it would seem that confusion persists in our study sample regarding what constitutes reproducibility. A core issue our participants observed concerned the “truth claims” emerging from quantitative studies, especially in view of failing or succeeding in obtaining the statistical significance of the original experiment. Many of these failures and successes that underlie replication efforts relate to questionable or detrimental research practices, such as improper statistical approaches in the initial studies, p-hacking, HARKing, and issues with sample sizes, among others (Macleod and the University of Edinburgh Research Strategy Group Citation2022; Munafò et al. Citation2017; National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science Citation2017c). While these practices, alongside research misconduct such as falsification or fabrication, are “avoidable” in the sense that they can be resolved through training, incentives, and education, they should be distinguished from challenges that run deeper as integral aspects of the scientific process (e.g., Type I errors or issues with generalizability) (Macleod and the University of Edinburgh Research Strategy Group Citation2022).

In relation to this, Goodman, Fanelli, and Ioannidis (Citation2016) describe that, while statistical significance might be achieved in a reproducibility study, it does not necessarily result in definitive “knowledge claims”; conversely, “multiple studies that fail to demonstrate statistical significance do not necessarily confirm the absence of an effect” (Goodman, Fanelli, and Ioannidis Citation2016). This is echoed in existing literature, which discusses a need for divergence from traditional null hypothesis significance testing and evaluation via p-values in order to advance reproducibility (Lash Citation2017), and is further exacerbated by the challenge of “how much evidence needs to be gathered for effective proof,” which significantly varies between disciplines and specific studies (Goodman, Fanelli, and Ioannidis Citation2016). While research has not specifically answered this issue, our participants suggested that comprehensive methodological and statistical education could further our awareness and understanding of the issue and help researchers better interpret “statistically significant results” in relation to their own limitations and the limitations of their study.

In practical terms, our participants observed that researchers feel compelled by mandates to use open science and subsequently implement reproducibility-related practices. Consequently, they found that the datasets that researchers feel “forced” to share are unreadable or incomprehensible – either due to a lack of know-how or personal revolt against mandates – thus preventing reproducibility. A systematic review by Zuiderwijk et al. (Citation2020) found “Requirements and formal obligations,” “Expected performance,” and “Legislation and regulation” to be three of eleven drivers/inhibitors of researchers’ motivations for data sharing. Likewise, surveys among researchers have found that funder, institutional, and journal requirements are among the main motivators for data sharing (Kaiser and Brainard Citation2023; Nelson and Eggett Citation2017). Diaba-Nuhoho and Amponsah-Offeh (Citation2021) underlined the importance of different stakeholders (research institutions, grant awarding institutions, and publishers) in incentivizing researchers who adhere to open science practices through promotions, contracts, or funding. Our participants suggested that, although such mandates, including those aimed at establishing reproducibility-related practices, do help advance reproducibility, they must be implemented with care and only following extensive training that would provide researchers with the know-how, instill values, and highlight career benefits.
In line with this, initiatives aimed at addressing key challenges in reproducibility and fostering research integrity stress a need to establish a positive culture where such practices are integrated as a habit by funders, publishers, and institutions alike, as opposed to one where they are forced upon the research community (National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science Citation2017b; Roje et al. Citation2023; Stewart et al. Citation2022).

Concerns about the costs of reproducibility-related practices such as open data also emerged as a key barrier. In practice, these costs can grow to be extensive, as the expenses due to securing “data managers, staff time to prepare data, and repository fees” can rise to more than one million USD annually per institution (Kaiser and Brainard Citation2023). However, studies have reported the costs of irreproducible preclinical research to be vastly higher (Freedman, Cockburn, and Simcoe Citation2015), let alone those of other disciplines. Likewise, the extensive availability of open-source, free repositories and guidance/training programs for open science practices (OpenAIRE Citation2024; National Institutes of Health Citation2023) could help meet training and infrastructural needs, driving costs down.

Another notable finding is our participants’ perception of the “confusion” of researchers when attempting to engage in reproducibility. They observed that those without know-how seemingly feel “embarrassed” or less able to engage in reproducibility practices. Furthermore, due to the diversity of backgrounds, some participants noted a lack of repositories for data sharing or materials specifically related to their disciplines, which prevented researchers from engaging in data-sharing practices. However, with the availability of online resources, it might be possible that this finding reflects a recent survey where scientists underestimated the prevalence and uptake of open science practices among their peers (Ferguson et al. Citation2023). Likewise, it is possible that the plurality of information available on such practices might cause confusion among researchers who “do not know where to look” for guidance. This, in turn, further highlights a need for the systematization of guidance and the creation of “introductory” packages for open science/reproducibility geared toward less experienced researchers.

The participants further noted that incentivization (or the lack thereof) through financial/institutional support for reproducible research and the validation of such studies for academic advancement could help engage people in reproducibility efforts. Of note, junior researchers in our sample also found this challenging, highlighting that the “publish-or-perish” culture also affected the way they conducted research, as it could affect their long-term careers. This is in line with the findings of Stieglitz et al. (Citation2020), where career advancement was found to significantly influence willingness to share data openly. Likewise, an adaptation of “research assessment criteria and program requirements” was suggested as one of the key strategies for advancing reproducibility and open science by Kohrs et al. (Citation2023), which is similar to the findings of a scoping review that looked at factors influencing the implementability of research integrity (Roje et al. Citation2023). Addressing this would require actions from all stakeholders within the research ecosystem and would include additional training and rewards for researchers willing to engage in such practices.

In relation to this, the bias of institutions and publishers toward non-negative research results and novel studies observed by our participants meant that many reproducibility efforts go to waste. This bias toward non-negative results as a factor impeding reproducibility and research integrity has been noted in the literature (Da Silva and Jaime Citation2015; Pawel et al. Citation2023; Roje et al. Citation2023; Romero Citation2019), with research suggesting a need for special venues for such studies (Pusztai, Hatzis, and Andre Citation2013). In light of our findings, these factors could generally be addressed by re-designing institutional evaluation criteria, which should further reward researchers who conduct reproducibility studies.

Some participants observed a growing awareness of reproducibility issues globally, especially in view of a growing number of grassroots initiatives and organizations. These include, among others, the Reproducibility Networks organized in the UK and elsewhere (Munafò et al. Citation2020; Stewart et al. Citation2022; United Kingdom Reproducibility Network Citation2023), but also national-level open science and research integrity efforts in the Netherlands (Open Science Netherlands Citation2024; Netherlands Research Integrity Network Citation2024), which are all focused on fostering good research practices and “cultural” change by targeting funders, publishers, institutions, and individual researchers alike. Other notable initiatives (which did not emerge in the focus groups) include the AllTrials and Null Hypothesis initiatives, aiming to make more non-positive study results available and accessible to the public through either publications or preprints (AllTrials Citation2024; Null Hypothesis Initiative Citation2024). In the participants’ view, the initiatives they mentioned play a role both in raising awareness and in educating researchers outside of the formal institutional context through meetings, podcasts, and the dissemination of guidelines for reproducibility.

In fact, both informal and formal education came up as a key concept throughout the focus groups, irrespective of specific backgrounds or career stages. Our participants, especially ones with less experience in research, had previously encountered reproducibility and related practices through formal education, but were seemingly not educated extensively on how to take them up or what they comprised. Consequently, they noted a need for more education, as it would help them learn about practices that would become relevant to their career. Senior researchers, meanwhile, suggested that the implementation of such education at early stages could help create “cultural” changes in the long term. Despite recommending that such education be incorporated into curricula, as it would play a key role in the future of science, the participants did not suggest specific approaches on how this could be realized. They did note that these educational programs should teach people why reproducibility matters, as well as the necessary skills to put it into practice (e.g., data-sharing, proper statistical analyses). Adopting reproducibility and open science practices, in that sense, could especially help further advance reproducibility, as our participants opined that such incremental uptake of skills could result in a larger-scale impact on science over time.

One example of an educational intervention aimed at creating a research culture that nurtures open science is the GW4 Undergraduate Psychology Consortium set in the UK, which aimed to teach undergraduate students research practices such as preregistration, transparent reporting, and applying rigorous research methods when conducting studies (Button Citation2018). A similar approach was taken by Toelch and Ostwald (Citation2018) in designing an open science course. Kohrs et al. (Citation2023) suggested several strategies, such as “requiring reproducible research and open science practices in undergraduate or graduate theses” or even performing replications within courses as end-goal projects. Aside from these top-down approaches, a non-formal option is organizing journal clubs and similar communities where researchers from various disciplines could discuss reproducibility, gather and share materials, and help each other in adopting new practices (Kohrs et al. Citation2023). Introducing early career researchers to these rigorous research practices at such an early stage of their professional career might help foster reproducibility, research integrity, and open science in the long term, as it would help create an internal, value-based culture rather than one centered on mandates.

Our participants found that a research reproducibility website should contain both guidance and practical tools adapted to different disciplines and researchers at varying stages of their careers. This is in line with the findings of Labib et al. (Citation2022) that training in open science should be available to all, yet tailored to researchers’ specific career stages, and that the availability of tools could help empower researchers. Toelch and Ostwald (Citation2018) likewise found education on open-source tools to be a critical part of teaching open science and reproducibility. Kohrs et al. (Citation2023) suggested that “resource hubs … collecting resources, or providing training and consulting services” could be designed for both educating individuals and forming communities focused on advancing reproducibility. Aside from the statistical software and hands-on guidance mentioned by our participants, the availability of new tools for qualitative analysis (ATLAS.ti Citation2024) and artificial intelligence, such as ChatGPT or Bard, could help educate and train individuals on reproducibility. For example, ChatGPT features specialized models such as the “Data Analyst,” which helps researchers analyze and visualize their data (OpenAI Citation2024). Based on our findings, gathering these tools in one place and forming a “toolbox” adapted for specific groups of researchers could be of great use to the Croatian and other research communities.

The participants likewise noted the importance of blog posts, podcasts, and similar communication methods that could be disseminated through social media, thus providing additional channels to reach diverse groups. The ReproducibiliTeach YouTube channel (ReproducibiliTeach Citation2024) and the ReproducibiliTea Podcast (ReproducibiliTea Citation2024) serve as good examples of this approach, as does the website of the Embassy of Good Science, which provides various educational formats aimed at teaching research integrity (Embassy of Good Science Citation2024). They suggested that a multi-method outreach strategy could help reach researchers who rarely visit websites or dislike newsletters and build a community of diverse stakeholders, especially early-career researchers who prefer social media or podcasts/blogs.

Strengths and limitations

To the best of our knowledge, this is the first qualitative exploration of how stakeholders within academia observe research reproducibility – both its base-level terminology and its future within Croatia and globally, with a focus on possible barriers and facilitators to implementation. Within this study, we included a diverse yet balanced group of stakeholders with varying levels of experience and used a well-established, flexible, and robust analytical approach in analyzing the data. In divergence from our study goals, we were unable to gather a sufficient number of policymakers and funders; we also did not manage to gather any individuals from technical disciplines (e.g., information technology, architecture, etc.), leaving a gap for future studies. However, it is worth noting that Croatia (our study setting) is a rather small country with a limited pool of research funders, policymakers, and industry stakeholders, meaning that research among these populations might be better suited for a country without these limitations. Likewise, we only received responses to review our findings from five participants who verbally consented to do so during our focus groups. Furthermore, the use of an online platform instead of conducting physical focus groups could have limited interaction between the participants, at least in a natural, face-to-face way. However, we tried to mitigate this by preregistering our study and developing a focus group guide to ensure that the discussions would be focused toward our research questions (as much as possible), but also to offer a relatively loose framework where discussions could be redirected to our participants’ interests. When interpreting results, one must also consider the small scientific community setting in which the focus groups were conducted, so our results cannot be generalized to other contexts.

Conclusions

Through four focus groups with stakeholders from Croatia, we explored the concept of reproducibility and related issues, and received valuable input on the development of the Croatian Reproducibility Network website. According to our participants, standardization of terms is needed to communicate reproducibility issues to the wider scientific community. Moreover, they emphasized that the principal steps toward improving reproducibility should be education in reproducibility and changes to the incentive structure. Regarding the Croatian Reproducibility Network website, they identified educational materials, personal stories related to research reproducibility, and a social media presence as key components for making the site valuable to the research community. To further explore approaches to increasing reproducibility, we aim to conduct a quantitative survey study within the Croatian population about the critical issues related to reproducibility practices, to see how we could further tailor our educational interventions to different stakeholders within the Croatian research community.

Author contributions

Substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data for the work – LU, NB, MFŽ, IB. Drafting the work or reviewing it critically for important intellectual content – all authors. Final approval of the version to be published – all authors. Agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved – all authors.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The participants of this study did not give written consent for their data to be shared publicly, so due to the sensitive nature of the research, raw data from this study was not made publicly available. The COREQ checklist and the focus group guide are available at the Open Science Framework (Buljan et al. Citation2023).

Additional information

Funding

References

- “AllTrials”. 2024. Main Page. https://www.alltrials.net/find-out-more/about-alltrials/.

- “ATLAS.ti”. 2024. Main Page. https://atlasti.com/.

- Baker, M. 2016. “1,500 Scientists Lift the Lid on Reproducibility.” Nature 533 (7604): 452–454. https://doi.org/10.1038/533452a.

- Barba, L. A. 2018. “Terminologies for Reproducible Research.” arXiv Preprint. https://doi.org/10.48550/ARXIV.1802.03311.

- Begley, C. G., and J. P. A. Ioannidis. 2015. “Reproducibility in Science: Improving the Standard for Basic and Preclinical Research.” Circulation Research 116 (1): 116–126. https://doi.org/10.1161/CIRCRESAHA.114.303819.

- Begley, C. G., and M. E. Lee. 2012. “Raise Standards for Preclinical Cancer Research.” Nature 483 (7391): 531–533. https://doi.org/10.1038/483531a.

- Bouter, L. M., J. Tijdink, N. Axelsen, B. C. Martinson, and G. Ter Riet. 2016. “Ranking Major and Minor Research Misbehaviors: Results from a Survey Among Participants of Four World Conferences on Research Integrity.” Research Integrity and Peer Review 1 (1): 17. https://doi.org/10.1186/s41073-016-0024-5.

- Braun, V., and V. Clarke. 2006. “Using Thematic Analysis in Psychology.” Qualitative Research in Psychology 3 (2): 77–101. https://doi.org/10.1191/1478088706qp063oa.

- Braun, V., and V. Clarke. 2021. “To Saturate or Not to Saturate? Questioning Data Saturation as a Useful Concept for Thematic Analysis and Sample-Size Rationales.” Qualitative Research in Sport, Exercise and Health 13 (2): 201–216. https://doi.org/10.1080/2159676X.2019.1704846.

- Buljan, I., M. F. Žuljević, N. Bralić, L. Ursić, and L. Puljak. 2023. Understanding of Concept of Reproducibility and Defining the Needs of the Stakeholders: A Qualitative Study. Open Science Framework. https://doi.org/10.17605/OSF.IO/H9RT7.

- Button, K. S. 2018. “Reboot Undergraduate Courses for Reproducibility.” Nature 561 (7723): 287–287. https://doi.org/10.1038/d41586-018-06692-8.

- Button, K. S., J. P. A. Ioannidis, C. Mokrysz, B. A. Nosek, J. Flint, E. S. J. Robinson, and M. R. Munafò. 2013. “Power Failure: Why Small Sample Size Undermines the Reliability of Neuroscience.” Nature Reviews Neuroscience 14 (5): 365–376. https://doi.org/10.1038/nrn3475.

- Camerer, C. F., A. Dreber, F. Holzmeister, T.-H. Ho, J. Huber, M. Johannesson, M. Kirchler, G. Nave, B. A. Nosek, T. Pfeiffer, et al. 2018. “Evaluating the Replicability of Social Science Experiments in Nature and Science Between 2010 and 2015.” Nature Human Behaviour 2 (9): 637–644. https://doi.org/10.1038/s41562-018-0399-z.

- Cole, N. L., S. Reichmann, and T. Ross-Hellauer. 2023. “Toward Equitable Open Research: Stakeholder Co-Created Recommendations for Research Institutions, Funders and Researchers.” Royal Society Open Science 10 (2): 221460. https://doi.org/10.1098/rsos.221460.

- “Croatian Reproducibility Network”. 2023. Main Page. https://crorin.hr/.

- Teixeira da Silva, J. A. 2015. “Negative Results: Negative Perceptions Limit Their Potential for Increasing Reproducibility.” Journal of Negative Results in BioMedicine 14 (1): 12. https://doi.org/10.1186/s12952-015-0033-9.

- Diaba-Nuhoho, P., and M. Amponsah-Offeh. 2021. “Reproducibility and Research Integrity: The Role of Scientists and Institutions.” BMC Research Notes 14 (1): 451. https://doi.org/10.1186/s13104-021-05875-3.

- Dudda, L. A., M. Kozula, T. Ross-Hellauer, E. Kormann, R. Spijker, N. DeVito, G. Gopalakrishna, V. Van den Eynden, P. Onghena, F. Naudet, et al. 2023. “Scoping Review and Evidence Mapping of Interventions Aimed at Improving Reproducible and Replicable Science: Protocol.” Open Research Europe 3 (October): 179. https://doi.org/10.12688/openreseurope.16567.1.

- “Embassy of Good Science”. 2024. Main Page. https://embassy.science/wiki/Main_Page.

- Errington, T. M., E. Iorns, W. Gunn, F. E. Tan, J. Lomax, and B. A. Nosek. 2014. “An Open Investigation of the Reproducibility of Cancer Biology Research.” eLife 3 (December): e04333. https://doi.org/10.7554/eLife.04333.

- Evans, N., M. Van Hoof, L. Hartman, A. Marusic, B. Gordijn, K. Dierickx, L. Bouter, and G. Widdershoven. 2021. “EnTIRE: Mapping Normative Frameworks for EThics and Integrity of REsearch.” Research Ideas and Outcomes 7 (November): e76240. https://doi.org/10.3897/rio.7.e76240.

- Ferguson, J., R. Littman, G. Christensen, E. Levy Paluck, N. Swanson, Z. Wang, E. Miguel, D. Birke, and J.-H. Pezzuto. 2023. “Survey of Open Science Practices and Attitudes in the Social Sciences.” Nature Communications 14 (1): 5401. https://doi.org/10.1038/s41467-023-41111-1.

- Freedman, L. P., I. M. Cockburn, and T. S. Simcoe. 2015. “The Economics of Reproducibility in Preclinical Research.” PLOS Biology 13 (6): e1002165. https://doi.org/10.1371/journal.pbio.1002165.

- Gabelica, M., R. Bojčić, and L. Puljak. 2022. “Many Researchers Were Not Compliant with Their Published Data Sharing Statement: A Mixed-Methods Study.” Journal of Clinical Epidemiology 150 (October): 33–41. https://doi.org/10.1016/j.jclinepi.2022.05.019.

- Goodman, S. N., D. Fanelli, and J. P. A. Ioannidis. 2016. “What Does Research Reproducibility Mean?” Science Translational Medicine 8 (341). https://doi.org/10.1126/scitranslmed.aaf5027.

- Gould, E., H. Fraser, T. Parker, S. Nakagawa, S. Griffith, P. Vesk, F. Fidler, et al. 2023. “Same Data, Different Analysts: Variation in Effect Sizes Due to Analytical Decisions in Ecology and Evolutionary Biology.” Ecology and Evolutionary Biology. https://doi.org/10.32942/X2GG62.

- Gundersen, O. E. 2021. “The Fundamental Principles of Reproducibility.” Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences 379 (2197): 20200210. https://doi.org/10.1098/rsta.2020.0210.

- Hardwicke, T. E., J. D. Wallach, M. C. Kidwell, T. Bendixen, S. Crüwell, and J. P. A. Ioannidis. 2020. “An Empirical Assessment of Transparency and Reproducibility-Related Research Practices in the Social Sciences (2014–2017).” Royal Society Open Science 7 (2): 190806. https://doi.org/10.1098/rsos.190806.

- Haven, T., G. Gopalakrishna, J. Tijdink, D. Van Der Schot, and L. Bouter. 2022. “Promoting Trust in Research and Researchers: How Open Science and Research Integrity Are Intertwined.” BMC Research Notes 15 (1): 302. https://doi.org/10.1186/s13104-022-06169-y.

- Ioannidis, J. P. A. 2005. “Why Most Published Research Findings Are False.” PLOS Medicine 2 (8): e124. https://doi.org/10.1371/journal.pmed.0020124.

- Kaiser, J., and J. Brainard. 2023. “Ready, Set, Share!” Science 379 (6630): 322–325. https://doi.org/10.1126/science.adg8142.

- Kamberelis, G., and G. Dimitriadis. 2005. “Focus Groups: Strategic Articulations of Pedagogy, Politics, and Inquiry.” The SAGE Handbook of Qualitative Research 3 (January): 887–907.

- Kohrs, F. E., S. Auer, A. Bannach-Brown, S. Fiedler, T. L. Haven, V. Heise, C. Holman, et al. 2023. Eleven Strategies for Making Reproducible Research and Open Science Training the Norm at Research Institutions. Open Science Framework. https://doi.org/10.31219/osf.io/kcvra.

- Labib, K., N. Evans, R. Roje, P. Kavouras, A. Reyes Elizondo, W. Kaltenbrunner, I. Buljan, T. Ravn, G. Widdershoven, L. Bouter, et al. 2022. “Education and Training Policies for Research Integrity: Insights from a Focus Group Study.” Science & Public Policy 49 (2): 246–266. https://doi.org/10.1093/scipol/scab077.

- Lash, T. L. 2017. “The Harm Done to Reproducibility by the Culture of Null Hypothesis Significance Testing.” American Journal of Epidemiology 186 (6): 627–635. https://doi.org/10.1093/aje/kwx261.

- Macleod, M., and the University of Edinburgh Research Strategy Group. 2022. “Improving the Reproducibility and Integrity of Research: What Can Different Stakeholders Contribute?” BMC Research Notes 15 (1): 146. https://doi.org/10.1186/s13104-022-06030-2.

- Miyakawa, T. 2020. “No Raw Data, No Science: Another Possible Source of the Reproducibility Crisis.” Molecular Brain 13 (1): 24. https://doi.org/10.1186/s13041-020-0552-2.

- Munafò, M. R., C. D. Chambers, A. M. Collins, L. Fortunato, and M. R. Macleod. 2020. “Research Culture and Reproducibility.” Trends in Cognitive Sciences 24 (2): 91–93. https://doi.org/10.1016/j.tics.2019.12.002.

- Munafò, M. R., B. A. Nosek, D. V. M. Bishop, K. S. Button, C. D. Chambers, N. Percie Du Sert, U. Simonsohn, E.-J. Wagenmakers, J. J. Ware, and J. P. A. Ioannidis. 2017. “A Manifesto for Reproducible Science.” Nature Human Behaviour 1 (1): 0021. https://doi.org/10.1038/s41562-016-0021.

- National Academies of Sciences, Engineering, and Medicine. 2019a. “Improving Reproducibility and Replicability.” Reproducibility and Replicability in Science. Vol. 25303. Washington, D.C.: National Academies Press. https://doi.org/10.17226/25303.

- National Academies of Sciences, Engineering, and Medicine. 2019b. Reproducibility and Replicability in Science. Washington, D.C.: National Academies Press. https://doi.org/10.17226/25303.

- National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science. 2017a. “Context and Definitions”. In Fostering Integrity in Research. Consensus Study Report, Washington, DC: The National Academies Press (US). https://www.ncbi.nlm.nih.gov/books/NBK475954/.

- National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science. 2017b. “Identifying and Promoting Best Practices for Research Integrity”. In Fostering Integrity in Research. Consensus Study Report, Washington, DC: The National Academies Press (US). https://www.ncbi.nlm.nih.gov/books/NBK475945/.

- National Academies of Sciences, Engineering, and Medicine, Policy and Global Affairs, Committee on Science, Engineering, Medicine, and Public Policy, and Committee on Responsible Science. 2017c. “Incidence and Consequences”. In Fostering Integrity in Research. Consensus Study Report, Washington, DC: The National Academies Press (US). https://www.ncbi.nlm.nih.gov/books/NBK475945/.

- National Council for Science. 2009. “Pravilnik o Znanstvenim i Umjetničkim Područjima, Poljima i Granama - NN 118/2009”. https://narodne-novine.nn.hr/clanci/sluzbeni/2009_09_118_2929.html.

- National Institutes of Health. 2023. “Enhancing Reproducibility Through Rigor and Transparency”. https://grants.nih.gov/policy/reproducibility/index.htm.

- Nelson, N. C., K. Ichikawa, J. Chung, and M. M. Malik. 2021. “Mapping the Discursive Dimensions of the Reproducibility Crisis: A Mixed Methods Analysis.” PLOS ONE 16 (7): e0254090. https://doi.org/10.1371/journal.pone.0254090.

- Nelson, G. M., and D. L. Eggett. 2017. “Citations, Mandates, and Money: Author Motivations to Publish in Chemistry Hybrid Open Access Journals.” Journal of the Association for Information Science and Technology 68 (10): 2501–2510. https://doi.org/10.1002/asi.23897.

- “Netherlands Research Integrity Network”. 2024. Main Page. https://nrin.nl/.

- “Null Hypothesis Initiative”. 2024. Main Page. https://nullhypothesis.com/about.php.

- “OpenAI”. 2024. How to Find a Trustworthy Repository for Your Data. https://chat.openai.com/g/g-HMNcP6w7d-data-analyst.

- “OpenAIRE”. 2024. How to Find a Trustworthy Repository for Your Data. https://www.openaire.eu/find-trustworthy-data-repository.

- Open Science Collaboration. 2015. “Estimating the Reproducibility of Psychological Science.” Science 349 (6251): aac4716. https://doi.org/10.1126/science.aac4716.

- “Open Science Netherlands”. 2024. Landing Page. https://www.openscience.nl/en.

- Parsons, S., F. Azevedo, M. M. Elsherif, S. Guay, O. N. Shahim, G. H. Govaart, E. Norris, A. O’Mahony, A. J. Parker, A. Todorovic, et al. 2022. “A Community-Sourced Glossary of Open Scholarship Terms.” Nature Human Behaviour 6 (3): 312–318. https://doi.org/10.1038/s41562-021-01269-4.

- Pawel, S., R. Heyard, C. Micheloud, and L. Held. 2023. Replication of “Null Results” – Absence of Evidence or Evidence of Absence? Preprint. eLife. https://doi.org/10.7554/eLife.92311.1.

- Plesser, H. E. 2018. “Reproducibility Vs. Replicability: A Brief History of a Confused Terminology.” Frontiers in Neuroinformatics 11 (January): 76. https://doi.org/10.3389/fninf.2017.00076.