ABSTRACT

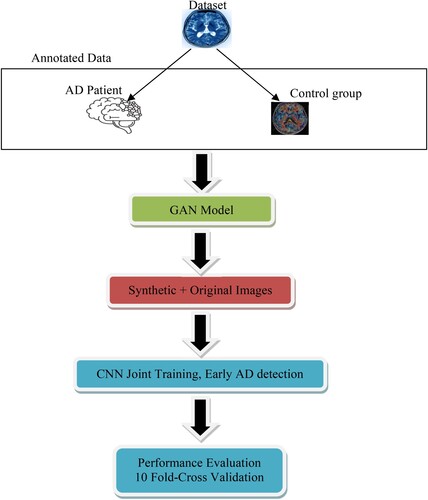

Alzheimer's disease (AD) is a neurological condition that impairs the patient's cognitive function. This article presents a deep learning architecture that uses image information from MRI to categorise MRI scans and detect AD early. The annotated data are divided into patients with AD and a control group. After a GAN generates synthetic images to complement the real ones, the dataset is passed through a CNN to extract spatial features from the scans. We used 30 slices from the upper region of the brain, above the eyes, for learning. To train the CNNs and evaluate the results, the data are divided using 10-fold cross-validation; the accuracy estimates are 99.67% and 98.76%, respectively.

1. Introduction

Alzheimer's disease is a neurological condition that affects cognition, functioning, and behaviour. It is gradual and irreversible (Basheera & Ram, 2021; Tufail et al., 2020). The progression of Alzheimer's disease includes preclinical illness, mild cognitive and/or behavioural impairment, and finally dementia (Mahendran et al., 2021; Zhang et al., 2014). Doctors are now being asked to diagnose Alzheimer's earlier, before the onset of dementia. To examine, diagnose, and ultimately treat people with the condition, doctors need to recognise its symptoms and pathophysiology swiftly and precisely. Early diagnosis also gives patients and their caregivers a chance to change their daily routines positively, which may help them live comfortably for years to come (Kadhim et al., 2021; Ramzan et al., 2019). Several factors make early diagnosis difficult, including the following.

Limited clinician time: Clinicians have many patients to see, and healthcare workers frequently have limited time. Because medicine is so demanding, doctors may have less time than they would like to spend with each patient, which can affect how thoroughly diagnoses are made.

The difficulty in accurately diagnosing Alzheimer's disease: Alzheimer's disease is a complex neurological disorder with symptoms that may overlap with those of other conditions. Diagnosing Alzheimer's disease accurately requires a comprehensive evaluation of a patient's cognitive functions, which can be intricate and time-consuming. The variability in symptom presentation and progression adds to the diagnostic challenge.

The tendency to dismiss symptoms as an inevitable part of ageing: There is a prevalent perception, both among healthcare providers and in society, that some cognitive decline is a normal part of ageing. As a result, early symptoms of Alzheimer's disease may be overlooked or attributed to typical age-related changes rather than being recognised as potential indicators of a more serious condition.

Early diagnosis gives everyone involved an opportunity to adjust while the patient can still participate actively. This includes access to clinical experts for advice, financial help, and non-pharmacological and therapeutic treatment approaches. Even though no disease-modifying agents capable of reversing the initial clinical symptoms of the illness have yet reached the market, individuals with mild to moderate Alzheimer's dementia who have access to the best treatments and resources (Sreelakshmi et al., 2022; Vasuki & Malar, 2021) may continue to enjoy a high quality of life for an extended period. Many machine learning algorithms have been developed for neuroimaging markers such as MRI and PET (Nagaraj & Duong, 2020; Ocasio & Duong, 2021), and they may help diagnose AD based on the high-dimensional information gathered from these scans. These machine learning approaches must automatically distinguish AD patients from normal controls and estimate the risk that those with mild cognitive impairment (MCI) will develop AD. Cases with MCI may therefore be divided into two groups: those who do not convert (ncMCI) and those who do (cMCI). As a consequence, early Alzheimer's disease diagnosis may be seen as a multiclass classification problem. Some earlier investigations condensed the problem into a binary classification task, for example by integrating features from several biological modalities using a multi-kernel SVM (Support Vector Machine) classifier. Yet classifying subjects into more than two classes in a single setup is challenging for an SVM. Some techniques incorporated prior knowledge into the model. As an illustration, an improved graph cut technique was proposed whose parameters are adjusted according to the prevalence of specific classes in the training data (Ortiz et al., 2016; Tanveer et al., 2020; Wang et al., 2016; Zhang et al., 2021). However, such reliance on prior knowledge can be difficult to design and is sensitive to changes in the dataset (Babu, 2021; Nagarathna & Kusuma, 2022; Seshadri et al., 2021; Sethi et al., 2021; Yagis et al., 2021). The different stages of Alzheimer’s disease and the impairment stages are presented in Tables 1 and 2, respectively.

Table 1. Different Stages of Alzheimer’s disease.

Table 2. Impairment Stages.

Patients may take preventative action before irreversible brain damage develops if they are informed of their AD diagnosis early on and given an accurate assessment of the severity of the disease and the probability of its progression. Although many studies have employed machine learning for computer-aided diagnosis (CAD) of AD, most have shown limited diagnostic accuracy, mainly because of the intrinsic limits of the selected learning models. To address this issue and advance the detection of AD and its prodromal stage, mild cognitive impairment (MCI), we developed a deep learning architecture using stacked auto-encoders with a softmax output layer. Compared with previous methods, our approach can analyse many classes simultaneously, requires fewer labelled training samples, and needs little prior domain knowledge. In our studies, we obtained a considerable performance gain in classifying all diagnosis categories. In recent decades, deep learning (DL) has become established as a revolutionary approach in comparison with other machine learning (ML) methods. Because it can extract information directly from images via neural networks, deep learning no longer requires experts to extract features by hand, and feature extraction no longer has to be a manual process separate from the classifier. Generative adversarial networks (GANs) have recently been combined with convolutional neural networks in image classification tasks, where the synthetic images they generate can improve accuracy and precision.

Because the disease mostly affects older people, whose numbers are growing, CAD systems based on MRI have recently become popular for detecting AD. Structural MRI (sMRI) is a technique widely used to detect brain tumours, lesions, and atrophy at an early stage, and it also helps in the early detection of AD. CNNs have gained popularity in medical applications and devices for improving detection, recognition, and automation wherever scans and images are involved. Researchers use CNN models to extract features from image scans, where the input is a 2D or 3D image. This research detects different stages of AD dementia, from mild to severe, using a CNN on structural scans.

To analyse Alzheimer's disease automatically, this article tests how well features synthesised from MRI by a GAN perform. Deep learning (DL) using convolutional neural network (CNN) techniques performs well in many image classification tasks. To identify Alzheimer's disease from MRI images, GAN-based models were constructed as a DL approach. The effectiveness of the model was analysed and compared across fully connected layers. The study aims to answer the following research questions. (1) Is the pre-trained DL GAN with Pyramid Net employed in this work useful for classifying Alzheimer's disease in MRI brain images? (2) When combined with a pre-trained GAN network, which classifier provides the best classification performance using a CNN? The contributions of this article are as follows.

We propose a supervised CNN model to detect early Alzheimer's disease, trained on an augmented dataset produced by a GAN to raise accuracy and improve the model's generalisation. The original scan dataset was inadequate and lacked resolution, so it needed enhancement; the GAN provided the CNN with a better dataset.

We provide an innovative methodology that tackles the problem of dataset shortage. The need for more data is a well-known problem in machine learning. This method can be used wherever image data are unbalanced or insufficient.

We performed extensive experiments and compared our work with other recent studies. Our model performs better than recent work in detecting AD. According to the results produced by our model, it contributes to both industrial and clinical practice.

The model is regular and works on a small amount of data. It is also integrated with ADNI so that it can draw on data already in the database. The model learns and adapts according to the features and new findings.

2. Literature review

Memory loss with cognitive decline is a hallmark of Alzheimer's disease (AD), and several other symptoms may accompany it. Although AD has a severe effect on patients’ lives and has no known cure, early identification may be useful for starting a suitable course of therapy to avoid further brain injury. For decades, machine learning techniques have been used to classify AD, with conclusions relying on hand-crafted features and multi-stage classifier architectures. Since the advent of deep learning, end-to-end neural network workflows have been used for pattern classification. Ji et al. focus on using MRI and convolutional neural networks (ConvNets) for diagnosing AD in its earliest stages. The inputs for classification were MRI image segments of the white and grey matter. Following the convolutional operations, ensemble learning techniques were used to combine the results of the deep learning classifiers and improve classification. Three base ConvNets were constructed, implemented, and compared. For early AD diagnosis, the technique was assessed using data from the Alzheimer's Disease Neuroimaging Initiative (ADNI). The classifications reached an accuracy of up to 97.65% for AD versus mild cognitive impairment and 88.37% for mild cognitive impairment versus normal control (Ji et al., 2019). Early detection of Alzheimer's disease is crucial for keeping the patient's condition from getting worse. Using digital subtraction angiography, the authors of another study developed an approach that provides a comprehensive representation of a possible new biomarker for blood flow in the brain's cortex. The dataset is derived from the medical records of the KAUH hospital and contains digital subtraction angiography scans of Alzheimer's patients and healthy controls. Pre-processing methods were used to prepare the dataset for feature extraction and classification because each scan had numerous images for both the right and left internal carotid arteries (ICAs). After conversion from the spatial domain to the DCT domain, the many individual scan frames were averaged to eliminate remaining noise. The averaged image was then transformed back to the spatial domain, and the two views were merged using a Meijering filter. The method extracts features using a collection of pre-trained models, including InceptionV3 and DenseNet201.

To retain the components that together explain a variance of 0.99, the authors applied principal component analysis to the combined feature values from the two pre-trained models and passed the result to a machine learning classifier. Although the experimental results may not be directly comparable to other state-of-the-art techniques in the literature because of differences in dataset sampling and in the brain blood flow biomarker used, they are more efficient with regard to current medical recommendations and reach an accuracy of 99.14% (Gharaibeh et al., 2022). Alzheimer's disease is a degenerative neurological ailment, and pathophysiology-based biomarkers may provide accurate measures for detection and progression. Structural scans from MRI and metabolic images from FDG-PET allow in-vivo examination of the living brain's anatomy and function (glucose metabolism). Integrating several image modalities with supplementary information is believed to improve the early diagnosis of AD. Lu et al. use a multimodal and multiscale deep neural network to present a deep-learning-based approach to identifying people with AD. Their method outperforms prior studies (Lu et al., 2018), with a sensitivity of 94.23% in classifying individuals with a clinical diagnosis of probable AD, a specificity of 86.3% in classifying non-demented controls, and an accuracy of 82.4% in identifying people with MCI who will convert to AD three years before the conversion (86.4% combined accuracy for conversion within 1–3 years).
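The PCA step described above, keeping just enough components to reach an explained variance of 0.99, can be sketched as follows. This is a minimal NumPy illustration, not the cited authors' code: the function name `pca_keep_variance`, the random stand-in features, and their shapes are all assumptions made for the example.

```python
import numpy as np


def pca_keep_variance(features, target=0.99):
    """Project onto the fewest principal components whose cumulative
    explained-variance ratio reaches `target`."""
    centered = features - features.mean(axis=0)
    # SVD of the centred data: the rows of vt are the principal axes.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    explained = singular_values ** 2
    ratio = np.cumsum(explained) / explained.sum()
    # Index of the first component at which the cumulative ratio reaches target.
    k = min(int(np.searchsorted(ratio, target)) + 1, len(ratio))
    return centered @ vt[:k].T, ratio[:k]


rng = np.random.default_rng(0)
# Hypothetical stand-ins for feature vectors from two pre-trained backbones.
feats_a = rng.normal(size=(50, 8))
feats_b = feats_a @ rng.normal(size=(8, 12))  # deliberately redundant with feats_a
fused = np.concatenate([feats_a, feats_b], axis=1)
reduced, cum_ratio = pca_keep_variance(fused, target=0.99)
```

Because the second feature block here is a linear function of the first, the fused matrix has at most eight informative directions, so the reduced representation is much narrower than the 20 fused columns.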

Using R-fMRI data, Ju et al. build a brain network by computing the functional links between brain areas. To differentiate between normal ageing and the onset of Alzheimer's disease, defined as mild cognitive impairment, a custom-built autoencoder network is created. The method offers a robust classifier for AD diagnosis by efficiently revealing discriminative brain network features. It is more stable and reliable than conventional approaches, as shown by a 31.21% improvement in accuracy and a 51.23% reduction in standard deviation when the deep learning method is applied to R-fMRI time series data. The study investigates the potential of deep learning for early AD detection and prevention by classifying high-dimensional multimedia data from medical care (Ju et al., 2019). The deep learning approach provides a single structure for simultaneous representation learning and classification, eliminating the need for laborious hand-crafted features and feature engineering. Zheng et al. offer an EnAlexNets-based method for early diagnosis of Alzheimer's disease from positron emission tomography. The AAL cortical parcellation atlas is used to locate 62 anatomical volumes inside the brain; input images are extracted from each volume to fine-tune a pre-trained AlexNet; and an ensemble of those trained AlexNets is used as the classifier. The approach was compared with seven others on an ADNI dataset. The findings show that the EnAlexNets algorithm separates AD cases from normal controls better than those seven techniques (Zheng et al., 2018). Positron emission tomography (PET) is a neuroimaging technique used to create 3D images of the brain. With PET images available, researchers have attempted to use computer-aided diagnosis to differentiate between AD and normal control. Most legacy approaches applied image processing and feature extraction and then built a model or classifier to label brain images. As a result, the extracted features strongly affected the recognition rate of those approaches. To solve this problem, Hamdi et al. develop an improved CAD system that uses a convolutional neural network (CNN) to distinguish between normal and Alzheimer's disease-affected people. The ADNI database's 18FDG-PET images are used to evaluate the approach, with the sample size equalling 855 persons (220 AD patients and 220 controls). The results showed that the CAD system achieves 96.1% accuracy, a sensitivity of 96.1%, and a loss of 94.1% compared to the techniques presently in use and documented in the literature (Hamdi et al., 2022).
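The studies reviewed here report accuracy, sensitivity, and specificity. As a reminder of how those quantities relate to a binary confusion matrix (AD as the positive class), here is a minimal sketch. The function name and the toy labels are illustrative assumptions, not data from any cited study, and the function assumes both classes are present.

```python
import numpy as np


def binary_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity for 0/1 labels (1 = AD)."""
    y_true = np.asarray(y_true).astype(bool)
    y_pred = np.asarray(y_pred).astype(bool)
    tp = int(np.sum(y_true & y_pred))    # AD correctly flagged
    tn = int(np.sum(~y_true & ~y_pred))  # controls correctly cleared
    fp = int(np.sum(~y_true & y_pred))   # controls wrongly flagged
    fn = int(np.sum(y_true & ~y_pred))   # AD cases missed
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


# Toy example: four AD subjects followed by four controls.
labels = [1, 1, 1, 1, 0, 0, 0, 0]
preds = [1, 1, 1, 0, 0, 0, 0, 1]
metrics = binary_metrics(labels, preds)
```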

Many different types of segmentation methods have been developed for AD diagnosis. The ability of deep learning approaches to deliver relevant findings across massive amounts of data has raised interest in applying them to brain structure segmentation and AD classification. Because of this, deep learning techniques are now favoured over conventional machine learning methods. Yamanakkanavar et al. briefly summarise the most recent deep-learning-based techniques for quantitative brain MRI analysis to detect AD. They review findings on open-source datasets and show how AD classification may be enhanced by brain MRI segmentation and convolutional neural network architectures. They also analyse how AD is diagnosed and how magnetic resonance segmentation is employed in that diagnosis. Finally, they explore potential future research directions for a computer-aided AD diagnosis system and offer insight into present difficulties (Yamanakkanavar et al., 2020). Using multimodal and multiscale deep neural networks, another study presents a deep-learning-based approach to identify people with AD. The approach outperforms previous research in several ways: it has a sensitivity of 94.23% in classifying individuals with a clinical diagnosis of probable AD, a specificity of 86.3% in classifying non-demented controls, and an accuracy of 82.4% in identifying patients with MCI who will convert to AD three years prior to the conversion (86.4% combined accuracy for conversion within 1–3 years). Patches within each ROI were sized to keep the voxel density uniform throughout the brain, giving uniformly sized patches across all ROIs. In that study, 500, 1000, and 2000 voxels were predetermined as the patch sizes. Across all grey matter ROIs in the brain, as parcellated by FreeSurfer, this gave totals of 1488, 705, and 342 patches for the respective sizes. Given the few available data samples, the patch size was chosen to preserve sufficient detail while avoiding an excessively large feature dimension. High-dimensional non-rigid registration (LDDMM) was used to align the ROIs of the standard template MRI with the corresponding ROIs in each target image. The template was first divided into patches, and the registration mappings were then used to carry those patches over to each target, so that target images were segmented into a set number of patches per FreeSurfer ROI in their own MRI space. Because non-rigid registration records local expansion and contraction, the size of a given patch differs between images after transformation, and this size is used to describe the regional structure of a particular brain scan. After skull removal from the T1 MRI scan, the FDG-PET image of each patient was co-registered with it using a rigid transformation based on normalised mutual information. The cost function employed was normalised correlation, and there were twelve degrees of freedom in the analysis. Because the brainstem seemed unlikely to be affected by AD, it was chosen as the reference region for normalising voxel intensity in the FDG-PET metabolism images. Each patch's size was used to represent brain anatomy, while each patch's average intensity was entered into a feature vector representing metabolic activity (Zhang et al., 2022). MRI images of individuals with Alzheimer's disease are extremely similar to images of healthy elderly individuals, which complicates detection. New deep learning methods have shown human-level performance in several domains, including medical image processing. Islam and Zhang suggest analysing MRI scans of the brain using a deep convolutional neural network to look for signs of Alzheimer's disease. Their model beats comparable baselines in a number of trials on the Open Access Series of Imaging Studies (OASIS) database (Islam & Zhang, 2018).

Muhammed Raees and Thomas provide a user-friendly and fast automated deep-learning-based approach to detecting AD using a large MRI dataset of healthy and sick people. The 111 people included in the study were classified as having MCI, AD, or normal cognitive function. Both SVM and multiple DNN models were tested as classifiers. Accuracy of 80–90% was achieved using deep learning techniques for AD prediction. Predicting diseases such as Alzheimer's, dementia, and Parkinson's with highly precise automated machine learning approaches is crucial for better medical, societal, and economic results (Muhammed Raees & Thomas, 2021). Another paper builds on prior work by describing a software pipeline for the classification and early identification of AD, using the same deep learning algorithms that have successfully extracted features and recognised patterns for many diseases affecting the brain and other organs. The method uses pre- and post-processing to normalise the MRI data and enable pattern recognition, together with two kinds of three-dimensional convolutional neural network (3D CNN) to simplify the analysis of brain MRI data and automate feature extraction and classification. Based on experimental results on publicly available datasets from the Alzheimer's Disease Neuroimaging Initiative (ADNI), the approach achieves classification accuracies of 97.5%, 82.5%, and 83.75% for AD versus cognitively normal (CN), CN versus mild cognitive impairment (MCI), and MCI versus AD, respectively. In addition, it has a multiclass classification accuracy of 85%. Structural MRI, which is often used to diagnose AD, may detect brain anomalies in people with the disease. The extraction of task-oriented features from sMRI has been greatly aided by the rapid growth of deep learning methodology, which has led to the adoption of a wide variety of deep learning algorithms. Wang and Cao first thoroughly review applications of deep learning models to sMRI-based AD detection. They classify them into four major groups according to the type of input and discuss each group's benefits and drawbacks. They then identify two difficulties for ongoing study: unique presentation, and the trouble of effectively modelling the interaction among spatially distant locations. Finally, they propose two prospective lines of investigation for improving deep-learning-based AD detection models (Wang & Cao, 2021).

3. System overview

Using DL and CNN, this paper aims to improve the classification of MRI data for the early identification of AD. This work therefore proposes constructing and testing a CNN-based DL method that uses features extracted from MRI, together with a Dual GAN, for the automated classification of AD. The accompanying figure depicts the high-level layout of the proposed method.

The methodology uses a GAN for enhancement so that the convolutional neural network can learn from both the synthetic and the original data. We used a double layer of validation. The model can be used in the medical field, particularly in imaging, for image enhancement, data augmentation, feature extraction, and knowledge of AD features. The model can be built into the computer system of an MRI device, specifically to detect AD. It can also label scans in ways that are of interest to health care professionals. The GAN can also translate an image from one modality or channel to another, giving a better understanding of the scans. This gives the health professional a peek at a specific scan contrast. Synthetic imagery from the GAN also fine-tunes the image for better appearance and accuracy. The CNN model is also deployed to detect artifacts that arise during scanning and degrade the scans. Such artifacts make the scans difficult for the professional to interpret. Sometimes scans are wasted because of artifacts or positioning, costing the patient money, energy, and resources. The model can also be used in transfer learning to contribute to further research. All these practices contribute to the medical field. CNNs are good at quantitative analysis, such as measuring the shape, structure, and volume seen in a scan. This helps the health professional to diagnose diseases and improves the decision process. The first step of the model is to annotate the data as AD patients or control group. Since a CNN requires a large volume of data to perform well, the next step is to pass the labelled data to the GAN to generate a synthetic augmented dataset for both classes. In this second step, we created an expanded synthetic dataset close to real-world data, containing 30,000 scans split equally: 15,000 for the control group and 15,000 for the AD patients.

The third step of this project is to construct and verify a Convolutional Neural Network model for feature extraction and classification. Model performance in the experiments is assessed by examining the features recovered by the Generative Adversarial Network from the fully connected layer of the verified model. The technique used to construct a method for diagnosing AD comprises the following steps. First, MRI data are acquired. Second, the images are pre-processed, which includes reducing the resolution of each MRI image to a level that the CNN model can handle. Third, features are extracted from the MRI images using a Dual Generative Adversarial Network and a Convolutional Neural Network. Finally, we compare our findings with those of other researchers and examine the efficiency and efficacy of each method using the assessment criteria.
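The abstract states that the data are divided using 10-fold cross-validation. A minimal sketch of a stratified 10-fold split is given below, assuming binary labels (AD versus control) and one label per sample. The function name and the toy label vector are hypothetical, and the source does not say whether splitting was done per slice or per subject.

```python
import numpy as np


def stratified_kfold_indices(labels, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs with each class spread over k folds."""
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    folds = [[] for _ in range(k)]
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        # Split the shuffled indices of this class into k near-equal chunks.
        for fold_id, chunk in enumerate(np.array_split(idx, k)):
            folds[fold_id].extend(chunk.tolist())
    for i in range(k):
        test_idx = np.array(sorted(folds[i]))
        train_idx = np.array(
            sorted(j for f, fold in enumerate(folds) if f != i for j in fold)
        )
        yield train_idx, test_idx


# Toy labels: 50 controls (0) and 50 AD subjects (1).
labels = np.array([0] * 50 + [1] * 50)
splits = list(stratified_kfold_indices(labels, k=10))
```

When several slices come from the same subject, splitting by subject rather than by slice avoids the same brain appearing in both the training and the test fold.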

3.1. Preprocessing

Statistical parametric mapping (SPM), a program from University College London built on the MATLAB platform, was used to correct MRI images from the ADNI datasets and remove the effects of head movement caused by lengthy image acquisitions. After being acquired from the website, the images were reduced in size to 192 × 192 × 160 (Liu et al., 2022; Luo et al., 2023). The downsized MRI images were then segmented into three tissue classes using SPM: white matter (WM), grey matter (GM), and cerebrospinal fluid (CSF). Because GM and WM patterns in the brain are so distinct and so useful in diagnosing AD (Long et al., 2023; Xiao et al., 2023), they were chosen as the training dataset. After segmentation, there were 192 TIF images of GM and WM. The GM and WM data were selected from 20 consecutive slices of every MRI image with discernible brain structure. The inputs to our neural networks were versions of the original GM and WM slices resized to 224 × 224 (Fan et al., 2023; Liu et al., 2021; Ting et al., 2023).
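The slice selection and resizing described above can be sketched as follows. This is only an illustration under stated assumptions: a random array stands in for one SPM-segmented GM map, slices are taken along the last axis, the starting index is arbitrary, and nearest-neighbour interpolation is used because the source does not name the interpolation method. The helper names are hypothetical.

```python
import numpy as np


def select_slices(volume, start, count=20):
    """Return `count` consecutive slices along the last axis, shape (count, H, W)."""
    if start < 0 or start + count > volume.shape[2]:
        raise ValueError("slice range falls outside the volume")
    return np.moveaxis(volume[:, :, start:start + count], 2, 0)


def resize_nearest(image, size=224):
    """Nearest-neighbour resize of a 2D image to (size, size)."""
    rows = (np.arange(size) * image.shape[0] / size).astype(int)
    cols = (np.arange(size) * image.shape[1] / size).astype(int)
    return image[np.ix_(rows, cols)]


rng = np.random.default_rng(0)
# Stand-in for one segmented tissue map of size 192 x 192 x 160.
volume = rng.random((192, 192, 160), dtype=np.float32)
slices = select_slices(volume, start=70, count=20)  # start index chosen arbitrarily
inputs = np.stack([resize_nearest(s) for s in slices])
```

The resulting `inputs` array has one 224 × 224 image per selected slice, which matches the network input size stated in the text.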

3.2. Feature extraction

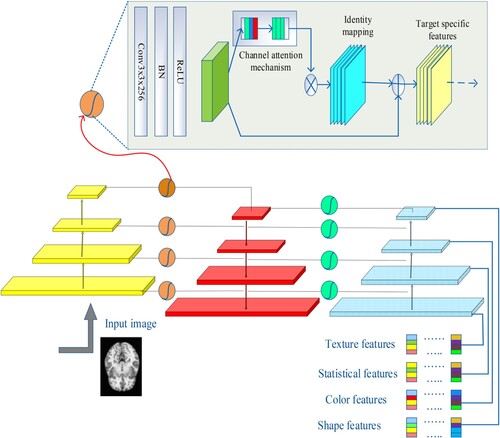

Pyramid attention networks (PAN) learn image properties rapidly without sacrificing efficiency. GaFP-Net learns spectral, statistical, transformation, and constellation properties. Spectral features resemble those of the time and frequency domain. Modern images are more nuanced than ever because of channel surrounds and image fluctuations. Statistical features capture the higher-order aspects of such images.

Because Pyramid Attention Networks are so good at learning complex aspects inside data while still being computationally efficient, they are regarded as revolutionary. The “pyramid” topology improves the network's comprehension of both coarse-grained and fine-grained characteristics by enabling information to be captured at many scales. Without compromising computational performance, PAN effectively learns characteristics across many abstraction levels, allowing it to recognise intricate patterns and correlations in the incoming data. Because of this, PAN is a good choice for activities that call for a thorough comprehension of hierarchical aspects.

This paper introduces GaFPNet, an adaptable feature extraction network for images of varying frequency and amplitude. GaFPNet uses four sub-components to extract features: gating, global and channel-level attention, identity mapping, and feature reuse. The figure shows how GaFPNet operates.

The components that make up GaFPNet are as follows:

(i) Gate: The input feature maps are denoted $fm_1, fm_2, fm_3, \ldots, fm_n$. A function $H_f$ converts the supplied feature maps into the output feature maps $fm_{ot}$. The gate computes its output feature maps as follows:

(1)

(ii) Global and Channel Level Attention: The network can generalise and scale well across many different kinds of images if its channel-level attention accurately reflects the interdependence of its global-level features. A squeeze-and-excitation block is used for channel-level attention. It consists of two stages: the squeeze phase $H_{SQ}$, which embeds each whole feature map, and the excitation phase $H_{EX}$, which re-weights the features in the appropriate channels. Applying $H_{SQ}$ and $H_{EX}$ to $fm$ gives:

(2)

(iii) Identity Mapping: The final result of this phase is obtained by an element-wise addition, with the following formula:

(3)

(iv) Feature Reuse: This process in GaFPNet is crucial both before and after the gating procedure is finished. In feature reuse, a compressed feature connection is assessed for precise feature extraction.
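The channel-level attention and identity mapping steps in the list above can be sketched as follows. This uses the standard squeeze-and-excitation formulation (global average pooling, a two-layer bottleneck with ReLU and sigmoid, then channel-wise rescaling) and a plain residual addition; it is an assumed illustration and not necessarily the exact form of Equations (2) and (3). The weight matrices, the reduction ratio, and the function names are hypothetical.

```python
import numpy as np


def squeeze(fm):
    """H_SQ (assumed form): global average pooling, (C, H, W) -> (C,)."""
    return fm.mean(axis=(1, 2))


def excite(z, w1, w2):
    """H_EX (assumed form): bottleneck with ReLU, then sigmoid channel weights."""
    hidden = np.maximum(w1 @ z, 0.0)
    return 1.0 / (1.0 + np.exp(-(w2 @ hidden)))


def channel_attention_with_identity(fm, w1, w2):
    """Rescale each channel by its attention weight, then add the input back."""
    scale = excite(squeeze(fm), w1, w2)
    attended = fm * scale[:, None, None]
    # Identity mapping: element-wise addition of the input feature maps.
    return fm + attended


rng = np.random.default_rng(0)
channels, reduction = 8, 4  # illustrative sizes only
fm = rng.normal(size=(channels, 16, 16))
w1 = rng.normal(size=(channels // reduction, channels))
w2 = rng.normal(size=(channels, channels // reduction))
out = channel_attention_with_identity(fm, w1, w2)
```

With all-zero weights the sigmoid returns 0.5 for every channel, so the output reduces to 1.5 times the input, which is a convenient sanity check for the wiring.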

Spectral, statistical, transformational, and constellation features are among the many attributes that GaFP-Net is intended to teach the model. This all-encompassing strategy makes the network adaptable across many jobs and data kinds by enabling it to gather a wide variety of information. GaFP-Net uses spectral features to describe properties that resemble those in the time and frequency domain. The network is good at picking up subtle spectral information, which is important for deciphering patterns in data that are connected to frequency and timing. GaFP-Net addresses higher-order features of an image by emphasising statistical qualities. This entails identifying intricate statistical correlations in the data so that the network may identify patterns that would not be seen with more basic analysis. GaFP-Net investigates transformational and constellation characteristics in addition to fundamental features. Constellation properties deal with identifying patterns created by the arrangement of features, whereas transformation properties deal with how data evolves under various transformations. GaFP-Net is especially pertinent when considering today's more complex than ever graphics. It ensures that the network can manage the complexities of modern visual data by taking into account picture fluctuations and channel surrounds, which are contextual details around each data point. To sum up, PAN and GaFP-Net are examples of sophisticated neural network designs that are excellent at recognising a wide range of attributes and hierarchical aspects in data, which makes them effective tools for jobs requiring intricate linkages and patterns.

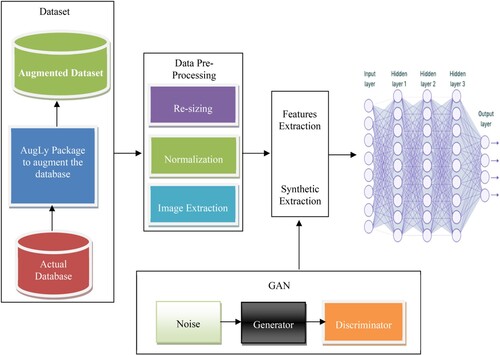

3.3. Dual GAN for disease classification

Dual model training combines two CNN and GAN models. A GAN consists of a generator and a discriminator. We collect texture features from the first GAN and extract soft features from the second. Generative adversarial networks (GANs) have been the subject of extensive research because they can produce additional data. A large body of research covers the use of GANs, including work on image synthesis, semantic image editing, style transfer, image super-resolution, and classification. These publications also highlight the difficulties that remain in the design and use of GANs. GANs are an effective technique for enlarging a dataset with high-quality images, which makes them very useful for image processing. For example, the study “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks” presents deep convolutional generative adversarial networks (DCGAN), which integrate CNNs with GANs for unsupervised learning. Other work has proposed a GAN-based technique for enlarging a small sample library, a GAN-based method for recognising facial expressions, an image recognition technique based on conditional deep convolutional generative adversarial networks, and a semi-supervised generative adversarial network that improves the accuracy of image classification.

A method for image super-resolution based on generative adversarial networks (GAN) has been developed; it raises the quality of a low-resolution image to produce a super-resolved (SR) image 16 times larger. GAN can therefore be employed to provide a practically unlimited supply of relevant data and improve model performance. With GAN, however, producing large-scale, crisp images from a limited set of photos is challenging. Because the collection of pictures of diseased plant leaves is small and has an imbalanced distribution of data, it is not simple for a GAN to produce images. For instance, although DCGAN can produce high-quality small images, issues such as lack of clarity and image blur appear when large-scale images are created by deepening the model. In addition, the loss function does not clearly indicate the progress of training, and training is not stable. To address these issues, we suggest using DoubleGAN to generate additional images for the unbalanced dataset from the images provided by ADNI.

First, enough healthy leaves were input into WGAN to create a pre-trained model, and a limited number of diseased samples were then fed into this pre-trained model. The images were 64 × 64 pixels. The Wasserstein distance was used to improve the original GAN's loss function, keep the model training from collapsing, make the training more stable, and provide the model with a distinct training index. Second, the residual network was expanded using SRGAN (Sreelakshmi et al., Citation2022), which deepened the network and avoided overfitting. The loss function improved the generator's content loss and eliminated a mismatch between the super-resolution and original images. SRGAN enabled the creation of clear 256 × 256 pixel images from fewer photographs. To assess the quality of the generated images, we mixed the new images with the original ones and ran classification trials. AD Classification using Dual Training CNN and GAN is shown in Figure .
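To make the role of the Wasserstein distance concrete, the following is a minimal sketch of the critic and generator losses used in a WGAN-style setup. It is not the authors' implementation: the function names and the toy score arrays are assumptions chosen for illustration, and the critic itself (the network that produces the scores) is left out.

```python
import numpy as np

def critic_loss(real_scores, fake_scores):
    """Loss minimised by the critic: pushes real scores up and fake scores down."""
    return float(np.mean(fake_scores) - np.mean(real_scores))

def generator_loss(fake_scores):
    """Loss minimised by the generator: pushes the critic's scores on fakes up."""
    return float(-np.mean(fake_scores))

def wasserstein_estimate(real_scores, fake_scores):
    """Score gap that serves as a training index (larger gap = easier to tell apart)."""
    return float(np.mean(real_scores) - np.mean(fake_scores))

# Hypothetical critic outputs for a batch of real and generated images.
real = np.array([1.0, 2.0, 3.0])
fake = np.array([0.0, 1.0, -1.0])

print("critic loss:", critic_loss(real, fake))
print("generator loss:", generator_loss(fake))
print("distance estimate:", wasserstein_estimate(real, fake))
```

Because the distance estimate shrinks as generated images become harder to distinguish from real ones, it can be tracked during training as a progress indicator, which an ordinary GAN loss does not offer as directly.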

4. Experimental results

This section outlines the experimental setup of our study before reporting its findings. After briefly describing the hardware and software setups used in the experiment, we discuss the outcomes of the model training and validation procedures. We then discuss the results obtained by combining the Pyramid Net algorithm for feature extraction with the dual GAN for classification. Finally, we compare the outcomes of the proposed technique with those obtained using other methods.

The ADNI databank was the source of the neuroimaging information used in our analyses. We selected MRIs from 311 people in the ADNI baseline group: 65 with Alzheimer's disease (AD), 67 with converting mild cognitive impairment (cMCI), 102 with non-converting mild cognitive impairment (ncMCI), and 77 normal controls (NC). The MRI data are divided into 83 functional regions and nonlinearly registered to the ICBM 152 template. We extracted the CMRGlc patterns from the PET scans and the grey matter volumes from the MRI. Elastic Net was used for feature selection before the classification task. All features are standardised to zero mean and scaled to lie between 0 and 1 to support sigmoidal decoding.
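The feature scaling step can be sketched as follows. This is one plausible reading of the description, not the authors' code: it assumes each feature column is first standardised (zero mean, unit variance) and then min-max scaled into [0, 1]. The function name and the toy matrix are hypothetical.

```python
import numpy as np

def standardise_then_scale(features):
    """Standardise each column to zero mean, then rescale it into [0, 1]."""
    x = np.asarray(features, dtype=float)
    std = x.std(axis=0)
    std[std == 0] = 1.0                  # leave constant columns unchanged
    z = (x - x.mean(axis=0)) / std       # zero mean, unit variance per column
    z_min = z.min(axis=0)
    z_range = z.max(axis=0) - z_min
    z_range[z_range == 0] = 1.0          # avoid division by zero
    return (z - z_min) / z_range         # values now lie in [0, 1]

# Toy matrix: three subjects (rows) by two regional features (columns).
toy = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0]]
scaled = standardise_then_scale(toy)
print(scaled)
```

Keeping the values in [0, 1] matches the output range of a sigmoid, which is the stated reason for the scaling.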

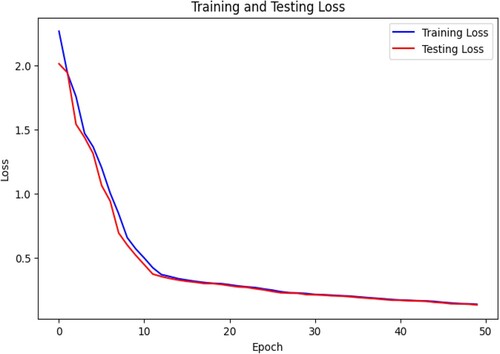

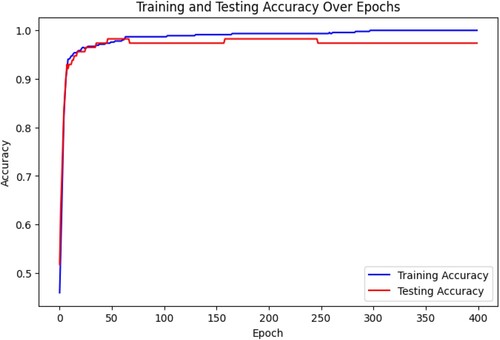

The loss function measures the difference between the predicted values and the true values. The loss curve suggests that the loss decreases towards a minimum as the number of epochs increases. This indicates that the model is suited to combined training and that the augmented data generated by the GAN for the CNN is suitable for classification. The model also appears to generalise well to new variations in scans.

The accuracy of this model indicates how well it separates AD patients from normal subjects. The accuracy curve shows that the model classifies 96% of the data correctly, which is a high level of performance. However, the dataset is complex, combining real and augmented images, so it is also necessary to consider the confusion matrix.

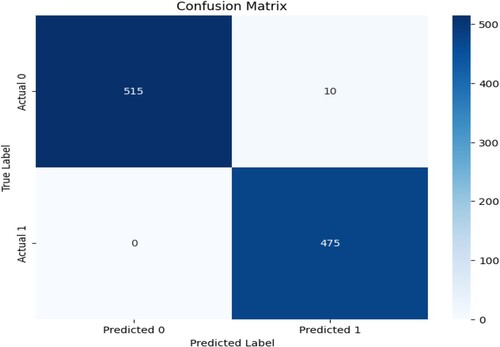

The figure above shows the confusion matrix of the model. The model produced 515 true positives and 475 true negatives, with 10 false positives (incorrect classifications) and 0 false negatives. The matrix suggests that the model errs in only about 5% of its predictions; that is, it is about 95% effective across the true and false, positive and negative cases.
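The standard metrics derived from a binary confusion matrix can be sketched as below. The four counts are taken from the text, reading the count of 10 as false positives, which is an assumption; the helper names are hypothetical.

```python
def safe_ratio(numerator, denominator):
    """Return numerator / denominator, or 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0

def binary_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, sensitivity and specificity from counts."""
    return {
        "accuracy": safe_ratio(tp + tn, tp + tn + fp + fn),
        "precision": safe_ratio(tp, tp + fp),
        "sensitivity": safe_ratio(tp, tp + fn),   # true positive rate (recall)
        "specificity": safe_ratio(tn, tn + fp),   # true negative rate
    }

# Counts as reported in the text (10 assumed to be false positives).
metrics = binary_metrics(tp=515, tn=475, fp=10, fn=0)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```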

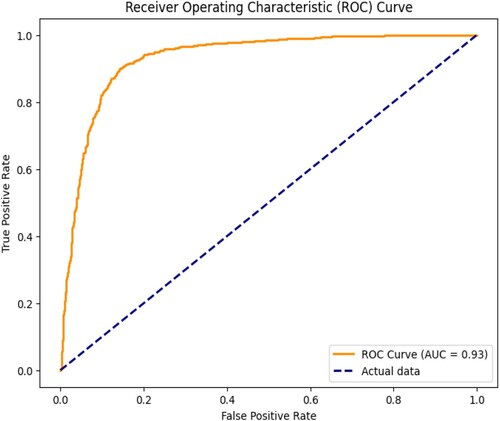

The figure above shows how accurately the model predicts across the chosen decision thresholds. The curve shows a sensitivity of 0.98 against a specificity of 0.95, which indicates that the model performs well.

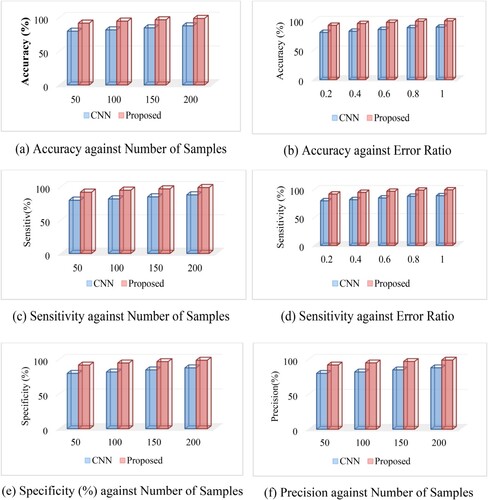

The performance of our model against a conventional CNN is shown in Figure 8.

Figure 8. Performance of our Model against conventional CNN; (a) Accuracy against Number of Samples (b) Accuracy against Error Ratio (c) Sensitivity against Number of Samples (d) Sensitivity against Error Ratio (e) Specificity (%) against Number of Samples (f) Precision against Number of Samples.

The figure above reports the mean of tests conducted under ideal conditions. As shown in Table 3, the binary classification of AD was more accurate overall using deep learning (87.76%). Training a parametric model is more challenging in this case because the training set was unbalanced across groups (77 NC individuals and 169 MCI persons); even so, the proposed technique performed almost as well as CNN when classifying NC and MCI. The classification accuracy was higher than previously thought, as were the sensitivity values (88.57% and 74.29%, respectively).

Table 3. Class average classification precisions.

Better sensitivity aids diagnosis because misclassifying patients into different categories has different consequences; for instance, misclassifying AD and MCI patients as NC could have more severe implications than the reverse. Class average classification precisions are given in the first four columns of the table above, while overall quality is given in the last three. Higher accuracy was seen for three classes (55.43% on NC, 32.02% on ncMCI, and 51.96% on AD), with cMCI being the only exception because of its somewhat smaller number of subjects. Compared with SVMs, performance improved in terms of overall specificity and accuracy (47.42% and 83.75%). The average accuracy of the model on 10-fold cross-validation is 96.00%, with a standard deviation of 4.42%. The 95% confidence interval for accuracy (93.26% to 98.74%) indicates the expected range of model performance based on the samples.
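The reported interval is consistent with a normal-approximation confidence interval around the cross-validation mean. The sketch below assumes that approach (multiplier 1.96, standard error equal to the standard deviation divided by the square root of the number of folds); the text does not state which method was used, and the function name is hypothetical.

```python
import math

def confidence_interval_95(mean, std, n_folds):
    """Normal-approximation 95% interval for the mean of n_folds fold scores."""
    half_width = 1.96 * std / math.sqrt(n_folds)
    return mean - half_width, mean + half_width

# Mean accuracy and standard deviation reported for 10-fold cross-validation.
low, high = confidence_interval_95(mean=96.00, std=4.42, n_folds=10)
print(f"{low:.2f}% to {high:.2f}%")  # 93.26% to 98.74%
```

With only ten folds, a Student's t multiplier would give a somewhat wider interval than the normal approximation assumed here.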

Every base classifier is trained in two steps. In the first step, the convolutional base of the CNN is held fixed while the weights of the fully connected (dense) classifier are fitted. The fully connected layers at the top were randomly initialised, so they received large weight updates. In the second step, to adapt the model to medical imaging, the newly trained fully connected layers were fine-tuned together with some of the uppermost layers of the convolutional base. The base classifiers used for the early detection of AD were thus developed with a focus on fine-tuning the network's fully connected layers together with its unfrozen layers. GAN-based training followed the same process for the base learners.

4.1. Limitation of the study

The study is highly dependent on the quality of the dataset, since the proposed model generates a synthetic dataset that mimics real-world MRI scans. The data might also be biased by the diversity of the population and the sampling, which would affect generalisation.

The combined training of GAN and CNN may also reduce interpretability, making it difficult to understand which image features contribute to detecting AD. The validation of the model is also highly dependent on the ADNI database. Detecting temporal changes may also affect the model's generalisation.

4.2. Industrial and clinical significance

The early detection and diagnosis of Alzheimer’s disease are significant for both clinical and industrial use. Early detection is a crucial part of clinical treatment because it allows timely intervention, and it improves patient management and outcomes. Research shows that patients benefit when the disease is detected early. Early detection also allows healthcare professionals to plan ahead and make early decisions aimed at recovery or at halting the progression of the disease. Automated decision support makes it easier for professionals to evaluate patients accurately. These innovations also optimise the use of biomedical devices such as MRI scanners and reduce energy and power consumption. They help with scheduling and reduce patient waiting times. We also used the ADNI database, which will help researchers collaborate on further investigation. Adopting this technology reduces human error, which can sometimes have serious consequences, and brings state-of-the-art devices into healthcare. The research can also be applied to X-rays and CT scans. Psychology and neurology students can benefit from the research method, as they can observe patient behaviour following early detection. The device and biomedical industries can also benefit by adopting the technology for other healthcare devices, which can lead to specialised devices and services.

4.3. Strategy for practical implementation

The strategy begins with supplying the model with data specific to the healthcare industry. Patient records, medical imaging data, or any other pertinent healthcare data may fall under this category. When managing patient data, privacy and security regulations such as HIPAA must be followed.

Adapt the GaFP-Net and Pyramid Attention Networks (PAN) architectures to the needs of healthcare applications. Tune the networks to accommodate medical imaging modalities, including CT or MRI scans, taking into account the special qualities and difficulties of healthcare data. Preprocess the medical images by standardising intensities, normalising pixel values, and addressing issues such as noise or artifacts. Given the scarcity of medical data, consider healthcare-specific augmentation techniques such as geometric transformations, intensity variations, or anatomical variations.
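The preprocessing and augmentation steps can be illustrated with a minimal sketch on a single 2D slice. Everything here is an assumption for illustration: the function names, the flip and 90-degree rotation as geometric transformations, and the 0.9 to 1.1 gain range as the intensity variation. Which transformations are anatomically acceptable depends on the modality and the task.

```python
import numpy as np

def normalise_intensity(image):
    """Rescale pixel values of a slice into the range [0, 1]."""
    img = np.asarray(image, dtype=float)
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros_like(img)        # constant image: nothing to scale
    return (img - lo) / (hi - lo)

def augment(image, rng):
    """Return a randomly flipped, rotated and intensity-scaled copy of a slice."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)                         # geometric: left-right flip
    out = np.rot90(out, k=int(rng.integers(0, 4)))   # geometric: 0-3 quarter turns
    gain = rng.uniform(0.9, 1.1)                     # intensity variation
    return np.clip(out * gain, 0.0, 1.0)

rng = np.random.default_rng(0)                       # fixed seed for repeatability
slice_2d = normalise_intensity(np.arange(64 * 64).reshape(64, 64))
augmented = augment(slice_2d, rng)
print(augmented.shape, augmented.min(), augmented.max())
```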

Train the algorithms using the clinical labels connected to the medical data. This entails using diagnostic labels or expert annotations for supervised learning tasks such as anomaly detection or disease classification. Make sure the models capture clinically significant characteristics. Include techniques for model interpretability and explainability, which are important in healthcare applications, so that medical professionals can understand and trust the decisions the models make. Methods such as PAN's attention mechanisms help to make the models interpretable.

Integrate the trained models into the current healthcare systems, ensuring that they work with Picture Archiving and Communication Systems (PACS) and Electronic Health Records (EHR). A smooth integration would enable effective adoption in clinical workflows. To ensure the models’ robustness and generalizability across demographic groups, validate them on a variety of patient populations. Examine the models in relation to different medical situations, taking age, gender, and comorbidities into account. Put in place mechanisms for continual learning and improvement. Make sure the models are updated with fresh data on a regular basis so they can adjust to changing medical understanding and diagnostic standards. Encourage data scientists and healthcare practitioners to work together. To improve the models and bring them more in line with practical clinical requirements, take physicians’ input into account.

5. Conclusion

Our research investigated various applications of pre-trained architectures, such as Generative Adversarial Networks (GANs) and Pyramid Attention Networks (PAN), combined with a dual-train Convolutional Neural Network (CNN), with the goal of building a robust classification model for Alzheimer's disease using MRI information. Furthermore, in order to improve our comprehension of the spectral, statistical, transformational, and constellation features essential for successful early AD detection, we integrated GaFP-Net (Generic and Feature-Preserved Network).

First, we used the CNN and Dual GAN architecture that had already been trained. We then added PAN to it and used Softmax to calculate categorical cross-entropy in the deep learning framework for the classification task. We then used the insights from GaFP-Net to further improve the features retrieved by ResNet-50 to evaluate the model's performance.

Data from the MIRIAD database and ADNI MRI scans were used in the evaluation. The proposed technique achieved an accuracy of 99%. Our GAN and PAN models performed better than other state-of-the-art models on the ADNI dataset, demonstrating their effectiveness in classifying Alzheimer's disease.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Babu, G. S. (2021). Exploiting of classification paradigms for early diagnosis of Alzheimer’s disease. Information Technology in Industry, 9(2), 281–288. https://doi.org/10.17762/itii.v9i2.345

- Basheera, S., & Ram, M. S. (2021). Deep learning based Alzheimer's disease early diagnosis using T2w segmented gray matter LJLMRI. International Journal of Imaging Systems and Technology, 31(3), 1692–1710. https://doi.org/10.1002/ima.22553

- Fan, Z., He, Y., Sun, W., Li, Z., Ye, C., & Wang, C. (2023). Clinical characteristics, diagnosis and management of sweet syndrome induced by azathioprine. Clinical and Experimental Medicine, 23(7), 3581–3587. https://doi.org/10.1007/s10238-023-01135-9

- Gharaibeh, M., Almahmoud, M., Ali, M., Al-Badarneh, A. F., El-Heis, M., Abualigah, L. M., Altalhi, M., Alaiad, A., & Gandomi, A. H. (2022). Early diagnosis of Alzheimer's disease using cerebral catheter angiogram neuroimaging: A novel model based on deep learning approaches. Big Data and Cognitive Computing, 6(1), 2. https://doi.org/10.3390/bdcc6010002

- Hamdi, M., Bourouis, S., Rastislav, K., & Mohmed, F. (2022). Evaluation of neuro images for the diagnosis of Alzheimer's disease using deep learning neural network. Frontiers in Public Health, 10. https://doi.org/10.3389/fpubh.2022.834032

- Islam, J., & Zhang, Y. (2018). Early diagnosis of Alzheimer's disease: A neuroimaging study with deep learning architectures. 2018 IEEE/CVF conference on computer vision and pattern recognition workshops (CVPRW), 1962–19622.

- Ji, H., Liu, Z., Yan, W. Q., & Klette, R. (2019). Early diagnosis of Alzheimer's disease using deep learning. Proceedings of the 2nd international conference on control and computer vision - ICCCV 2019.

- Ju, R., Hu, C., Zhou, P., & Li, Q. (2019). Early diagnosis of Alzheimer's disease based on resting-state brain networks and deep learning. IEEE/ACM Transactions on Computational Biology and Bioinformatics, 16(1), 244–257. https://doi.org/10.1109/TCBB.2017.2776910

- Kadhim, K. A., Mohamed, F. B., & Khudhair, Z. N. (2021). Deep learning: Classification and automated detection earlier of Alzheimer’s disease using brain MRI images. Journal of Physics: Conference Series, 1892(1). https://doi.org/10.1088/1742-6596/1892/1/012009

- Liu, H., Yuan, H., Hou, J., Hamzaoui, R., & Gao, W. (2022). PUFA-GAN: A frequency-aware generative adversarial network for 3D point cloud upsampling. IEEE Transactions on Image Processing, 31, 7389–7402. https://doi.org/10.1109/TIP.2022.3222918

- Liu, L., Zhang, Y., Tang, L., Zhong, H., Danzeng, D., Liang, C., & Liu, S. (2021). The neuroprotective effect of Byu d Mar 25 in LPS-induced Alzheimer's disease mice model. Evidence-Based Complementary and Alternative Medicine, 2021, 8879014. https://doi.org/10.1155/2021/8879014

- Long, W., Xiao, Z., Wang, D., Jiang, H., Chen, J., Li, Y., & Alazab, M. (2023). Unified spatial-temporal neighbor attention network for dynamic traffic prediction. IEEE Transactions on Vehicular Technology, 72(2), 1515–1529. https://doi.org/10.1109/TVT.2022.3209242

- Lu, D., Popuri, K., Ding, G. W., Balachandar, R., Beg, M. F., & Alzheimer’s Disease Neuroimaging Initiative. (2018). Multimodal and multiscale deep neural networks for the early diagnosis of Alzheimer’s disease using structural MR and FDG-PET images. Scientific Reports, 8(1), 5697.

- Luo, Y., Chen, D., & Xing, X. (2023). Comprehensive analyses revealed eight immune related signatures correlated with aberrant methylations as prognosis and diagnosis biomarkers for kidney renal papillary cell carcinoma. Clinical Genitourinary Cancer, 21(5), 537–545. https://doi.org/10.1016/j.clgc.2023.06.011

- Mahendran, N., Vincent, P. M., Srinivasan, K., & Chang, C. (2021). Improving the classification of Alzheimer’s disease using hybrid gene selection pipeline and deep learning. Frontiers in Genetics, 12. https://doi.org/10.3389/fgene.2021.784814

- Muhammed Raees, P. C., & Thomas, V. (2021). Automated detection of Alzheimer’s disease using deep learning in MRI. Journal of Physics: Conference Series, 1921(1). https://doi.org/10.1088/1742-6596/1921/1/012024

- Nagaraj, S., & Duong, T. Q. (2020). Risk score stratification of Alzheimer’s disease and mild cognitive impairment using deep learning.

- Nagarathna, C. R., & Kusuma, M. (2022). Automatic diagnosis of Alzheimer’s disease using hybrid model and CNN. Journal of Soft Computing Paradigm, 3(4), 322–335. https://doi.org/10.36548/jscp.2021.4.007

- Ocasio, E., & Duong, T. Q. (2021). Deep learning prediction of mild cognitive impairment conversion to Alzheimer’s disease at 3 years after diagnosis using longitudinal and whole-brain 3D MRI. PeerJ Computer Science, 7. https://doi.org/10.7717/peerj-cs.560

- Ortiz, A., Munilla, J., Górriz, J. M., & Ramírez, J. (2016). Ensembles of deep learning architectures for the early diagnosis of the Alzheimer's disease. International Journal of Neural Systems, 26(7), 1650025. https://doi.org/10.1142/S0129065716500258

- Ramzan, F., Khan, M. U. G., Rehmat, A., Iqbal, S., Saba, T., Rehman, A., & Mehmood, Z. (2019). A deep learning approach for automated diagnosis and multi-class classification of Alzheimer’s disease stages using resting-state fMRI and residual neural networks. Journal of Medical Systems, 44(2), 37.

- Seshadri, N. P., McCalla, S., & Shah, R. (2021). Early prediction of Alzheimer’s disease with a multimodal multitask deep learning model. Journal of Student Research, 10(1). https://doi.org/10.47611/jsrhs.v10i1.1366

- Sethi, M., Ahuja, S., Rani, S., Bawa, P., & Zaguia, A. (2021). Classification of Alzheimer's disease using Gaussian-based Bayesian parameter optimization for deep convolutional LSTM network. Computational and Mathematical Methods in Medicine, 2021. https://doi.org/10.1155/2021/4186666

- Sreelakshmi, S., Malu, G., & Sherly, E. (2022). Alzheimer’s disease classification from cross-sectional brain MRI using deep learning. 2022 IEEE International Conference on Image Processing, Informatics, Communication and Energy Systems (SPICES), 1, 401–405.

- Tanveer, M., Richhariya, B., Khan, R. U., Rashid, A. H., Khanna, P., Prasad, M., & Lin, C. T. (2020). Machine learning techniques for the diagnosis of Alzheimer’s disease. ACM Transactions on Multimedia Computing, Communications, and Applications, 16(1), 1–35. https://doi.org/10.1145/3344998

- Ting, G., Zhang, F., Zhu, X., Deng, D., Ran, T., Li, L., Feng, L., Kong, L., Sun, L., & Ji, X. (2023). Diagnosis and surgical outcomes of coarctation of the aorta in pediatric patients: A retrospective study. Frontiers in Cardiovascular Medicine, 10, 1–11. https://doi.org/10.3389/fcvm.2023.1078038

- Tufail, A. B., Zhang, Q., & Ma, Y. (2020). Binary classification of Alzheimer’s disease using sMRI imaging modality and deep learning. Journal of Digital Imaging, 33(5), 1073–1090. https://doi.org/10.1007/s10278-019-00265-5

- Vasuki, A., & Malar, R. J. (2021). A review on multimodal brain image fusion using deep learning for alzheimer's disease.

- Wang, S., Zhang, Y., Liu, G., Phillips, P., & Yuan, T. F. (2016). Detection of Alzheimer’s disease by three-dimensional displacement field estimation in structural magnetic resonance imaging. Journal of Alzheimer's Disease, 50(1), 233–248. https://doi.org/10.3233/JAD-150848

- Wang, T., & Cao, L. (2021). Deep learning based diagnosis of Alzheimer's disease using structural magnetic resonance imaging: A survey. 2021 3rd international conference on applied machine learning (ICAML), 408–412.

- Xiao, Z., Fang, H., Jiang, H., Bai, J., Havyarimana, V., Chen, H., & Jiao, L. (2023). Understanding private car aggregation effect via spatio-temporal analysis of trajectory data. IEEE Transactions on Cybernetics, 53(4), 2346–2357. https://doi.org/10.1109/TCYB.2021.3117705

- Yagis, E., Herrera, A. G., & Citi, L. (2021). Convolutional autoencoder based deep learning approach for Alzheimer's disease diagnosis using brain MRI. 2021 IEEE 34th international symposium on computer-based medical systems (CBMS), 486–491.

- Yamanakkanavar, N., Choi, J. Y., & Lee, B. (2020). MRI segmentation and classification of human brain using deep learning for diagnosis of Alzheimer’s disease: A survey. Sensors (Basel, Switzerland), 20(11). https://doi.org/10.3390/s20113243

- Zhang, J., Shen, Q., Ma, Y., Liu, L., Jia, W., Chen, L., & Xie, J. (2022). Calcium homeostasis in Parkinson’s disease: From pathology to treatment. Neuroscience Bulletin, 38(10), 1267–1270. https://doi.org/10.1007/s12264-022-00899-6

- Zhang, X., Han, L., Zhu, W., Sun, L., & Zhang, D. (2021). An explainable 3D residual self-attention deep neural network for joint atrophy localization and Alzheimer's disease diagnosis using structural MRI. IEEE Journal of Biomedical and Health Informatics, 26(11), 5289–5297. https://doi.org/10.1109/JBHI.2021.3066832

- Zhang, Y., Wang, S., Phillips, P., Dong, Z., Ji, G., & Yang, J. (2015). Detection of Alzheimer's disease and mild cognitive impairment based on structural volumetric MR images using 3D-DWT and WTA-KSVM trained by PSOTVAC. Biomedical Signal Processing and Control, 21, 58–73. https://doi.org/10.1016/j.bspc.2015.05.014

- Zhang, Y. D., Wang, S., & Dong, Z. (2014). Classification of Alzheimer disease based on structural magnetic resonance imaging by kernel support vector machine decision tree. Progress In Electromagnetics Research, 144, 171–184. https://doi.org/10.2528/PIER13121310

- Zheng, C., Xia, Y., Chen, Y., Yin, X., & Zhang, Y. (2018). Early diagnosis of Alzheimer’s disease by ensemble deep learning using FDG-PET. In Intelligence science and Big data engineering: 8th International conference, IScIDE 2018, Lanzhou, China, August 18–19, 2018, revised selected papers 8 (pp. 614–622). Springer International Publishing.