ABSTRACT

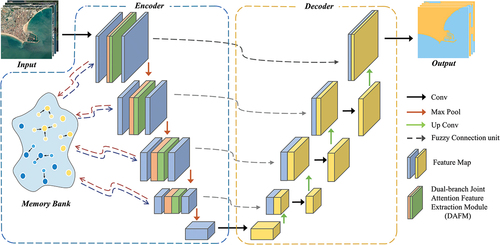

Sea-Land Segmentation (SLS) of remote sensing images is a meaningful task in the remote sensing and computer vision communities. Several difficult situations remain significant challenges in SLS: intra-class heterogeneity caused by imaging constraints, the inherent inter-class similarity of sea and land regions, and uncertain sea-land boundaries. In this paper, a fuzzy-embedded multi-scale prototype network, named FMPNet, is proposed to address these challenges. We design a dual-branch joint attention feature extraction module (DAFM) for effective feature extraction. A memory bank (MB) is designed to collect multi-scale prototypes, aiming to obtain discriminative feature representations and guide feature selection. In addition, a fuzzy connection (FC) unit is embedded in the network to mitigate the uncertainty of sea-land boundaries through a 2D Gaussian fuzzy method. Extensive experimental results on a publicly available SLS dataset and on real regional images captured by the Gaofen-1 satellite demonstrate that the proposed FMPNet outperforms other state-of-the-art methods.

Introduction

Sea-land segmentation (SLS) refers to pixel-level classification that distinguishes between sea and land areas in remote sensing imagery. It is of great significance and is widely applied in marine resource management (Fonner et al., Citation2020), natural environment monitoring (X. Li et al., Citation2018), and coastal change analysis (Sun et al., Citation2023). With the rapid advancement of aerospace technology and the commercialization of satellites, remote sensing platforms can now capture clearer images (Kotaridis & Lazaridou, Citation2021). The complex spectral and spatial textures in remote sensing images enable more precise feature representation, allowing a clearer expression of the textural features and spatial structural properties of land cover (K. Hu et al., Citation2023; X. Li et al., Citation2023). Remote sensing has become an effective and economical technique for large-scale mapping. However, the abundance of information in remote sensing images has also increased their complexity, making it significantly more challenging to extract valuable information from them.

Semantic segmentation has emerged as an effective technique for addressing this challenge, as it allows fine-grained pixel-level classification (Hao et al., Citation2020; Minaee et al., Citation2021). Recently, convolutional neural networks (CNNs) have become the state-of-the-art technique for image analysis (Alzubaidi et al., Citation2021; Tian et al., Citation2022). CNNs provide end-to-end solutions for various visual tasks, including instance segmentation (Fang et al., Citation2023; Y. Liu et al., Citation2020), semantic segmentation (Garcia-Garcia et al., Citation2018; X. Liu et al., Citation2019), and object detection (Y. Ji et al., Citation2021; Wu et al., Citation2020). They take the original image as input and output predictions without the need for manual feature engineering. Convolution and pooling operations give CNNs powerful context extraction capabilities. In the field of remote sensing, CNNs overcome the accuracy limitations of traditional target-oriented pixel segmentation methods and greatly improve the robustness and degree of automation of remote sensing image interpretation (Hao et al., Citation2020; Minaee et al., Citation2021).

In recent years, researchers have explored various CNN-based networks for SLS of remote sensing images and achieved remarkable results. Initially, most CNN-based methods focused on improving network performance by increasing network depth and width. However, this strategy may degrade features and lead to vanishing gradients (K. He et al., Citation2016). To overcome these problems, attention mechanisms (Oktay et al., Citation2018) and residual connections (Chaurasia & Culurciello, Citation2017) have commonly been employed to optimize networks, improve information propagation, and model intricate dependencies between input and output feature maps. J. Li et al. (Citation2022) proposed an optimized UNet structure for SLS that combines atrous spatial pyramid pooling (ASPP) and the flexible rectified linear unit (FReLU) with an attention mechanism to improve the perception capability of the network. Aghdami-Nia et al. (Citation2022) refined the UNet architecture by using residual operations in convolutional blocks and a Jaccard loss function to improve the accuracy of SLS. Shamsolmoali et al. (Citation2019) combined down- and up-sampling paths with several densely connected residual blocks that aggregate multi-scale contextual information for SLS of remote sensing images. Despite the rapid development of SLS, most current methods are built on principles commonly employed in general semantic segmentation, and their suitability for SLS requires further improvement. We aim to identify the key problems in SLS and design a network that addresses each of them specifically. Through research and experiments, we identified the following two main challenges in SLS of remote sensing images:

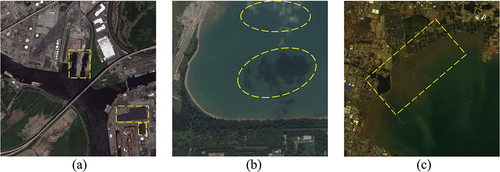

Intra-class heterogeneity and inter-class similarity. These phenomena are prevalent in SLS. Complex geomorphic information, the variable physical features of coastlines (Figure 1(a)), and the instability of remote sensing imaging (e.g. clouds and their shadows obscuring or blurring the images, as in Figure 1(b)) allow a single category to contain a wide variety of features, while different categories often share similar spectral features. This greatly increases the difficulty of SLS.

Figure 1. (a) The land building (two yellow boxes on the lower right) and the harbor (yellow box in the middle) have similar spectral features and shapes. (b) Remote sensing images are disturbed by clouds and their shadows. (c) The frequent changes in the shape of the shoal caused by the tide make the shoal a mixed area of sea and land, which exacerbates the uncertainty of the sea-land boundary.

Uncertainty of sea-land boundaries. From a geological viewpoint, the sea-land boundary can be defined as the transition zone between sea and land, usually the coastline where the sea meets the land (Barceló et al., Citation2023). However, fine segmentation of this boundary is not easy because coastlines are complex and diverse. The sea-land boundary has distinctive geographic, morphological, and dynamic characteristics, which add to the uncertainty of SLS. As shown in Figure 1(c), tides turn the shoal into a mixed sea-land area with irregular boundaries, making the boundary difficult to locate accurately. Moreover, remote sensing images are affected by imaging conditions, which produce mixed pixels at the sea-land boundary. This poses a significant challenge for accurate segmentation of the boundary.

In this paper, FMPNet is proposed to cope with the above challenges in SLS of remote sensing images. In response to the first challenge, we design DAFM, a dual-branch joint attention feature extraction module. In addition, the MB is designed to store multi-scale prototype features and to guide feature selection based on their discriminative power. This approach enlarges the differences between classes and alleviates the problems caused by intra-class heterogeneity and inter-class similarity. Furthermore, we take a new perspective on the SLS task: not all areas of a scene require the same level of attention. In many scenes, it is crucial to focus on weak information, such as boundaries, which is difficult to discriminate but highly important. To improve the network's perception of boundaries, we employ FC to establish a fuzzy relationship between neighboring pixels at sea-land boundaries. To validate the effectiveness of FMPNet for SLS, we compare it with state-of-the-art methods on the publicly available SLSD dataset (Chu et al., Citation2019) and on field remote sensing images from the Gaofen-1 satellite. The results demonstrate the superior performance of FMPNet. We hope that the insights presented in this paper will inspire and contribute to future research on SLS.

The main contributions of this paper are summarized as follows:

We summarize two intractable difficulties in SLS of remotely sensed imagery: (a) the prevalence of intra-class heterogeneity and inter-class similarity in SLS scenes, and (b) the uncertainty of sea-land boundaries. To address these difficulties, we propose a fuzzy-embedded multi-scale prototype network called FMPNet.

DAFM and MB are designed to achieve efficient feature extraction, with multi-scale prototypes guiding feature selection, to alleviate the segmentation difficulties caused by intra-class heterogeneity and inter-class similarity in SLS. FC is designed to establish fuzzy relationships between pixels at sea-land boundaries to alleviate the boundary uncertainty problem.

Extensive experiments were performed on the SLSD dataset. In addition, the model trained on the SLSD dataset was tested on real Gaofen-1 scenes that are not part of the SLSD dataset. Quantitative and qualitative results show that our model outperforms other state-of-the-art methods.

The remaining sections of this paper are organized as follows. Section 2 provides a brief review of related work. Section 3 describes in detail the FMPNet proposed in this paper. Section 4 demonstrates the performance of our method through comparison experiments on two datasets, and proves the effectiveness of each module through ablation experiments. Finally, Section 5 summarizes this paper and presents an outlook for future research.

Related works

Sea-land segmentation of remote sensing image

SLS of remote sensing images is a significant and widely studied field. In the early stages of research, the mainstream approach to automatic segmentation was threshold-based segmentation, in which each pixel is compared with a preset threshold. Otsu (Citation1979) proposed a pioneering threshold selection method based on gray-level histograms, which can be used to implement SLS effectively. X. Chen et al. (Citation2014) improved segmentation quality and response speed by combining coarse and precise threshold operations. However, these methods are highly sensitive to spectral variations and cannot learn from neighboring pixels. As a result, they only yield satisfactory outcomes when the spectral differences between sea and land are pronounced. When remote sensing images contain complex texture distributions and scene variations, no single threshold suits all segmentation scenes, so misclassification becomes common. Consequently, these drawbacks significantly hinder the effectiveness of threshold-based methods in practical applications.
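To make the threshold-based idea concrete, the following is a minimal Python sketch of Otsu's histogram-based threshold selection on a grayscale image flattened into a list of 8-bit values. The function name and the convention that pixels above the threshold are labeled land (i.e. water appearing darker) are illustrative assumptions, not part of the cited works.

```python
def otsu_threshold(pixels, levels=256):
    """Return the gray level t that maximizes the between-class variance.

    Pixels <= t form one class and pixels > t form the other.
    `pixels` is an iterable of integers in [0, levels - 1].
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = sum(hist)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_between = 0, -1.0
    w0, sum0 = 0, 0  # pixel count and intensity sum of the lower class
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so this split is undefined
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance up to the constant factor 1 / total**2.
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_between:
            best_t, best_between = t, between
    return best_t


# Hypothetical usage: dark water pixels vs. brighter land pixels.
image = [20] * 50 + [200] * 50
t = otsu_threshold(image)
land_mask = [p > t for p in image]  # assumption: land is brighter than water
```

A single global threshold like this is exactly what breaks down when sea and land spectra overlap, which is the limitation discussed above.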

With the rapid development of CNNs and their exceptional feature extraction capability and generalization performance, general-purpose semantic segmentation networks have proven effective in various specific tasks, including SLS. Currently, CNN-based methods dominate the state of the art in segmentation. The fully convolutional network (FCN) (Long et al., Citation2015) is considered the first milestone of end-to-end CNNs for segmentation; it produces satisfactory results by combining appearance and semantic information. Following the success of FCN in semantic segmentation, many innovative FCN-based approaches have been proposed. Badrinarayanan et al. (Citation2017) proposed SegNet, which uses an encoder-decoder structure to achieve effective pixel-wise semantic segmentation. UNet (Ronneberger et al., Citation2015) employs a similar structure, in which the encoder extracts image features and the decoder recovers detailed features lost during downsampling. DeepLabv3+ (L.-C. Chen et al., Citation2017) uses atrous convolution (L.-C. Chen et al., Citation2018) with different dilation rates to extract features with different receptive fields. SENet (J. Hu et al., Citation2018) improves network representation by modeling interdependencies between convolutional feature channels. HRNet (Wang et al., Citation2020) maintains high-resolution representations throughout the network by running multi-resolution branches in parallel and repeatedly fusing them, which strengthens the representation of multi-scale features.

Numerous CNN-based methods have also been proposed specifically for SLS. H. Lin et al. (Citation2017) proposed a multi-scale FCN for maritime semantic annotation that classifies the pixels of maritime images into three categories: sea, land, and ship. R. Li et al. (Citation2018) proposed DeepUnet, which deepens the classical encoder-decoder structure for SLS; however, the repeated down- and up-sampling in this structure causes information loss that affects the segmentation of image details and edges. Similar to DeepUnet, Tseng and Sun (Citation2022) mitigated the interference of climate change on SLS by training a deep network to learn the relationships between neighboring pixels. Cheng et al. (Citation2016) proposed SeNet, which combines a local smoothing regularization with a multi-task loss to achieve more spatially consistent SLS results. Cui et al. (Citation2020) proposed a scale-adaptive network (SANet) that replaces traditional convolution operations with adaptive multi-scale feature learning modules for large-scene SLS. X. Ji et al. (Citation2023) proposed DBENet, a two-branch integrated network for SLS with an efficient integrated attention learning strategy that strengthens the correlation between the two branches and thereby facilitates feature fusion and information transfer. Heidler et al. (Citation2021) employed a multi-task approach combining semantic segmentation and edge detection to segment the Antarctic coast.

Although the aforementioned methods have achieved good results in SLS, their designs are not specifically tailored to the SLS task, and their generalization to different scenes leaves room for improvement. They perform poorly when segmenting sea-land boundaries in complex scenes, and large intra-class misclassifications can even occur.

Fuzzy method for semantic segmentation

Deep learning is a deterministic feature learning model and provides limited help in reducing data uncertainty. Remote sensing image data inevitably contain a large amount of noise and unexpected uncertainty. To better characterize and control this uncertainty, introducing fuzzy methods into remote sensing image segmentation has attracted growing attention, although this research is still at a preliminary stage. Singh et al. (Citation2018) designed an adaptive type-2 fuzzy filter for removing salt-and-pepper noise from images, with two type-2 fuzzy primary membership functions that determine the thresholds for identifying noise-free pixels. H. He et al. (Citation2019) proposed a fuzzy uncertainty modeling algorithm to characterize land cover patterns, together with an adaptive interval-valued interval type-2 fuzzy clustering method. Lei et al. (Citation2018) proposed a fast fuzzy c-means clustering algorithm for color image segmentation that uses a multiscale morphological gradient reconstruction operation to obtain superpixel images with accurate contours, requiring less time while providing better segmentation results. Zhao et al. (Citation2021) embedded fuzzy logic units into a CNN to make segmentation results finer and more reliable. Chong et al. (Citation2022) combined a fuzzy pattern recognition method with a CNN for urban remote sensing image segmentation. Q. Lin et al. (Citation2022) proposed a fuzzy-based quality control algorithm that quantitatively predicts the quality of segmentation results by generating fuzzy sets to describe the captured uncertainty. Ma et al. (Citation2023) combined fuzzy deep learning with conditional random fields for fine segmentation of high-resolution remote sensing images. Qu et al. (Citation2023) introduced fuzzy neighborhood and multi-attention gate modules for remote sensing image segmentation.

Compared with traditional deterministic representations, fuzzy methods provide a new way of modeling that encodes uncertain conceptual semantics in remote sensing image analysis problems to reduce the effects of remote sensing image uncertainty. For the segmentation of sea-land boundaries, fuzzy methods help to obtain feature representations that are more perceptible and comprehensible.

Method

The network architecture of FMPNet is shown in Figure 2. FMPNet adopts an encoder-decoder structure, with the encoder and decoder marked by blue and orange dashed lines, respectively. First, the input image enters the DAFM, which connects a dual-branch feature extraction module and a joint attention module in series to achieve efficient feature extraction with multiple receptive fields. Meanwhile, the MB stores multi-scale prototypes during the encoding stage, and highly discriminative prototypes are used to guide feature extraction. Finally, FC constructs fuzzy relationships between neighboring pixels for the encoder features, which are then concatenated with decoder features of the same scale to enhance the network's ability to classify pixels at category boundaries.

Dual-branch joint attention feature extraction module

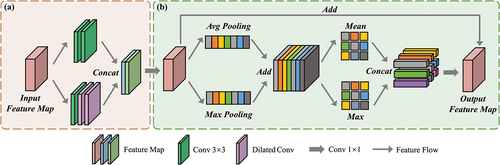

Considering the specific characteristics of SLS scenes and of the task, we propose DAFM, inspired by atrous convolution and the attention mechanism (X. Li et al., Citation2019). DAFM consists of a dual-branch feature extraction module, which extracts and fuses features under different receptive fields, and a joint attention module, which enables the network to focus on important features in different dimensions. The flow is shown in Figure 3.

Figure 3. Illustration of DAFM, where (a) denotes the dual-branch feature extraction module and (b) denotes the joint attention module.

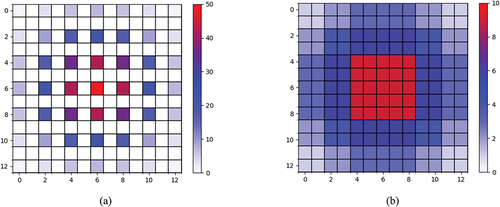

In the dual-branch feature extraction module, the first branch uses two consecutive standard convolutions to extract fine features, while the other branch employs three convolutions with different dilation rates to capture features from different receptive fields. To keep the computational cost low, we use 3 × 3 convolutional kernels. In addition, to avoid the raster (gridding) effect caused by stacking atrous convolutions with the same dilation rate (Figure 4(a)), we apply atrous convolutions with dilation rates of 1, 2, and 3 in sequence (Figure 4(b)). This expands the receptive field without skipping any pixels. The dual-branch structure thus captures both fine features and a degree of contextual information.

Figure 4. (a) Receptive field of three successive atrous convolutions with a dilation rate of 2, which produces a raster (gridding) effect, i.e. a large number of pixels in the receptive field are completely ignored. (b) Receptive field of three successive atrous convolutions with dilation rates of 1, 2, and 3, where the learning range spreads uniformly from the center to the periphery.
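The gridding effect illustrated in Figure 4 can be checked with a few lines of Python. The sketch below is an illustration rather than code from FMPNet: it enumerates which input offsets a stack of 3 × 3 kernels can reach for a given sequence of dilation rates. It ignores how strongly each offset is weighted and only tests whether an offset is reachable at all.

```python
from itertools import product


def reachable_offsets_1d(dilations):
    """1D input offsets reachable by stacked 3-tap kernels with taps at -d, 0, +d."""
    offsets = {0}
    for d in dilations:
        offsets = {o + t for o in offsets for t in (-d, 0, d)}
    return offsets


def coverage_2d(dilations):
    """(pixels actually reached, pixels in the bounding receptive field) for 3 x 3 kernels.

    The offsets of a dilated 3 x 3 kernel form a Cartesian product of 1D taps,
    so the reachable 2D set is the 1D reachable set crossed with itself.
    """
    one_d = reachable_offsets_1d(dilations)
    span = max(one_d) - min(one_d) + 1
    reached = set(product(one_d, repeat=2))
    return len(reached), span * span


print(coverage_2d((2, 2, 2)))  # (49, 169): holes in the receptive field
print(coverage_2d((1, 2, 3)))  # (169, 169): every pixel is reached
```

Both stacks span the same 13 × 13 window, but with three dilation-2 layers only the even offsets are reachable, which is the raster effect in Figure 4(a); rates 1, 2, and 3 fill the window completely.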

Subsequently, the feature maps of the two branches are concatenated in the feature fusion unit, restored to the required dimensions by a 1 × 1 convolution, and fed into the joint attention module. This module connects a spatial attention branch and a channel attention branch in series. The feature map first enters the spatial attention branch, which emphasizes the significance of different spatial locations in the input feature map and highlights regions with significant influence. It begins by reducing the spatial dimension to a vector through global average pooling. A convolution then learns the spatial weights for each channel, which are applied to the original feature map. Channel attention focuses on the importance of different channels in the input feature map, distinguishing which channels matter more for the task and improving the utilization of different channel features.
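The exact layers of the joint attention module are not specified here, so the following is only a parameter-free Python sketch of the serial ordering (spatial attention first, then channel attention) on a feature map stored as nested C × H × W lists. The spatial weights come from a sigmoid of each location's channel mean, in the style of CBAM, and the channel weights from a sigmoid of each channel's global average, in the style of SE. Both stand-ins, the `gain` parameter, and the function names are assumptions, not FMPNet's learned convolutions.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def spatial_attention(fmap):
    """Scale every location by sigmoid(mean over channels); CBAM-like stand-in."""
    c, h, w = len(fmap), len(fmap[0]), len(fmap[0][0])
    weights = [[sigmoid(sum(fmap[k][y][x] for k in range(c)) / c) for x in range(w)]
               for y in range(h)]
    return [[[fmap[k][y][x] * weights[y][x] for x in range(w)] for y in range(h)]
            for k in range(c)]


def channel_attention(fmap, gain=1.0):
    """Scale every channel by sigmoid(gain * global average); SE-like stand-in.

    `gain` replaces the learned layer that would map pooled values to weights.
    """
    out = []
    for ch in fmap:
        gap = sum(sum(row) for row in ch) / (len(ch) * len(ch[0]))
        s = sigmoid(gain * gap)
        out.append([[v * s for v in row] for row in ch])
    return out


def joint_attention(fmap):
    """Serial joint attention: spatial branch first, then channel branch."""
    return channel_attention(spatial_attention(fmap))


# Hypothetical 2-channel, 1 x 2 feature map.
out = joint_attention([[[0.0, 0.0]], [[2.0, 2.0]]])
```

In a trained network both weightings would be produced by learned layers; the sketch only shows how the two branches compose and that the output keeps the input shape.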

Multi-scale prototype extraction

Despite the strong feature extraction capability of DAFM, some features are inevitably neglected or lost to varying degrees. This is mainly due to intra-class heterogeneity caused by limited imaging conditions, inter-class similarity between land and sea areas, and the inherent limitations of convolution and down- and up-sampling operations.

Our design is motivated by the memory mechanisms used in contrastive learning and meta-learning (X. Liu et al., Citation2021). In previous memory mechanisms, one common approach is to retain as much information as possible, or even store all feature maps, which is relatively inefficient. Another approach retains only a few features, which weakens the generalization of the feature extractor. This suggests that features learned from different scenes need to be filtered before they form more stable concepts.

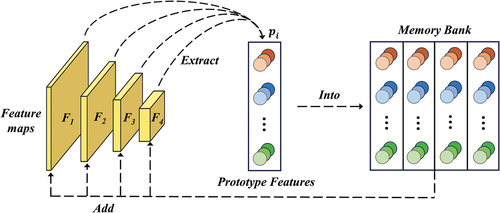

Building on the memory mechanism, we design a multi-scale prototype extraction module based on the MB. Prototype features are extracted from the multi-scale feature maps to guide feature selection and are stored in the MB, which explicitly establishes the relationships between inter-class and intra-class features. This design avoids a large memory footprint and long computation time while still providing an effective MB.

The overall process of the MB is shown in Figure 5. First, prototype features are extracted from the feature maps $F_i$ ($i = 1, \dots, 4$), the multi-scale feature maps captured by DAFM during the encoding stage. When extracting the prototype features $P_i$, we adopt a masking mechanism. Specifically, the similarity between $F_i$ and the stored prototype features is computed with an einsum operation to generate a similarity map. Positions in the similarity map that exceed a threshold are set to 1 and the remaining positions are set to 0, producing a similarity mask. The threshold is initialized to 0.5 and is a trainable parameter. $F_i$ is then multiplied by the similarity mask to generate $P_i$. Note that $F_1$ is taken directly as $P_1$ in this process, and each subsequent $P_i$ is always calculated with respect to the MB. $P_i$ then enters the MB, which maps the current $P_i$ to the memory $M$, completing the first round of prototype extraction and mapping. This process is repeated on the feature maps of the other scales, during which each $P_i$ continuously enters the MB for the momentum update of $M$. When $i = 4$, $M$ contains prototype features at four scales. This implementation enforces consistency and disparity constraints on class features, effectively alleviating the difficulties caused by inter-class similarity and intra-class heterogeneity.
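The masking and momentum steps of the MB can be sketched in plain Python for a single class prototype. Several details are not specified in the text and are assumptions here: cosine similarity as the einsum-based similarity, masked average pooling to turn the masked feature map into a prototype vector, a fixed threshold of 0.5, a momentum of 0.9, seeding the memory with the mean first-scale feature, and all scales already projected to a shared channel dimension. A hard 0/1 mask also has no useful gradient, so a real implementation that learns the threshold would need a differentiable relaxation, which this sketch omits.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def extract_prototype(features, reference, threshold=0.5):
    """Masked average pooling of pixel features that are similar to `reference`.

    features: list of C-dim pixel vectors from one scale (assumed already
    projected to a shared dimension C). Similarity above `threshold` -> mask 1.
    """
    mask = [1.0 if cosine(f, reference) > threshold else 0.0 for f in features]
    kept = sum(mask)
    if kept == 0:
        return list(reference)  # nothing passed the mask; keep the reference (assumption)
    return [sum(m * f[c] for m, f in zip(mask, features)) / kept
            for c in range(len(reference))]


def momentum_update(memory, prototype, momentum=0.9):
    """Exponential moving average: M <- momentum * M + (1 - momentum) * P."""
    return [momentum * m + (1.0 - momentum) * p for m, p in zip(memory, prototype)]


def build_memory(scale_features, threshold=0.5, momentum=0.9):
    """Run prototype extraction over scales; the first scale seeds the memory directly."""
    first = scale_features[0]
    memory = [sum(f[c] for f in first) / len(first) for c in range(len(first[0]))]
    for feats in scale_features[1:]:
        proto = extract_prototype(feats, memory, threshold)
        memory = momentum_update(memory, proto, momentum)
    return memory


# Hypothetical 2-channel pixel features.
p = extract_prototype([[1.0, 0.0], [1.0, 0.1], [0.0, 1.0]], [1.0, 0.0])
```

In this toy call, the third pixel is orthogonal to the reference and is masked out, so the prototype is the average of the first two pixels only.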

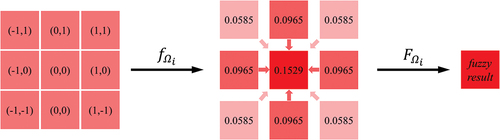

Fuzzy connection unit

The second challenge of SLS arises from two sources. On the one hand, tides, driven mainly by the Moon's gravitational pull, affect the morphology and location of shoals, which makes the sea-land boundary harder to segment. On the other hand, remote sensing imaging conditions introduce mixed pixels, where a single pixel contains multiple categories. This phenomenon hinders accurate segmentation of sea-land boundaries. To address these issues, we propose FC to reduce the uncertainty associated with sea-land boundaries, as shown in Figure 6. Using a fuzzy method, we represent these issues quantitatively and establish fuzzy relationships that make the representation more flexible. This concept is captured by Equation 1:

$$A_c = \{\, (x, \mu_{A_c}(x)) \mid x \in F \,\} \tag{1}$$

where $A_c$ represents the fuzzy set of category $c$ on the feature map $F$, and $\mu_{A_c}(x) \in [0, 1]$ is the membership degree of pixel $x$ in the fuzzy set $A_c$. The fuzzy set $A_c$ is determined by the membership function $\mu_{A_c}$, and $A_c$ degenerates to an ordinary (crisp) set when $\mu_{A_c}(x) \in \{0, 1\}$. Inspired by this fuzzy set theory, we adopt a 2D Gaussian fuzzy method to establish fuzzy relationships between neighboring pixels at the sea-land boundary and to quantify the uncertainty. First, a 3 × 3 Gaussian kernel is set up, and the relative membership of each pixel $p$ within the kernel to the center pixel $x_c$ is calculated as in Equation 2:

$$\mu(p) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{u^2 + v^2}{2\sigma^2}\right) \tag{2}$$

where $(u, v)$ is the relative position coordinate of pixel $p$ in the Gaussian kernel and $\sigma^2$ denotes the variance, with an initial value of 1, so that by default the kernel follows the standard 2D Gaussian distribution. The final feature representation $\hat{x}_c$ of $x_c$ is calculated from the pixel itself and its 8 neighboring pixels, 9 pixels in total, as in Equation 3:

$$\hat{x}_c = \sum_{p \in \Omega(x_c)} N\big(\mu(p)\big)\, x_p \tag{3}$$

where $\Omega(x_c)$ is the 3 × 3 neighborhood of $x_c$, $x_p$ represents the value of neighborhood pixel $p$, and $N$ represents the normalization operation that makes the nine weights sum to one. The membership degree $\mu(p)$ of $p$ is determined by its position: the center pixel itself has the largest weight, followed by the four pixels above, below, left, and right, while the diagonal pixels have the lowest weight.
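Equations 2 and 3 amount to a normalized 3 × 3 Gaussian weighting of each pixel's neighborhood. The sketch below applies that weighting to a single-channel map stored as nested lists. The replicate padding at the borders and the function names are assumptions, since the text does not say how border pixels are handled, and in FMPNet the variance may be learned rather than fixed.

```python
import math


def gaussian_membership(sigma2=1.0):
    """3 x 3 kernel of relative memberships (Eq. 2) after normalization N.

    The 1 / (2*pi*sigma2) factor of the 2D Gaussian is omitted because
    normalizing the nine weights to sum to one cancels it anyway.
    """
    raw = [[math.exp(-(u * u + v * v) / (2.0 * sigma2)) for v in (-1, 0, 1)]
           for u in (-1, 0, 1)]
    total = sum(sum(row) for row in raw)
    return [[w / total for w in row] for row in raw]


def fuzzy_connect(fmap, sigma2=1.0):
    """Replace each pixel by the membership-weighted sum of its 3 x 3 neighborhood (Eq. 3).

    Border pixels reuse the nearest valid neighbor (replicate padding, an assumption).
    """
    h, w = len(fmap), len(fmap[0])
    k = gaussian_membership(sigma2)
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for du in (-1, 0, 1):
                for dv in (-1, 0, 1):
                    yy = min(max(y + du, 0), h - 1)
                    xx = min(max(x + dv, 0), w - 1)
                    acc += k[du + 1][dv + 1] * fmap[yy][xx]
            out[y][x] = acc
    return out


# Hypothetical single-channel map with a vertical sea (0) / land (1) edge.
edge = [[0.0, 0.0, 1.0], [0.0, 0.0, 1.0], [0.0, 0.0, 1.0]]
smoothed = fuzzy_connect(edge)
```

With $\sigma^2 = 1$, the normalized weights are roughly 0.204 for the center, 0.124 for each edge neighbor, and 0.075 for each corner, matching the ordering described above. The center pixel next to the edge receives about 0.274 from the land column, a soft rather than hard boundary value.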

Experiments

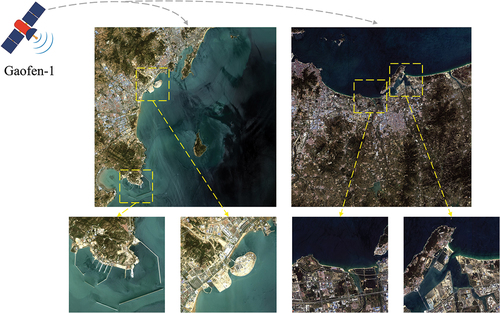

In order to evaluate the capability of the proposed FMPNet, qualitative and quantitative analyses have been performed using a publicly available SLS dataset and Gaofen-1 satellite data from actual scenes in the Jiaodong Peninsula region of China.

Dataset

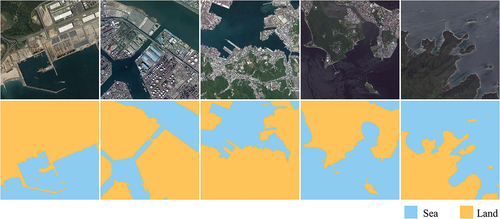

SLSD is a public SLS dataset published by Chu et al. (Citation2019). Its data come from Google Earth and comprise 210 remote sensing images, each with three bands, a size of about 1000 × 1000 pixels, and a spatial resolution of 3 ~ 5 m. By cropping, a training set of 800 images of 512 × 512 pixels and a test set of 320 images were constructed. Examples of images and labels from the SLSD dataset are shown in Figure 7.

The Gaofen-1 satellite dataset contains two remote sensing images of the Jiaodong Peninsula, China, each about 4500 × 4500 pixels in size with a spatial resolution of 2 ~ 8 m. Our institution is located in this region, which facilitated our field visits and validation.

Experimental settings

In this work, we use a laboratory server for training and experiments. The main hardware and software configurations are listed in Table 1. As a trade-off between model performance and scale, the model takes a 512 × 512 × 3 color image as input and outputs a 512 × 512 × 1 single-channel binary map. To present the segmentation results more clearly, we colorize the output: blue represents sea and yellow represents land.

Table 1. Hardware and software configuration of the experiments.

Evaluation metrics

SLS performs pixel-level segmentation of images, so pixel-level metrics are commonly used to evaluate SLS methods. We selected six evaluation metrics. Overall accuracy (OA) is the ratio of correctly classified pixels to the total number of pixels. Precision is the ratio of pixels correctly classified as a given category to all pixels classified as that category. Recall is the ratio of pixels correctly classified as a given category to all pixels that actually belong to it. The F1 score is the harmonic mean of precision and recall. Intersection over union (IoU) is the ratio of the intersection to the union of the prediction and the ground-truth label for a single category. Mean intersection over union (mIoU) is the mean IoU over the sea and land categories. The metrics are given in Equations (4)-(9) (Long et al., Citation2015):

$$\mathrm{OA} = \frac{TP + TN}{TP + TN + FP + FN} \tag{4}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{5}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{6}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{7}$$

$$\mathrm{IoU} = \frac{TP}{TP + FP + FN} \tag{8}$$

$$\mathrm{mIoU} = \frac{1}{k} \sum_{i=1}^{k} \frac{TP_i}{TP_i + FP_i + FN_i} \tag{9}$$

where TP is true positive, TN is true negative, FP is false positive, FN is false negative, and k in the mIoU formula denotes the number of categories.
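The metrics above can be computed from the four confusion counts of a binary sea/land map. In this minimal sketch, treating land (label 1) as the positive class and reporting precision and recall for that class only are assumptions, since the text does not state which class is positive or whether these two metrics are averaged over both classes; the function names are likewise illustrative.

```python
def confusion_counts(pred, gt, positive=1):
    """Count TP, TN, FP, FN for a binary map flattened to lists (positive = land here)."""
    tp = tn = fp = fn = 0
    for p, g in zip(pred, gt):
        if p == positive and g == positive:
            tp += 1
        elif p != positive and g != positive:
            tn += 1
        elif p == positive:
            fp += 1
        else:
            fn += 1
    return tp, tn, fp, fn


def _ratio(num, den):
    return num / den if den else 0.0  # guard against empty classes


def sls_metrics(tp, tn, fp, fn):
    """OA, Precision, Recall, F1, IoU of the positive class, and mIoU over k = 2 classes."""
    oa = _ratio(tp + tn, tp + tn + fp + fn)
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    f1 = _ratio(2 * precision * recall, precision + recall)
    iou_pos = _ratio(tp, tp + fp + fn)
    # For the negative class, TN plays the role of TP and FP/FN swap roles.
    iou_neg = _ratio(tn, tn + fn + fp)
    return {"OA": oa, "Precision": precision, "Recall": recall,
            "F1": f1, "IoU": iou_pos, "mIoU": (iou_pos + iou_neg) / 2}


# Hypothetical 4-pixel example: 1 = land, 0 = sea.
m = sls_metrics(*confusion_counts([1, 1, 0, 0], [1, 0, 0, 1]))
```

Here each count equals 1, so OA, precision, recall, and F1 are all 0.5, while both class IoUs, and therefore mIoU, are 1/3.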

Comparative experiments

To demonstrate the effectiveness of FMPNet, we conducted experimental comparisons with other state-of-the-art methods on the SLSD dataset and the Chinese Jiaodong Peninsula data from Gaofen-1 satellite.

SLSD

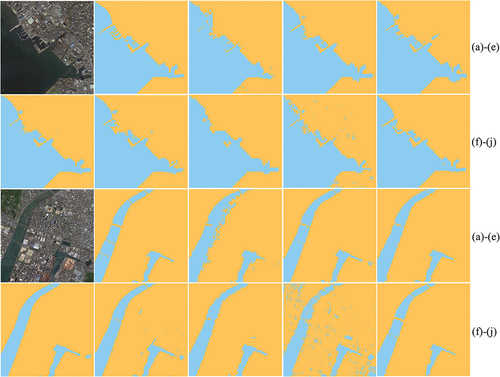

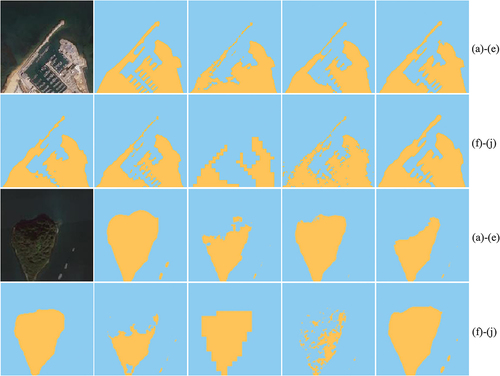

Under the same experimental settings, we compared FMPNet with 10 advanced segmentation methods. Table 2 presents the quantitative evaluation of the different methods on the SLSD dataset. FMPNet achieves the best performance on most metrics. In the quantitative evaluation, DeepUnet is a strong competitor to FMPNet, with higher Precision than ours. However, the visualized segmentation results in Figure 8 show that DeepUnet may produce coarser sea-land boundaries with some misclassifications, whereas the results of FMPNet are closer to the ground truth (GT).

Table 2. Performance comparison of the different methods on the SLSD dataset. All values are reported in percentage (%). Color convention: best, 2nd-best, and 3rd-best.

Figure 8. Visualization of the segmentation results of FMPNet and other advanced methods for the port and island scenes. (a) Input image. (b) GT. (c) AttentionUNet. (d) FCN. (e) DeepUNet. (f) DeepLabv3+. (g) LinkNet. (h) PSPNet. (i) SegNet. (j) FMPNet (ours).

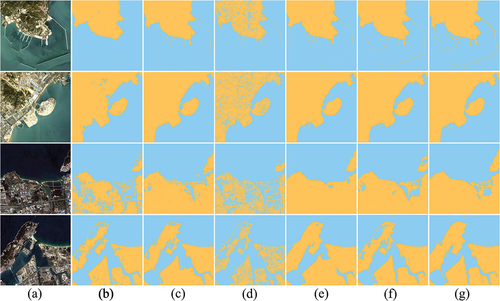

For more intuitive visual segmentation results, we selected four representative scenes from the SLSD dataset for the SLS task: port, island, city coastline, and seaway. To aid visual differentiation, we assigned the ocean a blue color and the land a yellow color in the visualization results.

Figure 8 shows the visualization results for the port and island scenes. In the port scene, FMPNet segments the long dike completely and accurately captures the intricate details within the port, whereas the other methods produce many misclassifications in the detailed textures of the port. In the island scene, where the spectral characteristics of the island and the nearby sea are remarkably similar, FMPNet achieves a relatively complete segmentation of the island and successfully identifies small objects such as ships. This demonstrates that FMPNet meets the specific segmentation requirements of these scenes. Overall, the completeness of its scene segmentation is the most prominent advantage of FMPNet over the other methods.

To further test FMPNet, we conducted experiments on two large scenes. The segmentation results of the different methods for the city coastline and the seaway are shown in Figure 9. The coastline scene contains many detailed features, and the seaway scene contains complex urban spectral features as well as bridges and waterways; FMPNet achieves satisfactory results in both scenes. The visualization results show that FMPNet still leads the other methods in segmentation completeness.

Field remote sensing data from Gaofen-1 in Jiaodong, China

In this section, we conduct comparison experiments using remote sensing images of the Jiaodong Peninsula in China captured by the Gaofen-1 satellite. Unlike the previous experiment, we directly test the model trained on the SLSD dataset on these images. We selected four challenging scenes from the images, including docks and islands, which are marked by yellow boxes in Figure 10.

Figure 10. Remote sensing images of the Jiaodong Peninsula in China captured by the Gaofen-1 satellite, with the four areas we utilized for the evaluation marked with yellow boxes in the figure.

The four scenes are located in Qingdao and Yantai, close to our institution, which allowed us to conduct detailed fieldwork. We evaluated the segmentation results of each method qualitatively, and quantitatively on 10,000 randomly sampled points. As shown in , FMPNet achieves the best performance on all metrics. Its advantage is particularly large in mIoU, which is 6.04% higher than that of the second-best method. Notably, these results were obtained by applying the model trained solely on the SLSD dataset directly to this real scene.
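The point-based evaluation described above can be sketched as follows. This is a minimal sketch under stated assumptions. The paper does not specify its sampling protocol, so the sketch assumes three things: the 10,000 points are drawn uniformly at random without replacement, they come from a reference label raster derived from the fieldwork, and sea and land are encoded as 0 and 1. The function name `sample_confusion_matrix` is hypothetical.

```python
import numpy as np


def sample_confusion_matrix(pred, ref, n_points=10_000, n_classes=2, seed=0):
    """Confusion matrix built from randomly sampled pixel positions.

    Hypothetical helper: pred and ref are 2D integer label maps of the same
    shape (assumed encoding 0 = sea, 1 = land). Rows of the result index the
    reference class and columns the predicted class.
    """
    if pred.shape != ref.shape:
        raise ValueError("pred and ref must have the same shape")
    rng = np.random.default_rng(seed)
    # Assumed protocol: uniform sampling without replacement over the image.
    flat_idx = rng.choice(pred.size, size=n_points, replace=False)
    p = pred.ravel()[flat_idx]
    r = ref.ravel()[flat_idx]
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (r, p), 1)  # unbuffered add handles repeated (r, p) pairs
    return cm


# Toy scene: right half is land; a thin strip of sea is predicted as land.
ref = np.zeros((200, 200), dtype=np.int64)
ref[:, 100:] = 1
pred = ref.copy()
pred[:, 95:100] = 1
cm = sample_confusion_matrix(pred, ref)
print(cm.sum())  # 10000 sampled points in total
```

The resulting confusion matrix is all that is needed to compute OA, F1 score, and mIoU for each method on the same set of sampled points.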

Table 3. Performance comparison of the different methods on the Gaofen-1 data. All values are reported in percentage (%). Color convention: best, 2nd-best, and 3rd-best.

The visualization results of the Gaofen-1 data for SLS are shown in . Among them, displays the segmentation results of FMPNet, which achieved satisfactory performance. AttentionUNet and SegNet performed poorly and misclassified large regions. DeepLabv3+ and DeepUNet achieved good results, which can be attributed to their deeper networks and diverse receptive fields. However, they still misclassified some pixels along the sea-land boundary. In contrast, FMPNet performed best on the Gaofen-1 data. It produced complete and detailed segmentations of the sea-land boundaries, including the challenging breeding area. This demonstrates the strong SLS performance of FMPNet and its ability to adapt to different scenes.

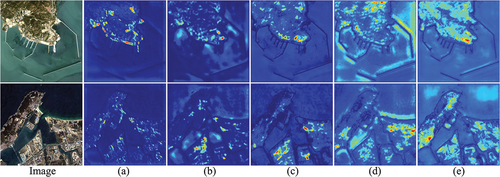

Ablation experiments

To evaluate the performance improvement brought by each module of FMPNet, we conducted ablation experiments on the SLSD dataset, using OA, F1 score, and mIoU as evaluation metrics. The quantitative results are shown in . Adding DAFM, MB, or FC individually to the baseline AttentionUNet improves all metrics. The largest gains are in mIoU: 2.58%, 2.5%, and 2.18%, respectively, which confirms the effectiveness of each proposed module. FMPNet achieves the best results when all three modules are used, improving OA, F1 score, and mIoU by 1.87%, 1.71%, and 3.64%, respectively. This demonstrates the compatibility and robustness of the modules.
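For reference, the three metrics can be computed from a confusion matrix as in the sketch below. The definitions of OA and mIoU are the standard ones. The paper does not state how F1 is aggregated over the sea and land classes, so averaging the per-class F1 scores is an assumption here. The function name `seg_metrics` is also an assumption.

```python
import numpy as np


def seg_metrics(cm):
    """Return (OA, mean F1, mIoU) in percent from a confusion matrix.

    cm[i, j] counts pixels of reference class i predicted as class j.
    Averaging F1 over classes is an assumption; a single-class F1 is another
    common choice. Every class is assumed to occur in the reference or the
    prediction, otherwise its F1 and IoU would divide by zero.
    """
    cm = np.asarray(cm, dtype=np.float64)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class c but belonging elsewhere
    fn = cm.sum(axis=1) - tp  # belonging to class c but predicted elsewhere
    oa = tp.sum() / cm.sum()
    f1 = 2 * tp / (2 * tp + fp + fn)
    iou = tp / (tp + fp + fn)
    return 100 * oa, 100 * f1.mean(), 100 * iou.mean()


# Toy example: 10 sea pixels predicted as land, no land predicted as sea.
oa, f1, miou = seg_metrics(np.array([[90, 10], [0, 100]]))
print(f"OA={oa:.2f}  F1={f1:.2f}  mIoU={miou:.2f}")
```

Because IoU penalizes each error in both the false-positive and false-negative terms of the union, mIoU is typically lower and more sensitive than OA, as the toy example illustrates.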

Table 4. Results of the ablation experiment.

Attention levels are correlated with segmentation results. In the SLS task, visualizing feature maps shows more clearly which regions the model focuses on and how each module contributes. We visualized the effect of ablating each module on the Gaofen-1 data, as shown in . The brightness of a region indicates how much attention the modules pay to it: the brighter the region, the more strongly the module responds to it. These maps reveal how each module refines different features.
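The paper does not state how its feature maps were rendered. The sketch below assumes a common convention. It averages the absolute activations of a (C, H, W) feature tensor over channels. It then min-max scales the result to [0, 1], so that brighter means a stronger response. Finally, it upsamples the map to the image size for display. The function name `feature_heatmap` and the restriction to integer upsampling factors are illustrative assumptions.

```python
import numpy as np


def feature_heatmap(features, out_hw=None):
    """Collapse a (C, H, W) feature map into an (H, W) brightness map in [0, 1].

    Assumed convention, not taken from the paper: channel-wise mean of
    absolute activations, min-max scaling, then optional nearest-neighbour
    upsampling by an integer factor.
    """
    act = np.abs(np.asarray(features, dtype=np.float64)).mean(axis=0)
    lo, hi = act.min(), act.max()
    # A constant map carries no spatial preference, so render it as all dark.
    heat = (act - lo) / (hi - lo) if hi > lo else np.zeros_like(act)
    if out_hw is not None:
        h, w = heat.shape
        if out_hw[0] % h or out_hw[1] % w:
            raise ValueError("out_hw must be an integer multiple of the map size")
        # Repeat each cell so the map matches the image resolution.
        heat = heat.repeat(out_hw[0] // h, axis=0).repeat(out_hw[1] // w, axis=1)
    return heat


# Example: a 64-channel 32x32 feature map displayed at 256x256.
rng = np.random.default_rng(0)
heat = feature_heatmap(rng.normal(size=(64, 32, 32)), out_hw=(256, 256))
print(heat.shape, heat.min(), heat.max())
```

Bilinear upsampling, or overlaying the map on the input image with a colormap, are equally common display choices. The comparison between ablated variants is only meaningful if the same rendering is used for all of them.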

shows the feature visualization of the baseline, in which the regions of interest are scattered and the sea-land boundaries are unclear. The feature visualization after adding MB is shown in . The regions of interest expand, the network learns features more uniformly, and the boundaries become clearer than before. shows the feature visualization after adding FC. Here the sea-land boundaries are emphasized and the boundary contours become noticeably clearer, especially the strip-shaped boundaries in the harbor, which are crucial for SLS. The visualization after adding DAFM is shown in . Large contiguous areas receive attention, and the boundaries are completely delineated.

The feature visualization of FMPNet is the best, as shown in . The land and sea boundaries are clearly delineated, and the land and sea areas are each rendered in uniform colors, indicating that FMPNet separates them clearly. These feature visualizations in illustrate the role of each module in different regions. They show that FMPNet clearly distinguishes sea from land and can effectively accomplish the SLS task.

Conclusion

In this paper, we propose FMPNet, a fuzzy-embedded multi-scale prototype network. It addresses the challenges of inter-class similarity, intra-class heterogeneity, and uncertain sea-land boundaries in SLS by combining fuzzy methods with multi-scale prototype learning. The proposed DAFM combines the advantages of atrous convolution and attention to enhance the feature learning ability of the network. The MB collects multi-scale prototypes of each class to extract discriminative feature representations, alleviating the problems posed by inter-class similarity and intra-class heterogeneity. In addition, embedding the proposed FC into the network structure effectively reduces the influence of mixed pixels and improves the accuracy of classifying pixels at the sea-land boundary. Effectively combining fuzzy methods with deep learning provides new insights for SLS. Extensive experiments on a public SLS dataset and real remote sensing data from Gaofen-1 validate the feasibility, effectiveness, and generalization ability of the proposed FMPNet.
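The conclusion states that the MB collects multi-scale prototypes of each class, but it does not spell out the update or matching rules. The sketch below is not FMPNet's implementation. It illustrates one common prototype-learning pattern, assumed here for illustration:

- each class prototype at a given scale is the masked average of that class's features;
- the prototype is blended into the bank with an exponential moving average;
- pixels are compared with the prototypes by cosine similarity.

The class `PrototypeBank`, its `momentum` parameter, and the cosine matching are hypothetical.

```python
import numpy as np


class PrototypeBank:
    """Hypothetical per-scale, per-class prototype memory (not FMPNet's code)."""

    def __init__(self, n_classes, n_scales, dim, momentum=0.9):
        self.protos = np.zeros((n_scales, n_classes, dim))
        self.seen = np.zeros((n_scales, n_classes), dtype=bool)
        self.momentum = momentum

    def update(self, scale, features, labels):
        """features: (C, H, W) feature map; labels: (H, W) class map at this scale."""
        flat = features.reshape(features.shape[0], -1)  # (C, H*W)
        lab = labels.ravel()
        for k in range(self.protos.shape[1]):
            mask = lab == k
            if not mask.any():
                continue  # class absent at this scale: keep the stored prototype
            proto = flat[:, mask].mean(axis=1)  # masked average pooling
            if self.seen[scale, k]:
                m = self.momentum
                self.protos[scale, k] = m * self.protos[scale, k] + (1 - m) * proto
            else:
                self.protos[scale, k] = proto
                self.seen[scale, k] = True

    def similarity(self, scale, features):
        """Cosine similarity of every pixel to each class prototype: (K, H, W)."""
        c, h, w = features.shape
        flat = features.reshape(c, -1)
        f = flat / (np.linalg.norm(flat, axis=0, keepdims=True) + 1e-8)
        p = self.protos[scale]
        p = p / (np.linalg.norm(p, axis=1, keepdims=True) + 1e-8)
        return (p @ f).reshape(-1, h, w)


# Toy example at one scale: sea pixels look like [1, 0], land pixels like [0, 1].
bank = PrototypeBank(n_classes=2, n_scales=1, dim=2, momentum=0.5)
feats = np.array([[[1.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [0.0, 1.0]]])
labels = np.array([[0, 1], [0, 1]])
bank.update(0, feats, labels)
print(bank.similarity(0, feats).argmax(axis=0))  # nearest prototype per pixel
```

Under these assumptions, a pixel whose spectrum is ambiguous can still be assigned to the class whose prototype its learned feature is closest to. That kind of discrimination is what the MB is meant to provide against inter-class similarity and intra-class heterogeneity.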

Acknowledgments

The authors sincerely thank the reviewers and editors for their suggestions for improving this article.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The SLSD dataset is available at https://github.com/lllltdaf2/Sea-land-segmentation-data. The field data for the Jiaodong Peninsula from Gaofen-1 were provided by our work department, and some of them are publicly available at https://github.com/CVFishwgy/Gaofen1_sea_land_data.

Additional information

Funding

References

- Aghdami-Nia, M., Shah-Hosseini, R., Rostami, A., & Homayouni, S. (2022). Automatic coastline extraction through enhanced sea-land segmentation by modifying Standard U-Net. International Journal of Applied Earth Observation and Geoinformation, 109, 102785. https://doi.org/10.1016/j.jag.2022.102785

- Alzubaidi, L., Zhang, J., Humaidi, A. J., Al-Dujaili, A., Duan, Y., Al-Shamma, O., Santamaría, J., Fadhel, M. A., Al-Amidie, M., & Farhan, L. (2021). Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. Journal of Big Data, 8, 1–15. https://doi.org/10.1186/s40537-021-00444-8

- Badrinarayanan, V., Kendall, A., & Cipolla, R. (2017). SegNet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Transactions on Pattern Analysis & Machine Intelligence, 39(12), 2481–2495. https://doi.org/10.1109/TPAMI.2016.2644615

- Barceló, M., Vargas, C. A., & Gelcich, S. (2023). Land–sea interactions and ecosystem services: research gaps and future challenges. Sustainability, 15(10), 8068. https://doi.org/10.3390/su15108068

- Chaurasia, A., & Culurciello, E. (2017). Linknet: Exploiting encoder representations for efficient semantic segmentation. IEEE Visual Communications and Image Processing (VCIP). https://doi.org/10.1109/VCIP.2017.8305148

- Cheng, D., Meng, G., Cheng, G., & Pan, C. (2016). SeNet: Structured edge network for sea–land segmentation. IEEE Geoscience & Remote Sensing Letters, 14(2), 247–251. https://doi.org/10.1109/LGRS.2016.2637439

- Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., & Yuille, A. L. (2017). Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Transactions on Pattern Analysis & Machine Intelligence, 40(4), 834–848. https://doi.org/10.1109/TPAMI.2017.2699184

- Chen, X., Sun, J., Yin, K., & Yu, J. (2014). Sea-land segmentation algorithm of SAR image based on Otsu method and statistical characteristic of sea area. Journal of Data Acquisition and Processing, 29, 603–608.

- Chen, L.-C., Zhu, Y., Papandreou, G., Schroff, F., & Adam, H. (2018). Encoder-decoder with atrous separable convolution for semantic image segmentation. Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany.

- Chong, Q., Xu, J., Jia, F., Liu, Z., Yan, W., Wang, X., & Song, Y. (2022). A multiscale fuzzy dual-domain attention network for urban remote sensing image segmentation. International Journal of Remote Sensing, 43(14), 5480–5501. https://doi.org/10.1080/01431161.2022.2135413

- Chu, Z., Tian, T., Feng, R., & Wang, L. (2019). Sea-land segmentation with Res-UNet and fully connected CRF. IEEE International Geoscience and Remote Sensing Symposium. https://doi.org/10.1109/IGARSS.2019.8900625

- Cui, B., Jing, W., Huang, L., Li, Z., & Lu, Y. (2020). Sanet: A sea–land segmentation network via adaptive multiscale feature learning. IEEE Journal of Selected Topics in Applied Earth Observations & Remote Sensing, 14, 116–126. https://doi.org/10.1109/JSTARS.2020.3040176

- Fang, L., Jiang, Y., Yan, Y., Yue, J., & Deng, Y. (2023). Hyperspectral image instance segmentation using spectral–spatial feature pyramid network. IEEE Transactions on Geoscience & Remote Sensing, 61, 1–13. https://doi.org/10.1109/TGRS.2023.3240481

- Fonner, R., Bellanger, M., & Warlick, A. (2020). Economic analysis for marine protected resources management: Challenges, tools, and opportunities. Ocean & Coastal Management, 194, 105222. https://doi.org/10.1016/j.ocecoaman.2020.105222

- Garcia-Garcia, A., Orts-Escolano, S., Oprea, S., Villena-Martinez, V., Martinez-Gonzalez, P., & Garcia-Rodriguez, J. (2018). A survey on deep learning techniques for image and video semantic segmentation. Applied Soft Computing, 70, 41–65. https://doi.org/10.1016/j.asoc.2018.05.018

- Hao, S., Zhou, Y., & Guo, Y. (2020). A brief survey on semantic segmentation with deep learning. Neurocomputing, 406, 302–321. https://doi.org/10.1016/j.neucom.2019.11.118

- Heidler, K., Mou, L., Baumhoer, C., Dietz, A., & Zhu, X. X. (2021). HED-UNet: Combined segmentation and edge detection for monitoring the Antarctic coastline. IEEE Transactions on Geoscience & Remote Sensing, 60, 1–14. https://doi.org/10.1109/tgrs.2021.3064606

- He, H., Xing, H., Hu, D., & Yu, X. (2019). Novel fuzzy uncertainty modeling for land cover classification based on clustering analysis. Science China Earth Sciences, 62, 438–450. https://doi.org/10.1007/s11430-017-9224-6

- He, K., Zhang, X., Ren, S., & Sun, J. (2016). Deep residual learning for image recognition. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA.

- Hu, J., Shen, L., & Sun, G. (2018). Squeeze-and-excitation networks. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA.

- Hu, K., Zhang, E., Xia, M., Weng, L., & Lin, H. (2023). Mcanet: A multi-branch network for cloud/snow segmentation in high-resolution remote sensing images. Remote Sensing, 15(4), 1055.

- Ji, X., Tang, L., Lu, T., & Cai, C. (2023). Dbenet: Dual-branch ensemble network for sea-land segmentation of remote sensing images. IEEE Transactions on Instrumentation and Measurement, 72, 1–11. https://doi.org/10.1109/tim.2023.3302376

- Ji, Y., Zhang, H., Zhang, Z., & Liu, M. (2021). CNN-based encoder-decoder networks for salient object detection: A comprehensive review and recent advances. Information Sciences, 546, 835–857. https://doi.org/10.1016/j.ins.2020.09.003

- Kotaridis, I., & Lazaridou, M. (2021). Remote sensing image segmentation advances: A meta-analysis. ISPRS Journal of Photogrammetry and Remote Sensing, 173, 309–322. https://doi.org/10.1016/j.isprsjprs.2021.01.020

- Lei, T., Jia, X., Zhang, Y., Liu, S., Meng, H., & Nandi, A. K. (2018). Superpixel-based fast fuzzy C-means clustering for color image segmentation. IEEE Transactions on Fuzzy Systems, 27(9), 1753–1766.

- Li, X., He, Z., Jiang, L., & Ye, Y. (2018). Research on Remote Sensing Dynamic Monitoring of Ecological Resource Environment Based on GIS. Wireless Personal Communications, 102(4), 2941–2953. https://doi.org/10.1007/s11277-018-5317-1

- Li, J., Huang, Z., Wang, Y., & Luo, Q. (2022). Sea and land segmentation of optical remote sensing images based on U-Net optimization. Remote Sensing, 14(17), 4163.

- Li, R., Liu, W., Yang, L., Sun, S., Hu, W., Zhang, F., & Li, W. (2018). DeepUNet: A deep fully convolutional network for pixel-level sea-land segmentation. IEEE Journal of Selected Topics in Applied Earth Observations & Remote Sensing, 11(11), 3954–3962.

- Lin, Q., Chen, X., Chen, C., & Garibaldi, J. M. (2022). A novel quality control algorithm for medical image segmentation based on Fuzzy Uncertainty. IEEE Transactions on Fuzzy Systems, 31, 2532–2544. https://doi.org/10.1109/tfuzz.2022.3228332

- Lin, H., Shi, Z., & Zou, Z. (2017). Maritime semantic labeling of optical remote sensing images with multi-scale fully convolutional network. Remote Sensing, 9(5), 480.

- Liu, X., Deng, Z., & Yang, Y. (2019). Recent progress in semantic image segmentation. Artificial Intelligence Review, 52, 1089–1106. https://doi.org/10.1007/s10462-018-9641-3

- Liu, X., Tian, X., Lin, S., Qu, Y., Ma, L., Yuan, W., Zhang, Z., & Xie, Y. (2021). Learn from concepts: towards the purified memory for few-shot learning. Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence, Montreal-themed Virtual Reality.

- Liu, Y., Wu, Y.-H., Wen, P., Shi, Y., Qiu, Y., & Cheng, M.-M. (2020). Leveraging instance-, image- and dataset-level information for weakly supervised instance segmentation. IEEE Transactions on Pattern Analysis & Machine Intelligence, 44(3), 1415–1428.

- Li, X., Wang, W., Hu, X., & Yang, J. (2019). Selective kernel networks. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA.

- Li, X., Xu, F., Liu, F., Lyu, X., Tong, Y., Xu, Z., & Zhou, J. (2023). A Synergistical Attention Model for Semantic Segmentation of Remote Sensing Images. IEEE Transactions on Geoscience & Remote Sensing, 61, 1–16. https://doi.org/10.1109/tgrs.2023.3243954

- Long, J., Shelhamer, E., & Darrell, T. (2015). Fully convolutional networks for semantic segmentation. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA.

- Ma, X., Xu, J., Chong, Q., Ou, S., Xing, H., & Ni, M. (2023). Fcunet: Refined remote sensing image segmentation method based on a fuzzy deep learning conditional random field network. IET Image Processing, 17, 3616–3629. https://doi.org/10.1049/ipr2.12870

- Minaee, S., Boykov, Y., Porikli, F., Plaza, A., Kehtarnavaz, N., & Terzopoulos, D. (2021). Image segmentation using deep learning: A survey. IEEE Transactions on Pattern Analysis & Machine Intelligence, 44(7), 3523–3542.

- Oktay, O., Schlemper, J., Le Folgoc, L., Lee, M., Heinrich, M., Misawa, K., Mori, K., McDonagh, S., Hammerla, N. Y., & Kainz, B. (2018). Attention u-net: Learning where to look for the pancreas. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA.

- Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9(1), 62–66.

- Qu, T., Xu, J., Chong, Q., Liu, Z., Yan, W., Wang, X., Song, Y., & Ni, M. (2023). Fuzzy neighbourhood neural network for high-resolution remote sensing image segmentation. European Journal of Remote Sensing, 56(1), 2174706.

- Ronneberger, O., Fischer, P., & Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. Medical Image Computing and Computer-Assisted Intervention, Munich, Germany.

- Shamsolmoali, P., Zareapoor, M., Wang, R., Zhou, H., & Yang, J. (2019). A novel deep structure U-Net for sea-land segmentation in remote sensing images. IEEE Journal of Selected Topics in Applied Earth Observations & Remote Sensing, 12(9), 3219–3232.

- Singh, V., Dev, R., Dhar, N. K., Agrawal, P., & Verma, N. K. (2018). Adaptive type-2 fuzzy approach for filtering salt and pepper noise in grayscale images. IEEE Transactions on Fuzzy Systems, 26(5), 3170–3176.

- Sun, W., Chen, C., Liu, W., Yang, G., Meng, X., Wang, L., & Ren, K. (2023). Coastline extraction using remote sensing: A review. GIScience & Remote Sensing, 60(1), 2243671.

- Tian, Z., Zhang, B., Chen, H., & Shen, C. (2022). Instance and panoptic segmentation using conditional convolutions. IEEE Transactions on Pattern Analysis & Machine Intelligence, 45(1), 669–680.

- Tseng, S.-H., & Sun, W.-H. (2022). Sea–Land Segmentation Using HED-UNET for Monitoring Kaohsiung Port. Mathematics, 10(22), 4202.

- Wang, J., Sun, K., Cheng, T., Jiang, B., Deng, C., Zhao, Y., Liu, D., Mu, Y., Tan, M., & Wang, X. (2020). Deep high-resolution representation learning for visual recognition. IEEE Transactions on Pattern Analysis & Machine Intelligence, 43(10), 3349–3364.

- Wu, X., Sahoo, D., & Hoi, S. C. (2020). Recent advances in deep learning for object detection. Neurocomputing, 396, 39–64. https://doi.org/10.1016/j.neucom.2020.01.085

- Zhao, T., Xu, J., Chen, R., & Ma, X. (2021). Remote sensing image segmentation based on the fuzzy deep convolutional neural network. International Journal of Remote Sensing, 42(16), 6264–6283.