Abstract

Introduction

In 2011, a consensus report was produced on technology-enhanced assessment (TEA), its good practices, and future perspectives. Since then, technological advances have enabled innovative practices and tools that have revolutionised how learners are assessed. In this updated consensus, we bring together the potential of technology and the ultimate goals of assessment: learner attainment, faculty development, and improved healthcare practice.

Methods

To inform the report, we drew on scholarly publications on TEA in both health professions education (HPE) and general higher education, feedback from workshops at the 2020 Ottawa Conference, and scholarly publications on assessment technology practices during the Covid-19 pandemic.

Results and conclusion

The group identified areas of consensus that remained unresolved and issues that have arisen in the evolution of TEA. We adopted a three-stage approach (readiness to adopt technology, application of assessment technology, and evaluation/dissemination). The application stage adopted an assessment ‘lifecycle’ approach and targeted five key foci: (1) Advancing authenticity of assessment, (2) Engaging learners with assessment, (3) Enhancing design and scheduling, (4) Optimising assessment delivery and recording learner achievement, and (5) Tracking learner progress and faculty activity, thereby supporting longitudinal learning and continuous assessment.

Background

Since the previous Ottawa Technology Enhanced Assessment (TEA) consensus statement of 2011, technology enhancements have realised previously unimagined possibilities to innovate assessment practices (Amin et al. 2011). Exciting new horizons continue to be revealed as the interface of technology and education develops new approaches to learning, teaching, and assessing, connecting staff, learners, and institutions (Alexander et al. 2019). This connectivity, coupled with increasingly accessible ‘big data,’ presents real opportunities to deliver personalised assessment and timely feedback.

Practice points

Institutions and individual educators should assess their readiness to adopt technology to enhance assessment. This includes ensuring digital equity and the readiness of the programme and of the ‘people, processes and products’ involved in assessment.

Technology should be the enabler of assessment, not its focus and driver, and should be adopted with the active engagement of students and faculty.

Students should be actively involved in the planning and delivery of Technology Enhanced Assessment.

The adoption of new technologies in assessment can be associated with substantial risk, as well as opportunity, and can increase the impact of poor assessment practice.

Technology should maximise authenticity and personalisation of assessment and must interface effectively with Technology Enabled Healthcare.

Technology should enhance the design, scheduling, and delivery of assessment, create opportunities for enhanced feedback, and support learner and educator development.

A ‘lifecycle’ model of assessment and feedback can support assessment system change.

It is essential that the impact of technology-enhanced assessment is evaluated, disseminated, and accelerated within Health Professions Education.

Sharing best practices in technology-enhanced assessment globally is the responsibility of all health professions educators.

Consideration should be given to learning from, and with, low resource settings with a focus on implementation, upskilling colleagues, and reducing gaps in digital equity and accessibility.

However, uncritical adoption of (new) technologies in assessment brings substantial risk, with concerns ranging from assessment security and systems failure to the impact on learners and faculty, including on academic integrity (Andreou et al. 2021). Opportunities for innovation, and the potential for misuse and misapplication of technology (for example, the use of analytics to drive decision making), bring complex academic and ethical dilemmas for learners, faculty, and organisations in settings where education is delivered.

Whilst we might regard technology as a relatively ‘new’ construct, innovations throughout history have challenged education, as we generate ‘rules’ for their application through use and experience while grappling with an increasingly dynamic, accelerating landscape of technology upgrades and innovation. The pace of change in technology continues to outstrip that of educational reform, creating a perpetual sense of ‘catch up.’ This continual challenge is reflected in the triadic interface of ‘politics, pedagogy, and practices’ as educators seek to innovate assessment practices alongside these changes (Shum and Luckin 2019).

Since the Ottawa conference of 2020, global disruption caused by the Covid-19 pandemic has continued to affect every sector of society. In education, the pandemic has affected a cross-generational cohort of learners (from pre-school to continuing professional development), requiring institutions to rapidly adopt and adapt to remote/online education (Aristovnik et al. 2020; Cleland et al. 2020; Gordon et al. 2020; Rahim 2020; Farrokhi et al. 2021; Grafton-Clarke et al. 2021). Assessment has faced particular challenges but has embraced technology to deliver creative, innovative solutions in Health Professions Education (HPE) (Fuller et al. 2020; Hegazy et al. 2021). Whilst new discourses have emerged in TEA, for example, the ‘online OSCE’ (Objective Structured Clinical Examination) (Hytönen et al. 2021; Roman et al. 2022), these may lack definition or alignment with good assessment practice and the purpose of the OSCE (Boursicot et al. 2020, 2021). Other proposed technology solutions, such as learning analytics, show significant potential but currently lack a critical evidence base (Sonderlund et al. 2019; Archer and Prinsloo 2020). Consequently, calls have emerged for greater scholarship to underpin the use of technology to innovate education (Ellaway et al. 2020), and these provide a helpful backdrop against which to explore this refreshed TEA consensus statement.

Looking back to the future? Lessons from the 2011 consensus and a refreshed framework for a new decade

Many of the technology innovations forecast by the previous Ottawa TEA consensus may now be perceived as everyday education practice (including virtual learning environments, computer-based assessment, simulation, and mannequins), with growing trends in the gamification of assessment (Wang et al. 2016; Prochazkova et al. 2019; Tsoy et al. 2019; Tuti et al. 2020; Roman et al. 2022). Furthermore, the rapid spread of mobile technology over the past decade has given learners opportunities to retrieve information in the clinical workplace but has also created tensions between students, clinical teachers, health care professionals, and patients and carers (Scott et al. 2017; Harrison et al. 2019; Folger et al. 2021).

However, it is important to recognise that resource and technology limitations mean that some institutions have been unable to implement teaching, learning, and assessment practices that require new technology. In addition, not all students have access to the latest technology. These factors widen an already established disparity in access to technology-enhanced learning between lower- and higher-resourced environments (Aristovnik et al. 2020). Since the previous consensus, many of the broader ‘hardware’ solutions that have emerged in wider society have radically changed how we can assess learning, e.g. tablet-based marking in OSCEs (Judd et al. 2017; Daniels et al. 2019) and smartphone-assisted assessment in the workplace (Mooney et al. 2014; Joynes and Fuller 2016).

Whilst it is tempting to focus on technology ‘tools,’ some of the key concepts from the previous Ottawa framework remain at the forefront of implementation and research questions, particularly those relating to the use of technology to replicate existing assessment tasks and practices in an online environment (‘transmediation’) or to the efficiency gains of technology-assisted tools that speed up examination and grading (‘prosthesis’). It has been suggested that TEA can provide authentic assessment (Gulikers et al. 2004) of students' competencies, that is, the combinations of knowledge, skills, and behaviours required in real life. However, the risks to authentic assessment through TEA remain high, either through inadvertent assessment of unintended constructs or through deviation from real-life practices that consequently affects learner engagement and behaviours. The 2011 framework also focused on some of the challenges of high-cost, high-fidelity assessment technology practices, which are now particularly challenged by the emergence, and understanding, of digital inequity amongst learners and institutions (UNESCO 2018). Despite ten years of innovation and scholarship, some of the key questions posed, surrounding the authenticity of assessment and its impact on learning, remain at the forefront of our thinking.

Drawing on these lessons, this refreshed framework is informed by broad scoping across assessment, technology, and educational practices in the wider environment in which assessment takes place. It reflects on the established (educational) technology that shaped the 2010–2020 decade (Auxier et al. 2019), on emergent technologies, and on the theories that underpin the practice of active learning.

Informed by feedback from in-person workshops at the 2020 Ottawa Conference and subsequent open consultation and workshops, the consensus framework is divided into three key stages: (1) readiness for technology adoption, (2) its application to the assessment lifecycle (supported by illustrative case studies), and (3) processes for evaluation and dissemination of TEA.

STAGE 1: Assessing readiness to adopt technology to enhance assessment

Technology has been a common solution to many of the recent COVID-related challenges to learning and assessment in HPE, with a wide range of scholarly communications describing individual institutional responses across conference and journal platforms (e.g. Khalaf et al. 2020; Jaap et al. 2021). However, other authors have highlighted that many of the concerns around educational practice that existed pre-COVID are unlikely to have been resolved through these approaches (Ellaway et al. 2020). Moreover, technology may increase the impact and risk of poor assessment practices. Automated processes may ‘at the push of a button’ generate a substantial error (e.g. through inaccurate score reporting), causing long-lasting damage to learner and faculty trust in assessment processes.

As identified in the previous section, uncritical adoption of technology in assessment means that potential benefits could be offset by a range of potential disadvantages (academic, financial, and institutional). Critical to the adoption of technology is the need to ‘assess assessment,’ to ensure that any sub-optimal assessment practices are identified and resolved.

One of the key recommendations of this Ottawa consensus is to ‘put aside the technology’ and investigate four critical areas associated with TEA:

The quality of existing assessment practice

The purpose of introducing TEA

Engagement with digital equity challenges

Institutional capacity to adopt technology

Assessment fit for purpose?

A review of existing assessment processes is central to readiness for adoption of TEA. It should focus not just on assessment ‘tools,’ but on the wider programme of assessment, its theoretical underpinning, and the environments in which assessment may be undertaken (campus, clinical practice, learners’ personal space), including any existing technology used to deliver assessment (e.g. classroom desktop or personal laptop computers, or mobile devices). A broad range of expert, fit-for-purpose specifications and guidance should support this assessment review (Norcini et al. 2018; Boursicot et al. 2021; Heeneman et al. 2021; Torre et al. 2021). ‘Assessing assessment’ at the outset helps not only to ensure that assessment is fit for purpose in its existing format but also to identify potential pitfalls that can be resolved in advance of technology adoption. It also creates opportunities to explore enhancements to assessment practice and to identify new developments, which are central to the next recommendation of this review.

What is the purpose of adopting TEA?

The overriding question ahead of the introduction of TEA should be, as with all educational technology: to what extent will the technology enhance students’ learning and faculty’s ability to support it? (Fuller and Joynes 2015). If the purpose of TEA is only to ‘replace’ a paper version of the same assessment, the argument that the assessment would be ‘enhanced’ by technology is already lost, and the project is likely to be an expensive mistake or, worse, to cause longer-lasting reputational damage if perceived as a ‘vanity project.’ Instead, as educators, we need to return to the principles that guide all well-theorised educational developments, looking at alignment between course content, learning activities, the proposed assessment, and the desired outcomes of the course (Biggs and Tang 2011; Villarroel et al. 2019). Learners construct meaning from what they do to learn. Thus, faculty should intentionally design learning activities and assessment tasks that foster the achievement of learning outcomes, rather than focusing on implementing specific assessment methods.

Using an approach informed by pedagogy will enable teams to identify where investment in technology will be most meaningful to their courses, either as a one-off bespoke element or as a programmatic approach. However, piecemeal investment is rarely sustainable and risks learners and faculty being asked to learn how to use multiple tools in a single area of a curriculum. Where these tools are used for high-stakes assessments, this can impose a significant cognitive load on learners (Davies et al. 2010). When new and promising technology emerges, decisions to introduce it to enhance assessment are rarely immediate, potentially delaying innovation. Of equal concern, however, is a failure to evolve practices beyond merely ‘replacing’ what is, and has always been, done in terms of assessment.

Within considerations of the purpose of TEA in any given institution, there are wider opportunities for educational design that are worth noting. When bringing in either small- or large-scale assessment change, it is possible to leverage benefits from the process in terms of wider course transformation through, for example, the introduction of immersive design and even co-production of assessment, so that both educators and learners are invested and engaged with the result (Holmboe 2017; Cumbo and Selwyn 2022). This addresses the perennial challenge of learners feeling that assessment is something done ‘to’ them rather than ‘with’ them. The introduction of technology in assessment also creates enhanced opportunities to use the data gathered through assessment processes to explore what learning and feedback are taking place. Nevertheless, this should be seen as a positive by-product of the introduction of TEA and not the driving force for its introduction.

Within the broader opportunities that exist in developing TEA processes, we must also be cognisant of the challenges such introduction brings. There is a continued need to avoid embracing the myth that the next generation of learners are so-called ‘digital natives’ who readily embrace new technology in learning and assessment because they use mobile devices fluently in their personal lives (Tapscott 1998; Prensky 2001; Bennett et al. 2008; Jones 2010; Jones et al. 2010). Whilst learners and faculty are increasingly immersed in technology, this immersion lies primarily in their social and personal spaces; previous studies have reported gaps between their use of digital technology in daily life and in learning (Selwyn 2010; Pyörälä et al. 2019). HPE therefore still needs to focus on the support that all learners and educators will need to make TEA effective.

There is also a risk that the introduction of TEA will widen the already large attainment gaps between different groups of learners in an institution or between/across institutions (Rahim 2020; Hegazy et al. 2021). Those who already have access to better IT systems, support, and connectivity (either through their previous education or within HPE) will find TEA easier to adopt than those who face instability or deprivation in basic infrastructure (Aristovnik et al. 2020). While institutions and educators will inevitably be focused on their own local challenges, one of the larger questions posed by the introduction of TEA is how we can steer technology change so that it promotes digital equity.

The importance of digital equity within TEA?

At an individual programme level, TEA needs to ensure digital equity so that all learners have the required skills and comparable access to online technology (Alexander et al. 2019). When implementing online assessments, a strategy that ensures digital equity is therefore needed to limit the technological differences between learners (and faculty). Strategies need to consider the capacity of devices used for learning (i.e. the generation of devices), operating systems (e.g. iOS, Android), and accessories required (e.g. camera, microphone). The strategy ought to include the digital capabilities students need for each online assessment, given that students’ digital capabilities relate to specific tasks and technologies (Bennett et al. 2008). Ensuring digital equity for TEA is a crucial step towards ensuring assessment equivalence for learners within a programme in one institution, or for candidates sitting licensing/professional assessments across institutions nationally (Wilkinson and Nadarajah 2021).

Digital equity can be enhanced by designing online assessments that run on students’ existing devices and within their digital capabilities, and by providing, for students who need to purchase new technology, a device specification that lists devices across a range of capability and cost. The successful growth of BYOD (Bring Your Own Device) assessments relied on the deliberate use of community-based technologies, held by learners, that facilitate co-creation and learner connectivity (Sundgren 2017). A programme involving in-home online assessments needs to consider the capacity and stability of learners’ high-speed internet connectivity.

However, there are broader questions around the ability of TEA to ensure equity between institutions situated in different parts of the globe, and these need explicit discussion to ensure that the recommendations made here do not leave lower-resourced institutions behind. UNESCO noted that there are two dimensions to digital accessibility: technical (infrastructure for connectivity and capacity of devices) and socio-economic (affordability and students’ digital capabilities). Unequal access to technologies, and subsequently to TEA, is linked to, and can exacerbate, existing inequalities of income and resources between and within communities (UNESCO 2018). Even within areas of high internet use, marginalised communities may be missing out on opportunities; for example, in New York, 25% of homes have no internet access (Mozilla 2017). While individual institutions are unlikely to be able to solve these issues alone, it is vital that those who are able to trial different technological solutions share their results when the use of technology in TEA goes well and, even more importantly, when it goes less well, to help other programmes avoid costly mistakes.

Programmes, people, products, and processes?

Based on the experiences of many of the statement’s authors and workshop attendees, considerations regarding readiness to adopt technology are often focused on the technology itself rather than the intended outcome. The risk of this approach is that the technology becomes the focus and driver of assessment enhancement rather than the enabler. This approach may also result in an over-focus on a particular technology provider, product, or solution based on personal preferences and previous experiences. To avoid this, we propose a ‘4P’ approach (Programmes, People, Processes, and Products) as a comprehensive, team-based approach to adopting TEA.

Programmes

The first area of consideration should frame the context and educational need. Assessment blueprints can be further expanded to include current implementation gaps, technological solutions (if any), risk assessment, and resources needed. Risk assessments should include the impact on regulatory compliance locally (challenges of switching to or introducing new formats, approval timelines) and externally (where appropriate, continued compliance with national and/or regulatory requirements), and on resources (upgrading infrastructure, hardware, or software).

People

Change management must always prioritise people, as their buy-in, participation, and feedback are crucial for successful implementation (Er et al. 2019). In the context of enhancing assessments, there are three groups of people to engage with: faculty, professional support staff (e.g. exam office, IT), and students. It is critical that this engagement includes training in any new skill sets the enhancement requires and opportunities to give feedback after pilot developments. Similarly, the engagement of learners and professional support staff should be genuine, involving them at the start of the project rather than the end. Students can be successfully co-opted to partner with, or co-develop, the assessment enhancements (Er et al. 2019, 2020).

Processes

Efficient implementation of assessment depends on internal processes that should be shared across relevant stakeholders. However, when technology is used or added for assessments, process change pitfalls often include piecemeal or direct ‘cut and paste approaches’ (transmediation). Assessment data management can be particularly susceptible to changes in such processes. A review of the impact of the technology enhancement on assessment data governance, security, transfer, and reporting at different levels can help enable data interoperability and reduce the risk of technology failure in assessment.

Products

The selection of the technology product depends on its alignment with the scope of work, its accessibility, and its affordability to the institution. An evaluation against these criteria can lead to a decision to buy, build, or partner with an external organisation to develop the product. In the selection of any product, careful consideration needs to be given to the utilisation of existing institutional technology (e.g. opportunities to better leverage the full capabilities of everyday products, such as MS Office) and to the concept of the ‘modern professional learner’s toolkit,’ with an extensive range of technology already available to support personal and professional learning (Hart 2021).

STAGE 2: Applying technology within the ‘assessment lifecycle’

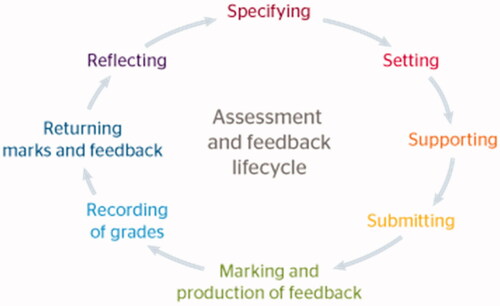

In keeping with other recent Ottawa consensus statements, this paper provides a framework of core principles to guide the enhancement of assessment with technology. The adoption of a broader Higher Education assessment lifecycle model is intentional, maximising the applicability of this consensus to multiple educational contexts. The lifecycle provides a readily identifiable ‘helicopter view’ of all key processes involved in assessment, whatever the stakes and purpose of the assessment. The lifecycle process is iterative, enabling a quality improvement approach that spans assessment design, scheduling, and delivery through to key academic processes surrounding integrity and conduct, moderation, and student progress tracking (JISC 2016). This is illustrated in Figure 1.

Figure 1. The assessment and feedback lifecycle (JISC 2016).

This lifecycle approach reflects the changing focus in HPE from assessment ‘tools’ to systems and programmes of good assessment, particularly the holistic approach seen in programmatic assessment (Heeneman et al. 2021). Within medical education, the lifecycle has also been successfully applied to the design and delivery of complex assessment interventions such as the OSCE. Applied to the context of TEA, the lifecycle provides five key foci and targets for application:

Advancing authenticity of assessment (an overarching principle)

Engaging learners with the assessment

Enhancing design and scheduling

Optimising assessment delivery and recording of learner achievement

Tracking learner progress and faculty activity, supporting longitudinal learning and continuous assessment.

Following feedback from the in-person consensus workshops, and recognising the value of theory-informed practice innovation reports, these principles are supplemented by a series of good practice exemplars drawn from the consensus authorship. Recognising that introducing educational innovation at any time is a challenge (and arguably more so in peri/post-pandemic times), these exemplars aim to serve both as inspiration and as a potential template, providing case studies of existing good practice in TEA as defined by the criteria outlined above. Not all the examples will work in all contexts; nevertheless, it is hoped that readers will find elements of all the case studies and supporting literature that could be adapted to work in their own environments. Following the consensus’ best practice advice in relation to equity, the case studies deliberately do not imply one ‘best’ technology for this purpose. The hardware and software that work in each location depend on existing institutional infrastructure and investment, constraints under which educators work globally, regardless of context.

Principle 1: Advancing assessment authenticity

The design of ‘authentic’ assessment tasks, aligned with the appropriate stage of a curriculum and relevant competencies, is a cornerstone of learner engagement and quality assessment. When technology is used to develop these tasks further (e.g. through the introduction of virtual reality simulation), the potential benefits of enhanced learner engagement and innovation may be offset by the unintentional introduction of different constructs (including the familiarity and navigation requirements of the technology). Educational research continues to examine whether engagement in, and assessment of, ‘authentic’ tasks truly leads to mastery of the task in isolation, or to more integrative application to practice and genuine learner development (Wiliam 2021). The development of TEA in HPE, therefore, needs to critically examine how it bridges the ‘authenticity interface’ of pedagogy, technology, and clinical practice.

Pedagogically, the focus of assessment continues to shift from a sole focus on assessment of learning towards greater representation of assessment for learning, and thus towards ‘sustainable assessment’ (Boud 2000). The goal is not only to grade students’ performance today but to support their future learning and the growth of self-regulated learning skills: the ability to set goals for their learning and to monitor their knowledge, skills, and behaviour. Sustainable assessment emphasises that students should develop the ability to assess their own performance not only in their educational settings but throughout their future working lives (Boud 2000; Boud and Soler 2016).

In TEA, this means placing less focus on the measurement and technical development of assessment tools, and a greater focus on qualitative and personalised assessment that fosters student learning in authentic learning and workplace contexts. The use of alternative learning taxonomies that consider metacognitive function and student engagement as a result of the assessment, alongside assessment tools, can inform the selection and design of authentic tools (Marzano and Kendall 2007; Villarroel et al. 2018). The wider experiences of, and approaches to, assessment in Higher and Continuing Education are an additional useful resource for alternative assessment formats, including open-book and take-away assessments (e.g. Sambell and Brown 2021), and for assessment tools that help teachers track their students' progress whilst allowing learners to develop their own capacity to judge their learning progress and plan improvement (Malecka and Boud 2021).

Technically, TEA should leverage key principles that inform educational technology development, minimising ‘navigation’ issues and placing learners and faculty at the heart of design (Divami 2021). Design should allow the assessment to be opportunistic (e.g. the use of smartphones to support WBA in a wide range of settings), immersive (e.g. allowing learners to create their own self- and group assessment opportunities), and connected (allowing personalisation and connectivity within learning organisations, e.g. smartphone apps or coaching groups to promote reflection by residents) (Konings et al. 2016).

Clinically, there are significant opportunities for TEA to interface effectively with current and emerging approaches to technology-enabled healthcare. Much of the current use of educational technology in HPE assessment focuses on delivery, rather than on aligning with how technology is used to enhance the care of individuals, groups, and populations. We suggest the following simple framework to guide assessment opportunities:

Device augmented care

In many health systems, the advent of paperless healthcare systems and cloud-based ‘shared care’ (e.g. Philips 2021) highlights the rapid upskilling needed for online consultation, communication, and decision making (Greenhalgh et al. 2016; Darnton et al. 2021). The growth of bedside ultrasound as a diagnostic and therapeutic procedure for undergraduates and professionals alike provides targets for TEA development (McMenamin et al. 2021).

Expectations, and health technology literacy, of patients and professionals

The rapid pace of change in care means that the global community of HPE learners and professionals will deliver care using constantly changing guidelines, procedures, and drugs, where timely access to online resources and continuing professional development are essential. Demonstrating ‘when, why, and how’ such resources are accessed as part of patient care should be a critical target for assessment.

Health analytics and risk communication

The use of big data and deep machine learning increasingly contributes to healthcare, guiding algorithms and decision making and contributing to diagnosis (Rajpurkar et al. 2022). Designing assessments that integrate risk communication, digital literacy, and decision making within shared patient-professional consultation models provides opportunities for highly authentic assessment tasks, while acknowledging the ongoing debate about the opportunities and limitations of AI (Principle 5).

Principle 2: Engaging learners with assessment

In an ideal educational programme, learners would always be fully engaged with assessment throughout their programmes of study (not just when they perceive it matters). The headline principle of each good practice case study presented throughout the consensus is that TEA can better facilitate learner engagement, given its opportunities for asynchronicity and connectivity. Active learner engagement is strongly associated with deeper learning (Villarroel et al. 2019) and achievement, and the use of technology (e.g. through virtual learning environments) to measure and monitor activity highlights the importance of both early and sustained learner engagement for assessment outcomes (Korhonen 2021). In this first case study, Webb and Valter demonstrate the power of simple, readily available technology to improve participation and creativity in assessment, develop reusable learning resources, and connect groups of learners:

Assessment for the YouTube generation: a case study on student-created anatomy videos (Alex Webb & Krisztina Valter, Australian National University)

Context

We sought to modernise a 2000-word individual essay assessment on anatomy topics chosen from a list of five options provided by the teacher.

What were we trying to achieve?

We re-designed the assessment task to stimulate student interrogation of the topic and construction of their own knowledge representations utilising commonly available technology. It was also important that the task empowered student choice, made learning fun, allowed students to be creative, and provided an opportunity for students to produce outputs that could easily be shared with peers. In addition, we hoped to make marking more enjoyable in less time!

What we did

Students, working in self-selected groups of five, were tasked to create a 5–7-min entertaining educational video on an anatomy topic of their choice. Each video was graded by the teachers. The videos were then shared with the whole cohort for evaluation and for revision.

Ongoing evaluation of impact

The majority of students enjoyed the activity (93%) and found that it improved their understanding of the topic (90%). The opportunities to research the topic and to consolidate their knowledge were key factors aiding their learning. However, the time required and the need to learn a new technology detracted from their learning. For teachers, marking and providing feedback were more efficient and enjoyable.

Take home message

The process of creating videos provided students with a fun and stimulating opportunity to work as a team to construct their own representations of anatomical concepts to aid their own learning, which can be easily shared with peers.

The case study is a powerful example of learner engagement with the assessment process, demonstrating the best principles in adapting assessment for a generation who ‘prefer to learn by doing’ (Marzano and Kendall 2007) and the principle that learners construct meaning from what they do to learn (Biggs and Tang 2011). Such tasks (which can be adapted to a range of assessment stakes) have the additional benefit of co-produced content, resulting in a bank of resources that can be used to enhance teaching and assessment engagement for the following cohort. From a health humanities perspective, such tasks also enable students to demonstrate their creative capacities through assessment.

From a digital and geographical equity perspective, TEA offers extensive possibilities to share low-stakes assessment formats between educators and learners across borders. Simple, established technology often requires learners only to access or hold a relatively basic smartphone (rather than a computer or laptop), something considered feasible worldwide in 2014 given the prevalence of devices relative to the population (International Telecommunication Union 2014 in Fuller and Joynes 2015). Technology can also engage learners differently with feedback, offering quicker ways to request and record in-the-moment thoughts on what is going well, what can be improved, and how to progress to the next level, through written, audio, or ‘speech to text’ functions now common on mobile devices (Tuti et al. 2020). This opens up the power of technology to positively shape both assessment and learning behaviours, building on established theoretical principles of learner-driven, actionable feedback (Sadler 2010; Boud and Molloy 2013).

Principle 3: Enhancing design and scheduling of assessment

Many technological developments of the last decade have substantially advanced approaches to HPE assessment, particularly when viewed through a quality or validity lens (St-Onge et al. 2017). Software is now routinely used to support the creation of knowledge test item banks (e.g. for single best answer formats) at the individual, institutional, consortium, and national levels. Working remotely within assessment consortia to design and share items offers substantial cost savings and efficiencies, and sophisticated item tagging arguably improves the quality of test construction and its constructive alignment (Kickert et al. 2022). The substantial costs of developing, emending, and banking items are now being challenged by the potential of automatic item generation (Lai et al. 2016).

TEA has been particularly impactful in performance assessment through Work Based Assessment (WBA) formats, creating opportunities to develop more learner-centred, opportunistic cultures of performance assessment in clinical practice. Institutional and national models of programme-level WBA operate on technology-based platforms and portfolios (van der Schaaf et al. 2017; NHS eportfolios 2021), using mobile devices to capture assessment at the point of patient care (Joynes and Fuller 2016; Harrison et al. 2019; Maudsley et al. 2019). Much of this advancement has been facilitated by ‘Bring Your Own Device’ (BYOD) models (Sundgren 2017), but care is needed to avoid inequity of opportunity and to ensure sufficient infrastructure to support these approaches, as highlighted in the Background section.

The growth of assessment management systems that routinely capture individual- and cohort-level learner progress has enabled meaningful use of large data sets to design newer approaches to assessment. Work tracking the longitudinal consequences of borderline performance and underperformance in programmes of assessment (Pell et al. 2012) has supported the implementation of data-driven Sequential Testing formats (Pell et al. 2013; Homer et al. 2018), which increasingly demonstrate impact on assessment quality. Work on adaptive knowledge testing systems shows the emergence of potentially highly personalised applied knowledge testing and feedback (Collares and Cecilio-Fernandes 2019).
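For readers unfamiliar with sequential testing, a minimal sketch of a two-stage decision rule may help. It assumes that all candidates sit a shorter screening sequence, that those scoring comfortably above its cut score (here a hypothetical margin of one standard error of measurement) pass on that basis, and that everyone else sits a further sequence and is judged on the combined result. The function name, parameters, and margin are illustrative assumptions, not the specific rules used by Pell et al. or Homer et al.

```python
from typing import Optional


def sequential_decision(
    screen_score: float,
    screen_cut: float,
    sem: float,
    margin_sems: float = 1.0,
    combined_score: Optional[float] = None,
    combined_cut: Optional[float] = None,
) -> str:
    """Hypothetical two-stage sequential testing rule (illustrative only).

    First sequence: candidates above the screening cut score by a margin,
    expressed in standard errors of measurement (SEM), pass without further testing.
    Second sequence: all other candidates are tested further, and the decision
    rests on their combined score against the combined cut score.
    """
    if screen_score >= screen_cut + margin_sems * sem:
        return "pass (screening sequence only)"
    if combined_score is None or combined_cut is None:
        return "sit second sequence"
    return "pass" if combined_score >= combined_cut else "fail"


# Usage with made-up numbers: screening cut of 60 marks, SEM of 4 marks.
print(sequential_decision(70, 60, 4))  # clearly above 60 + 4, so passes at screening
print(sequential_decision(62, 60, 4))  # within the margin, so sits the second sequence
print(sequential_decision(62, 60, 4, combined_score=118, combined_cut=120))  # fails overall
```

The margin trades testing time against decision accuracy: a wider margin sends more candidates to the second sequence but reduces the chance of passing a truly borderline candidate on the short screen alone.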

Large-scale, global experience of online testing (and the challenges of ‘closed book’ testing, e.g. through the traditional use of Single Best Answer formats) has renewed interest in Open Book test formats and the opportunities technology may bring (Zagury-Orly and Durning 2021). Although Open Book or ‘take home/take away’ tests have not previously been a dominant assessment format within HPE, there is substantial evidence about their use within medical education (Westerkamp et al. 2013; Durning et al. 2016; Johanns et al. 2017) and particularly within wider education (Bengtsson 2019; Spiegel and Nivette 2021), with extensive practical experience of replacing closed book formats (Sambell and Brown 2021). Arguably, this is a highly authentic approach to assessment, challenging faculty to design, and learners to solve, complex problems that require research and synthesis, either individually or in groups (e.g. mirroring the multi-disciplinary teams working in many health cultures, particularly for complex clinical decisions). Technology offers a real lever to redefine the ‘Book’ (e.g. as a mobile device). The potential to monitor and understand how learners develop and use search strategies and collaborate or connect with others to solve problems provides new opportunities for ‘lower stakes’ assessments, as demonstrated in Case Study 2.

Open Book Online Assessments: A case study of institutional implementation across health professions programmes (International Medical University, Malaysia)

Context

The university implemented an online assessment system (OAS) for its health professions programmes in 2018. The OAS was comprehensive in that it allowed for question development, delivery of knowledge-based and OSCE assessments with post-exam analysis, and individual student reports based on learning outcomes. During the early phase of the Covid-19 pandemic, the OAS was modified to be an offsite online test-taking platform for students and staff, as the physical campus space was unable to be used for assessments due to national lockdown restrictions.

What we were trying to achieve

A new challenge emerged from this adaptation: we were unable to invigilate the offsite online assessment because the OAS had no proctoring feature. Given the modifications to teaching and learning activities due to Covid-19 and the risks of non-proctored assessment for student progression, we implemented an online, non-proctored, timed open book assessment using existing assessment formats for low stakes exams. The open book assessments used knowledge application item formats blueprinted against learning outcomes.

Ongoing evaluation of Impact

The first round of offsite online test taking highlighted major concerns about students’ data capacity and device limitations, the ability to provide technical support, and cheating (raised by both faculty and students). This led to further improvements, including additional provisions for students with limited device or data capacity and the introduction of in-house online invigilation (instead of external proctoring software). Subsequent analysis showed that, with these improvements, individual student and cohort assessment performance correlated strongly with pre-adaptation assessments (Er et al. 2020).

Take home message

When shifting to offsite online open book assessments, faculty were initially most concerned about the use of open book formats and their impact on student competencies as students progressed. Instead, the issues that arose related to the technological shift from onsite to offsite examination. It was beneficial to take the opportunity to adapt during a crisis, but it is equally important to refine the assessment further after its first implementation.

The near-wholesale switch to online test delivery in response to the ongoing Covid-19 pandemic has also prompted a fresh look at the resources needed for testing (space, staffing) and at opportunities to improve its timing and deployment. For lower stakes tests, and particularly assessment for learning formats, Webb and Valter demonstrate the opportunities for learner engagement with assessment in non-physical settings. Intelligent use of technology removes the constraints of booking large exam halls and scheduling multiple assessments within, and across, programmes, and there is emerging evidence about the importance of the physical assessment environment for learner anxiety and emotion (Harley et al. 2021). The importance of supporting learners and faculty in the design, preparation, and delivery of such assessments cannot be overstated (Tweed et al. 2021), and wider educational resources are available to signpost (Wood 2020a, 2020b).

Principle 4: Optimising assessment delivery and recording of learner achievement

It will be interesting to look back at 2020–2021 in future iterations of this consensus and review the biggest impacts Covid-19 had on assessment, but at this stage the most visible impacts of TEA have been on assessment delivery. The growth of online knowledge testing has seen effective mass online testing emerge, with technology supporting both test delivery and automated marking. Sophisticated assessment management systems (which also provide item banking) support the automated application of recognised standard setting methods, e.g. Ebel or Angoff. Technology has also enabled the delivery and automated marking of alternative knowledge testing formats, including very short answer (VSA) questions, in which candidates answer in free text and machine learning algorithms undertake most of the marking (Sam et al. 2018).
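As an illustration of the kind of calculation such systems automate, the sketch below computes a basic (unmodified) Angoff cut score. It assumes each judge estimates, for every item, the probability that a borderline (‘minimally competent’) candidate answers correctly; the cut score is the sum of the per-item mean estimates. The panel data and function name are hypothetical, and operational systems typically add refinements (e.g. modified Angoff procedures informed by performance data) that are not shown here.

```python
from statistics import mean


def angoff_cut_score(ratings):
    """Basic Angoff cut score for a knowledge test (illustrative sketch).

    ratings[j][i] is judge j's estimate (0 to 1) of the probability that a
    borderline candidate answers item i correctly. The cut score, in raw
    marks, is the sum over items of the judges' mean estimate for that item.
    """
    n_items = len(ratings[0])
    if any(len(judge) != n_items for judge in ratings):
        raise ValueError("every judge must rate every item")
    item_means = [mean(judge[i] for judge in ratings) for i in range(n_items)]
    return sum(item_means)


# Hypothetical panel: three judges rating a four-item test.
panel = [
    [0.6, 0.8, 0.5, 0.7],
    [0.5, 0.9, 0.4, 0.6],
    [0.7, 0.7, 0.6, 0.8],
]
cut = angoff_cut_score(panel)
print(f"{cut:.2f} / 4 marks ({cut / 4:.0%})")
```

Averaging per item before summing gives the same result as averaging each judge’s total, but keeping per-item means makes it easy to flag items on which judges disagree widely.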

Assessment management systems also support marking and automated standard setting (e.g. using Borderline Methods) in performance assessment, notably OSCEs, e.g. through scoring on mobile devices, which can also capture feedback to learners. The rise of ‘virtual’ OSCEs has demonstrated opportunities to further reconceptualise what can (and cannot) be authentically assessed in a fully virtual format, including the complicated logistics needed to return a high-quality, defensible assessment (Boursicot et al. 2020). Arguably, much of this experience has helped focus attention on the need to align TEA with technology-enhanced healthcare (e.g. the routine use of online/virtual patient-professional consultations). As highlighted in Principle 3, the use of mobile devices to deliver WBA has grown progressively, but the impact of technology on professionalism assessment has been less well disseminated, ranging from fully online systems of 360/multi-source feedback to active research into the power of technology connectivity to instantly provide feedback or immediately raise concerns about a learner’s well-being or behaviour (JISC 2020).
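The borderline regression method, one of the Borderline Methods used for OSCE standard setting, shows what this automation involves at a single station. The sketch assumes each examiner records both a checklist score and a separate global rating for every candidate; it fits an ordinary least-squares line of checklist score on global rating and takes the predicted checklist score at the ‘borderline’ grade as the station cut score. The rating scale, data, and function name are hypothetical.

```python
def borderline_regression_cut(global_ratings, checklist_scores, borderline_grade):
    """Station cut score by the borderline regression method (sketch).

    Fits checklist_score = intercept + slope * global_rating by ordinary
    least squares across all candidates at one station, then returns the
    predicted checklist score at the borderline global grade.
    """
    n = len(global_ratings)
    if n != len(checklist_scores) or n < 2:
        raise ValueError("need paired ratings and scores for at least two candidates")
    mean_x = sum(global_ratings) / n
    mean_y = sum(checklist_scores) / n
    sxx = sum((x - mean_x) ** 2 for x in global_ratings)
    if sxx == 0:
        raise ValueError("global ratings must vary to fit a regression line")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(global_ratings, checklist_scores))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept + slope * borderline_grade


# Hypothetical 5-point global scale: 1 = clear fail, 2 = borderline,
# 3 = clear pass, 4 = good, 5 = excellent; checklist scored out of 25.
ratings = [1, 2, 2, 3, 3, 4, 5]
scores = [7, 10, 12, 14, 15, 18, 20]
print(round(borderline_regression_cut(ratings, scores, borderline_grade=2), 1))
```

Unlike the borderline group method, which uses only candidates rated borderline, the regression uses every candidate at the station, which tends to give a more stable cut score when few candidates receive the borderline grade.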

The use of technology within assessment has also brought powerful benefits to the analysis of assessment quality, post-hoc metrics, and score reporting (Boursicot et al. 2021). Recording cumulative and longitudinal learner achievement through a range of linked ‘artefacts’ (i.e. the performance itself, the ‘score,’ assessor feedback, and candidate reflection) within a technology-based portfolio creates a powerful repository to support truly sustainable assessment. A comprehensive repository of assessment and feedback evidence is central to effective decision-making about learner progression in programmes of assessment and programmatic assessment approaches. The extensive data captured through TEA provide powerful insights into examiner behaviour and decision making within the OSCE, generating further research (using simple technology, such as video) into how technology might further augment OSCE quality, as demonstrated in Case Study 3.

Video-based Examiner Score Comparison and Adjustment (VESCA) as a method to enhance quality assurance of distributed OSCEs (Peter Yeates, Keele Medical School, UK)

Context

Owing to candidate numbers, most institutions run multiple parallel circuits of Objective Structured Clinical Exams (OSCEs), each with a different group of examiners. It is critical to the chain of validity that these different ‘examiner-cohorts’ all judge students to the same standard. Whilst existing literature suggests that examiner-cohort effects may substantially influence candidates’ scores, these effects are challenging to measure because there is usually no crossover between the candidates whom different examiner-cohorts judge (i.e. fully nested designs).

What were we trying to achieve

We aimed to develop a technology-enabled method to overcome the common problem of fully nested examiner-cohorts in OSCEs.

What we did

We developed a three-stage procedure called ‘Video-based Examiner Score Comparison and Adjustment (VESCA).’ This involved (1) videoing a volunteer sample of students on all stations in the OSCE; (2) asking all examiners, in addition to live examining, to score station-specific comparator videos (collectively, all examiner-cohorts scored the same videos); and (3) using the linkage provided by the video-based scores to compare and adjust for examiner-cohort effects using Many Facet Rasch Modelling.
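VESCA itself uses Many Facet Rasch Modelling for the comparison and adjustment step. The sketch below does not implement that model; it swaps in a deliberately simplified mean-linking approach purely to show why shared comparator videos make otherwise fully nested examiner-cohorts comparable: because every cohort scores the same videos, differences in their video means can be attributed to the cohorts rather than to the candidates. The function names, data structures, and additive adjustment are illustrative assumptions.

```python
from statistics import mean


def cohort_effects(video_scores):
    """Estimate each examiner-cohort's leniency (+) or stringency (-).

    video_scores maps cohort -> {video_id: score that cohort gave the video}.
    Only videos scored by every cohort are used, so the cohorts are compared
    on identical performances. Effects are relative to the grand mean.
    """
    shared = set.intersection(*(set(v) for v in video_scores.values()))
    if not shared:
        raise ValueError("cohorts share no comparator videos")
    cohort_means = {c: mean(s[v] for v in shared) for c, s in video_scores.items()}
    grand_mean = mean(cohort_means.values())
    return {c: m - grand_mean for c, m in cohort_means.items()}


def adjust_live_scores(live_scores, effects):
    """Remove each cohort's estimated effect from its live candidates' scores."""
    return {c: [s - effects[c] for s in scores] for c, scores in live_scores.items()}


# Hypothetical two-circuit OSCE station with two shared comparator videos.
videos = {
    "circuit_A": {"v1": 14, "v2": 16},  # cohort that scores the videos higher
    "circuit_B": {"v1": 12, "v2": 14},  # cohort that scores the videos lower
}
effects = cohort_effects(videos)
live = {"circuit_A": [15, 17], "circuit_B": [12, 13]}
print(effects)
print(adjust_live_scores(live, effects))
```

A Rasch-based model additionally accounts for station difficulty, individual examiners, and the measurement scale, which this additive sketch ignores.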

Ongoing evaluation of impact

Camera placement required careful optimisation to provide unobstructed views of students’ performances whilst minimising intrusion. Scoring videos imposed additional time demands on the OSCE, but data supported the equivalence of delayed scoring via the internet, thereby enhancing feasibility. Students generally welcomed procedures to enhance standardisation. Score adjustments were acceptably robust to variations in the number of linking videos and examiner participation rates. Examiner-cohort effects were sometimes substantial, accounting for more than a standard deviation of student scores. Adjusting scores accordingly could change the pass/fail decisions of a substantial minority of students or substantially alter their rank positions.

Take home message

VESCA offers a promising technology-enabled method to help individual institutions, assessment partnerships, or national testing organisations enhance the quality and ensure the fairness of multi-circuit or distributed/national OSCE exams (Yeates et al. 2020, 2021a, 2021b, 2022).

Beyond delivery, analysis, and score reporting, the extensive use of technology has generated substantial commentary about test security, cheating, and candidate proctoring (Roberts et al. 2020; Bali 2021; Selwyn et al. 2021). This commentary highlights the careful balance of five key, overlapping issues, which can best be summarised as:

Trust: In the institution delivering the assessment, within and amongst Faculty and candidates themselves (and the subsequent confidence and trust in future assessments)

Professionalism: the expectations of candidates and faculty within, and around, the delivery of assessments

Active proctoring: compassionate and proportionate responses that do not introduce context irrelevant variance, or additional cognitive load on candidates or Faculty, nor require access to complex browser systems/devices that widen digital inequity

Detection and security: of academic malpractice within an assessment, breaches of (for example) a secure test bank, or inadvertent loss of candidate characteristics/performance data that may be stored by third-party or cloud-based systems

Consequences: both in terms of sanctions when academic malpractice is detected, but also to reinforce the trust of learners, professionals, and patients

Data to date suggest very mixed experiences across testing institutions in relation to security, with some larger scale national assessments reporting no differences in candidate performance between online and offline test delivery (Andreou et al. 2021; Hope, Davids, et al. 2021; Tweed et al. 2021). More widely, technology has substantially enabled the growth of ‘contract cheating,’ which requires a balance of consequences between learners ‘outsourcing’ assessment and those completing the outsourced work (individuals and companies), and which challenges both institutional practices and legislation (Ahsan et al. 2021; Awdry et al. 2021).

Principle 5: Tracking learner progress and faculty activity—supporting longitudinal learning and continuous assessment

Whilst the use of technology has been potentially transformational for assessment processes and practice, the advent of technology-driven ‘decisions’ on learner progress presents significant challenges and concerns. The wider use of ‘artificial intelligence’ (AI) in healthcare offers potential benefits in the early detection of disease or more accurate diagnosis (e.g. Liu et al. 2019; Yan et al. 2020). However, Broussard highlights that all data are ultimately generated by humans, so whatever the shortcomings of ‘AI’ decision making, responsibility for coding and for the use of any outputs remains with humans (Broussard 2018).

In education, AI-based technologies have heralded opportunities to predict learner success or detect those in need of targeted support, whether relating to learning or well-being (JISC 2020). However, there is little high-quality empirical research on the outcomes of such technology for learners and faculty (Sonderlund et al. 2019). Within assessment, commentaries have focused either on machine-based decision making within individual assessments (Hodges 2021) or on the analysis of longitudinal data sets (Hope, Dewar, et al. 2021).

Within wider education, data processing and learner analytics have been used in several ways as part of TEA. Poor design of systems, data processing, or understanding/application of outcomes can lead to failure in ‘profile and predict’ approaches, resulting in unclear outcomes for learners (Foster and Francis 2020), negative impacts on learners (through reinforcing the fear of failure), or the development of ‘gaming behaviours’ (Archer and Prinsloo 2020). Of equal consequence is poor understanding and application of such systems by faculty and institutions (Lawson et al. 2016). In contrast, intelligent systems design and purposeful use of data can ‘gather and guide,’ using data to identify and meaningfully support learners at risk of future failure (Foster and Siddle 2020) or to support self-regulation and better learner choices (Broos et al. 2020), as shown in Case Study 4. A current challenge is that this principle rests on a limited published evidence base, which shows mixed outcomes for education in terms of meaningful impact on learning (Shum and Luckin 2019).

Online formative assessment for continuous learning and assessment preparation, guided by student feedback and learning analytics (Karen Scott, University of Sydney, Australia)

Context

Students in the four-year Sydney Medical Program undertake an 8-week paediatric block. Most of the 70–80 students are at The Children’s Hospital at Westmead, Sydney for the Day 1 orientation, Week 5 teaching, and Week 8 examinations; 8–12 students are at rural hospitals. For the remainder of the block, students undertake clinical placements at the Children’s Hospital, metropolitan and rural hospitals, and community health centres.

What were we trying to achieve?

Paediatrics comprises general paediatrics, paediatric surgery, and over 30 sub-specialties. Across this broad curriculum, students need to prepare for a range of face-to-face and virtual small- and large-group teaching sessions, and to consolidate, review, and extend their learning. Students also need to prepare for end-of-block written and clinical examinations, as well as final-year written examinations.

What we did

A substantial body of online, self-directed formative assessment has been developed: most items comprise unfolding, case-based learning, enabling the application of core knowledge to clinical cases; some integrate core knowledge with practice activities for consolidation and extension of learning. All include extensive automated feedback, some with supplementary web links. Online materials focusing on core knowledge development (primarily short recorded lectures) include formative assessment for self-testing and, through this, support long-term knowledge retention.

Evaluation of impact

Learning analytics and student surveys show most students do most formative assessments—and want more! A recent comparison of learning analytics and assessment results highlights that students’ assessment results generally correspond with the extent to which they use formative assessment.
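The case study does not specify how learning analytics and assessment results were compared. As one simple possibility, a Pearson correlation between the number of formative assessments each student completed and their examination score would quantify how closely the two correspond. The sketch below assumes such paired, per-student data; the variable names and values are hypothetical, and a correlation of this kind cannot by itself show that formative assessment use causes better results.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two paired sequences of length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable has no variance")
    return sxy / sqrt(sxx * syy)


# Hypothetical per-student data: formative assessments completed, exam score (%).
completed = [2, 5, 8, 10, 12, 15]
exam = [55, 60, 62, 70, 68, 78]
print(f"r = {pearson_r(completed, exam):.2f}")
```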

Take-home message

This finding is being communicated throughout the Medical Program to encourage all students (especially those taking a minimalist approach) to make good use of online formative assessment.

When aligned to pedagogic principles and good technology design, TEA can provide substantial benefits in collecting the ‘right data,’ reducing the cognitive load on humans so that they can better understand the data and focus faculty time on learner support. This can include data-driven rapid remediation (Ricketts and Bligh 2011), coaching and mentoring approaches (Malecka and Boud 2021), considered use of behavioural nudges (Damgaard and Nielsen 2018), and specialist case management applied as a preventive intervention ahead of a (potential) end-of-year point of failure (Winston et al. 2012). However, extensive data collection may cause tensions between those responsible for managing studies and learners themselves as independent agents (Tsai et al. 2020). This question of data ownership remains largely unanswered by the literature but is of paramount importance.

Nevertheless, the intelligent application of technology to assessment design can transform the longitudinal engagement of learners themselves, particularly through portfolio tools. Driessen (2017) highlights how the pitfalls of portfolios depend on whether they are seen as bureaucratic tools or as true enablers of learning and assessment. Drawing on lessons from music and arts education, Silveira (2013) frames portfolios as communication tools for learning and feedback, which presents powerful opportunities to actively engage learners and promote better self-regulation, ownership/curation, and longitudinal development (Clarke and Boud 2018). Caution, however, needs to be exercised about requiring reflection (as a component of assessment) in an easily accessible portfolio, given the psychological harm of ‘reflection as confession’ (Hodges 2015). Supporting learners, supervisors, and competence committees in the effective use of portfolio assessment is essential (Oudkerk Pool et al. 2020; Pack et al. 2020).

With the ability to collect and understand extensive data about learners, technology by default also provides a powerful lens on assessors (both in the workplace and in simulated assessment) and on the institution’s responses in driving assessment quality. In a mechanism parallel to supporting learners, these data can drive targeted support, e.g. focused OSCE examiner training (Gormley et al. 2012), or redesign of TEA (e.g. webforms, apps) to facilitate better assessor engagement.

The fourth industrial revolution (McKinsey 2022) highlights the future that educators face, coexisting with devices that record ever larger volumes of (assessment) data and with algorithm-based outcomes that help faculty coordinate learner progression and decision making. Key challenges for educational assessment focus on how we visualise, connect, influence, and research this interconnectivity. Developers, technologists, and educationalists need a shared understanding of the pitfalls and potential of augmented and artificial intelligence, one that engages with the ethical challenges that continue to arise (Selwyn 2021).

STAGE 3: The impact of TEA usage needs evaluating, disseminating, and accelerating within health professions education

Despite (and indeed because of) the global pandemic, 2020 saw a return to rapid growth in innovation reports on technology use in education, including technological solutions to assessment (Fuller et al. 2020). What can we learn from this period of productivity? That crisis leads to creative responses is unsurprising, but assuming that technology-based creativity requires substantial resources overlooks the value of ‘frugal innovation’ (Tran and Ravaud 2016). Central to any learning from this creativity, however, is the need to move beyond ‘description’ and maximise impact through dissemination and meaningful scholarship (Ellaway et al. 2020).

Dissemination of scholarly work on TEA across HPE has largely been dominated by publications from individuals and small groups in North America, Europe, and Australasia. While output from the Asia Pacific region is growing, publications from Africa and South America remain few. For TEA to truly benefit HPE globally, the narratives of design, implementation, and evaluation of technology-enabled assessments need diversity too. Such diversity can be encouraged within HPE (e.g. through thematic calls and innovation reports) and by taking cues for scholarship from the fast-changing realms of instructional design and social media. These approaches mean that scholarly publications on TEA can be built collaboratively and progressively with input from practitioners.

The experimental nature of continually adopting new technologies within education lends itself well to frameworks of short- and long-term, intended and unintended consequences. There is no agreement across HPE on the ‘best’ way to do this, but different frameworks offer suggestions for course- or programme-level evaluations (Cook and Ellaway 2015) or for evaluation of a single resource (Pickering and Joynes 2016). The key point of these frameworks is that evaluation and assessment of impact need to occur in some form, with consideration given to whether a TEA innovation has truly made a difference to learning experiences, behaviours, or processes, or whether some positive elements of the preceding assessment have been lost (William 2021).

Concluding remarks

With wider education continuing to be shaped by the pandemic, what does the future hold? The international higher education information technology association EDUCAUSE proposes three models (‘restore,’ ‘evolve,’ and ‘transform’) to explore how digital technologies may continue to shape education and assessment (EDUCAUSE 2021). The authors point to a series of alternative post-pandemic futures in which issues of affordability, digital security, digital equity, and equitable access to education will be the driving forces that must shape our strategic approaches to TEA.

Whilst predictions of technology trends over the next 10 years may highlight broader changes to work, health, and society (Gartner 2015), more fundamental to HPE is how learning technologies will shift their axes to focus on people: transformative competencies (e.g. creativity and innovation), learner agency, and well-being (OECD 2015). These present interesting possibilities for TEA, in considering how we ‘assess’ learners' ability to engage with these technologies to support their self-regulation, development, contribution, and self-care. Technology futurists point to ‘human only traits’ (e.g. compassion, creativity) becoming increasingly valuable as we learn, and provide healthcare, with more automation (Leonhard 2021). How we ‘assess’ these traits, practice-based ethics, and decision making (and abdication from automated systems) in our co-existence with technology will prove a major challenge for assessment in HPE.

A key theme raised by all contributors throughout the development of this consensus is the need for technology to truly enable ‘better assessment futures.’ In HPE, this translates into a focus on the learning and skills needed to deliver this, and on ensuring that truly transformative TEA considers not just the assessment lifecycle but also the needs of learners, faculty, and patients in the pursuit of authentic assessment using technology.

Acknowledgments

We would like to acknowledge the following people who kindly contributed to the consensus workshops at the Ottawa conference 2020: Christof J. Daetwyler, Drexel University, US; Mary Lawson, Deakin University, Australia; Colin Lumsden, University of Aberdeen, UK. Open access was funded by the Center for University Teaching and Learning, University of Helsinki.

Disclosure statement

The authors report no conflicts of interest. The authors alone are responsible for the content and writing of this article.

Additional information

Notes on contributors

Richard Fuller

Richard Fuller MA FRCP, is the Director of Christie Education, Manchester, UK, and a Consultant Geriatrician/Stroke Physician. They led the consensus group.

Viktoria C. T. Goddard

Viktoria C. T. Goddard PhD, is a sociologist and medical educator and is the Vice Dean of Learning and Scholarship at the University of Liverpool School of Medicine, UK.

Vishna D. Nadarajah

Vishna D. Nadarajah PhD, is the Pro Vice-Chancellor for Education and Institutional Development, International Medical University, Malaysia.

Tamsin Treasure-Jones

Tamsin Treasure-Jones MSc, is an educational technologist and co-founder of Kubify.

Peter Yeates

Peter Yeates MRCP PhD, is a senior lecturer in medical education research and a Consultant Acute and Respiratory Medicine Physician.

Karen Scott

Karen Scott PhD, is an associate professor of medical education and academic lead for evaluation of the Sydney Medical Programme, Faculty of Medicine and Health, University of Sydney, Australia.

Alexandra Webb

Alexandra Webb PhD, is an associate professor of medical education and Deputy Director at the College of Health and Medicine, Australian National University.

Krisztina Valter

Krisztina Valter MD PhD, is an associate professor and Group Leader of the Valter Group, John Curtin School of Medical Research, Australian National University.

Eeva Pyörälä

Eeva Pyörälä PhD, is a senior lecturer in university pedagogy and an adjunct professor of Sociology and University Pedagogy at the Center for University Teaching and Learning, University of Helsinki, Finland.

References

- Ahsan K, Akbar S, Kam B. 2021. Contract cheating in higher education: a systematic literature review and future research agenda. Assess Eval High Educ. 47:523–539.

- Alexander B, Ashford-Rowe K, Barajas-Murphy N, Dobbin G, Knott J, McCormack M, Pomerantz J, Seilhamer R, Weber N. 2019. EDUCAUSE horizon report: 2019 higher education edition. Louisville: EDUCAUSE; [accessed 2022 Jan 21]. https://library.educause.edu/-/media/files/library/2019/4/2019horizonreport.pdf.

- Amin Z, Boulet JR, Cook DA, Ellaway R, Fahal A, Kneebone R, Maley M, Ostergaard D, Ponnamperuma G, Wearn A, et al. 2011. Technology-enabled assessment of health professions education: Consensus statement and recommendations from the Ottawa 2010 conference. Med Teach. 33(5):364–369.

- Andreou V, Peters S, Eggermont J, Wens J, Schoenmakers B. 2021. Remote versus on-site proctored exam: comparing student results in a cross sectional-study. BMC Med Educ. 21:624.

- Archer E, Prinsloo P. 2020. Speaking the unspoken in learning analytics: troubling the defaults. Assess Eval High Educ. 45(6):888–900.

- Aristovnik A, Keržič D, Ravšelj D, Tomaževič N, Umek L. 2020. Impacts of the COVID-19 pandemic on life of higher education students: a global perspective. Sustainability. 12(20):8438.

- Auxier B, Anderson M, Kumar M. 2019. 10 tech-related trends that shaped the decade. Pew Research; [accessed 2022 Jan 21]. https://www.pewresearch.org/fact-tank/2019/12/20/10-tech-related-trends-that-shaped-the-decade/.

- Awdry R, Dawson P, Sutherland-Smith W. 2021. Contract cheating: to legislate or not to legislate – is that the question? Assess Eval High Educ. DOI:10.1080/02602938.2021.1957773

- Bali M. 2021. On proctoring & internalised oppression #Against Surveillance; [accessed 2022 Jan 21]. https://blog.mahabali.me/educational-technology-2/on-proctoring-internalized-oppression-againstsurveillance/.

- Bengtsson L. 2019. Take-home exams in higher education: a systematic review. Educ Sci. 9(4):267.

- Bennett S, Maton K, Kervin L. 2008. The ‘digital natives’ debate: a critical review of the evidence. Br J Educ Technol. 39(5):775–786.

- Biggs J, Tang C. 2011. Teaching for quality learning at university. Maidenhead: Open University Press.

- Boud D. 2000. Sustainable assessment: rethinking assessment for the learning society. Stud Contin Educ. 22(2):151–167.

- Boud D, Molloy E. 2013. Rethinking models of feedback for learning: the challenge of design. Assess Eval High Educ. 38(6):698–712.

- Boud D, Soler R. 2016. Sustainable assessment revisited. Assess Eval High Educ. 41(3):400–413.

- Boursicot K, Kemp S, Ong TH, Wijaya L, Goh SH, Freeman K, Curran I. 2020. Conducting a high-stakes OSCE in a COVID-19 environment. MedEdPublish. 9(1):54.

- Boursicot K, Kemp S, Wilkinson T, Findyartini A, Canning C, Cilliers F, Fuller R. 2021. Performance assessment: consensus statement and recommendations from the 2020 Ottawa conference. Med Teach. 43(1):58–67.

- Broos T, Pinxten M, Delporte M, Verbert K, De Laet T. 2020. Learning dashboards at scale: early warning and overall first year experience. Assess Eval High Educ. 45(6):855–874.

- Broussard M. 2018. Artificial unintelligence. How computers misunderstand the world. Cambridge (MA): The MIT Press.

- Clarke JL, Boud D. 2018. Refocusing portfolio assessment: curating for feedback and portrayal. Innov Educ Teach Int. 55(4):479–486.

- Cleland J, McKimm J, Fuller R, Taylor D, Janczukowicz J, Gibbs T. 2020. Adapting to the impact of COVID-19: sharing stories, sharing practice. Med Teach. 42(7):772–775.

- Collares CF, Cecilio-Fernandes D. 2019. When I say … computerised adaptive testing. Med Educ. 53(2):115–116.

- Cook DA, Ellaway RH. 2015. Evaluating technology-enhanced learning: a comprehensive framework. Med Teach. 37(10):961–970.

- Cumbo B, Selwyn N. 2022. Using participatory design approaches in educational research. Int J Res Method Educ. 45(1):60–72.

- Damgaard MT, Nielsen HS. 2018. Nudging in education. Econ Educ Rev. 64:313–342.

- Daniels VJ, Strand AC, Lai H, Hillier T. 2019. Impact of tablet-scoring and immediate score sheet review on validity and educational impact in an internal medicine residency objective structured clinical exam (OSCE). Med Teach. 41(9):1039–1044.

- Darnton R, Lopez T, Anil M, Ferdinand J, Jenkins M. 2021. Medical students consulting from home: a qualitative evaluation of a tool for maintaining student exposure to patients during lockdown. Med Teach. 43(2):160–167.

- Davies N, Walker T, Joynes V. 2010. Assessment and learning in practice settings (ALPS) – implementing a large scale mobile learning programme. A report; [accessed 2022 Jan 21]. http://www.alps-cetl.ac.uk/documents/ALPS%20IT%20Report.pdf.

- Divami. 2021. Top 5 principles of EdTech design; [accessed 2022 Jan 21]. https://www.divami.com/blog/top-5-principles-of-edtech-design/.

- Driessen E. 2017. Do portfolios have a future? Adv Health Sci Educ Theory Pract. 22(1):221–228.

- Durning SJ, Dong T, Ratcliffe T, Schuwirth L, Artino AR, Boulet JR, Eva K. 2016. Comparing open-book and closed-book examinations: a systematic review. Acad Med. 91(4):583–599.

- EDUCAUSE. 2021. Top IT issues, 2021: emerging from the pandemic; [accessed 2022 Jan 21]. https://er.educause.edu/articles/2020/11/top-it-issues-2021-emerging-from-the-pandemic.

- Ellaway R, Cleland J, Tolsgaard M. 2020. What we learn in time of pestilence. Adv Health Sci Educ Theory Pract. 25(2):259–261.

- Er H, Nadarajah V, Wong P, Mitra N, Ibrahim Z. 2020. Practical considerations for online open book examinations in remote settings. MedEdPublish. 9(1):153.

- Er HM, Nadarajah VD, Hays RB, Yusoff N, Loh KLY. 2019. Lessons learnt from the development and implementation of an online assessment system for medical science programmes. Med Sci Educ. 29(4):1103–1108.

- Farrokhi F, Mohebbi SZ, Farrokhi F, Khami MR. 2021. Impact of COVID-19 on dental education–a scoping review. BMC Med Educ. 21(1):1–12.

- Folger D, Merenmies J, Sjöberg L, Pyörälä E. 2021. Hurdles for adopting mobile learning devices at the outset of clinical courses. BMC Med Educ. 21(1):594.

- Foster C, Francis P. 2020. A systematic review on the deployment and effectiveness of data analytics in higher education to improve student outcomes. Assess Eval High Educ. 45(6):822–841.

- Foster E, Siddle R. 2020. The effectiveness of learning analytics for identifying at-risk students in higher education. Assess Eval High Educ. 45:842–854.

- Fuller R, Joynes V. 2015. Enhancement or replacement? Understanding how legitimised use of mobile learning resources is shaping how healthcare students are learning. J EAHIL. 11(2):7–10.

- Fuller R, Joynes V, Cooper J, Boursicot K, Roberts T. 2020. Could Covid-19 be our ‘there is no alternative’ (TINA) opportunity to enhance assessment? Med Teach. 42(7):781–786.

- Gartner. 2015. Technology and business in 2030; [accessed 2022 Jan 21]. https://www.gartner.com/smarterwithgartner/technology-and-business-in-2030.

- Gordon M, Patricio M, Horne L, Muston A, Alston SR, Pammi M, Thammasitboon S, Park S, Pawlikowska T, Rees EL, et al. 2020. Developments in medical education in response to the COVID-19 pandemic: a rapid BEME systematic review: BEME Guide No. 63. Med Teach. 42(11):1202–1215.

- Gormley GJ, Johnston J, Thomson C, McGlade K. 2012. Awarding global grades in OSCEs: evaluation of a novel eLearning resource for OSCE examiners. Med Teach. 34(7):587–589.

- Grafton-Clarke C, Uraiby H, Gordon M, Clarke N, Rees E, Park S, Pammi M, Alston S, Khamees D, Peterson W, et al. 2021. Pivot to online learning for adapting or continuing workplace-based clinical learning in medical education following the COVID-19 pandemic: a BEME systematic review: BEME Guide No. 70. Med Teach. 23:1–17.

- Greenhalgh T, Vijayaraghavan S, Wherton J, Shaw S, Byrne E, Campbell-Richards D, Bhattacharya S, Hanson P, Ramoutar S, Gutteridge C, et al. 2016. Virtual online consultations: advantages and limitations (VOCAL) study. BMJ Open. 6(1):e009388.

- Gulikers JTM, Bastiaens TJ, Kirschner PA. 2004. A five-dimensional framework for authentic assessment. ETR&D. 52(3):67–86.

- Harley JM, Lou NM, Lui Y, Cutumisu M, Daniels LM, Leighton JP, Nadon L. 2021. University students’ negative emotions in a computer-based examination: the roles of trait test-emotion, prior test taking methods and gender. Assess Eval High Educ. 46(6):956–972.

- Harrison A, Phelps M, Nerminathan A, Alexander S, Scott KM. 2019. Factors underlying students’ decisions to use mobile devices in clinical settings. Br J Educ Technol. 50(2):531–545.

- Hart J. 2021. Top tools for learning 2021; [accessed 2022 Jan 21]. https://www.toptools4learning.com.

- Heeneman S, de Jong LH, Dawson LJ, Wilkinson TJ, Ryan A, Tait GR, Rice N, Torre D, Freeman A, van der Vleuten CPM. 2021. Ottawa 2020 consensus statement for programmatic assessment – 1. Agreement on the principles. Med Teach. 43(10):1139–1148.

- Hegazy NN, Elrafie NM, Saleh N, Youssry I, Ahmed SA, Yosef M, Ahmed MM, Rashwan NI, Abdel Malak HW, Girgis SA, et al. 2021. Consensus meeting report “technology enhanced assessment” in Covid-19 Time, MENA regional experiences and reflections. Adv Med Educ Pract. 12(12):1449–1456.

- Hodges B. 2015. Sea monsters & whirlpools: navigating between examination and reflection in medical education. Med Teach. 37(3):261–266.

- Hodges B. 2021. Performance-based assessment in the 21st century: when the examiner is a machine. Perspect Med Educ. 10(1):3–5.

- Holmboe E. 2017. Work-based assessment and co-production in postgraduate medical training. GMS J Med Educ. 34(5):Doc58.

- Homer M, Fuller R, Pell G. 2018. The benefits of sequential testing: Improved diagnostic accuracy and better outcomes for failing students. Med Teach. 40(3):275–284.

- Hope D, Davids V, Bollington L, Maxwell S. 2021. Candidates undertaking (invigilated) assessment online show no differences in performance compared to those undertaking assessment offline. Med Teach. 43(6):646–650.

- Hope D, Dewar A, Hothersall EJ, Leach JP, Cameron I, Jaap A. 2021. Measuring differential attainment: a longitudinal analysis of assessment results for 1512 medical students at four Scottish medical schools. BMJ Open. 11(9):e046056.

- Hytönen H, Näpänkangas R, Karaharju-Suvanto T, Eväsoja T, Kallio A, Kokkari A, Tuononen T, Lahti S. 2021. Modification of national OSCE due to COVID-19 – implementation and students' feedback. Eur J Dent Educ. 25(4):679–688.

- International Telecommunication Union. 2014. Measuring the Information Society. Cited in Fuller R, Joynes V. 2015. Enhancement or replacement? Understanding how legitimised use of mobile learning resources is shaping how healthcare students are learning. J EAHIL. 11(2):7–10.

- Jaap A, Dewar A, Duncan C, Fairhurst K, Hope D, Kluth D. 2021. Effect of remote online exam delivery on student experience and performance in applied knowledge tests. BMC Med Educ. 21(1):86.

- JISC. 2016. The assessment and feedback lifecycle; [accessed 2022 Jan 21]. https://www.jisc.ac.uk/guides/transforming-assessment-and-feedback/lifecycle.

- JISC. 2020. Developing mental health and well being technologies and analytics; [accessed 2022 Jan 21]. https://www.jisc.ac.uk/rd/projects/developing-mental-health-and-wellbeing-technologies-and-analytics.

- Johanns B, Dinkens A, Moore J. 2017. A systematic review comparing open-book and closed-book examinations: evaluating effects on development of critical thinking skills. Nurse Educ Pract. 27:89–94.

- Jones C, Ramanau R, Cross S, Healing G. 2010. Net generation or digital natives: is there a distinct new generation entering university? Comput Educ. 54(3):722–732.

- Jones C. 2010. A new generation of learners? The net generation and digital natives. Learn Media Technol. 35(4):365–368.

- Joynes V, Fuller R. 2016. Legitimisation, personalisation and maturation: using the experiences of a compulsory mobile curriculum to reconceptualise mobile learning. Med Teach. 38(6):621–627.

- Judd T, Ryan A, Flynn E, McColl G. 2017. If at first you don't succeed… adoption of iPad marking for high-stakes assessments. Perspect Med Educ. 6(5):356–361.

- Khalaf K, El-Kishawi MA, Al Kawas S. 2020. Introducing a comprehensive high-stake online exam to final-year dental students during the COVID-19 pandemic and evaluation of its effectiveness. Med Educ Online. 25:1.

- Kickert R, Meeuwisse M, Stegers-Jager KM, Prinzie P, Arends LR. 2022. Curricular fit perspective on motivation in higher education. High Educ. 83(4):729–745.

- Konings KD, van Berlo J, Koopmans R, Hoogland H, Spanjers IAE, ten Haaf JA, van der Vleuten CPM, van Merrienboer JJG. 2016. Using a smartphone app and coaching group sessions to promote residents’ reflection in the workplace. Acad Med. 91(3):365–370.