ABSTRACT

The recent advances in educational technology have enabled the development of solutions that collect and analyse data from learning scenarios to inform decision-making processes. Research fields such as Learning Analytics (LA) and Artificial Intelligence (AI) aim to support teaching and learning through such solutions. However, their adoption in authentic settings is still limited due, among other reasons, to neglect of the stakeholders' needs, a lack of pedagogical contextualisation, and low trust in new technologies. Thus, the research fields of Human-Centered LA (HCLA) and Human-Centered AI (HCAI) have recently emerged, aiming to understand how stakeholders can be actively involved in the creation of such proposals. This paper presents a systematic literature review of 47 empirical research studies on the topic. The results show that more than two-thirds of the papers involve stakeholders in the design of the solutions, while fewer papers involve them during ideation and prototyping, and the majority do not report any evaluation. Interestingly, while multiple techniques were used to collect data (mainly interviews, focus groups and workshops), few papers explicitly mentioned the adoption of existing HC design guidelines. Further evidence is needed to show the real impact of HCLA/HCAI approaches (e.g., in terms of user satisfaction and adoption).

1. Introduction

Current digital technologies enable the collection of fine-grained data on teaching and learning, which can potentially inform and recommend actions to a variety of stakeholders, including students, teachers, curriculum designers, and managers. Given this context, the Learning Analytics (LA) field has contributed to understanding and improving learning and its context, while Artificial Intelligence in Education (AIED) has focussed especially on simulating and predicting learning processes and behaviours. Both fields use the data generated from these systems and often apply similar analysis techniques such as machine learning (Rienties, Køhler Simonsen, and Herodotou Citation2020). For example, multiple technologies developed within the LA and AIED context have been successfully applied in educational settings to support task automation (Tsai et al. Citation2021), personalise learning (Chakraborty et al. Citation2021), and improve teacher awareness (Matcha et al. Citation2020). Consequently, during the last few years, LA and AIED have gained much attention.

Despite the rising interest in LA and AIED solutions (Salas-Pilco, Xiao, and Hu Citation2022), the widespread adoption of these technologies among stakeholders remains limited (Sadallah et al. Citation2022). Among the multiple reasons behind this lack of adoption (e.g., costs, technical requirements, or institutional policies), several authors have pointed to the lack of contextual grounding of these solutions, which overlooks the pedagogical background and the stakeholders' actual needs (Dimitriadis, Martínez-Maldonado, and Wiley Citation2021; Sarmiento and Wise Citation2022). To overcome this limitation, many researchers emphasise the importance of adopting human-centered approaches in which stakeholders are actively involved in the design, development and evaluation of LA/AIED solutions (Buckingham Shum, Ferguson, and Martinez-Maldonado Citation2019; Rodríguez-Triana et al. Citation2018). Such involvement helps tailor the solutions to contextual requirements and needs and raises the reliability and trustworthiness of the systems (Martinez-Maldonado Citation2023), thereby removing barriers to their adoption. To address these issues, the subfields of Human-Centered LA (HCLA) and Human-Centered AI in Education (HCAI for brevity) have emerged to understand, inform and promote the design and development of human-centered solutions within their corresponding communities.

Nowadays, these two subfields are reaching a certain maturity, which has triggered the appearance of literature reviews exploring different aspects of HCLA/HCAI. Li and Gu (Citation2023) carried out a systematic literature review of ethical design approaches and risks of HCAI; this review, together with a Delphi study, led to the identification of eight potential HCAI risk indicators. Additionally, Sarmiento and Wise (Citation2022) systematically explored stakeholders' involvement in the co-design and participatory design of LA proposals, two core approaches in human-centered design. More concretely, their review identifies the stakeholders involved in the different design phases, as well as the tools and techniques used for that purpose. Both systematic reviews shed light on participatory approaches for AI and LA. However, to the best of our knowledge, Li and Gu (Citation2023) did not explore how stakeholders are involved in the design and development of HCAI solutions, while Sarmiento and Wise (Citation2022) focussed on participatory design and co-design, leaving aside other terms used in the community (e.g., user-centered or human-centered design). Moreover, neither review offers a systematic analysis of the specific methods and tools used to involve stakeholders and collect data from them while implementing HC approaches. Nor do they consider the learning theories applied to inform HCLA/HCAI research proposals or the evaluation approaches employed in HCLA and HCAI contributions.

We deem that the increasing number of research studies on HCLA and HCAI published in recent years presents a timely opportunity to obtain a global understanding of the HCLA/HCAI approaches employed in the literature. Such an analysis can help identify current research gaps and potential research lines within the field. Thus, this paper reports a systematic literature review on HCLA/HCAI guided by the overarching research question “What is the current landscape of HCLA/HCAI empirical evidence?”, which has been further subdivided into the following questions:

| RQ | Research question |
|---|---|
| RQ1 | Which learning theories/pedagogical approaches are considered in the design of HCLA and HCAI solutions? |
| RQ2 | What are the main aims of the proposed HCLA and HCAI solutions? |
| RQ3 | Which stakeholders are involved in the design and development of HCLA and HCAI solutions, and how? |
| RQ4 | Which methods and tools are used to design and develop HCLA and HCAI solutions? |
| RQ5 | How are the HCLA and HCAI solutions being evaluated? |
| RQ6 | What are the pros and cons of using HC approaches? |

The rest of the paper is structured as follows. Section 2 describes the theoretical background behind the use of human-centered approaches for LA and AI. Section 3 presents the methodology followed to extract the 47 reviewed papers as well as the analysis approach. Section 4 reports the results of the review. Section 5 discusses the results according to the RQs and reflects on potential theoretical and practical implications for researchers and practitioners. Finally, Section 6 outlines the conclusions and limitations of this work.

2. Theoretical background

Recently, there has been growing interest in human-centered design (HCD) within the Technology-Enhanced Learning field. HCD describes approaches that position stakeholders and designers as collaborators in the creation of contextualised products (Zachry and Spyridakis Citation2016). According to Rouse (Citation2007), HCD should enhance human capabilities, overcome human limitations and foster technology acceptance. Dimitriadis, Martínez-Maldonado, and Wiley (Citation2021) highlighted that, when employing HCD processes, researchers should guarantee a) the “agentic positioning” of the stakeholders, b) the consideration of the learning design cycle, and c) pedagogical grounding in educational theories to guide the design of the desired solutions.

For decades, different terminologies have been used in the literature to describe human-centered approaches. All these approaches agree on the stakeholders' involvement during the design process to understand their perspectives, needs and context. However, while these terms are sometimes used interchangeably, they have different implications in practice (Buckingham Shum, Ferguson, and Martinez-Maldonado Citation2019):

User-centered design: This approach focuses on the role of stakeholders as ‘users’ and refers to a design approach that concentrates on the usability of the design given the needs and experiences of users (Abras, Maloney-Krichmar, and Preece Citation2004). In this approach, the roles of the researcher, the designer and the user are distinct; the user is not really part of the design team (Sanders and Stappers Citation2008).

Participatory design: This approach examines the users' needs and requirements by empowering them to take an active role in shaping the products, services or systems (Bødker et al. Citation2022). Compared to user-centered design, in participatory design the roles of the designer and the researcher are not independent, and the user becomes a critical component of the design process, yet without participating in decision-making (Könings, Seidel, and van Merriënboer Citation2014; Sanders and Stappers Citation2008).

Co-design: In this approach, designers and stakeholders (without design experience) collaborate during the design and development of a product, emphasising collaboration and shared decision-making (Sanders and Stappers Citation2008). In co-design, each participant is considered an expert in their own experiences; thus, they draw upon their practical, experiential, and conceptual knowledge in the design process (Cavignaux-Bros and Cristol Citation2020).

The fields of HCLA and HCAI emerged to create actionable LA/AI solutions that attend to the stakeholders' needs and overcome existing barriers, e.g., by supporting the interpretation of visualised data, which may be challenging depending on the stakeholders' data literacy (Dimitriadis, Martínez-Maldonado, and Wiley Citation2021). HCLA refers to the use of LA to support the needs and goals of learners, instructors, and other stakeholders in the education process, in such a way that the LA solutions suit the users and not the other way around (Buckingham Shum, Ferguson, and Martinez-Maldonado Citation2019). HCLA examines data related to student learning, such as engagement with course materials, performance on assessments, and interaction with peers and instructors. The key shift lies in the commitment to stakeholders as co-designers, who are expected to participate in the design of LA solutions regardless of their level of technical expertise or prior experience with analytics tools. This involves designing dashboards and visualisations that are easy to understand and interpret, as well as providing support and training to help learners and instructors make sense of the data.

Alongside HCLA, there is a growing body of AI work that advocates HCD. AI has been applied extensively across sectors such as healthcare, medicine, finance, and security (Salas-Pilco, Xiao, and Hu Citation2022). In education, AI is being used in intelligent tutoring systems and virtual assistants, automated scoring of assignments, adaptive learning systems and predictive analytics. Applying HCD, HCAI supports the design and development of AI proposals that prioritise the needs, values, and goals of humans (Shneiderman Citation2020).

Both LA and AIED researchers have proposed conceptual tools such as processes (Knight et al. Citation2015; Martinez-Maldonado et al. Citation2022), strategies (Dollinger and Lodge Citation2018), and frameworks (Chatti et al. Citation2020; Martinez-Maldonado et al. Citation2015) for HC design. However, it is not clear how HCLA and HCAI approaches are put into practice.

3. Methodology

The literature review followed the guidelines proposed by Kitchenham and Charters (Citation2007) to answer the aforementioned research questions. Although these guidelines were initially conceived for the software engineering field, they are commonly used in other research fields, including LA (e.g., Matcha et al. Citation2020) and AIED (e.g., Hooshyar, Yousefi, and Lim Citation2019). These guidelines structure the review process in three phases: planning, conducting, and reporting. This section summarises the planning and conducting phases, and the following sections report and discuss the results.

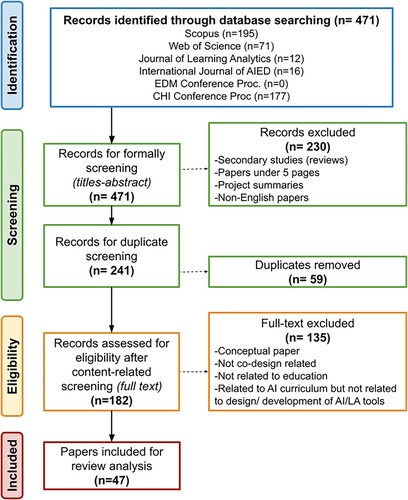

The decisions taken during the planning phase include the selection of the databases, the search string, the search location, the time window, and the inclusion and exclusion criteria (see Table 1). The databases included (a) digital libraries that typically index articles related to this area (i.e., Scopus, which also includes articles listed in IEEE Xplore, and Web of Science, which also indexes articles listed in ScienceDirect); (b) journals with a specific focus on LA/AIED (i.e., the Journal of Learning Analytics and the International Journal of Artificial Intelligence in Education); and (c) conferences with a specific focus on LA/AIED (namely, LAK, AIED, CHI and EDM).

Table 1. Decisions taken during the SLR planning phase.

The query terms included the main HCD approaches introduced in the previous section plus ‘centred design’, to detect similar terms such as user-, teacher- or student-centred design. Moreover, we included the terms representing the domains (i.e., LA and AIED). As a result, we used the following query: [‘human-centered design’ OR ‘centred design’ OR ‘participatory design’ OR ‘co-design’] AND [‘learning analytics’ OR (‘artificial intelligence’ AND ‘education’)]. The search was performed in December 2022 without imposing any time constraints. Whenever possible, we restricted the query to the paper title, abstract and keywords, obtaining a total of 294 publications (see ). Since each search engine applied the query slightly differently, we cross-checked that the title, abstract and keywords of each paper satisfied the query, to guarantee the same filtering criteria across sources.
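The cross-check described above amounts to re-evaluating the boolean search string locally. The following is a minimal sketch, assuming a plain case-insensitive substring match over the concatenated title, abstract and keywords; the function names `contains` and `satisfies_query` are hypothetical, and real search engines additionally tokenise, stem and may normalise spelling variants.

```python
# Hypothetical local re-check of the review's search string, assuming plain
# case-insensitive substring matching (search engines may behave differently).
HCD_TERMS = ("human-centered design", "centred design", "participatory design", "co-design")


def contains(text: str, term: str) -> bool:
    """True if `term` occurs in `text`, ignoring case."""
    return term.lower() in text.lower()


def satisfies_query(title: str, abstract: str, keywords: list[str]) -> bool:
    """Evaluate [HCD term] AND [LA OR (AI AND education)] on title, abstract and keywords."""
    text = " ".join([title, abstract, *keywords])
    has_hcd_term = any(contains(text, term) for term in HCD_TERMS)
    has_domain = contains(text, "learning analytics") or (
        contains(text, "artificial intelligence") and contains(text, "education")
    )
    return has_hcd_term and has_domain


print(satisfies_query("Co-design of learning analytics dashboards", "", []))  # True
print(satisfies_query("Co-design of museum apps", "An artificial intelligence guide", []))  # False
```

Note that, under literal substring matching, ‘centred design’ does not match the spelling "centered design" (e.g., "user-centered design"), so a local re-check like this one depends on how spelling variants are handled.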

Publications were then screened against the inclusion and exclusion criteria by manually reading their title, abstract and keywords. During this process, we selected primary studies in English describing complete empirical experiences where LA/AI solutions were designed using HC approaches. Moreover, we excluded papers under 5 pages, project summaries, and duplicate papers. From the 294 initial publications, 47 were included in this literature review (see ). The full texts of the selected publications were then read.

Content analysis was conducted using etic categories, i.e., predetermined categories established prior to data analysis (Given Citation2012), and the coding scheme was developed based on the established RQs. To ensure the reliability of our findings, we implemented the following strategies (Guba Citation1981): (a) peer debriefing, involving the research team in jointly reviewing the coding scheme; and (b) triangulation among researchers regarding the interpretation of the data.

The literature review involved six researchers (see author list) who actively participated in filtering and analysing different primary studies. To assess the inter-researcher consistency of the coding scheme, a randomly chosen paper was coded by all reviewers prior to the data analysis phase (Kitchenham and Charters Citation2007). Once a common understanding of the identified categories was reached, the remaining papers were distributed among the reviewers for individual coding. Whether to include or exclude dubious cases was decided through joint discussion and an in-depth reading of the paper by a second researcher. Finally, the different topics under review were allocated to three researchers, who cross-checked the coding of each paper and discussed any discrepancies found with the whole team. An overview of the final codification is available in the Appendix.

4. Results

This section provides an overview of the reviewed papers and summarises the results of the literature review in relation to the aforementioned research questions.

4.1. Sample overview

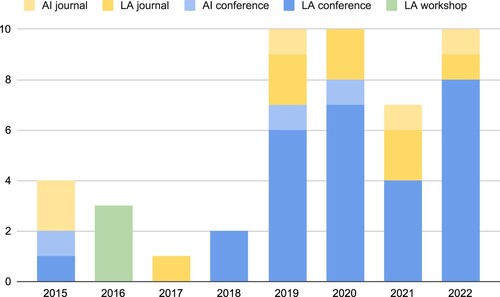

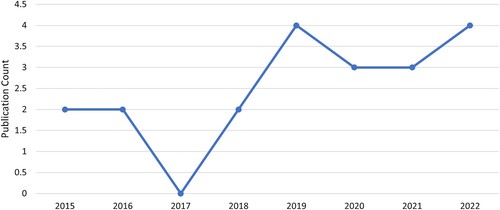

Regarding publication date, while the first HCLA (Knight et al. Citation2015) and HCAI (Long, Aman, and Aleven Citation2015; Santos and Boticario Citation2015a, Citation2015b) empirical studies date back to 2015, most papers were published from 2019 onwards (37, 78.72%). depicts the reviewed articles, including the publication type and year. In terms of research domain, few papers belong to the artificial intelligence or data mining domains (8, 17.02%), while most (39, 82.98%) pertain to the learning analytics field.

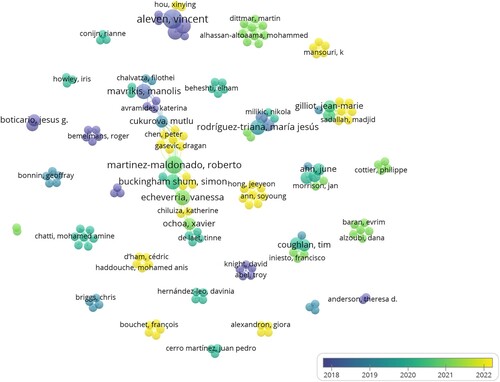

We further analysed the relationships among the authors of the papers. In , each bubble represents a different author (168 in total), the size of the bubble represents the number of papers by each author (between 1 and 5), and the clusters reflect co-authorship relationships. Some collaborations yielded multiple papers by the same group of authors (e.g., Aleven and Martínez-Maldonado authored 5 and 4 papers, respectively), indicating their prominent role in the field of HCLA/HCAI. Nevertheless, many other teams also contributed to the HCLA/HCAI literature, showing that a wider community is adopting human-centered approaches.

Regarding publication type, 31 papers (65.96%) were published in conference proceedings, 13 (27.66%) in scientific journals and 3 (6.38%) in workshop proceedings. Among the conference papers, 11 (35.48%) were published in GGS- and/or CORE-indexed conferences, with LAK and EC-TEL being the most frequent venues (9 and 7 papers, respectively). Among the journal papers, 5 (38.46%) were published in journals with an impact factor according to the Web of Science. The Journal of Learning Analytics was the most popular journal.

Among the types of HCD approaches described in Section 2, the most frequent terminology was co-design (15, 31.91%), followed by user-centered design (11, 23.40%) and participatory design (8, 17.02%). Interestingly, many papers used several of these terms interchangeably within the same study (10, 21.28%), sometimes as synonyms (e.g., Sadallah et al. Citation2022).

4.2. Learning theories and design aspects

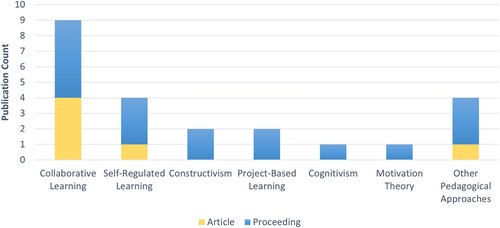

Out of the 47 papers retrieved, 27 (57.45%) did not mention which learning theories or pedagogical approaches characterised the learning context and/or informed their LA solutions. depicts the identified learning theories. Some papers included more than one learning theory (e.g., Kilińska, Kobbelgaard, and Ryberg Citation2019).

The largest group of the 20 papers that referred to theories used Collaborative Learning and its variants (9, 45.00%), which entail working in pairs or groups to discuss concepts or seek solutions to problems, as a theoretical basis for the design of the LA/AI solutions. For instance, Hoffmann et al. (Citation2022) discuss the use of Computer Supported Collaborative Learning, while Martinez-Maldonado et al. (Citation2022) refer to Collocated Collaborative Learning to inform the contextual design of their LA proposal. Four papers (20.00%) ground their work in Self-Regulated Learning, which fosters learners' self-reliance as they progress through their learning process (Zimmerman Citation2000). Additionally, other papers draw upon Constructivism (2, 10.00%), as in Huh et al. (Citation2022), who use Vygotsky's Sociocultural Theory of Cognitive Development, and Project-Based Learning (2, 10.00%). Single mentions include the Cognitive Theory of Multimedia Learning (Revano and Garcia Citation2021) and Motivation Theory (Long, Aman, and Aleven Citation2015).

In some cases (11, 55.00%), the authors also considered specific aspects of the learning design to further contextualise their solutions. Examples include the actors/participants, the resources/objects, the learning objectives/goals, the social level (individual, group, classroom or institutional), the learning tasks and their types, time-related aspects, and teacher expectations about the students' work. Building on the particularities of the learning design, the researchers adopted various pedagogical approaches, such as Adaptive Learning (e.g., Holstein, McLaren, and Aleven Citation2019a), Open-Ended Learning (e.g., Beheshti et al. Citation2020), Blended Learning (e.g., Aleven et al. Citation2016) or Active Learning (e.g., Alzoubi et al. Citation2021).

presents the distribution of theories employed in the included papers. As observed in the graph, from 2018 onwards more of the encountered papers mention their pedagogical underpinning. Given the small numbers involved, this result merely suggests a growing awareness of the importance of learning theories and learning design in grounding LA/AI proposals.

4.3. Purposes of the HCLA/HCAI solutions

Inspired by the reference model proposed by Chatti et al. (Citation2013) to characterise LA solutions, we analysed the paper contributions according to their type (what?), target users (who?), and purpose (why?).

What? Most of the studies propose standalone or embedded LA dashboards (21, 44.68%), followed by the design of entire LA tools (13, 27.66%) and the identification of relevant indicators for different purposes (6, 12.77%). Other contributions include the design of AI agents/systems (Holstein, McLaren, and Aleven Citation2019a; Huh et al. Citation2022), recommender systems (Santos and Boticario Citation2015b), and virtual assistants (Lister et al. Citation2021). Almost half of the analysed papers (22, 46.81%) do not report the learning platform where the proposed HCLA/HCAI contribution will be implemented. Among those that do, 10 refer to web-based applications, 6 to Learning Management Systems, 3 to Intelligent Tutoring Systems and 3 to mobile applications.

Who? The final users of the targeted solutions are teachers only (19, 40.43%), students only (18, 38.30%), or both (4, 8.51%), across all educational levels (i.e., primary, secondary and tertiary). The remaining papers target other stakeholders or a combination of teachers with other stakeholders (5, 10.64%), including, for example, educational managers (Eradze, Rodriguez Triana, and Laanpere Citation2017), parents (Huh et al. Citation2022) or museum visitors (Beheshti et al. Citation2020). Further details about the target users are provided in the following subsection.

Why? To better understand the human-LA/AI tandems, we categorised the HCLA/HCAI contributions based on the framework proposed by Soller et al. (Citation2005). This framework identifies three types of tools: mirroring tools, which only display indicators; metacognitive tools, which compare the desired and the current values of the selected indicators; and guiding tools, which offer advice based on an interpretation of the indicators. In addition, we included the category of “intelligent systems” to refer to contributions where decision-making is done automatically. The results revealed that, although mirroring tools are the most frequent type of HCLA solution (e.g., Ahn et al. Citation2019), there are also guiding tools (e.g., Ouatiq et al. Citation2022) and intelligent systems (e.g., Long, Aman, and Aleven Citation2015).
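To make the four categories concrete, the following is a minimal sketch of how a coder might map a contribution's observed behaviour to one category. The `ToolType` enum, the `classify` function and its boolean flags are hypothetical, and the precedence rule (assign the most "active" role a contribution plays) is an assumption for illustration, not the coding procedure reported in this review.

```python
from enum import Enum


class ToolType(Enum):
    """Soller et al.'s three tool types plus the 'intelligent systems' category."""
    MIRRORING = "mirroring tool"          # only displays indicators
    METACOGNITIVE = "metacognitive tool"  # compares desired vs current indicator values
    GUIDING = "guiding tool"              # offers advice based on interpreting indicators
    INTELLIGENT = "intelligent system"    # decision-making is done automatically


def classify(displays_indicators: bool, compares_with_desired: bool,
             offers_advice: bool, decides_automatically: bool) -> ToolType:
    """Hypothetical rule: pick the most 'active' role a contribution plays."""
    if decides_automatically:
        return ToolType.INTELLIGENT
    if offers_advice:
        return ToolType.GUIDING
    if compares_with_desired:
        return ToolType.METACOGNITIVE
    if displays_indicators:
        return ToolType.MIRRORING
    raise ValueError("contribution does not display, compare, advise or decide")


print(classify(True, False, False, False).value)  # mirroring tool
print(classify(True, True, True, False).value)    # guiding tool
```

A dashboard that both compares indicators with a target and gives advice is labelled a guiding tool under this assumed ordering; a different ordering, or multi-label coding, would be equally defensible.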

4.4. Stakeholders and involvement in HC processes

The active positioning of stakeholders is one of the key aspects of HCD processes (Dimitriadis, Martínez-Maldonado, and Wiley Citation2021). The analysed data showed that teachers alone were the most frequent participants in the human-centered processes (14, 29.79%). Teacher involvement was particularly strong in HCLA/HCAI proposals addressing Higher Education (12 of the 14 proposals), with only 2 HCLA solutions targeting K-12 educational settings. Students were the second key actors, either alone (9, 19.15%) or together with teachers (9, 19.15%). A considerable number of papers also involved other types of participants in the HCD process, either alone (4, 8.51%), together with students (3, 6.38%), together with teachers (5, 10.64%) or together with both teachers and students (3, 6.38%). These stakeholders included IT experts, teaching assistants, school managers, project partners, and developers. One study (Huh et al. Citation2022) also involved parents.

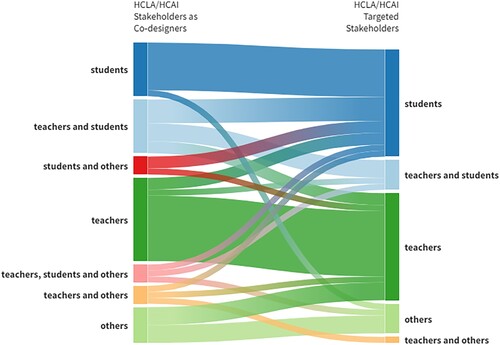

Figure 6 visualises the connection between the stakeholders who participated in co-designing the HCAI/HCLA proposals and the end users targeted by the final proposal. According to the evidence gathered, just over half of the examined papers involved only the final users as co-designers of the HCLA/HCAI solutions (25, 53.19%). In the other cases, either additional stakeholders participated in the design or development of the proposals (15, 31.91%), or the involved stakeholders differed from the targeted ones (7, 14.89%). For example, Zhou, Sheng, and Howley (Citation2020) and Romero et al. (Citation2021) created AI and LA solutions to support students' algorithm understanding and self-regulated learning, respectively. Yet the stakeholders involved in the co-design process were teachers in the first case, and students together with project managers and developers in the second.
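The three categories above can be read as set comparisons between the stakeholder groups that took part in the design and the groups targeted as end users. The sketch below is a hypothetical coding rule (the function `involvement_category` and the group labels are illustrative); in particular, assigning partial overlaps to the "differ" category is an assumption, since the review does not spell out how such cases were coded.

```python
def involvement_category(involved: set[str], targets: set[str]) -> str:
    """Hypothetical rule mapping a paper to one of the three involvement categories."""
    if involved == targets:
        return "final users only"
    if targets <= involved:  # every target group took part, plus other stakeholders
        return "final users plus additional stakeholders"
    # Assumption: partial overlaps are also counted in this category.
    return "involved stakeholders differ from target users"


# The two examples from the text (target users are students in both cases).
print(involvement_category({"teachers"}, {"students"}))
print(involvement_category({"students", "project managers", "developers"}, {"students"}))
```

Encoding the categories this way makes the coding decision explicit for each paper and shows why the first example falls into the "differ" category while the second counts as "additional stakeholders".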

Figure 6. Sankey diagram displaying the involved stakeholders in the design and development of HCLA/HCAI solutions and their target users (N = 47).

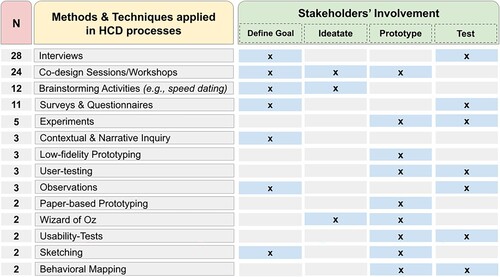

Regarding the techniques and methods applied in the HCD processes, interviews were the most prominent (28, 59.57%), followed by co-design sessions and workshops (24, 51.06%) and surveys and questionnaires (13, 27.66%). Other techniques included low-fidelity (3, 6.38%) and paper-based prototyping (2, 4.26%), observations (3, 6.38%), and Wizard of Oz (2, 4.26%), among others. Additionally, different types of cards were recurrently used to help participants express their needs and opinions (9, 19.15%), with some card decks explicitly developed for co-designing LA solutions (e.g., Alvarez, Martinez-Maldonado, and Shum Citation2020).

Each method served different purposes during the HCD processes. In our analysis, we followed the Human-Centered Indicator Design (HCID) framework (Chatti et al. Citation2020) to better understand the role of each technique/method in the design and development of HCLA and HCAI solutions. The framework consists of four phases: (a) define a goal/question, which aims to understand the users' needs; (b) ideate, which supports co-creation; (c) prototype; and (d) test, by getting feedback from the users. Figure 7 depicts how many times each technique and method was encountered in the publications and the purpose it served according to the HCID framework categories (Chatti et al. Citation2020). In terms of stakeholders' involvement in each HCID phase, our results indicate that stakeholder involvement happened mainly during the ‘Define the goal’ phase (44, 93.62%), with less participation during the following three phases: 26 papers involved stakeholders during the ‘Ideate’ phase (55.32%), 22 during prototyping (46.81%) and 20 during the ‘Test & Evaluation’ phase (42.55%).
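The percentages reported throughout this section are simple shares of a count over a denominator that changes with the subset analysed (47 papers overall, 20 papers for theory-related and evaluation-goal counts, 31 for pros and cons). A minimal sketch of that arithmetic, assuming two-decimal, round-half-up formatting; the helper `share` is hypothetical, and `Decimal` is used to avoid the binary floating-point and round-half-to-even effects of Python's built-in `round()`:

```python
from decimal import Decimal, ROUND_HALF_UP

TOTAL_PAPERS = 47  # size of the reviewed sample

# Papers involving stakeholders in each HCID phase, as reported above.
HCID_COUNTS = {
    "Define the goal": 44,
    "Ideate": 26,
    "Prototype": 22,
    "Test & Evaluation": 20,
}


def share(count: int, total: int = TOTAL_PAPERS) -> str:
    """Percentage of `total`, rounded half-up to two decimals, e.g. '93.62%'."""
    pct = Decimal(count) * 100 / Decimal(total)
    return f"{pct.quantize(Decimal('0.01'), rounding=ROUND_HALF_UP)}%"


for phase, n in HCID_COUNTS.items():
    print(f"{phase}: {n} ({share(n)})")
```

Passing the denominator explicitly, e.g. `share(6, 31)` for a count among the 31 papers discussing pros and cons, keeps each percentage tied to the subset it describes.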

Figure 7. Techniques and methods applied in HCD processes together with the purpose they served in accordance with the HCID framework.

Of the 47 papers explored, 14 (29.79%) followed concrete conceptual frameworks or models while designing the HCLA/HCAI solutions. These include the LATUX framework (Martinez-Maldonado et al. Citation2015), employed for co-designing and co-developing LA visualisations in Conijn, Van Waes, and van Zaanen (Citation2020) and Holstein, McLaren, and Aleven (Citation2019b); the Human-Centered Indicator Design (HCID) classification (Chatti et al. Citation2020) for the co-creation of LA indicators; and the Learning Analytics Translucence Elicitation Process (LAT-EP) (Martinez-Maldonado et al. Citation2022) to co-develop HCLA systems.

4.5. HCLA/HCAI evaluation and usage in authentic settings

Regarding evaluation, almost half of the retrieved papers (20, 42.55%) reported the effects of implementing the HCLA/HCAI proposals. For instance, Pishtari, Rodríguez-Triana, and Väljataga (Citation2021) conducted an evaluative study to gather stakeholders' perceptions of the developed prototypes (on analytics for learning design (LD) in location-based settings), with 5 educators, 2 researchers and 2 managers as informants. The results shed light on the stakeholders' different needs; e.g., the educators wanted a location-based tool to monitor and evaluate the learning design during their actual practice. Santos and Boticario (Citation2015a) co-designed guidelines to support personalised recommendations for online courses and later evaluated their proposal in two different online courses. Their findings showed that the proposed guidelines could guide the design of personalised recommendations. In addition, in two cases (4.26%), i.e., Martinez-Maldonado et al. (Citation2022) and Buckingham Shum, Ferguson, and Martinez-Maldonado (Citation2019), the authors mentioned that the evaluation of the proposals had been reported elsewhere.

In most cases, the HCAI/HCLA solutions were not applied in authentic settings (31, 65.96%), or this aspect was unclear in the paper (2, 4.26%). The remaining papers report empirical studies framed in real educational settings (13, 27.66%). For example, Romero et al. (Citation2021) developed a feedback tool for medical students following a user-centered design approach and later evaluated it within an undergraduate course. According to the results, the students perceived the feedback positively and considered the tool easy to use. Again, one study (Martinez-Maldonado et al. Citation2022; 1, 2.13%) cited prior work describing the evaluation in the authentic setting in detail.

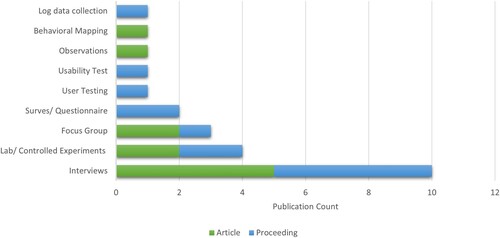

Regarding the evaluation techniques and methods employed, users played an active role in all of the proposals. The types of study ranged from interviews and controlled/lab experiments to user tests and focus groups. Figure 8 presents the applied evaluation approaches, as reported in the research papers.

Figure 8. Evaluation methods and approaches as identified from the final pool of the included papers.

Of the 47 papers under review, 20 reported an evaluation, and 2 of them mentioned that the evaluation is reported elsewhere (namely, Buckingham Shum, Echeverria, and Martinez-Maldonado Citation2019; Martinez-Maldonado et al. Citation2022). Grouping the evaluation goals by topic (percentages relative to these 20 papers), we found that the most frequent goal was assessing whether the solutions satisfied the user needs, e.g., looking at effectiveness, usefulness, utility and requirement satisfaction (10, 50.00%). Authors also used the evaluation to gather ideas for future improvement (e.g., extracting user concerns, identifying core and missing aspects, eliciting further needs, or evaluating preferences) (8, 40.00%), and looked at how stakeholders used the solutions, assessing aspects such as acceptance, usability and user experience (8, 40.00%). Measuring the impact on stakeholder behaviour/engagement and evaluating understanding/interpretability appeared with the same frequency (6, 30.00%). Other evaluation goals that received less attention were the applicability, feasibility or actionability of the solutions (3, 15.00%); the impact on learning gains (3, 15.00%); and the accuracy of the solutions (2, 10.00%).

4.6. Pros and cons of using HC approaches

To gain a critical perspective on the benefits and challenges of using HC approaches, we extracted the pros and cons reported in the reviewed papers, mainly by looking at their discussion and conclusion sections. In total, 31 papers included this kind of reflection.

Among the positive implications, most papers highlighted: (1) the collection of meaningful and relevant ideas, together with the participants' capability to envision solutions, which contributed to decisions that might not have been adapted to the stakeholders had the development team worked alone (14, 45.16% of the 31 papers); and (2) the understanding gained of the stakeholders' values, needs, challenges, opportunities, requirements, existing practices, desired functionalities and preferences (13, 41.94%). The authors also perceived that the HC approaches had an impact on the solutions, leading to more useful, effective, responsive and inclusive designs (6, 19.35%); on stakeholder engagement and satisfaction (5, 16.13%); and on student performance (1, 3.23%). The reviewed papers also report that involving the participants (i) helped them better understand the affordances and usage of the solutions (6, 19.35%), (ii) contributed to their literacy and practice (2, 6.45%), and (iii) promoted communication among different groups of stakeholders (1, 3.23%). Moreover, some authors pointed out that giving stakeholders power over the design process raised their sense of appropriation and ownership (2, 6.45%). Regarding the benefits for the design team, the reviewed papers highlight that the acquired knowledge not only informed and triggered design decisions but also raised awareness of the impact of those decisions (3, 9.68%).

However, these benefits also came with obstacles. Converging on, and coping with, the diversity of needs, ideas and expectations about the designed product appears to be the most common problem (8, 25.81%). Sometimes, addressing all requests and implementing all proposals was not feasible and required managing expectations as well as mediation efforts. Additionally, in some cases, setting aside some participants' ideas decreased their willingness to get involved in later stages of the design. Several problems also stemmed from the stakeholders' difficulties in the design process, such as expressing their needs, imagining their actual experience, lacking a critical perspective when assessing their own designs, and lacking interest or motivation in the design tasks (7, 22.58%). Furthermore, several authors highlighted that focussing on the specific needs of the participants does not guarantee covering the core needs of the target audience, and that the proposed solutions may not be transferable to other contexts (5, 16.13%), reflecting the common tension between contextualising and generalising. Last but not least, some authors noted that implementing HC approaches was a tedious and time-consuming effort (4, 12.90%).

5. Discussion

This section summarises the main lessons learnt from each research question, establishes connections with the existing literature and elaborates on the implications for the research community.

5.1. Main findings

The importance of theoretical grounding for creating sound and impactful LA and AI solutions has often been echoed in the scientific literature (Dawson et al. Citation2019). According to the findings of this study, similar concerns persist in the design process of the reviewed HCLA/HCAI solutions. In our analysis, collaborative learning and SRL were among the dominant theories informing the proposals in the final pool of papers. This finding could be explained by the rise of digital and network technologies, which has highlighted the benefits of social learning and the importance of learners' self-regulation (Jones Citation2015). Nevertheless, 70% of the reviewed studies made no reference to learning or pedagogical theories. Our findings align with those of Topali et al. (Citation2023) and Khalil, Prinsloo, and Slade (Citation2022) regarding the limited grounding of LA research in learning theories. Although HCD approaches may lead to solutions that better cater to the needs of the target users, solutions without a solid theoretical foundation may fail to achieve the expected impact. Therefore, it is essential to establish a balanced design process that considers both the users' perspectives and theoretical principles to ensure effective and impactful solutions. At the same time, we observed that, after 2018, more studies in our final pool reported grounding in learning theories. Given the limited data, this outcome merely hints at an increasing recognition of the significance of learning theories and learning design in underpinning LA/AI proposals.

Our study found that teachers were the stakeholders most frequently involved in the co-design of the reviewed solutions, closely followed by students and other types of stakeholders, which aligns with the findings of the systematic literature review conducted by Sarmiento and Wise (Citation2022). These findings are expected, since teachers and students were the target user groups for most design products. However, although teachers were the most frequently involved, students can also play a crucial role in the co-design process, as they are the end users of many HCLA/HCAI tools, and their input might contribute to creating more effective proposals. Interestingly, teachers and other stakeholders (e.g., researchers) often contribute to the design of tools for students, but not the other way around: students are rarely involved in the design of tools intended for other stakeholders, even though those LA/AI tools usually concern their learning process. Thus, future work could consider engaging students, for instance, to verify that the LA/AIED tools reflect and help improve their learning experience. Additionally, it is noteworthy that a diverse group of stakeholders was involved, including IT experts, school managers and developers, which suggests that HC approaches require efforts from individuals beyond the target users. Nevertheless, given this diversity of stakeholders, there is an inherent risk of having to accommodate an excessive number of diverse and potentially conflicting needs (Steen Citation2012). This risk arises from the complexity and diversity of educational settings, where students, educators, administrators, parents and other stakeholders may have distinct and sometimes competing interests, preferences and priorities.
Accordingly, trying to satisfy a multitude of diverse desires can pose several challenges and limitations in educational design research, such as broad and unfocused solutions, or ideas that are less feasible than those generated by designers alone, as also reported by Potvin et al. (Citation2017) and Lang and Davis (Citation2023), among others. To mitigate this issue, it seems critical for researchers and designers to keep the design process coherent by defining clear design goals, considering the educational objectives, and/or conducting a needs assessment to identify and prioritise the most pressing requirements and challenges faced by the target user group.

Unlike Sarmiento and Wise (Citation2022), we found that most papers focussed on the Higher Education context rather than on K12 or preschool education. This result might be explained by the comparatively limited focus on LA/AI in K12 education relative to Higher Education (Kovanovic, Mazziotti, and Lodge Citation2021) and by the relatively recent attention to HCD in educational design. Therefore, including young learners in HCD is a promising opportunity for the community.

Interestingly, most of the HCD approaches involved stakeholders during goal definition and problem understanding (93.62%), while fewer papers actively involved the human agents during the ideation, prototyping or testing of the proposals. Prinsloo and Slade (Citation2016) highlighted the potential risk to teachers' and students' agency when their needs and desires are excluded from the design and development of analytical solutions. To overcome this challenge, Martinez-Maldonado (Citation2023) stressed the importance of positioning the human agents not only as brainstorming informants but as equal co-designers of the targeted solutions throughout the whole HCD process. Building on our results, while stakeholders can serve as informants when brainstorming their problems and needs before the tool design, their experience could also inform which aspects should be monitored, which meaningful data should be collected, which course design aspects critically affect the learning process, and so on.

We also noted several examples of tools (e.g., Sadallah et al. Citation2022) and frameworks specifically developed to systematize HCD processes, such as LATUX (Martinez-Maldonado et al. Citation2015), HCID (Chatti et al. Citation2020) and LAT-EP (Martinez-Maldonado et al. Citation2022). However, while these efforts are relevant and meaningful, as they provide other researchers with valuable guidelines to follow, fewer than a third of the reviewed papers adopted an HCD framework in their design and development processes. That is, many researchers relied on their research goals to intuitively determine how to involve the stakeholders in the tasks, phases and procedures. While such ad hoc approaches can be valuable, using established HCD frameworks can promote a more comprehensive and effective design process. Furthermore, a first look at the papers suggests that the HCD terms included in the query (‘co-design’, ‘human-centered design’ and ‘participatory design’) may not have been used consistently across authors. Thus, future work should explore the underlying meaning of these terms, looking at the definitions provided and analysing the connections between the terms and specific HC methods, extending the work of Lang and Davis (Citation2023) on what LA authors mean when referring to human-centeredness.

Despite the important role that evaluation plays in getting research papers accepted for publication, most of the reviewed papers do not report any evaluation of their LA/AIED proposals or their usage in authentic settings. This suggests that the proposals (and the field) may still be at an early stage. These results are in line with other LA (Larrabee Sønderlund, Hughes, and Smith Citation2019; Schwendimann et al. Citation2017) and AI reviews (Ouyang, Zheng, and Jiao Citation2022). Initiatives such as the human-centered evaluation framework for explainable AI proposed by Donoso-Guzmán et al. (Citation2023) could support this process. It is also noteworthy that, despite the emphasis of HC approaches on creating solutions aligned with user needs and context, only 10 papers evaluated whether these goals were satisfied. Furthermore, while researchers often claim to use HC approaches to promote adoption, only 3 papers assessed the impact on stakeholders' practice, and only 1 paper looked at adoption in the longer term (Ahn et al. Citation2021). Thus, to better understand the added value of HC approaches, it would be necessary to assess not only the extent to which the proposed solutions satisfy user needs but also the impact of HCD on the adoption of LA/AIED solutions.

Also related to the maturity of the HCLA/HCAI solutions, a preliminary analysis of their development stage showed that most had reached the mockup stage, followed by experimental prototypes, while few were fully working tools when the papers were written. However, this aspect requires further investigation, since this information is often unclear in the publications.

As raised by Martinez-Maldonado (Citation2023), implementing HCD methods in LA/AIED systems poses its own challenges. Empirical evidence from other fields has also reported downsides of HC methods compared with methods without stakeholder involvement (Lang and Davis Citation2023), e.g., ending up with more, but less feasible, ideas than those generated by designers alone (Potvin et al. Citation2017). The reviewed papers reported several pros and cons of using HC approaches, which vary from study to study. The same can be observed in the existing literature: while some studies report cost reductions throughout the development life cycle and easier-to-use systems (Bevan, Bogomolni, and Ryan Citation2001; Karat Citation1997), other studies did not identify those improvements (Hirasawa et al. Citation2010). These differences lead us to highlight that there is no universal HCD recipe; thus, how and when stakeholders should be involved should be carefully adapted to each study to get the best out of these methods.

5.2. Theoretical and practical implications

Building on the findings discussed above, the practical and theoretical implications of this study are primarily relevant to LA/AIED researchers. However, they may also be useful for tool providers, tool designers and educational stakeholders (e.g., practitioners or decision makers). The implications span the following three dimensions:

The importance of pedagogically grounding the HCLA/HCAI proposals.

The need to support the stakeholders' participation within the HCD processes.

The necessity for a thorough empirical assessment of the effects of the HCLA/HCAI proposals in authentic settings.

First, the majority of the examined proposals do not explicitly take pedagogical aspects into account, and only a few studies considered the learning objectives, the task type, the subject matter and the context when designing and developing the HCLA/HCAI proposals. Prior studies stressed the importance of aligning LA/AI solutions with theoretical foundations and the learning design to foster their effectiveness in educational practice (Gašević, Kovanović, and Joksimović Citation2017; Ouyang, Zheng, and Jiao Citation2022; Rodríguez-Triana et al. Citation2015). Omitting such theoretical aspects in LA/AI applications could result in technological determinism. Learning theories elucidate the learning process and explain how and why individuals learn. Thus, pedagogical grounding clarifies what should be measured, why and at what moment, e.g., by defining where to apply LA or AI and which data should be collected within the learning and teaching process (Banihashem et al. Citation2019). Additionally, the particularities of the learning design (e.g., the difficulty of the activities or the course structure) affect the learning and teaching process, so considering them is crucial when designing technological solutions for educational purposes. du Boulay (Citation2000) highlighted the importance of pedagogical underpinning when applying AI in education, both to address learners' specific needs by determining what to offer and when, and to support teachers' and students' own agency. At the same time, pedagogical input in LA and AIED fosters a better framing of the captured behaviours of students and teachers (Rodríguez-Triana et al. Citation2015; Wong et al. Citation2019).

The second aspect concerns supporting stakeholders when they participate in HCD processes. Stakeholder involvement in the design of analytics and AI tools has sometimes been considered unproductive, given participants' relatively limited data and LA/AI literacy (Martinez-Maldonado Citation2023). Existing literature has reported, for example, that teachers often require additional assistance in reflecting on learners' data, selecting and fine-tuning thresholds (Fernandez Nieto et al. Citation2022; Rienties et al. Citation2018) and connecting such data to their course learning design (Mangaroska and Giannakos Citation2019). Our findings indicated that interviews for brainstorming were the most frequently employed HCD technique, and that the initial goal-definition phase was the most common moment of stakeholder involvement. However, as Martinez-Maldonado (Citation2023) highlighted, stakeholders' lived experiences, design visions and practical knowledge should be supported and become an equal part of the whole HCD process and of decision-making. To support that inclusion, further research is necessary to better understand how and when stakeholders should be involved, and when they should not, as raised by Lang and Davis (Citation2023).

Third, the evolution of HCLA/HCAI requires further empirical research to assess the real-world impact of the proposed solutions (e.g., in terms of satisfaction of user needs and user adoption), ensure ethical considerations and trust, inform decision-making, and contribute to ongoing innovation in educational technology. Recent literature highlighted a gap between the potential of LA/AI and its actual implementation in real cases (Ouyang, Zheng, and Jiao Citation2022). Conducting empirical studies in authentic settings will allow for assessing the real-world impact of HCLA/HCAI interventions on student learning outcomes and teaching practices. It will also permit reconsidering the human-centered approaches followed to satisfy the needs of the involved stakeholders. Moreover, empirical studies will provide further data-driven evidence, allowing researchers, practitioners and even policymakers to make more informed decisions about the effectiveness and feasibility of research solutions. Indeed, many proposals fail to be applied in authentic settings because they do not respond to the complexities of the educational system.

5.3. Limitations

This systematic literature review does not come without limitations, and caution has to be taken when interpreting our findings. The query, the selection of databases and the inclusion/exclusion criteria may have left out relevant papers. Terminology-wise, apart from ‘co-design’, ‘participatory design’ and ‘human-centered design’, researchers may have used other terms such as ‘co-create’ or ‘co-creation’ when referring to user involvement in their proposals. For instance, not including both the UK and US spellings, ‘human-centred’ and ‘human-centered’, may have led to overlooking papers. Regarding the domain, our query targeted papers explicitly referring to ‘learning analytics’ or ‘artificial intelligence’ in ‘education’. However, there may have been related works in the areas of educational data mining, intelligent tutoring systems, adaptive systems, smart learning environments, etc. Furthermore, including keyword variations (e.g., codesign and co-design, and artificial intelligence or AI), as well as not restricting the query to title, abstract and keywords, could potentially have returned additional papers. Regarding venues, we may have overlooked certain conferences, journals and databases that are not directly related to LA and AI but which occasionally publish studies on these topics.

Regarding the coding process, despite the involvement of several researchers and coding training using one of the articles, the remaining articles were coded by a single person. To minimise this limitation, we cross-checked dubious cases with the whole team.

Finally, we want to highlight the unexpectedly small number of HCAI papers under review compared with the HCLA ones. Interestingly, most of the HCAI papers detected are only conceptual or theoretical and do not satisfy the inclusion criterion of applying HC approaches in practice. In any case, we hypothesise that AIED authors may have applied HC approaches without mentioning it in the paper title, abstract or keywords. We have also noticed that, to comply with the length limitations of the papers, LA and AIED authors may have opted to omit the details of their HC approaches. Thus, the reviewed papers may represent just the tip of the iceberg of HCLA and HCAI research solutions, and may not reflect the reality of the LA/AIED industry.

6. Conclusions

This study has reviewed reported practices in HCLA and HCAI research, analysing their pedagogical contextualisation, the purpose of the contributions, the stakeholder involvement in the design and evaluation, as well as the methods and tools used to support HC design. The results confirmed that, beyond the existence of conceptual and theoretical papers promoting HC approaches in LA and AIED, the community is actively involving stakeholders in the design and, to some extent, in the evaluation of their contributions. Among the reviewed papers, HC approaches were usually intended for the identification of requirements/needs, especially for the design of LA dashboards that might be integrated within web-based tools and learning management systems. Teachers and students were the main target users, with the purpose of supporting their awareness and decision-making (e.g., Aleven et al. Citation2016; Verbert et al. Citation2020). Interestingly, stakeholder involvement went beyond the end users and sometimes included project managers and developers, as well as teachers (e.g., when the tools were intended for students).

While multiple stakeholder-involvement techniques were used in the reviewed papers (mainly interviews, focus groups and workshops), few of them adopted existing HC design guidelines (e.g., LATUX). Thus, the research community could also benefit from compiling conceptual HCLA/HCAI contributions, guidelines (such as the principles of human-centered design proposed by the Interaction Design Foundation) and existing standards (for instance, the ISO 9241-210:2019 standard on human-centered design for interactive systems), which may inform future studies and potentially increase the effectiveness of stakeholder involvement. Also, as pointed out in previous reviews, researchers should bear in mind the importance of the pedagogical contextualisation of their HCLA and HCAI proposals, as well as the need for evaluation and usage in authentic settings. Following this first attempt to understand the current landscape of HCLA/HCAI research, future work should further analyse aspects such as how accurately HC terms are used (e.g., extending Lang and Davis (Citation2023) to terms other than ‘human-centered’); the stakeholders' involvement in the implementation of the solutions (Rodríguez-Triana et al. Citation2018); and the level of automation of the contributions (Molenaar Citation2022). Furthermore, to unlock the potential of human-centered approaches, it is paramount to take into account the already identified challenges of human-centered design (e.g., ensuring representative participation and considering the stakeholders' expertise and lived experiences in LA/AIED design) when applying them in HCLA/HCAI solutions (Martinez-Maldonado Citation2023), as well as the particularities of each study context. Last but not least, further studies are needed to better understand the application of human-centered approaches by the ed-tech industry, similar to what has been done for the manufacturing industry (Brückner et al. Citation2023; Nguyen Ngoc, Lasa, and Iriarte Citation2022) and the e-health context (van Velsen, Ludden, and Grünloh Citation2022).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Funding

References

- Abras, C., D. Maloney-Krichmar, and J. Preece. 2004. “User-Centered Design.” In Encyclopedia of Human-Computer Interaction, edited by W. Bainbridge, Vol. 37, 445–456. Thousand Oaks: Sage Publications.

- Ahn, J., F. Campos, M. Hays, and D. DiGiacomo. 2019. “Designing in Context: Reaching Beyond Usability in Learning Analytics Dashboard Design.” Journal of Learning Analytics 6:70–85. https://doi.org/10.18608/jla.2019.62.5.

- Ahn, J., F. Campos, H. Nguyen, M. Hays, and J. Morrison. 2021. “Co-Designing for Privacy, Transparency, and Trust in K-12 Learning Analytics.” In 11th International Conference on Learning Analytics & Knowledge, 55–65. ACM.

- Aleven, V., F. Xhakaj, K. Holstein, and B. M. McLaren. 2016. “Developing a Teacher Dashboard for use with Intelligent Tutoring Systems.” In 4th International Workshop on Teaching Analytics, 15–23. CEUR.

- Alvarez, C. P., R. Martinez-Maldonado, and S. B. Shum. 2020. “LA-DECK: A Card-Based Learning Analytics Co-Design Tool.” In 10th International Conference on Learning Analytics & Knowledge, 63–72. ACM.

- Alzoubi, D., J. Kelley, E. Baran, S. B. Gilbert, A. K. Ilgu, and S. Jiang. 2021. “TeachActive Feedback Dashboard: Using Automated Classroom Analytics to Visualize Pedagogical Strategies at a Glance.” In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems. Association for Computing Machinery.

- Banihashem, S. K., K. Aliabadi, S. Pourroostaei Ardakani, M. Nili Ahmadabadi, and A. Delaver. 2019. “Investigation on the Role of Learning Theory in Learning Analytics.” Interdisciplinary Journal of Virtual Learning in Medical Sciences 10:1–14.

- Beheshti, E., L. Lyons, A. Mallavarapu, B. Wallingford, and S. Uzzo. 2020. “Design Considerations for Data-Driven Dashboards: Supporting Facilitation Tasks for Open-Ended Learning.” In Extended Abstracts of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, Hawaii, USA, 1–9.

- Bevan, N., I. Bogomolni, and N. Ryan. 2001. “Incorporating Usability in the Development Process at Inland Revenue and Israel Aircraft Industries.” In Human-Computer Interaction INTERACT '01: IFIP TC13 International Conference on Human-Computer Interaction, Tokyo, Japan, July 9–13, 2001, edited by M. Hirose, 862–868. IOS Press.

- Bødker, S., C. Dindler, O. S. Iversen, and R. C. Smith. 2022. “What Is Participatory Design?” In Participatory Design, 5–13. Cham: Springer. https://doi.org/10.1007/978-3-031-02235-7_7

- Brückner, A., P. Hein, F. Hein-Pensel, J. Mayan, and M. Wölke. 2023. “Human-Centered HCI Practices Leading the Path to Industry 5.0: A Systematic Literature Review.” In HCI International 2023 Posters, edited by C. Stephanidis, M. Antona, S. Ntoa, and G. Salvendy, 3–15. Cham: Springer Nature Switzerland.

- Brun, A., G. Bonnin, S. Castagnos, A. Roussanaly, and A. Boyer. 2019. “Learning Analytics Made in France: The Metal Project.” The International Journal of Information and Learning Technology 36 (4): 299–313. https://doi.org/10.1108/IJILT-02-2019-0022.

- Buckingham Shum, S., V. Echeverria, and R. Martinez-Maldonado. 2019. “The Multimodal Matrix as a Quantitative Ethnography Methodology.” In Advances in Quantitative Ethnography, edited by B. Eagan, M. Misfeldt, and A. Siebert-Evenstone, 26–40. Cham: Springer International Publishing.

- Buckingham Shum, S., R. Ferguson, and R. Martinez-Maldonado. 2019. “Human-Centred Learning Analytics.” Journal of Learning Analytics 6:1–9.

- Cavignaux-Bros, D., and D. Cristol. 2020. “Participatory Design and Co-Design–The Case of a MOOC on Public Innovation.” In Learner and User Experience Research: An Introduction for the Field of Learning Design & Technology, edited by M. Schmidt, A. A. Tawfik, I. Jahnke, and Y. Earnshaw, 1–26. EdTech Books. https://edtechbooks.org/ux/participatory_and_co_design.

- Cerro Martinez, J. P., M. Guitert Catasus, and T. Romeu Fontanillas. 2020. “Impact of Using Learning Analytics in Asynchronous Online Discussions in Higher Education.” International Journal of Educational Technology in Higher Education 17 (1): 1–18. https://doi.org/10.1186/s41239-020-00217-y.

- Chakraborty, N., S. Roy, W. L. Leite, M. K. S. Faradonbeh, and G. Michailidis. 2021. “The Effects of a Personalized Recommendation System on Students' High-Stakes Achievement Scores: A Field Experiment.” 2021 International Conference on Educational Data Mining (EDM). https://eric.ed.gov/?id=ED615676.

- Chalvatza, F., S. Karkalas, and M. Mavrikis. 2019. “Communicating Learning Analytics: Stakeholder Participation and Early Stage Requirement Analysis.” In 11th International Conference on Computer Supported Education, 339–346. SciTePress.

- Chatti, M. A., A. L. Dyckhoff, U. Schroeder, and H. Thüs. 2013. “A Reference Model for Learning Analytics.” International Journal of Technology Enhanced Learning 4:318–331. https://doi.org/10.1504/IJTEL.2012.051815.

- Chatti, M. A., A. Muslim, M. Guesmi, F. Richtscheid, D. Nasimi, A. Shahin, and R. Damera. 2020. “How to Design Effective Learning Analytics Indicators? A Human-Centered Design Approach.” In 15th European Conference on Technology Enhanced Learning, 303–317. Springer.

- Conijn, R., L. Van Waes, and M. van Zaanen. 2020. “Human-Centered Design of a Dashboard on Students' Revisions During Writing.” In 15th European Conference on Technology Enhanced Learning, 30–44. Springer.

- Coughlan, T., K. Lister, and N. Freear. 2019. “Our Journey: Designing and Utilising a Tool to Support Students to Represent Their Study Journeys.” 13th Annual International Technology, Education and Development Conference, Valencia, Spain, 3140–3147.

- Dabbebi, I., J. M. Gilliot, and S. Iksal. 2019. “User Centered Approach for Learning Analytics Dashboard Generation.” International Conference on Computer Supported Education, Heraklion, Greece.

- Dawson, S., S. Joksimovic, O. Poquet, and G. Siemens. 2019. “Increasing the Impact of Learning Analytics.” In 9th International Conference on Learning Analytics & Knowledge, 446–455. ACM.

- de Quincey, E., C. Briggs, T. Kyriacou, and R. Waller. 2019. “Student Centred Design of a Learning Analytics System.” In 9th International Conference on Learning Analytics & Knowledge, 353–362. ACM.

- Dimitriadis, Y., R. Martínez-Maldonado, and K. Wiley. 2021. “Human-Centered Design Principles for Actionable Learning Analytics.” In Research on E-Learning and ICT in Education: Technological, Pedagogical and Instructional Perspectives, 277–296. Cham: Springer. https://doi.org/10.1007/978-3-030-64363-8_15.

- Dollinger, M., and J. M. Lodge. 2018. “Co-Creation Strategies for Learning Analytics.” In 8th International Conference on Learning Analytics and Knowledge, 97–101. ACM.

- Donoso-Guzmán, I., J. Ooge, D. Parra, and K. Verbert. 2023. “Towards a Comprehensive Human-Centred Evaluation Framework for Explainable AI.” arXiv preprint.

- du Boulay, B. 2000. “Can We Learn from ITSs?” In Intelligent Tutoring Systems, edited by G. Gauthier, C. Frasson, and K. VanLehn, 9–17. Berlin, Heidelberg: Springer Berlin Heidelberg.

- Echeverria, V., M. Wong-Villacres, X. Ochoa, and K. Chiluiza. 2022. “An Exploratory Evaluation of a Collaboration Feedback Report.” In 12th International Conference on Learning Analytics and Knowledge, 478–484. https://dl.acm.org/doi/proceedings/10.1145/3506860.

- Eradze, M., M. J. Rodríguez-Triana, N. Milikic, M. Laanpere, and K. Tammets. 2020. “Contextualising Learning Analytics with Classroom Observations: A Case Study.” Interaction Design & Architecture, 71–95. https://doi.org/10.55612/s-5002-000.

- Eradze, M., M. J. Rodriguez Triana, and M. Laanpere. 2017. “Semantically Annotated Lesson Observation Data in Learning Analytics Datasets: A Reference Model.” Interaction Design and Architecture(s) Journal (IxD&A) 33: 75–91. https://doi.org/10.55612/s-5002-000.

- Fernandez Nieto, G. M., K. Kitto, S. Buckingham Shum, and R. Martinez-Maldonado. 2022. “Beyond the Learning Analytics Dashboard: Alternative Ways to Communicate Student Data Insights Combining Visualisation, Narrative and Storytelling.” In LAK22: 12th International Learning Analytics and Knowledge Conference, 219–229. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3506860.3506895.

- Gašević, D., V. Kovanović, and S. Joksimović. 2017. “Piecing the Learning Analytics Puzzle: A Consolidated Model of a Field of Research and Practice.” Learning: Research and Practice 3: 63–78.

- Given, L. 2012. The SAGE Encyclopedia of Qualitative Research Methods. Thousand Oaks, CA: Sage.

- Guba, E. G. 1981. “Criteria for Assessing the Trustworthiness of Naturalistic Inquiries.” Educational Communication & Technology 29 (2): 75–91. https://doi.org/10.1007/BF02766777.

- Hirasawa, N., K. Yamada-Kawai, H. Kasai, and S. Ogata. 2010. “Effectiveness of Introducing Human-Centered Design Process.” Proceedings of SICE Annual Conference 2010, Taipei, Taiwan, 1430–1433.

- Hoffmann, C., N. Mandran, C. d'Ham, S. Rebaudo, and M. A. Haddouche. 2022. “Development of Actionable Insights for Regulating Students' Collaborative Writing of Scientific Texts.” In European Conference on Technology Enhanced Learning, 534–541. Springer.

- Holstein, K., B. M. McLaren, and V. Aleven. 2019a. “Designing for Complementarity: Teacher and Student Needs for Orchestration Support in AI-Enhanced Classrooms.” 20th International Conference on Artificial Intelligence in Education, Chicago, IL, USA, 157–171.

- Holstein, K., B. M. McLaren, and V. Aleven. 2019b. “Co-Designing a Real-Time Classroom Orchestration Tool to Support Teacher–AI Complementarity.” Journal of Learning Analytics 6: 27–52. https://doi.org/10.18608/jla.2019.62.3.

- Hooshyar, D., M. Yousefi, and H. Lim. 2019. “A Systematic Review of Data-Driven Approaches in Player Modeling of Educational Games.” Artificial Intelligence Review 52 (3): 1997–2017. https://doi.org/10.1007/s10462-017-9609-8.

- Hou, X., T. Nagashima, and V. Aleven. 2022. “Design a Dashboard for Secondary School Learners to Support Mastery Learning in a Gamified Learning Environment.” 17th European Conference on Technology Enhanced Learning, Toulouse, France, 542–549.

- Huh, J., S. Ann, J. Hong, M. Cui, J. Y. Park, Y. Kim, B. Sim, and H. K. Lee. 2022. “Service Design of Artificial Intelligence Voice Agents as a Guideline for Assisting Independent Toilet Training of Preschool Children.” Archives of Design Research 35 (3): 81–93. https://doi.org/10.15187/adr.2022.08.35.3.81.

- Jones, C. 2015. “Theories of Learning in a Digital Age.” In Networked Learning, 47–78. Cham: Springer.

- Karat, C. M. 1997. “Chapter 32: Cost-Justifying Usability Engineering in the Software Life Cycle.” In Handbook of Human-Computer Interaction, 2nd ed., edited by M. G. Helander, T. K. Landauer, and P. V. Prabhu, 767–778. Amsterdam: North-Holland. https://www.sciencedirect.com/science/article/pii/B9780444818621500984.

- Khalil, M., P. Prinsloo, and S. Slade. 2022. “The Use and Application of Learning Theory in Learning Analytics: A Scoping Review.” Journal of Computing in Higher Education 35: 573–594.

- Kilińska, D., F. V. Kobbelgaard, and T. Ryberg. 2019. “Learning Analytics Features for Improving Collaborative Writing Practices: Insights into the Students' Perspective.” In Digital Turn in Schools–Research, Policy, Practice: Proceedings of ICEM 2018 Conference, 69–81. Springer.

- Kitchenham, B., and S. Charters. 2007. Guidelines for Performing Systematic Literature Reviews in Software Engineering. Technical Report. Durham, UK: Department of Computer Science, University of Durham.

- Knight, S., and T. D. Anderson. 2016. “Action-Oriented, Accountable, and Inter(Active) Learning Analytics for Learners.” CEUR Workshop Proceedings, Edinburgh, UK, Vol. 1596, 47–51.

- Knight, D., C. Brozina, E. Stauffer, C. Frisina, and T. Abel. 2015. “Developing a Learning Analytics Dashboard for Undergraduate Engineering Using Participatory Design.” 2015 ASEE Annual Conference and Exposition Proceedings, Seattle, Washington, USA, 26.485.1–26.485.11.

- Könings, K. D., T. Seidel, and J. J. van Merriënboer. 2014. “Participatory Design of Learning Environments: Integrating Perspectives of Students, Teachers, and Designers.” Instructional Science 42 (1): 1–9. https://doi.org/10.1007/s11251-013-9305-2.

- Kovanovic, V., C. Mazziotti, and J. Lodge. 2021. “Learning Analytics for Primary and Secondary Schools.” Journal of Learning Analytics 8:1–5. https://doi.org/10.18608/jla.2021.7543.

- Lang, C., and L. Davis. 2023. “Learning Analytics and Stakeholder Inclusion: What Do We Mean When We Say ‘Human-Centered’?” In LAK23: 13th International Learning Analytics and Knowledge Conference, 411–417. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/3576050.3576110.

- Larrabee Sønderlund, A., E. Hughes, and J. Smith. 2019. “The Efficacy of Learning Analytics Interventions in Higher Education: A Systematic Review.” British Journal of Educational Technology 50 (5): 2594–2618. https://doi.org/10.1111/bjet.v50.5.

- Li, S., and X. Gu. 2023. “A Risk Framework for Human-Centered Artificial Intelligence in Education: Based on Literature Review and Delphi–AHP Method.” Educational Technology & Society 26:187–202.

- Lister, K., T. Coughlan, I. Kenny, R. Tudor, and F. Iniesto. 2021. “Taylor, the Disability Disclosure Virtual Assistant: A Case Study of Participatory Research with Disabled Students.” Education Sciences 11: 587. https://doi.org/10.3390/educsci11100587.

- Long, Y., Z. Aman, and V. Aleven. 2015. “Motivational Design in an Intelligent Tutoring System that Helps Students Make Good Task Selection Decisions.” In 17th International Conference on Artificial Intelligence in Education, 226–236. Springer.

- Mangaroska, K., and M. Giannakos. 2019. “Learning Analytics for Learning Design: A Systematic Literature Review of Analytics-Driven Design to Enhance Learning.” IEEE Transactions on Learning Technologies 12:516–534. https://doi.org/10.1109/TLT.4620076.

- Martinez-Maldonado, R. 2023. “Human-Centred Learning Analytics: Four Challenges in Realising the Potential.” Learning Letters 1:6.

- Martinez-Maldonado, R., D. Elliott, C. Axisa, T. Power, V. Echeverria, and S. B. Shum. 2022. “Designing Translucent Learning Analytics with Teachers: An Elicitation Process.” Interactive Learning Environments 30 (6): 1077–1091. https://doi.org/10.1080/10494820.2019.1710541.

- Martinez-Maldonado, R., A. Pardo, N. Mirriahi, K. Yacef, J. Kay, and A. Clayphan. 2015. “LATUX: An Iterative Workflow for Designing, Validating, and Deploying Learning Analytics Visualizations.” Journal of Learning Analytics 2:9–39. https://doi.org/10.18608/jla.2015.23.3.

- Matcha, W., N. A. Uzir, D. Gašević, and A. Pardo. 2020. “A Systematic Review of Empirical Studies on Learning Analytics Dashboards: A Self-Regulated Learning Perspective.” IEEE Transactions on Learning Technologies 13:226–245. https://doi.org/10.1109/TLT.4620076.

- Michos, K., C. Lang, D. Hernández-Leo, and D. Price-Dennis. 2020. “Involving Teachers in Learning Analytics Design.” In 10th International Conference on Learning Analytics & Knowledge, 94–99. ACM.

- Molenaar, I. 2022. “Towards Hybrid Human-AI Learning Technologies.” European Journal of Education 57 (4): 632–645. https://doi.org/10.1111/ejed.v57.4.

- Nazaretsky, T., C. Bar, M. Walter, and G. Alexandron. 2022. “Empowering Teachers with AI: Co-Designing a Learning Analytics Tool for Personalized Instruction in the Science Classroom.” In 12th International Conference on Learning Analytics & Knowledge, 1–12. ACM.

- Nguyen Ngoc, H., G. Lasa, and I. Iriarte. 2022. “Human-Centred Design in Industry 4.0: Case Study Review and Opportunities for Future Research.” Journal of Intelligent Manufacturing 33 (1): 35–76. https://doi.org/10.1007/s10845-021-01796-x.

- Oliver-Quelennec, K., F. Bouchet, T. Carron, K. Fronton Casalino, and C. Pinçon. 2022. “Adapting Learning Analytics Dashboards by and for University Students.” In European Conference on Technology Enhanced Learning, 299–309. Springer.

- Ouatiq, A., B. Riyami, K. Mansouri, M. Qbadou, and E. S. Aoula. 2022. “Towards the Co-Design of a Teachers' Dashboards in a Hybrid Learning Environment.” 2nd International Conference on Innovative Research in Applied Science, Engineering and Technology, Meknes, Morocco, 1–6.

- Ouyang, F., L. Zheng, and P. Jiao. 2022. “Artificial Intelligence in Online Higher Education: A Systematic Review of Empirical Research From 2011 to 2020.” Education and Information Technologies 27: 7893–7925. https://doi.org/10.1007/s10639-022-10925-9.

- Person, J., C. Vidal-Gomel, P. Cottier, and C. Lecomte. 2021. “Co-Design of a Learning Analytics Tool by Computer Scientists and Teachers: The Difficult Emergence of a Common World.” In Congress of the International Ergonomics Association, 524–533. Springer.

- Pishtari, G., M. J. Rodríguez-Triana, and T. Väljataga. 2021. “A Multi-Stakeholder Perspective of Analytics for Learning Design in Location-Based Learning.” International Journal of Mobile and Blended Learning 13:1–17. https://doi.org/10.4018/IJMBL.

- Potvin, A. S., R. G. Kaplan, A. G. Boardman, and J. L. Polman. 2017. “Configurations in Co-Design: Participant Structures in Partnership Work.” In Connecting Research and Practice for Educational Improvement, 135–149. Routledge.

- Pozdniakov, S., R. Martinez-Maldonado, Y. S. Tsai, M. Cukurova, T. Bartindale, P. Chen, H. Marshall, D. Richardson, and D. Gasevic. 2022. “The Question-Driven Dashboard: How Can We Design Analytics Interfaces Aligned to Teachers' Inquiry?” In 12th International Conference on Learning Analytics & Knowledge, 175–185. ACM.

- Prinsloo, P., and S. Slade. 2016. “Student Vulnerability, Agency, and Learning Analytics: An Exploration.” Journal of Learning Analytics 3:159–182. https://doi.org/10.18608/jla.2016.31.10.

- Revano, T. F., and M. B. Garcia. 2021. “Designing Human-Centered Learning Analytics Dashboard for Higher Education Using a Participatory Design Approach.” 2021 IEEE 13th International Conference on Humanoid, Nanotechnology, Information Technology, Communication and Control, Environment, and Management, Manila, Philippines, 1–5.

- Rienties, B., C. Herodotou, T. Olney, M. Schencks, and A. Boroowa. 2018. “Making Sense of Learning Analytics Dashboards: A Technology Acceptance Perspective of 95 Teachers.” International Review of Research in Open and Distance Learning 19:187–202. https://doi.org/10.19173/irrodl.v19i5.3493.

- Rienties, B., H. Køhler Simonsen, and C. Herodotou. 2020. “Defining the Boundaries Between Artificial Intelligence in Education, Computer-Supported Collaborative Learning, Educational Data Mining, and Learning Analytics: A Need for Coherence.” Frontiers in Education 5. https://www.frontiersin.org/articles/10.3389/feduc.2020.00128.

- Rodríguez-Triana, M. J., A. Martínez-Monés, J. I. Asensio-Pérez, and Y. Dimitriadis. 2015. “Scripting and Monitoring Meet Each Other: Aligning Learning Analytics and Learning Design to Support Teachers in Orchestrating CSCL Situations.” British Journal of Educational Technology 46 (2): 330–343. https://doi.org/10.1111/bjet.12198.

- Rodríguez-Triana, M. J., L. P. Prieto, A. Martínez-Monés, J. I. Asensio-Pérez, and Y. Dimitriadis. 2018. “The Teacher in the Loop: Customizing Multimodal Learning Analytics for Blended Learning.” In 8th International Conference on Learning Analytics and Knowledge, 417–426. ACM.

- Romero, Y. R., H. Tame, Y. Holzhausen, M. Petzold, J. V. Wyszynski, H. Peters, M. Alhassan-Altoaama, M. Domanska, and M. Dittmar. 2021. “Design and Usability Testing of an In-House Developed Performance Feedback Tool for Medical Students.” BMC Medical Education 21 (1): 354. https://doi.org/10.1186/s12909-021-02788-4.

- Rouse, W. B. 2007. People and Organizations: Explorations of Human-Centered Design. Hoboken, NJ: John Wiley & Sons Inc.

- Sadallah, M., J. M. Gilliot, S. Iksal, K. Quelennec, M. Vermeulen, L. Neyssensas, O. Aubert, and R. Venant. 2022. “Designing LADs That Promote Sensemaking: A Participatory Tool.” 17th European Conference on Technology Enhanced Learning, Toulouse, France, 587–593.

- Salas-Pilco, S. Z., K. Xiao, and X. Hu. 2022. “Artificial Intelligence and Learning Analytics in Teacher Education: A Systematic Review.” Education Sciences 12: 569. https://doi.org/10.3390/educsci12080569.

- Sanders, E. B. N., and P. J. Stappers. 2008. “Co-Creation and the New Landscapes of Design.” CoDesign 4 (1): 5–18. https://doi.org/10.1080/15710880701875068.

- Santos, O. C., and J. G. Boticario. 2015a. “Practical Guidelines for Designing and Evaluating Educationally Oriented Recommendations.” Computers and Education 81:354–374. https://doi.org/10.1016/j.compedu.2014.10.008.

- Santos, O. C., and J. G. Boticario. 2015b. “User-Centred Design and Educational Data Mining Support During the Recommendations Elicitation Process in Social Online Learning Environments.” Expert Systems 32 (2): 293–311. https://doi.org/10.1111/exsy.v32.2.

- Sarmiento, J. P., and A. F. Wise. 2022. “Participatory and Co-Design of Learning Analytics: An Initial Review of the Literature.” In 12th International Conference on Learning Analytics & Knowledge, 535–541. ACM.

- Schmitz, M., M. Scheffel, E. van Limbeek, R. Bemelmans, and H. Drachsler. 2018. “‘Make It Personal!’ – Gathering Input from Stakeholders for a Learning Analytics-Supported Learning Design Tool.” 13th European Conference on Technology Enhanced Learning, Lyon, France, 297–310.

- Schwendimann, B. A., M. J. Rodríguez-Triana, A. Vozniuk, L. P. Prieto, M. S. Boroujeni, A. Holzer, D. Gillet, and P. Dillenbourg. 2017. “Perceiving Learning At a Glance: A Systematic Literature Review of Learning Dashboard Research.” IEEE Transactions on Learning Technologies 10:30–41. https://doi.org/10.1109/TLT.2016.2599522.

- Shneiderman, B. 2020. “Human-Centered Artificial Intelligence: Three Fresh Ideas.” AIS Transactions on Human-Computer Interaction 12:109–124. https://doi.org/10.17705/1thci.

- Soller, A., A. Martínez, P. Jermann, and M. Muehlenbrock. 2005. “From Mirroring to Guiding: A Review of State of the Art Technology for Supporting Collaborative Learning.” International Journal of Artificial Intelligence in Education 15:261–290.

- Steen, M. 2012. “Human-Centered Design as a Fragile Encounter.” Design Issues 28:72–80. https://doi.org/10.1162/DESI_a_00125.

- Topali, P., I. A. Chounta, A. Martínez-Monés, and Y. Dimitriadis. 2023. “Delving Into Instructor-Led Feedback Interventions Informed by Learning Analytics in Massive Open Online Courses.” Journal of Computer Assisted Learning 39 (4): 1039–1060. https://doi.org/10.1111/jcal.v39.4.

- Treasure-Jones, T., R. Dent-Spargo, and S. Dharmaratne. 2018. “How Do Students Want Their Workplace-Based Feedback Visualized in Order to Support Self-Regulated Learning? Initial Results & Reflections From a Co-Design Study in Medical Education.” CEUR Workshop Proceedings, Vol. 2193.

- Tsai, Y. S., R. F. Mello, J. Jovanović, and D. Gašević. 2021. “Student Appreciation of Data-Driven Feedback: A Pilot Study on OnTask.” In 11th International Conference on Learning Analytics & Knowledge, 511–517. ACM.