Abstract

Nudging has been used in a range of fields to shape citizens' behavior and promote public priorities. However, in educational contexts, nudges have only been explored relatively recently, with limited but promising evidence for the role of nudging used to increase engagement in online study, particularly in higher education. This paper reports on findings from a project that investigated the use of nudging in course-specific online learning contexts. The project evaluated the effectiveness of an intervention that combined course learning analytics data with a nudge strategy that encouraged students’ engagement with crucial course resources. When implemented in a planned and strategic manner in online courses, findings show that nudging offers a promising strategy for motivating students to access key online resources.

Introduction

Given its impact on student retention and success, online student engagement continues to be a challenge for higher education (HE) institutions. Online student engagement is understood to be a multidimensional construct of interrelated engagement behaviors that occur within, and/or because of, an online learning environment (O’Shea et al., 2015; Redmond et al., 2018). Positive student online engagement, defined as an ongoing and regular commitment to online learning behaviors and activities, is empirically related to student satisfaction and good educational outcomes (Martin & Bolliger, 2018). In contrast, online disengagement is linked to poor academic achievement, procrastination, and a failure to study systematically (You, 2016).

Meta-analyses indicate that online environments are as instructionally effective as the traditional classroom (Means et al., 2010). However, if students are not engaging online, instructional effectiveness cannot be adequately determined, and the negative impact of disengagement outweighs any benefits of instruction. Disengagement is becoming increasingly detectable with the advent of learning analytics data (LAD), although learning analytics (LA) itself is interpreted in multiple ways.

Common to many approaches to, and uses of, LAD is reference to the measurement, analysis, and reporting of student-generated data as a means to examine or understand student behavior and to optimize learning and the learning environment (Siemens, 2019). However, to date, much LA research has pursued large-scale research agendas focused on interventions in educational and institutional contexts (Joksimović et al., 2019; Macfadyen et al., 2020) with limited examples of practice-orientated approaches transferable into everyday teaching in many HE institutions (Blumenstein et al., 2019; Gašević et al., 2019). This lack of literature related to course- and activity-specific data was the motivation behind the study reported in this paper. As an interdisciplinary team of researchers from a regional university, we sought to explore tangible solutions that could provide insights into students’ online behaviors, ultimately developing easily accessible and time-efficient tools that educators can apply to influence students’ engagement. We aimed to explore the results of the intervention across time and between disciplines. More particularly, by harnessing course-specific learning analytics (CLA) data that represented students’ engagement behavior, we sought to understand how nudges impacted student online engagement behavior.

Review of the literature

Thaler and Sunstein (2008) first used the term nudge for interventions that encourage behavioral change. Recognizing that people do not always act in ways that serve their own best interests, Thaler and Sunstein suggested individual decision-making could be improved by using simple nudges. Nudges operate using choice architecture, with an essential element being that they respect people’s freedom to choose (Selinger & Whyte, 2011). Nudges alter the underlying choice architecture in a way that prompts people to make decisions that are predictable and beneficial (Thaler & Sunstein, 2008).

Applying the nudging construct to HE, educators could adopt the role of being a choice architect (Blumenstein et al., 2019), accepting “the responsibility for organising the context in which people [i.e., students] make decisions” (Thaler & Sunstein, 2008, p. 2) and recognizing that one way to influence students’ decision-making is to implement targeted nudges. To count as a nudge, an intervention must be easy and low cost. “Nudges are not mandates” (Thaler & Sunstein, 2008, p. 6). The power of nudges lies in their ability to alter human behavior without coercion (Blumenstein et al., 2019). Note that in this way nudges are different to a prompt or promotion of a task, resource, or activity, as a nudge has the specific intent or goal of altering behavior.

Within the education sector, forms of nudges and their application vary. For example, some have used nudges to increase enrollment or financial aid among potential tertiary students currently enrolled in high school (Castleman & Page, 2015, 2016; Wildavsky, 2014), as well as using different forms of nudge interventions to improve student outcomes. Others, such as Buchs et al. (2016), experimented with nudges to explain to students why and how to collaborate in a cooperative learning task, with findings indicating that this form of nudge enhanced the cooperative gains made from group work. Smith et al. (2017) developed a form of nudge that utilized software that appended a grade nudge, a personalized message, to each assessment item to explain to students how their current grade would impact their final grade, with results from a randomized trial indicating improved overall student performance.

Feild (2015) experimented with a form of data-driven nudges to guide how students might improve their performance by adjusting when they started to work on or submit assignments. Sulphey and Alkahtani (2018) used a form of nudges to encourage students to start assignments early and submit them on time, with their pilot successfully increasing submissions and scores. Finally, Blumenstein et al. (2019) discussed four case studies to illustrate practical applications of nudging for engagement. Three of these involved the use of LA tools that focused on capturing and acting on timely and meaningful data about students’ engagement and success.

However, despite emerging research investigating the broader use and forms of nudges in education to encourage student engagement, and more specifically efforts to do this within the HE sector, the application of nudges at a course- or subject-specific level within HE contexts remains relatively unexplored. While empirical tests of nudging interventions have suggested that some nudging interventions can improve student outcomes, not all nudging interventions have positive effects (Damgaard & Nielsen, 2020). From an engagement perspective, findings related to the effectiveness of nudging are also mixed.

To advance knowledge in this field of research, this paper reports on a study that sought to explore whether a nudge intervention would improve student engagement. The paper summarizes the empirical approach undertaken for the project, with the nudge intervention defined as a structured, staggered communication strategy targeting low- and nonengaged students. In this paper, student engagement is defined as an improvement in students accessing key resources, where the key resources targeted in the intervention were early online study materials and/or activities that the course educator identified as critical to student success. Early access to and engagement with course resources were understood to play a significant role in improving student learning outcomes (Redmond et al., 2018; Stone, 2019). Students accessing key resources was the proxy measure for student engagement. While this paper does not specifically report on details of the nudge protocol adopted by Brown et al. (2022) (i.e., whom to nudge, when to nudge, how to nudge), we explore findings on the effectiveness of strategically combining LA with prompts and nudges.

Methodology and research approach

The research setting was a regional Australian university where 70% of the student cohort was enrolled online (The University of Southern Queensland Human Research Ethics Committee Approval – H18REA019). Although the method involved implementing a structured, staggered nudging communication strategy, the courses were not randomly selected from across the university but based on an instructor’s willingness to participate in the experiment. We accessed data from at least 2 years of course offers in the project, the first year (2018) being a pilot year, where nudging occurred within six courses across three disciplines. Following an evaluation, we expanded the number of courses the following year (2019).

The final nudge intervention was implemented during two teaching semesters (S1 and S2) of a single year (2019). The S1 implementation aimed to observe the intervention's impact and then refine the intervention for S2 based on those observations. In S1, the intervention was implemented in eight courses spanning five disciplines (Education, Science, Engineering, Nursing, and Business), with the majority of courses being undergraduate, except for a small cohort of students in one of the Education courses. A total of 1,176 students enrolled across the eight courses (806 of these were enrolled in the Nursing course) collectively received 95 nudges. In S2, the intervention was implemented in 10 courses spanning the same five disciplines. In total, 477 students were enrolled across the 10 courses, and 65 nudges were sent to students who had not engaged with key course resources. Six courses were offered in both S1 and S2, and the intervention was implemented in both semesters in these courses (see Table 1).

Table 1. Average increase in the percentage of students who accessed the nudged resources.

The S1 nudge intervention followed a three-stage process:

1. Identification of one or several key resources that were a priority for students in an associated week.

2. Identification through the learning management system (LMS) analytics of low- or nonengaged students.

3. Delivery of a nudge communication to targeted students.

The type of wording and an example of a nudge are outlined below.

Hi (name)

Just a quick touch base to see how your first few weeks of the semester have gone for (EDX***). We really do hit the ground flying, with not much wriggle room to catch up on engaging with key course materials. As we move into Week 3 of the course, we have noticed that unfortunately you have still not had time to complete the Module 1 quiz activity.

This week we are going to be moving to Module 2 and it builds on what you learnt in Module 1. Both these modules and their related activities and quizzes not only help to build your capacity as a (discipline) professional, but they also contain significant and integral information from which to draw upon in order to successfully respond to your first assignment task.

Please know that the (course name) team are here to support you and if you would like to touch base for a quick chat, please email me ☺

In S2, the nudge intervention utilized both promotions and nudges as a strategic approach that went beyond simply a prompt. A distinguishing feature of this study and employment of nudges was that nudges were informed by data (usually in the form of CLA) and focused on making explicit to students the importance and value of key resources or activities. A four-stage process for promotion and nudging was followed:

1. Identification of a key resource (or a maximum of two key resources) that was a priority for students in an associated week.

2. Promotion of the key resource. A promotion was defined as a communication provided to an entire course cohort and posted, for example, as a news announcement or message on the LMS noticeboard to highlight to learners a key resource or activity to focus on for a specified week.

3. Identification through the LMS analytics of low- or nonengaged students.

4. Delivery of a nudge. One week after a key resource had been promoted, a nudge, which was a targeted, personalized, encouraging communication, was sent only to those students who had not yet accessed that resource (either via private LMS message, email, or text). The nudge reinforced the value of the key resource to their learning, or the connection of the resource or task to their upcoming assessment (see Brown et al., 2022, for further details on our approach to nudges).
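Stages 3 and 4 of the process above amount to a set difference between the enrolled cohort and the students recorded as having accessed the resource. The Python sketch below illustrates that step under stated assumptions: the `Student` record, the `select_nudge_recipients` and `draft_nudge` helpers, the sample cohort, and the message wording are all hypothetical and do not represent the project's tooling or its nudge protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Student:
    # Hypothetical record; a real LMS export would carry more fields.
    student_id: str
    first_name: str


def select_nudge_recipients(enrolled, accessed_ids):
    """Return enrolled students with no recorded access to the key resource."""
    accessed = set(accessed_ids)
    return [s for s in enrolled if s.student_id not in accessed]


def draft_nudge(student, resource, week):
    """Build a short, personalized, encouraging message (illustrative wording)."""
    return (
        f"Hi {student.first_name}, as we move into Week {week} we noticed that "
        f"you have not yet had a chance to open '{resource}'. It connects directly "
        f"to your upcoming assessment, and the teaching team is here to help."
    )


# Invented sample data: three enrolled students, one of whom has accessed the resource.
cohort = [Student("s1", "Ana"), Student("s2", "Ben"), Student("s3", "Chen")]
recipients = select_nudge_recipients(cohort, accessed_ids={"s2"})
messages = [draft_nudge(s, "Module 1 quiz", week=3) for s in recipients]
```

Only students without recorded access receive a message, which keeps the nudge targeted, in contrast to the whole-cohort promotion in stage 2.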

Course-specific learning analytics data (CLAD) were collected every week to measure initial student engagement, to observe students’ access to each of the targeted resources, and to gauge the nudge intervention's impact on student engagement. Tracking whether students accessed online resources (such as students’ clicking on an assessment e-book, lecture recording, or online study module) was a decision made by the researchers early in the study as a way of observing learning behavior (Dixon, 2015). However, at the time of conducting this research, there was limited research in either the LA field or the student engagement literature to guide us on how to identify or determine authentic levels of engagement with key online resources at a course or subject level, so we were very much breaking new ground, including in the associated terminology. As experienced practitioners, we were aware that students who observe (or view, read, or listen to) new information then need to process and interpret that information before they personalize, contextualize, and apply it. We were also aware that others in the field were reinforcing that data regarding student access can help inform understandings of student involvement (Soffer & Cohen, 2019).

Access to the resources in the online courses was always available to the students. However, what was being captured in the CLAD was evidence of students’ actual choice to access the specified resource. While it is acknowledged that students accessing online course materials does not necessarily guarantee meaningful engagement, students accessing learning materials is a factor that affects achievement (Soffer & Cohen, 2019). Further, students’ decision-making in accessing a specific online course resource not only reflects the value they have placed on this resource but arguably represents one type of evidence of their online engagement. Observational behaviors are a necessary but not a sufficient factor in engagement. However, they indicate the potential to be engaged (Dixon, 2015). Accessing resources is also recognized in the online engagement scale, which reflects the main ways in which students engage online (Krause & Coates, 2008).

For the purpose of this research, we recorded CLAD for each of the key resources at three different times, with two main types of data collected: the total number of students who had accessed a key resource, and the total number of students enrolled on the date the CLAD were accessed. For example, in Week 1 in a nursing course, 41.8% of students had accessed the promoted resource, and 43.1% had accessed the course but not the promoted resource. At that stage, we also observed that 15.1% had not accessed the course. The three points at which data were collected were as follows:

Initial promotion: CLAD recorded at the time the course educator provides the promoted communication for a particular resource or activity;

Post-promotion (pre-nudge): CLAD recorded 1 week after the key resource has been promoted, at the same time as the nudge is provided to nonengaged students;

Post-nudge: CLAD recorded 1 week after students had been nudged to access the resource.
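The two data types and three collection points described above can be represented as one small record per resource per collection point. The following Python sketch is illustrative only: the `Snapshot` class, its field names, and the counts in the example are assumptions made for this illustration, not the project's data model or data. It reproduces the kind of three-way breakdown reported for the nursing course (accessed the resource, accessed the course only, not accessed the course).

```python
from dataclasses import dataclass

# The three collection points named in the text.
SNAPSHOT_LABELS = ("initial promotion", "post-promotion (pre-nudge)", "post-nudge")


@dataclass(frozen=True)
class Snapshot:
    label: str               # one of SNAPSHOT_LABELS
    enrolled: int            # students enrolled on the date the data were accessed
    accessed_resource: int   # students who had accessed the key resource
    accessed_course: int     # students who had accessed the course at all

    def breakdown(self):
        """Return the percentage of the cohort in each access category."""
        if self.label not in SNAPSHOT_LABELS:
            raise ValueError(f"unknown collection point: {self.label}")
        if not 0 <= self.accessed_resource <= self.accessed_course <= self.enrolled:
            raise ValueError("counts must satisfy resource <= course <= enrolled")
        if self.enrolled == 0:
            raise ValueError("enrolled must be greater than zero")

        def pct(count):
            return round(100.0 * count / self.enrolled, 1)

        return {
            "accessed_resource": pct(self.accessed_resource),
            "course_only": pct(self.accessed_course - self.accessed_resource),
            "no_course_access": pct(self.enrolled - self.accessed_course),
        }


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
week1 = Snapshot("initial promotion", enrolled=200, accessed_resource=80, accessed_course=170)
week1_breakdown = week1.breakdown()
```

Recording the enrolled count with every snapshot matters because enrollment can change between collection points, so each percentage uses the denominator that applied on that date.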

We then undertook further analysis to determine if the observed changes were due to the nudge or other factors. We selected three courses for this review, and the results are presented in the findings. First, we selected resources that were not promoted or nudged (but were of equal importance to the course) from each course analyzed and compared the CLAD for each nudged and non-nudged resource (using the same periods specified above). Second, we collected and compared CLAD for the same nudged resources used in a previous semester of offer (and not nudged in that previous semester). These previous course offerings can be considered pseudocontrols, and interaction with the non-nudged resources can be compared with interaction with the nudged resources to evaluate the effect of nudging on student access.

Findings

An analysis of the CLAD across all courses and over both semesters, including comparisons between the pre- and post-nudge data for all the key resources that were nudged, is presented in Table 1. The table summarizes the average increase for each course in the percentage of students who accessed the nudged resources after a nudge was provided. This increase is derived by subtracting the percentage of the course cohort who had accessed the key resource before the nudge was given (recorded on the day the nudge was given, just before it was sent) from the percentage of students who had accessed the resource one week after the nudge was given. The larger increases observed in S2 signal successful refinement and strategic implementation of the nudge intervention in S2.
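The subtraction described above can be written out as a short worked example. The Python sketch below uses the pre- and post-nudge percentages reported for the two Education course resources in the findings; the function names are hypothetical, and the two-resource average it computes is for illustration only and is not claimed to reproduce the course average reported in Table 1.

```python
def percentage_point_increase(pre_nudge, post_nudge):
    """Post-nudge access minus pre-nudge access, in percentage points."""
    return round(post_nudge - pre_nudge, 1)


def average_increase(pairs):
    """Mean percentage-point increase over (pre, post) pairs for one course."""
    if not pairs:
        raise ValueError("at least one (pre, post) pair is required")
    increases = [post - pre for pre, post in pairs]
    return round(sum(increases) / len(increases), 1)


# (pre-nudge %, post-nudge %) for resource 1 and resource 2 in the Education course.
education = [(13.5, 33.3), (21.6, 43.6)]
per_resource = [percentage_point_increase(pre, post) for pre, post in education]
course_average = average_increase(education)
```

The result is a difference between two percentages of the same cohort, so it is best read as percentage points rather than as a relative percentage change.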

Table 1 presents the impact of the nudge intervention on each course with the average increase in the percentage of students who accessed the nudged resources after a nudge was provided. It could be argued that the slight increases observed in S1 are simply natural weekly increases that typically occur in student access to online resources as the semester progresses, rather than a result of any effort to nudge students to access those resources. However, the increases observed in S2 are much larger and therefore potentially exceed any such natural weekly increase, supporting the argument that the intervention was responsible for increasing student access to online resources in S2. This pattern is consistent across all the courses in which the intervention was implemented.

Each course displayed a relatively high average increase in student access in the week following a nudge for those resources for which the intervention was repeated in both S1 and S2 in the same course. The increases in student access to online resources in S2 were much higher than in S1. The next set of results further explores the increased impact of the nudge intervention. The resources that were nudged in three selected courses were analyzed in more depth by comparing student access to nudged resources against student access to resources that were not nudged. The three courses were selected from Engineering, Education, and Science. A summary of the key resources selected for further analysis in weekly CLAD access is presented in Table 2. Table 3 summarizes the comparison of the percentage of students who accessed a course resource in a year the resource was not nudged, and then in a year the resource was nudged. The timing of the promotions and nudges was consistent across courses during the final semester of usage (e.g., on a Monday for the promotions and a Friday for the nudge). The data used in Tables 2 and 3 are further explored within each course.

Table 2. Access to key resources for selected courses pre- and post-nudge.

Table 3. Comparison of the percentage of students who accessed a course resource in a year the resource was not nudged, and then in a year the resource was nudged.

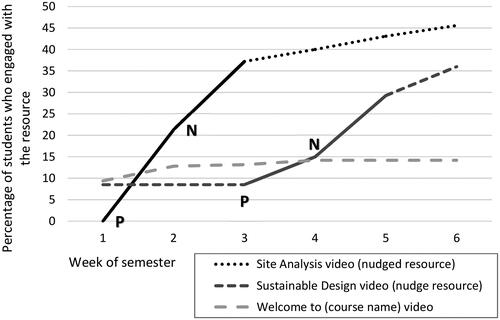

Engineering course

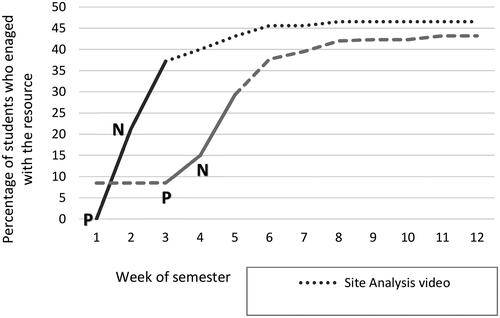

The Engineering course had an enrollment of 116 students in the semester the nudge intervention was implemented (S2). Two resources promoted to students early in the semester were short video clips titled Site Analysis (V1) and Sustainable Residential Design (V2). While neither video was linked to assessment, both were designed to connect theory learned in the course to real-life practice and thus demonstrate to students why certain theoretical elements were relevant and taught in the course. V1 was promoted to students in Week 1, and V2 was promoted in Week 3. One week after each resource had been promoted to students, 21.3% of the cohort had accessed V1, and 15% had accessed V2. A nudge was then sent to those students who had not accessed each respective resource. One week after the nudge was sent, total access had risen to 37.2% for V1 and 29.3% for V2, an improvement of 15.9 and approximately 14 percentage points, respectively, over the week (see Table 2). Figure 1 shows that the largest increase in access in any given week occurred in the weeks following the promotion and nudge for V1 and the week following the nudge for V2. The unbroken lines represent the weeks of promotion and nudge, and the broken line is outside the promotion and nudge times. P represents the time of promotion, and N represents the time of the nudge.

To provide further evidence of the effect of nudging, we compared the CLAD for the nudged resources against the CLAD for a non-nudged item. A similar video-based resource provided to students in the Engineering course was a Welcome to (course name) video. This video was used to orient students to the course and communicate course expectations during the first weeks of the semester. While available to students as part of the course’s Getting Started section on StudyDesk, this resource was not promoted through the nudge intervention. Figure 2 compares the student access data for the video resources promoted to students using the nudge strategy against the video resource that was not promoted. The figure demonstrates the much higher levels of student access to the resources targeted by the nudge intervention. The unbroken lines represent the weeks of promotion and nudge, and a broken line is outside the promotion and nudge times. P represents the time of promotion, and N represents the time of the nudge.

Figure 2. Comparison of student online access for similar types of resources during a single semester.

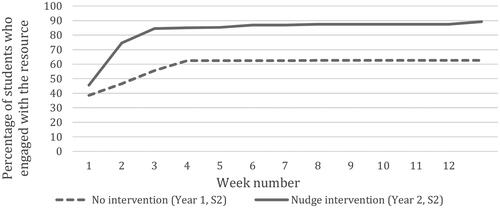

A resource used in successive iterations of the Engineering course was Weekly Task (Week 1). Figure 3 compares two iterations of this course: in the first iteration (Year 1, semester 1, n = 109), students did not receive a nudge intervention; in the second iteration (Year 2, semester 2, n = 116), the students received the intervention. In the second iteration, students who had not accessed the resource were sent a nudge communication on the Friday of Week 1. The graph highlights the much higher level of student access to the online resource in the year the intervention was implemented.

Education course

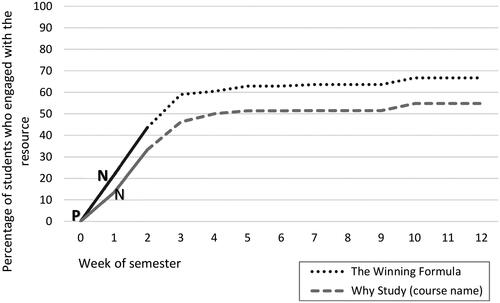

The Education course had an enrollment of 35 students in the semester the intervention was implemented (S2). Two resources deemed critical to orienting students and setting expectations for the course were The Winning Formula (resource 1) and Why Study (course name) (resource 2). Both resources were promoted to students via course announcements in Orientation Week (the week before the official start of the semester). One week after the promotion, 13.5% of the students had accessed resource 1, and 21.6% had accessed resource 2, and a nudge was sent to those students who had not accessed either of the resources. One week after the nudge was sent, access had risen to 33.3% for resource 1 and 43.6% for resource 2, an improvement of 19.8 and 22 percentage points, respectively, over the week. Figure 4 shows that the largest increase in access in any given week occurred in the weeks following the promotion and nudge for both resources. The unbroken lines represent the weeks of promotion and nudge, and a broken line is outside the promotion and nudge times. P represents the time of promotion, and N represents the time of the nudge.

While the Why Study (course name) resource was a new resource and therefore not used in any previous iterations of the course, the Winning Formula resource had been previously used. Figure 5 compares two iterations of this course: in the first iteration (Year 1, semester 1, n = 87), students did not receive a nudge intervention; in the second iteration (Year 2, semester 2, n = 35), the students received the intervention. One week after the promotion, those students who had not accessed the resource were sent a nudge. The graph highlights the much higher level of student engagement with the Winning Formula resource in the year the intervention was implemented.

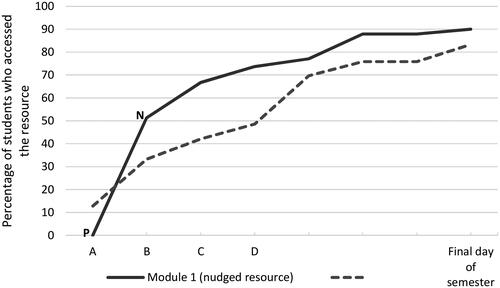

Data from two course study guides were tracked to further highlight the effect of the nudge intervention within the Education course. While the Module 1 Study Guide resource was promoted to students using the nudge strategy in the Education course, the Module 2 Study Support resource was not. For the Module 1 resource, students who had not engaged with the resource were sent a nudge communication on the Monday of Week 2 (point B on the graph in Figure 6). As illustrated in Figure 6, the promotion and follow-up nudge of the resource led to a higher percentage of students accessing the resource in the weeks after it was scheduled as an item of study compared to the resource that was not promoted and nudged. Unlike the other figures, which show weekly data, this figure uses a normalized timeline to compare the nudged and non-nudged resources in the same semester, because the resources were delivered at staggered times: A represents Monday of the week students were expected to access the resource, B is 1 week later, C is 2 weeks later, and D is 3 weeks after students had been expected to access the resource.

Figure 6. Comparing student online access for similar resources used during a single semester with a normalized timeline.

At the end of the semester, the resource that was nudged continued to record a higher percentage of student access than the resource that was not nudged. While we acknowledge that differences in student engagement with these resources could be a result of student motivation being generally higher at the beginning of the semester, both modules were presented early in the semester (in the first 2 to 3 weeks). This, therefore, provides some evidence of the difference a nudge can make in prompting students to access key online resources.

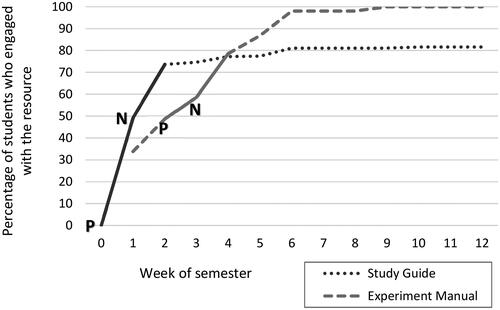

Science course

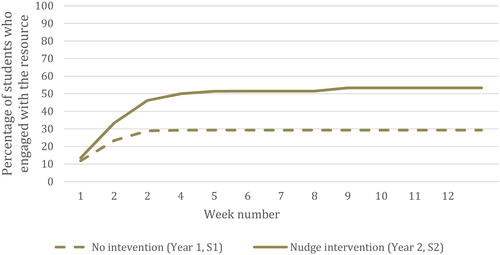

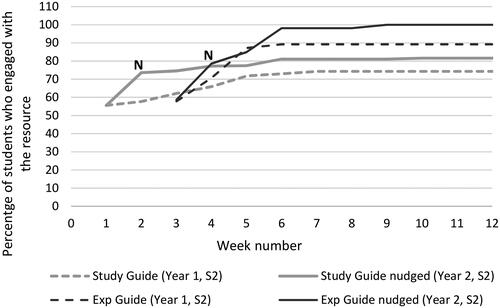

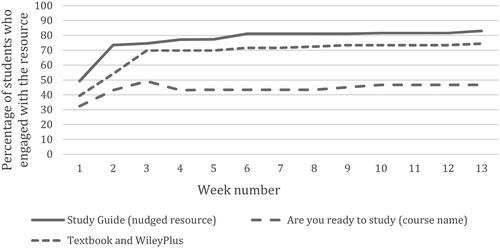

The Science (Physics content) course had an enrollment of 54 students in the semester the intervention was implemented (S2). A resource deemed critical to orienting students to the course and setting expectations was the Study Guide. This resource was promoted to students via a course announcement in Orientation Week. Another resource that was important for supporting students in the course's practical elements was the Experiment Manual. This resource was promoted to students via a course announcement in Week 1. One week after each of the resources had been promoted to students, 49.3% of the students had accessed the Study Guide, and 58.7% had accessed the Experiment Manual. A nudge was sent to those students who had not yet accessed each respective resource. One week after the nudge was sent, 73.6% of the cohort had accessed the Study Guide, and 78.6% had accessed the Experiment Manual, an improvement of 24.3 and 19.9 percentage points, respectively, over the week. While 48.6% of students had already accessed the Experiment Manual before it was promoted, it was an important resource for students’ learning early in the semester. Therefore, it was targeted for the nudge intervention. Figure 7 shows that the largest increase in access in any given week occurred in the weeks following the promotion and nudge for both resources. The unbroken lines represent the weeks of promotion and nudge, and a broken line is outside the promotion and nudge times. P represents the time of promotion, and N represents the time of the nudge.

The Study Guide and Experiment Manual resources were also used in successive iterations of the Physics course and, therefore, can further highlight the impact that the nudge strategy had on student access to key course resources. Figure 8 compares two iterations of the Physics course: in the first iteration (Year 1, semester 2, n = 37), students did not receive a nudge intervention; in the second iteration (Year 2, semester 2, n = 54), students received the intervention. While the importance of the resource was promoted to students during both iterations of the course (Years 1 and 2), students received a nudge to prompt access only in the second iteration of the course (Year 2). The first point on the graphs in Figure 8 (Friday Week 1 for the Study Guide; Friday Week 3 for the Experiment Manual) represents a time at which students had only received a general promotion, that is, a course announcement type of communication about the importance of the resource to their learning in the course. For both iterations, a similar percentage of students had engaged with the resources at this time. During the second iteration of the course (Year 2), students received a nudge emphasizing the importance of the resource to their success in the course. One week after the nudge (Friday Week 2 for the Study Guide; Friday Week 4 for the Experiment Manual), a greater percentage of students had accessed the resource in the year the nudge intervention was implemented (73.6% compared to 57.7% for the Study Guide; 78.6% compared to 70.7% for the Experiment Manual). At the end of the semester, more students had engaged with both resources when the nudge intervention was implemented (84.8% compared to 74.3% for the Study Guide; 100% compared to 91.4% for the Experiment Manual). N represents the time of the nudge.
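The pseudocontrol comparison above reduces to a difference, at matched time points, between the nudged and non-nudged iterations. The Python sketch below tabulates those gaps from the percentages reported in this paragraph; the dictionary layout, the time-point labels, and the `access_gaps` helper are hypothetical and are introduced only for this illustration.

```python
# Access (%) reported for the Physics course, keyed by (resource, time point).
# Each value is (non-nudged iteration, nudged iteration).
science_access = {
    ("Study Guide", "one week after nudge point"): (57.7, 73.6),
    ("Experiment Manual", "one week after nudge point"): (70.7, 78.6),
    ("Study Guide", "end of semester"): (74.3, 84.8),
    ("Experiment Manual", "end of semester"): (91.4, 100.0),
}


def access_gaps(access):
    """Return nudged minus non-nudged access, in percentage points, per key."""
    return {
        key: round(nudged - control, 1)
        for key, (control, nudged) in access.items()
    }


gaps = access_gaps(science_access)
```

Because the two iterations had different cohorts (n = 37 and n = 54), these gaps are descriptive comparisons against a pseudocontrol and should not be read as estimates from a randomized design.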

Two other video resources, titled Are you ready to study (course name) and Where and How to access Textbook Resources, were available to students during the semester of the nudge intervention but not nudged. The first provided students with information about the course, what it entailed (such as study load), and related course expectations and commitments. The second provided students with details about course resources, where to find them, and how to obtain them. Comparing these two study-support type resources, which were not nudged, against a study-support type resource that was nudged (the Study Guide) further highlights the impact that the nudge intervention had on student access to key online course resources. Figure 9 shows the student engagement statistics for these three resources. It highlights the much higher levels of student access to the resource that was nudged compared to those resources that were not nudged. N represents the time of the nudge.

Discussion

In S1, there were numerous occurrences where several resources were nudged in the same week, with many students who had not engaged with these resources receiving a separate nudge for each resource in the same week (multiple nudges). When the CLAD were analyzed for students’ responses to those nudges, we noted that while the first nudge was often effective in prompting an overall increase in student access to the nudged resource (measured by the percentage of the cohort who had accessed the resource), subsequent nudges led to only minor increases in overall access. We also observed in S1 that too many nudges over the semester or nudging multiple resources in a single week led to some students feeling overwhelmed or complaining they received too many communications from their educators. These types of communications were perceived to be closer to a nag than a nudge. In this study, a nag is defined as the overuse of a particular communication technique (Brown et al., 2018), such as nudging, or where the language adopted for a nudge is framed within a deficit discourse, adopts a discouraging tone, or is punitive, restrictive, or coercive.

Although the concept of a nudge appears to be straightforward, designing and applying nudges in practice can be confusing (Selinger & Whyte, Citation2011). If a nudge strategy is not well planned in advance, there is the risk that nudges will become nags, particularly, as found by the authors of this paper, if the nudges become too common or if the educator does not consider with whom they are communicating. Given this, a well-timed and well-crafted nudge written for a specific student or cohort of students in a course, with a particular purpose or intent, can increase student access to key course materials without becoming a nag (Lawrence et al., Citation2019).

Evidence of this is provided in this study where, in S2, when the intervention was implemented in a more targeted manner, the response to the intervention led to increases in student access to key resources in the weeks following promotion and nudge communications. For example, through the implementation and refinement of the intervention over multiple semesters, we found that nudges were more effective when they followed a promotion of a key resource and were limited in number: a maximum of 5 or 6 key resources (1 per week) should be promoted and then nudged over the early weeks of the semester. This study demonstrates that interventions that are student-centered and targeted to individual student needs are more effective.

Using these S1 reflections, the intervention was refined in S2 with a more targeted approach, including developing guidelines for the research and teaching teams. The guidelines specified the recommended number of resources to promote and then nudge, the number of times each resource should be nudged, and the proposed time to schedule the nudge (the central nudging regime occurred early in the semester). These guidelines were developed to strategically promote a key resource or activity and maximize student engagement, particularly for low- and nonengaged students, and to address the need to minimize perceived nagging.

The analysis of the CLAD across all courses and over both semesters indicated that the intervention was effective in eliciting increased student access to online resources. Specifically, two comparisons show that nudging increased student access: student access to nudged resources against non-nudged resources in the same semester, and access to the same resource when it was nudged in one semester and not nudged in another. This supports the general proposition that behavioral interventions, such as a nudge, can increase access to course resources. In addition, the findings support the suggestion by Blumenstein et al. (Citation2019) that the use of LA tools that focus on capturing and acting on timely and meaningful data about students’ engagement and success can be effectively harnessed to make a difference; however, whether this directly impacted student outcomes was not measured in this paper.
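
The comparison described above rests on a simple measure: the percentage of the cohort who accessed a resource. The following Python fragment is a minimal sketch of how such a comparison might be computed. It is not the analysis pipeline used in this study. The function names, the data layout (a mapping from resource name to the set of student identifiers who accessed it), and all values are hypothetical and chosen only for illustration.

```python
from statistics import mean
from typing import Dict, Set


def access_rate(accessed: Set[str], cohort: Set[str]) -> float:
    """Percentage of the cohort that accessed a resource at least once."""
    if not cohort:
        raise ValueError("cohort must contain at least one student")
    # Only count students who are actually enrolled in the cohort.
    return 100.0 * len(accessed & cohort) / len(cohort)


def compare_access(
    access_log: Dict[str, Set[str]], cohort: Set[str], nudged: Set[str]
) -> Dict[str, float]:
    """Mean access rate for nudged versus non-nudged resources."""
    rates = {name: access_rate(ids, cohort) for name, ids in access_log.items()}
    nudged_rates = [rate for name, rate in rates.items() if name in nudged]
    other_rates = [rate for name, rate in rates.items() if name not in nudged]
    if not nudged_rates or not other_rates:
        raise ValueError("need at least one nudged and one non-nudged resource")
    return {
        "nudged": mean(nudged_rates),
        "non_nudged": mean(other_rates),
        "difference": mean(nudged_rates) - mean(other_rates),
    }


# Hypothetical data: a cohort of 10 students and three resources.
# These values are invented for illustration and are not study results.
cohort = {f"s{i}" for i in range(1, 11)}
access_log = {
    "nudged_resource": {f"s{i}" for i in range(1, 9)},  # 8 of 10 students
    "non_nudged_a": {"s1", "s2", "s3"},                  # 3 of 10 students
    "non_nudged_b": {"s1", "s4"},                        # 2 of 10 students
}

summary = compare_access(access_log, cohort, nudged={"nudged_resource"})
print(summary)
```

A measure of this kind counts only whether a student opened a resource at least once, so it says nothing about how deeply the student engaged with it.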

It is possible that the increases in access witnessed through an analysis of the CLAD were just clicks that reflect students’ skimming of key resources that were promoted, rather than students taking the time to read and consider each one. As previously stated, these data do not distinguish the quality or type of engagement with key resources (Redmond et al., Citation2018), simply the behavior of students accessing a resource. Further, while such data are not necessarily indicative of engagement with the content, they indicate access and, therefore, reflect a broad interpretation of engagement. Therefore, the data indicate the percentage of students engaging in observational behaviors, which may then lead to a deeper level of engagement behavior (Stone, Citation2016).

The findings also provide further insight into the use of CLAD in influencing student engagement. The use of CLAD to influence student behavior across a range of courses supports existing research by extending its application across a range of learning contexts (Nelson et al., Citation2012). The intervention, applied to 11 courses across four disciplines, demonstrates a practice-orientated approach. To date, the lack of such an approach has been a criticism of current research in this area (Blumenstein et al., Citation2019; Gašević et al., Citation2019).

Conclusion and implications

To this point, limited research has explored the combination of using CLA and nudge theory to encourage student engagement (Brown et al., Citation2022). This study has supplemented the research by finding that the strategic use of nudges, guided by a plan, can be a practical approach for motivating students to access key online resources. This plan should include consideration of what to nudge, when to nudge, who to nudge, and how to nudge.

This study provides some implications for practitioners across many disciplines. Firstly, teaching teams can harness CLA to support online learning by motivating students to access key course materials through nudges. Secondly, nudges can be developed to enhance chances of student success early in the semester, as early access to and engagement with course resources play a significant role in improving student success and learning outcomes (Redmond et al., Citation2018; Stone, Citation2016). Interventions that increase early access to online resources are essential to addressing the ongoing challenges related to retention and student outcomes.

This study shows that when implemented in a planned and strategic manner, nudging offers a promising opportunity to redirect students’ decisions and thus contribute to those students’ success. However, we acknowledge that the increases in access witnessed through current CLAD could have been clicks and skimming of the promoted resources, rather than students taking the time to absorb and consider each resource at a deeper cognitive level. It would, therefore, be useful to continue to compare data over multiple semesters and experiment with nudging different resources to determine whether student access to a specific resource changes with the particular nudging intervention. In addition, as LA become capable of more than just counting clicks, the nature of the engagement and subsequent effect on student outcomes can be further scrutinized.

While we were able to provide some comparisons of resources used across multiple iterations of a course and which were nudged in one iteration but not in another, collating the data on a larger number of purposefully implemented examples would strengthen the evidence related to the impact of nudging. Indeed, the pedagogical use of nudges as a form of communication is still in its infancy. As such, there remain many opportunities for further refinements and learnings in this space. Furthermore, this study did not consider other communications with students, either by lecturers in other courses or by learning outside the disciplines. These communications can confound any slide from nudge to nag. Therefore, future nudging interventions need to consider the total communication to students to ensure they are timely, considered and focused.

As with all research, there are limitations to the study. This is a case study taken from one regional university, which means there is a small sample size. Therefore, the findings cannot be generalized to the wider population in HE. However, this is somewhat overcome by the multidisciplinary approach to the research. We hope that the detail of the method provides the ability for others to replicate the study in other contexts.

Rapid advances in LA and related data mining techniques mean we have access to tools that will enable us to understand the nature of engagement further. Building on this research, as technology changes the nature of how education and information are accessed by learners and as a greater percentage of HE learning content moves online, research that aims to support effective online learning becomes increasingly pertinent.

Acknowledgements

This work was supported by the University of Southern Queensland Office for the Advancement of Learning & Teaching under a Learning and Teaching Commissioned Project.

Disclosure statement

No potential conflict of interest was declared by the author(s).

Additional information

Notes on contributors

Alice Brown

Alice Brown is an Associate Professor in the School of Education at the University of Southern Queensland. Her research interests include online engagement in HE, student nonengagement, and nudging. Her scholarly work is widely published, and she is lead author of the HERDSA Guide Enhancing Online Engaging in HE (in press).

Jill Lawrence

Jill Lawrence is head of the School of Humanities and Communication and a professor at the University of Southern Queensland. Her research interests include student engagement, the first-year experience, and cross-cultural communication. She was awarded a national teaching citation and a national award for university teaching (Humanities and the Arts).

Megan Axelsen

Megan Axelsen works as a project manager at the University of Southern Queensland. She manages the implementation of strategic and learning and teaching-based projects aimed at improving student engagement and university outcomes.

Petrea Redmond

Petrea Redmond is a professor of digital pedagogies in the School of Education at the University of Southern Queensland. Her research is situated in interrelated fields of educational technology, including improving learning experiences through formal and informal technology-enhanced learning,

Joanna Turner

Joanna Turner is a senior lecturer (Physics) in the Faculty of Health, Engineering and Sciences at the University of Southern Queensland. Her research area includes promoting STEM fields to students through the use of cyanotype media to explain how UV radiation influences our everyday lives.

Suzanne Maloney

Suzanne Maloney is the finance discipline lead and an associate professor in the School of Business at the University of Southern Queensland. Her research interests include superannuation, retirement planning, and finance and accounting education.

Linda Galligan

Linda Galligan is a professor and head of the School of Mathematics, Physics and Computing at the University of Southern Queensland. Her research interests include first-year mathematics and the use of technology (tablet PCs and mobile devices) to enhance mathematics teaching and learning.

References

- Blumenstein, M., Liu, D. Y. T., Richards, D., Leichtweis, S., & Stephens, J. M. (2019). Data-informed nudges for student engagement and success. In J. M. Lodge, J. C. Horvath, & L. Corrin (Eds.), Learning analytics in the classroom: Translating learning analytics research for teachers (pp. 185–207). Routledge. https://doi.org/10.4324/9781351113038-12

- Brown, A., Lawrence, J., Basson, M., Axelsen, M., Redmond, P., Turner, J., Maloney, S., & Galligan, L. (2022). The creation of a nudging protocol to support online student engagement in higher education. Active Learning in Higher Education. https://doi.org/10.1177/14697874211039077

- Brown, A., Redmond, P., Basson, M., & Lawrence, J. (2018, September 25). To ‘nudge’ or to ‘nag’: A communication approach for ‘nudging’ online engagement in higher education [Paper presentation]. The 8th Annual International Conference on Education and e-Learning, Singapore.

- Buchs, C., Gilles, I., Antonietti, J.-P., & Butera, F. (2016). Why students need to be prepared to cooperate: A cooperative nudge in statistics learning at university. Educational Psychology, 36(5), 956–974. https://doi.org/10.1080/01443410.2015.1075963

- Castleman, B. L., & Page, L. C. (2015). Summer nudging: Can personalized text messages and peer mentor outreach increase college going among low-income high school graduates? Journal of Economic Behavior & Organization, 115, 144–160. https://doi.org/10.1016/j.jebo.2014.12.008

- Castleman, B. L., & Page, L. C. (2016). Freshman year financial aid nudges: An experiment to increase FAFSA renewal and college persistence. Journal of Human Resources, 51(2), 389–415. https://doi.org/10.3368/jhr.51.2.0614-6458R

- Damgaard, M. T., & Nielsen, H. S. (2020). Behavioral economics and nudging in education: Evidence from the field. In S. Bradley & C. Green (Eds.), The economics of education: A comprehensive overview (2nd ed., pp. 21–35). Academic Press. https://doi.org/10.1016/B978-0-12-815391-8.00002-1

- Dixon, M. D. (2015). Measuring student engagement in the online course: The Online Student Engagement Scale (OSE). Online Learning, 19(4), 1–15. https://olj.onlinelearningconsortium.org/index.php/olj/article/view/561/165

- Feild, J. (2015). Improving student performance using nudge analytics. In O. C. Santos, C. Romero, M. Pechenizkiy, A. Merceron, P. Mitros, J. M. Luna, C. Mihaescu, P. Moreno, A. Hershkovitz, S. Ventura, & M. Desmarais (Eds.), Proceedings of the 8th International Conference on Educational Data Mining (pp. 464–467). International Educational Data Mining Society. https://www.educationaldatamining.org/EDM2015/proceedings/edm2015_proceedings.pdf

- Gašević, D., Tsai, Y.-S., Dawson, S., & Pardo, A. (2019). How do we start? An approach to learning analytics adoption in higher education. The International Journal of Information and Learning Technology, 36(4), 342–353. https://doi.org/10.1108/IJILT-02-2019-0024

- Joksimović, S., Kovanović, V., & Dawson, S. (2019). The journey of learning analytics. HERDSA Review of Higher Education, 6, 37–63. https://www.herdsa.org.au/herdsa-review-higher-education-vol-6/37-63

- Krause, K.-L., & Coates, H. (2008). Students’ engagement in first-year university. Assessment & Evaluation in Higher Education, 33(5), 493–505. https://doi.org/10.1080/02602930701698892

- Lawrence, J., Brown, A., Redmond, P., & Basson, M. (2019). Engaging the disengaged: Exploring the use of course-specific learning analytics and nudging to enhance online student engagement. Student Success, 10(2), 47–58. https://doi.org/10.5204/ssj.v10i2.1295

- Macfadyen, L. P., Lockyer, L., & Rienties, B. (2020). Learning design and learning analytics: Snapshot 2020. Journal of Learning Analytics, 7(3), 6–12. https://doi.org/10.18608/jla.2020.73.2

- Martin, F., & Bolliger, D. U. (2018). Engagement matters: Student perceptions on the importance of engagement strategies in the online learning environment. Online Learning, 22(1), 205–222. https://doi.org/10.24059/olj.v22i1.1092

- Means, B., Toyama, Y., Murphy, R., Bakia, M., & Jones, K. (2010). Evaluation of evidence based practices in online learning: A meta-analysis and review of online learning studies. U.S. Department of Education. https://shorturl.at/arAJQ

- Nelson, K., Quinn, C., Marrington, A., & Clarke, J. A. (2012). Good practice for enhancing the engagement and success of commencing students. Higher Education, 63(1), 83–96. https://doi.org/10.1007/s10734-011-9426-y

- O’Shea, S., Stone, C., & Delahunty, J. (2015). “I ‘feel’ like I am at university even though I am online.” Exploring how students narrate their engagement with higher education institutions in an online learning environment. Distance Education, 36(1), 41–58. https://doi.org/10.1080/01587919.2015.1019970

- Redmond, P., Heffernan, A., Abawi, L., Brown, A., & Henderson, R. (2018). An online engagement framework for higher education. Online Learning, 22(1), 183–204. https://doi.org/10.24059/olj.v22i1.1175

- Selinger, E., & Whyte, K. (2011). Is there a right way to nudge? The practice and ethics of choice architecture. Sociology Compass, 5(10), 923–935. https://doi.org/10.1111/j.1751-9020.2011.00413.x

- Siemens, G. (2019). Learning analytics and open, flexible, and distance learning. Distance Education, 40(3), 414–418. https://doi.org/10.1080/01587919.2019.1656153

- Smith, B. O., White, D. R., Kuzyk, P., & Tierney, J. E. (2017). Improved grade outcomes with an emailed “grade nudge”. The Journal of Economic Education, 49(1), 1–7. https://doi.org/10.1080/00220485.2017.1397570

- Soffer, T., & Cohen, A. (2019). Students’ engagement characteristics predict success and completion of online courses. Journal of Computer Assisted Learning, 35(3), 378–389. https://doi.org/10.1111/jcal.12340

- Stone, C. (2016). Opportunity through online learning: Improving student access, participation and success in higher education (Equity Fellowship Final Report). National Centre for Student Equity in Higher Education. https://shorturl.at/nrNP4

- Stone, C. (2019). Online learning in Australian higher education: Opportunities, challenges and transformations. Student Success, 10(2), 1–11. https://doi.org/10.5204/ssj.v10i2.1299

- Sulphey, M. M., & Alkahtani, N. S. (2018). Academic excellences of business graduates through nudging: Prospects in Saudi Arabia. International Journal of Innovation and Learning, 24(1), 98–114. https://doi.org/10.1504/IJIL.2018.092926

- Thaler, R. H., & Sunstein, C. R. (2008). Nudge: Improving decisions about health, wealth, and happiness. Penguin.

- Wildavsky, B. (2014). Nudge nation: A new way to prod students into and through college. In J. E. Lane (Ed.), Building a smarter university (pp. 143–158). State University of New York Press. https://doi.org/10.1080/13562517.2020.1748811

- You, J. W. (2016). Identifying significant indicators using LMS data to predict course achievement in online learning. The Internet and Higher Education, 29, 23–30. https://doi.org/10.1016/j.iheduc.2015.11.003