ABSTRACT

A typical practice of assessment in engineering studies, especially on large Bachelor level courses, is a final exam at the end of the course. This practice is problematic both in terms of learning and teaching, as it does not provide feedback on learning experience or student progress before the course is completed.

This study examines the gradual process of moving from a final exam towards continuous assessment that integrates the practices of summative and formative assessment. The aim is to understand how the changes affect student performance on the course over a period of four years. The changes were implemented on a large Bachelor level engineering course. The impact of adding practices of continuous assessment was analyzed in relation to the course pass rate, grade distribution, and student feedback.

The results show that replacing the final exam with weekly homework improved student performance. Some of the identified differences are statistically significant. Student feedback implies that moving towards continuous assessment had a positive impact on the learning experience. The results support increased use of continuous assessment in the assessment of student learning on large Bachelor level engineering courses.

1. Introduction

Discipline-specific traditions and limited resources for large class teaching in higher education continue to steer teachers towards using a final exam as the main assessment method (Hernández 2012; Elshorbagy and Schönwetter 2002). In engineering education, a final exam has often accounted for a significant share of the course grade (Cano 2011; Hall et al. 2007; Sanz-Pérez 2019; Simate and Woollacott 2017; Tormos et al. 2014) and has even been presented as a necessity for quality assurance (Boud 2010; Hall et al. 2007). The possibility of implementing assessment without a final exam has been considered so revolutionary that, for example, Felder and Brent (2016) do not present this possibility in their guide on teaching mathematics, science, and technology.

A final exam is a typical form of summative assessment that enables one-off measurement of learning for a large group of students (Brown, Bull, and Pendlebury 1997; Hernández 2012). However, an exam at the end of the course does not provide feedback on learning during the course, and thus often remains separate from the learning process (Beaumont, O’Doherty, and Shannon 2011). In addition, the use of a final exam as an assessment method differs significantly from the assessment and feedback practices in working life (Kearney and Perkins 2014; Villarroel et al. 2018). Lack of information about the learning process may weaken students’ commitment to completing the course work and, at worst, lead to dropping out of the course (Hall et al. 2007). For example, Lindberg-Sand and Olsson (2008) found that summative assessment of first-year electrical engineering students’ knowledge of engineering sciences in part causes the less successful students to change their field of study. The authors conclude that students’ struggle to pass the first-year engineering courses may be due to a distinction between the teaching process and the assessment practices rather than students’ lack of knowledge (Lindberg-Sand and Olsson 2008).

Previous studies have suggested moving away from summative measurement of learning towards more continuous and formative forms of assessment (Boud et al. 2018; Deneen and Boud 2014; Kearney and Perkins 2014). Continuous assessment typically combines the practices of formative and summative assessment. Formative assessment facilitates the learning process with timely feedback (Black et al. 2004; Crisp 2012), thus allowing both students and teachers to receive information on learning while the course is still ongoing (Hernández 2012; Yorke 2003). Continuous and formative assessment have been found to encourage students to commit to their studies, set more ambitious learning goals for themselves, and achieve better learning outcomes (Black et al. 2004; Nicol and Macfarlane-Dick 2006; Tuunila and Pulkkinen 2015).

To identify ways of leveraging the benefits of continuous and formative assessment in engineering education, this article examines the replacement of a final exam representing summative assessment with continuous and formative forms of assessment based on weekly homework assignments and timely feedback. The new assessment practices were implemented gradually on a large Bachelor level Thermodynamics and Heat Transfer course. The research question is: How does adding the practices of continuous assessment affect student performance on the course?

2. Theoretical framework

2.1. Continuous assessment to support learning

Formative assessment is based on a social constructivist approach, in which the students construct their own learning process based on the feedback received from their activities (e.g. Boud 2000; Prince and Felder 2006). It is often carried out continuously by increasing the number of subtasks to be assessed during the course and the amount of feedback provided on them (Felder and Brent 2016). As continuous and formative assessment is integrated in the learning process, it supports the achievement of learning objectives (Boud 2015; Boud and Soler 2016; Hernández 2012).

Previous studies state that assessment should not only have positive effects on learning during the studies but also beyond them (Boud and Falchikov 2006). Assessment practices should develop with society to meet the changing competence demands (Lindberg-Sand and Olsson 2008; Villarroel et al. 2018; 2019). As also noted in the Council of the European Union’s recommendation on key competences for lifelong learning (2018), practicing with versatile assessment methods during studies supports the development of working life skills (Boud et al. 2018; Villarroel et al. 2018; 2019). These skills include mastering one’s own learning process, which is needed for improving one’s performance (Boud et al. 2018) and balancing the workload by prioritising the most urgent tasks.

Mastering one’s own learning process is possible when the student understands the usability of feedback in learning (Hernández 2012; Yan and Brown 2017) and has sufficient opportunity to modify their performance based on it (Black et al. 2004; Boud 2000; Boud et al. 2018). Previous studies have found that timely information on the study progress may support students’ commitment to the course work (Hall et al. 2007) and improve their pass rate (Tuunila and Pulkkinen 2015). In the case of parallel courses, students can utilise information on their own study progress to detect when the minimum requirement for passing the course has been reached and the limited study time can be invested in other course work.

2.2. Continuous assessment in large classes

The use of continuous assessment in large class learning environments has been limited, as it is thought to require excessive time and effort from the teacher; the teacher must provide continuous feedback on the performance of each student and carry out numerous assessments during the course (Hernández 2012). As the number of students increases, the workload of an individual assessment and feedback round increases correspondingly, in which case it may not be possible to give timely feedback (Nicol and Macfarlane-Dick 2006; Yorke 2003). The quality of feedback may also suffer as the teacher's workload increases (Nicol and Macfarlane-Dick 2006).

Continuous assessment enables development of teaching during the course (Black et al. 2004; Nicol and Macfarlane-Dick 2006), as it allows identification of which topics are causing most difficulties for students to learn and adjustment of the content as well as the pace of subsequent lectures accordingly. However, teachers may have too great a workload to respond to the information received on learning. In this case, the new assignments to be assessed are unnecessarily burdensome for both the teacher and the students (Lindberg-Sand and Olsson 2008), which is likely to hinder their wellbeing. The scientific community’s general culture of assessment also affects the selection of teaching and assessment methods. It is feared that practices that deviate from the traditional assessment methods will cause resistance among both teachers and students (Deneen and Boud 2014). In fact, academic leadership plays a key role in creating an assessment culture that supports the teaching and learning process (Boud 2000; Lindberg-Sand and Olsson 2008).

Boud (2015) states that if continuous assessment places a burden on a teacher, it is not likely to be sustained over a long period of time. To become an established practice on a course, continuous assessment requires transferring some of the responsibility of assessment and providing feedback from the teacher to the students as well as other course personnel, such as the assistants (Boud and Soler 2016; Yorke 2003). In this case, the role of feedback and assessment will change from teacher driven practices to involvement of the students as active agents in the assessment process (Black et al. 2004; Boud 2015; Boud et al. 2018; Kearney and Perkins 2014). The role of engineering students in the development of assessment practices has traditionally been marginal (Lindberg-Sand and Olsson 2008).

Large class learning environments with a low teacher-student ratio have been found to hinder students’ commitment to their studies (Hall et al. 2007; Hornby and Osman 2014). Villarroel et al. (2019) suggest that supporting the commitment of students in a large class setting can be done by replacing merely theoretical assignments with assignments that imitate real-life problems. When assessment is based on assignments that combine theory with practice, it prepares students for working life (Gulikers, Bastiaens, and Kirschner 2004; Villarroel et al. 2018; 2019) and improves the learning outcomes (Kearney and Perkins 2014; Kearney, Perkins, and Kennedy-Clark 2016). These assignments often enable group work, which reduces the number of individual assignments to be assessed (Felder and Brent 2016). Instead of adding more assessment methods on a course, it is recommended to replace the old assessment practices with entirely new ones (Boud 2000).

Implementation of continuous assessment without a final exam has previously been studied in the context of Master's degree studies (Clavert and Paloposki 2015), but there is limited research available on the impact of continuous assessment without a final exam on large Bachelor's degree courses. One of the few studies available concerns the University of Porto, where Soeiro and Cabral (2004) found that the pass rate and grades for an engineering Bachelor level course improved after applying continuous assessment without a final exam, and that the students also gained competences for lifelong learning. This study aims to enrich the limited body of previous research by describing a gradual stepwise process of moving towards continuous assessment on a large class Bachelor level engineering course.

3. Methodology

3.1. Context of the study

The Thermodynamics and Heat Transfer course is part of the Bachelor's degree programme at Aalto University School of Engineering. A course is defined as regularly scheduled class sessions of typically one to five hours per week during a semester (Glossary of the U.S. Educational System). The 12-week course is organised every fall semester. The workload is five credits according to the European Credit Transfer and Accumulation System, in which one credit equals approximately 27 h of study. The learning objectives pertain to understanding the basics of thermodynamics and heat transfer. The assessment focuses on the achievement of the learning objectives. Grades are given on a scale from 0 to 5, with 0 being fail and 1–5 being passing grades. A degree may be awarded with honours if the weighted average grade of the courses and the grade for the thesis are both at least 4.0.

The weekly teaching and learning activities consist of a lecture, two workshop sessions and homework assignments. The lectures combine basic theory of thermodynamics and heat transfer with analyses of the design and performance of engines, thermal power plants, and heat pumps as well as the individual components of such systems. The workshop sessions provide support for the students as they learn to perform thermodynamics and heat transfer calculations. The homework assignments are based on problem-solving with data searching, design, and calculation tasks.

The course is mandatory for students of Energy and Environmental Technology, Mechanical Engineering, and Structural Engineering who generally complete the course during the second year of their studies. In addition, a few students from other Bachelor’s degree programmes of Aalto University, other Finnish universities, and the Open University occasionally take part in the course each year. In total, the number of students is around 300 each year.

The teaching staff consists of a teacher-in-charge, a main assistant and five to 10 course assistants. The teacher-in-charge is a professor or lecturer, the main assistant is typically an experienced teacher, and the course assistants may be members of the teaching personnel, postgraduate students, or more advanced degree programme students. A more detailed course description is provided in Appendix 1.

3.2. Gradual development of assessment practices

Until 2014, the course assessment was mainly based on an open-book final exam administered at the end of semester. Students were able to earn additional points by handing in weekly homework assignments which accounted for at most 25% of the final grade. The exact weight of the altogether 12 sets of homework varied yearly and was determined only after the final exam had been graded. Thus, when the students were doing the homework assignments, they could not be sure of whether their score of additional points was going to be sufficient for passing the course. Two to three make-up exams were arranged each year for students who dropped out of the course, failed the course, or wanted to improve their grade. The students could also complete the course with a make-up exam instead of the final exam.

The exam and homework problems typically included calculations and open-ended tasks of evaluating the meaning and reliability of the solutions. The calculations were assessed as being either ‘correct’ or ‘incorrect’, and the open-ended tasks were assessed based on the logic and clarity of reasoning. In 2014, grading was mainly based on the calculations, while in 2015–2017, the importance of open-ended tasks was increased up to 20% of total points.

In 2014, the pass rate of the course was relatively low. Out of the 272 registered students only 175 (64%) passed the course by taking the final exam at the end of the fall semester. Additionally, 25 students (9%) passed the course through make-up exams. The remaining 72 students either failed in the exams, dropped out, or did not attend the course at all. To obtain a passing grade 1, the minimum number of points from the final exam and homework was 11 out of 48 (23%), which cannot be considered to demonstrate good command of the course content.
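The 2014 percentages above follow directly from the reported counts. As a minimal sketch of that arithmetic (the variable and function names are illustrative only and not part of the study's materials):

```python
# Counts reported in the text for the 2014 course implementation.
registered = 272
passed_final_exam = 175
passed_makeup = 25

# Students who failed, dropped out, or never attended.
not_passed = registered - passed_final_exam - passed_makeup


def share(count, total):
    """Return count as a percentage of total."""
    return 100.0 * count / total


final_exam_rate = share(passed_final_exam, registered)
makeup_rate = share(passed_makeup, registered)
overall_rate = share(passed_final_exam + passed_makeup, registered)

print(f"passed via final exam: {final_exam_rate:.1f}%")
print(f"passed via make-up:    {makeup_rate:.1f}%")
print(f"passed overall:        {overall_rate:.1f}%")
print(f"did not pass:          {not_passed} students")
```

Rounded to whole percentages, the first two shares correspond to the 64% and 9% stated above.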

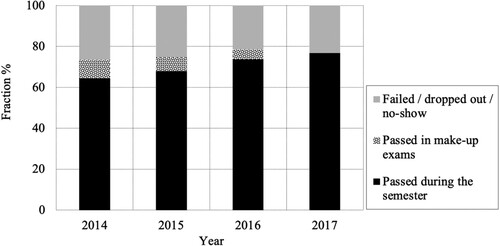

The development of assessment practices was implemented during a three-year period in 2015–2017 to improve students’ commitment to the course. The objectives were to reduce the dropout rate and the number of make-up exams required for passing the course as well as to improve students’ learning experience. Table 1 shows how the different components of assessment contributed to forming the final grade each year. Year 2014 represents the starting point for the gradual development process.

Table 1. Weighting of different components of assessment in grading in 2014–2017.

3.3. From a final exam to assessment based on homework and interim exams

In 2015, four changes were made to the course assessment: (1) increasing the weight of weekly homework in the assessment to 33%, (2) replacing the final exam with two interim exams covering only half of the course material with a weight of 33% each, (3) informing the students of the weight of different components used in the assessment before the course began, and (4) increasing the minimum acceptable level of student performance to 42% with the new minimum number of points for the lowest passing grade being 30 of 72. The make-up exams were still arranged, and in line with the former final exam, they required mastering the whole course material. Both the interim and make-up exams were open-book exams. The number of homework sets was decreased from 12 to 10 to allow students more time to prepare for the interim exams.

When the logic of converting the homework and exam points into a final grade was published before the course started, the students could compare their progress with their grade objective during the course. As the importance of open-ended problems in grading was also increased, the need for timely feedback was recognised and an explicit policy was adopted to complete the grading of the homework within a week of the deadline for students to hand in their solution. A decision was also made to augment the guiding effect of homework grading by including as many verbal comments as possible in the feedback. The comments were mostly suggestions for improvement related to evaluating the trustworthiness of the selected data sources, choosing equations that are applicable in the given problem setting (for example, to avoid using equations derived for constant volume processes when the problem deals with a constant pressure process), correcting errors in performing the calculations, and discussing the usability of the results obtained.

While the minimum number of points for the lowest passing grade increased, it was made explicit to the students that co-operation in solving the homework problems was encouraged. As the changes were observed to increase students’ commitment to completing the course, regardless of the higher minimum acceptable level of student performance, they were preserved in the course implementation in 2016.

3.4. Assessment based on weekly homework assignments and group work

Following the encouraging results of improved student commitment to the course when moving towards continuous assessment in 2015–2016, two more changes were made to the assessment practices in 2017: (1) the interim exams were removed, and the assessment was entirely based on 12 sets of weekly homework assignments, and (2) the new minimum number of points for the lowest passing grade was increased to 36 of 72 (50%). In addition, two of the homework assignments were modified to explicitly encourage group work, although the possibility of returning an individual solution was also retained. The group work assignments were supported with two workshops organised at Aalto University Design Factory (see e.g. Clavert and Laakso 2013), the facilities of which are designed to support collaboration better than the traditional lecture halls.
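The rising minimum level for the lowest passing grade can be summarised from the point limits given in Sections 3.2–3.4. The following sketch only restates those figures; the dictionary layout and function name are illustrative assumptions, and the 2016 entry assumes that the 2015 limits were carried over unchanged, as the text indicates.

```python
# (minimum points for grade 1, maximum points) as stated in the text.
pass_thresholds = {
    2014: (11, 48),
    2015: (30, 72),
    2016: (30, 72),  # assumption: 2015 practices were preserved in 2016
    2017: (36, 72),
}


def min_pass_fraction(year):
    """Return the minimum share of points needed for the lowest passing grade."""
    min_points, max_points = pass_thresholds[year]
    return min_points / max_points


for year in sorted(pass_thresholds):
    print(year, f"{100 * min_pass_fraction(year):.0f}%")
```

Rounded to whole percentages, the shares are 23%, 42%, 42% and 50%, matching the levels reported for each stage of the development.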

3.5. Collection and analysis of data

The data of this study includes the pass rates, scores, and grades attained by the students and the course feedback provided by them in 2014–2017. The pass rates, scores, and grades are based on the performance records maintained by the teaching staff, which cover the homework assignments, interim exams, final exams, and make-up exams. The material is used to examine the annual differences in the students’ study success. In addition, the correlation between the students’ success in their homework and interim exams is examined. The main method of analysis is the Pearson χ² test applied to contingency tables (e.g. Howell 2007). The test is chosen due to its applicability both to categorical data, such as the information on whether the students passed or failed the course, and to quantitative data, such as the course grades.
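To illustrate how a Pearson χ² statistic is obtained from a contingency table, a minimal pure-Python sketch is given below. It is not the authors' analysis script: the function name is an assumption, the first table row uses the 2014 counts reported in Section 3.2 (175 of 272 students passing within the target schedule), and the second row is hypothetical and included only to make the example runnable.

```python
def pearson_chi_square(table):
    """Return (statistic, degrees_of_freedom) for an r x c table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under the hypothesis that rows and columns are independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected

    dof = (len(table) - 1) * (len(col_totals) - 1)
    return statistic, dof


# Rows: course years; columns: [passed within schedule, did not].
# First row: 2014 counts from the text. Second row: HYPOTHETICAL counts.
table = [
    [175, 97],
    [220, 80],
]
statistic, dof = pearson_chi_square(table)
print(f"chi-square = {statistic:.2f}, degrees of freedom = {dof}")
```

The same function accepts tables with more columns, for example one column per grade, in which case the degrees of freedom grow as (rows − 1) × (columns − 1).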

Course feedback provided by the students was collected using an electronic survey system. The survey was carried out anonymously at the end of the course and included both numerical questions, such as an overall grade for the course, and open questions. As the numerical replies were typically justified in the answers to the open questions, only the latter ones were included in the analysis. The number of respondents varied annually and by question between 42 and 90 which covers 15–29% of the cohort. The feedback questions were:

What was good about the course and promoted learning?

What was lacking in the course and impeded learning?

In 2017, questions concerning changes that took place that year were added to the feedback survey: What promoted or impeded learning in the group work assignments organised at the Design Factory? How did replacing the final exam with weekly homework affect learning?

Open student feedback was grouped using qualitative content analysis (e.g. Graneheim, Lindgren, and Lundman 2017). The analysis focused on feedback on the weekly homework assignments. All references to homework assignments were identified from the written feedback material and analyzed inductively. The analysis primarily focused on positive and negative impacts of homework on students’ learning experience during the course. A single statement by an individual student expressing either positive or negative impact was defined as a unit of analysis. In addition, the frequency of statements with similar meaning was analyzed. To identify the effects of the gradual development of the assessment practices, the feedback was grouped by the year the course was taken.

This study focuses on the effects of continuous assessment on student performance on the course. The study does not examine factors related to the background of students, such as possible variations between their major subjects or previous study success. Moreover, the study does not examine the potential variation between first-time course participants and those re-doing the course.

4. Findings

Based on the data analysis, it appears that the stepwise process of replacing the final exam with weekly homework had a positive impact on the course pass rate, distribution of grades and student feedback. Some of the differences observed between 2014 and 2017 were statistically significant.

4.1. Changes in the course pass rates

During the research period 2014–2017, the fraction of students who passed the course increased steadily even though the minimum score required for the lowest passing grade increased from 23% in 2014 to 50% in 2017. The improvement of pass rates was statistically significant between 2014 and 2015 for those students who completed the course within the target schedule without a make-up exam (χ² = 13.2 with three degrees of freedom, P = 0.004). The resulting P value indicates that, had the pass rates remained the same between 2014 and 2015, the probability of obtaining a Pearson χ² test statistic at least this large would have been 0.4%. In 2014, the pass rate was 67.3%. When the final exam was replaced with two interim exams and the weight of homework was increased in 2015, the pass rate increased to 73.2%. The improvement of pass rates in 2015–2017 was not statistically significant (χ² = 6.2 with two degrees of freedom, P = 0.044).
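The P values quoted in this section can be related to the reported χ² statistics and degrees of freedom with a short calculation. The sketch below is an illustration rather than the authors' procedure: it evaluates the upper-tail probability of the χ² distribution using only the Python standard library, starting from the closed forms for one and two degrees of freedom and applying the standard recurrence of the regularised incomplete gamma function. The function name is an assumption.

```python
import math


def chi_square_p_value(statistic, dof):
    """Upper-tail probability of a chi-square variable with integer dof >= 1."""
    half = statistic / 2.0
    if dof % 2 == 1:
        k = 1
        p = math.erfc(math.sqrt(half))  # exact result for 1 degree of freedom
    else:
        k = 2
        p = math.exp(-half)  # exact result for 2 degrees of freedom
    # Recurrence: Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * exp(-x/2) / Gamma(k/2 + 1)
    while k < dof:
        p += half ** (k / 2.0) * math.exp(-half) / math.gamma(k / 2.0 + 1.0)
        k += 2
    return p


# (statistic, degrees of freedom) pairs as reported in Sections 4.1 and 4.2.
reported = [(13.2, 3), (6.2, 2), (58.5, 15), (11.1, 10)]
for statistic, dof in reported:
    p = chi_square_p_value(statistic, dof)
    print(f"chi-square = {statistic}, df = {dof}: P = {p:.4f}")
```

Because the published statistics are rounded to one decimal, the recomputed probabilities can differ slightly in the last digit from the P values given in the text.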

Figure 1 presents the fraction of students who completed the course within the target schedule, and the students who completed the course with a make-up exam. Each year, the fraction of students who failed the course mostly consisted of students who registered but did not attend the course, who dropped out immediately, or who struggled to meet the minimum acceptable level of performance from the beginning of the course. The opportunity for a make-up exam was available until 2016. The statistical analysis related to the evolution of the pass rate is presented in Tables A1, A2 and A3 of Appendix 2.

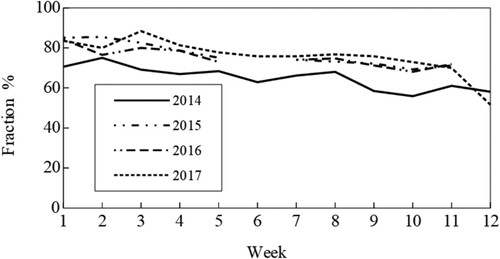

To shed light on the engagement of students during the course, the fraction of students who handed in their homework assignments in 2014–2017 was also examined (see Figure 2). As the weight of the homework and the minimum score required for the lowest passing grade increased in 2015–2017, the fraction of students handing in their homework solutions increased accordingly. The highest rates of handing in the solutions were observed in 2017.

Each year, students’ activity in handing in their homework solutions slowly declined towards the end of the semester; the decrease was largest in the last homework round in 2017. As there were only 10 sets of homework in 2015 and 2016, the graphs for those years in Figure 2 have a gap in the middle of the semester and also end earlier than the graphs for 2014 and 2017.

4.2. Changes in the distribution of grades

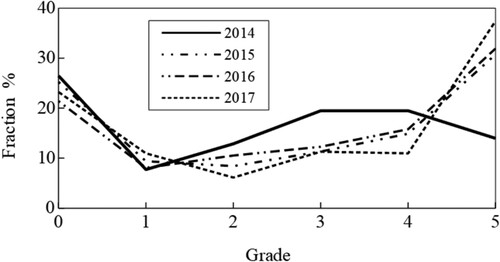

During the research period 2014–2017, the fraction of students with the highest passing grade increased even though the minimum score required for the lowest passing grade increased simultaneously. The evolution of grade distribution is presented in Figure 3. In 2014, the highest fraction of students attained grades 3 and 4. There was also a peak at grade 0 consisting of students who failed the course, dropped out, or registered but never participated, which is typical of all courses at Aalto University School of Engineering.

Figure 3. Evolution of grade distribution in 2014–2017. Students who passed the course in the make-up exams in 2014–2016 are included.

When compared with 2014, the distribution of grades in 2015–2017 was different, with the highest fraction of students attaining the highest passing grade 5. Between 2014 and 2015, the improvement was statistically significant (χ² = 58.5 with 15 degrees of freedom, P < 0.001). In the following years 2015–2017, the fraction of students with the highest passing grade continued to increase but the difference was no longer statistically significant (χ² = 11.1 with 10 degrees of freedom, P = 0.35). The fraction of students who failed the course with grade 0 decreased slightly in 2014–2017. The evolution of grade distribution suggests that students’ attainment of the learning objectives improved as the weight of the homework increased in the assessment.

Figure 3 includes students who completed the course within the target schedule and students who completed it with a make-up exam. The statistical analysis related to the distribution of grades is presented in Tables A4 and A5 of Appendix 2.

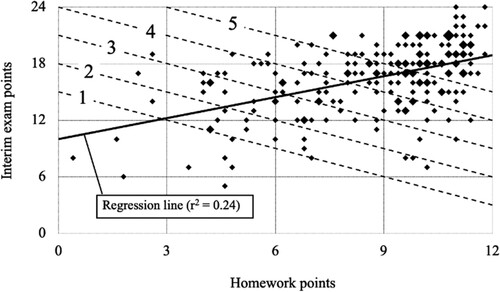

In addition to the grade distribution, correlation between students’ performance in the homework assignments and interim exams was examined. The analysis included only the 210 students who participated in both interim exams in Fall 2016. In Figure 4, each student is identified with a diamond shape. The size of the diamond is larger when more students have reached the same number of points. Grade limits (1 being the lowest passing grade and 5 the highest) are presented with a dashed line.

Figure 4. Correlation between interim exam and homework performance in Fall 2016. Only the students who participated in both interim exams are included.

Figure 4 suggests that the majority of the students who attained a high score from homework also scored high in the exams. The regression line showing the correlation between points from homework assignments and interim exams has an r² value of 0.24. The value of r² can be considered relatively high (Howell 2007), which implies that the exams and homework assignments measured the same thing. It also suggests that plagiarism was not a common issue in the homework-based assessment; when the exams were eliminated in 2017, there were only minor differences in the distribution of grades compared to 2016 when 66% of the assessment was still based on individual interim exam scores.
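For readers unfamiliar with the coefficient of determination, the sketch below shows how r² is computed for a simple linear regression of exam points on homework points. The function name and the six data pairs are hypothetical and chosen only for illustration; they are not the 2016 course records.

```python
def r_squared(xs, ys):
    """Coefficient of determination for a least-squares line fitted to (xs, ys)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # For simple linear regression, r^2 equals the squared Pearson correlation.
    return sxy ** 2 / (sxx * syy)


# HYPOTHETICAL (homework points, interim exam points) pairs, one per student.
homework = [10, 14, 18, 20, 22, 24]
exams = [20, 35, 18, 40, 30, 44]
print(f"r^2 = {r_squared(homework, exams):.2f}")
```

An r² of 0.24 means that about a quarter of the variance in exam points is accounted for by a linear relationship with homework points.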

4.3. Students’ experiences of the assessment

Based on the anonymous student feedback, moving towards continuous assessment had a positive impact on students’ experience of the course. Completing the course with weekly homework assignments instead of a final exam received significantly more positive than negative feedback from the students. The emphasis of student feedback related to assessment varied between 2014 and 2017 according to the stage of course development (Table 2).

Table 2. Division of feedback for the weekly homework assignments in 2014–2017 (number of mentions in the data).

In 2014, the assessment was based on weekly homework assignments and a final exam, and the student feedback highlighted both doing the homework assignments and receiving help from the course assistants as supportive of learning.

In 2015–2016, the assessment method changed from a final exam to weekly homework and interim exams, and the students’ attention was increasingly focused on the quality of the assignments. In the following quotation, a student who participated in the course in 2015 describes the usefulness of the homework assignments beyond the immediate course context: ‘The course prepared me for working life –. I've usually done poorly in physics at the university, but now my homework exercises have gone very well. I learned a lot and I believe that the information will be useful to me in the future.’

In 2017, interim exams were replaced with assessment based solely on homework and a separate question on assessment was added to the feedback survey. In addition, the instructions for two homework assignments were modified to encourage group work. Feedback related to continuous assessment increased significantly, and the importance of group work for learning was emphasised. Assessment based on weekly homework was deemed to balance the workload and ease pressure at the end of the semester. In the following quotation, a student who participated in the course in 2017 describes the effect of eliminating the exam on their experienced level of stress.

‘The elimination of the exam was a really good idea, as it reduces stress during exam week and makes it more humane to complete the course. This will remove possible obstacles to completing the course caused by delays, illness, and accidents. – – I believe that I will remember what I’ve learned during this course better than what I learned during a course that only had an exam, or an exam combined with a huge amount of weekly homework.’

5. Discussion

This study shows that moving towards continuous assessment improves students’ commitment to the course work. Improved commitment was manifested during the examined period 2014–2017, when the degree of feedback on learning during the course was gradually increased: the pass rate increased and students’ activity in handing in the homework assignments improved. These findings imply that increased information on one’s own study progress may facilitate students’ engagement with the course work and support their commitment to completing it. Tuunila and Pulkkinen (2015) have also found continuous assessment and weekly feedback to improve the pass rate on a Bachelor level technology course (see also Hall et al. 2007).

The findings suggest that moving towards continuous assessment may also support attainment of the learning objectives. When the final exam was eliminated and the weight of homework as well as the minimum score for passing the course were increased, the number of students with the highest grade increased accordingly. Previous studies have shown that integrating assessment with the teaching and learning process encourages students to achieve better learning outcomes (Black et al. 2004; Hernández 2012; Lindberg-Sand and Olsson 2008; Nicol and Macfarlane-Dick 2006). However, as pointed out by Richardson, Abraham, and Bond (2012), academic performance with its correlates is a complicated issue that often extends beyond grades and pass rates.

The resulting distribution of grades suggests a fundamental difference between the exam-based and homework-based forms of assessment. A Gaussian distribution of grades would have been expected in exam-based assessment, where the performance is influenced by multiple simultaneous factors. These factors include students’ knowledge of the subject matter but also their capability of memorising information, speed of exam execution, and ability to withstand the anxiety associated with the one-off exam situation. Assessment based on homework was found to diminish the number of factors influencing students’ performance by allowing more time for completing the assignments and enabling the use of external sources of information, such as the internet. Eliminating the exam may have also supported students’ learning by reducing the anxiety related to failing the exam (Richardson, Abraham, and Bond 2012) or not being able to attend it due to, for example, a bus strike, missed alarm, or sickness.

Practices of continuous assessment were highlighted in the student feedback as the key factors in balancing the increasing workload towards the end of semester. As the exams were eliminated and the weight of different components used in the assessment was published at the beginning of the course, the activity of some students was found to decrease towards the end of the semester. It may be that the practices of continuous and formative assessment allowed evaluating one’s own performance in terms of achieving individual learning objectives and adjusting one’s activity based on the feedback. Previous studies have highlighted timely feedback as a key factor guiding the regulation of one’s own learning process (Villarroel et al. 2018; Yan and Brown 2017). The ability to regulate one’s own workload and performance based on continuous feedback is also a relevant working life skill (Kearney and Perkins 2014; Boud et al. 2018).

Developing some of the individual homework assignments to encourage group work was considered supportive of learning in the student feedback. As collaboration is often required for solving real-life problems, these assignments are likely to have prepared the students for working life. While the exam assignments were based on limited study materials provided by the teacher, the homework assignments allowed searching and evaluation of data from all available sources before performing the calculations. Previous research has found that assessment based on assignments that imitate real-life problems supports the commitment of students in a large class setting (Villarroel et al. 2019), improves their learning outcomes (Kearney and Perkins 2014; Kearney, Perkins, and Kennedy-Clark 2016), and prepares them for working life (Gulikers, Bastiaens, and Kirschner 2004; Villarroel et al. 2018, 2019).

Based on the positive correlation between students’ interim exam and homework performance in 2016, it was concluded that these two assessment practices measured the same thing. Replacing summative exam-based assessment entirely with homework-based assessment reduced the teacher’s heavy workload related to arranging the exams. In addition, replacing some of the individual assignments with group work reduced the number of individual deliveries to be assessed by the teacher (see also Felder and Brent 2016). Moving towards continuous assessment also increased the transparency of the learning process for the teacher, provided information on the progress of the students, and enabled giving feedback when necessary. However, providing timely feedback was heavily dependent on the availability of competent teaching personnel on the course.
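As an illustration of the kind of comparison referred to above, the following minimal sketch computes a correlation coefficient between two sets of scores. It assumes the Pearson coefficient, which this section does not confirm as the measure used in the study, and the score lists are invented for illustration only; they are not data from the course.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two samples of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations from the means (unnormalised covariance).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical per-student points, invented for illustration (not study data).
exam_points = [12, 18, 25, 30, 34, 40]
homework_points = [20, 22, 31, 35, 33, 45]

r = pearson_r(exam_points, homework_points)
print(f"r = {r:.2f}")
```

A coefficient close to 1 would indicate that students who scored well in one form of assessment also tended to score well in the other. On its own, this supports but does not prove the conclusion that both forms measure the same underlying performance.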

In line with previous research (Boud 2000), this study suggests that continuous assessment should replace rather than supplement the old assessment practices. It also shows that transformation from summative to continuous assessment does require ensuring sufficient resources for teaching of large class engineering courses.

6. Limitations and development proposals

Although previous studies have been in nearly unanimous agreement on the benefits of continuous assessment for learning (e.g. Black et al. 2004; Hernández 2012), they have highlighted the difficulty in reforming assessment practices, especially on large courses (Deneen and Boud 2014; Nicol and Macfarlane-Dick 2006). Consequently, there have been limited examples of the practical implementation of continuous assessment on large engineering courses (Simate and Woollacott 2017; Tormos et al. 2014). Previous research tends to favour the use of a final exam as an important indicator of learning outcomes and a tool for ensuring the quality of teaching, even when continuous assessment is applied (Boud 2010; Hall et al. 2007). The results of this study encourage the use of continuous assessment without a final exam to support learning and commitment on large engineering courses, although the scope was limited to primarily numerical indicators of student performance.

Continuous assessment often allows students to achieve their individual grade objectives before the course ends. As the workload of parallel, exam-based courses increases towards the end of the semester, students are inclined to invest their limited study time in those courses that are assessed solely with a final exam rather than try to improve the grades obtained from continuous assessment. Prioritising courses with a final exam may hinder the positive effects of continuous assessment on balancing the workload, reducing study-related stress, and developing working life skills. Consequently, it is recommended to replace final exams with continuous assessment at the programme level rather than the course level. To support students’ ability to regulate their own learning process, teachers are encouraged to communicate to students how the final grade is formed before the course begins.

In the future, it will be important to identify the impact of different kinds of assessment tasks and feedback on the quality of learning as well as to examine how the teacher’s workload can be reduced by introducing formative self- and peer assessment alongside the assessment carried out by the teacher. Further studies are also required to identify the effects of continuous assessment based on real-life problems and group work assignments in a large class setting. To examine the wider impact of continuous assessment, research is called for that compares the outcomes of courses based solely on continuous and formative assessment with those of courses assessed predominantly with a final exam.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Additional information

Notes on contributors

Tuomas Paloposki

Dr. Tuomas Paloposki has extensive expertise in research and higher education in energy conversion, with a specific focus on combustion and gasification technologies. He was named Best International Teacher by Aalto University Student Union in 2011.

Viivi Virtanen

Dr. Viivi Virtanen works as Principal Research Scientist in the EDU research unit at Häme University of Applied Sciences. Her research interests include teaching and learning in higher education with specific interests in well-being as well as researcher education and careers, especially in STEM fields.

Maria Clavert

Dr. Maria Clavert has more than 10 years of experience in multidisciplinary research and development of engineering education and technology education at Aalto University. Her research interests include design-based learning and collaborative technological problem-solving.

References

- Beaumont, C., M. O’Doherty, and L. Shannon. 2011. “Reconceptualising Assessment Feedback: A Key to Improving Student Learning?” Studies in Higher Education 36: 671–687. https://doi.org/10.1080/03075071003731135.

- Biggs, J., and C. Tang. 2011. Teaching for Quality Learning at University (4th ed.). Society for Research into Higher Education. Open University Press.

- Black, P., C. Harrison, C. Lee, B. Marshall, and D. Wiliam. 2004. “Working Inside the Black box: Assessment for Learning in the Classroom.” Phi Delta Kappan 86 (1): 8–21. https://doi.org/10.1177/003172170408600105.

- Boud, D. 2000. “Sustainable Assessment: Rethinking Assessment for the Learning Society.” Studies in Continuing Education 22 (2): 151–167. https://doi.org/10.1080/713695728.

- Boud, D. and Associates. 2010. Assessment 2020: Seven Propositions for Assessment Reform in Higher Education. Australian Learning and Teaching Council. https://www.uts.edu.au/sites/default/files/Assessment-2020_propositions_final.pdf.

- Boud, D. 2015. “Feedback: Ensuring That it Leads to Enhanced Learning.” The Clinical Teacher 12 (1): 3–7. https://doi.org/10.1111/tct.12345.

- Boud, D., R. Ajjawi, P. Dawson, and J. Tai. 2018. Developing Evaluative Judgement in Higher Education. Assessment for Knowing and Producing Quality Work (1st ed.). Routledge.

- Boud, D., and N. Falchikov. 2006. “Aligning Assessment with Long-Term Learning.” Assessment & Evaluation in Higher Education 31 (4): 399–413. https://doi.org/10.1080/02602930600679050.

- Boud, D., and R. Soler. 2016. “Sustainable Assessment Revisited.” Assessment & Evaluation in Higher Education 41 (3): 400–413. https://doi.org/10.1080/02602938.2015.1018133.

- Brown, G., J. Bull, and M. Pendlebury. 1997. Assessing Student Learning in Higher Education. London: Routledge.

- Cano, M.-D. 2011. “Students’ Involvement in Continuous Assessment Methodologies: A Case Study for a Distributed Information Systems Course.” IEEE Transactions on Education 54 (3): 442–451. https://doi.org/10.1109/TE.2010.2073708.

- Clavert, M., and M. Laakso. 2013. “Implementing Design-Based Learning in Engineering Education at Aalto University Design Factory.” In Proceedings of the 41st SEFI Annual Conference 2013, “Engineering Education Fast Forward 1973 > 2013”, Belgium. https://www.sefi.be/wp-content/uploads/2017/10/39.pdf

- Clavert, M., and T. Paloposki. 2015. “Implementing Design-Based Learning in Teaching of Combustion and Gasification Technology.” International Journal of Engineering Education 31 (4): 1021–1032.

- Council of the European Union. 2018. “Recommendation on Key Competences for Lifelong Learning.” Official Journal of the European Union C 189. https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=CELEX:32018H0604(01)&from=EN.

- Crisp, G. 2012. “Integrative Assessment: Reframing Assessment Practice for Current and Future Learning.” Assessment & Evaluation in Higher Education 37 (1): 33–43. https://doi.org/10.1080/02602938.2010.494234.

- Deneen, C., and D. Boud. 2014. “Patterns of Resistance in Managing Assessment Change.” Assessment & Evaluation in Higher Education 39 (5): 577–591. https://doi.org/10.1080/02602938.2013.859654.

- Elshorbagy, A., and D. J. Schönwetter. 2002. “Engineer Morphing: Bridging the Gap Between Classroom Teaching and the Engineering Profession.” International Journal of Engineering Education 18 (3): 295–300.

- Felder, R., and R. Brent. 2016. Teaching and Learning STEM: A Practical Guide. Jossey-Bass.

- Glossary of the U.S. Educational System. 2023. https://educationusa.state.gov/experience-studying-usa/us-educational-system/glossary, retrieved on 1.11.2023.

- Graneheim, U., B.-M. Lindgren, and B. Lundman. 2017. “Methodological Challenges in Qualitative Content Analysis: A Discussion Paper.” Nurse Education Today 56: 29–34. https://doi.org/10.1016/j.nedt.2017.06.002.

- Gulikers, J., T. Bastiaens, and P. Kirschner. 2004. “A Five-Dimensional Framework for Authentic Assessment.” Educational Technology Research and Development 52 (3): 67–86. https://doi.org/10.1007/BF02504676.

- Hall, W., S. Palmer, C. Ferguson, and J. T. Jones. 2007. “Delivery and Assessment Strategies to Improve On- and Off-Campus Student Performance in Structural Mechanics.” International Journal of Mechanical Engineering Education 35: 272–284. https://doi.org/10.7227/IJMEE.35.4.2.

- Hernández, R. 2012. “Does Continuous Assessment in Higher Education Support Student Learning?” Higher Education 64 (4): 489–502. https://doi.org/10.1007/s10734-012-9506-7.

- Hornby, D., and R. Osman. 2014. “Massification in Higher Education: Large Classes and Student Learning.” Higher Education 67: 711–719. https://doi.org/10.1007/s10734-014-9733-1.

- Howell, D. 2007. Statistical Methods for Psychology. 6th ed. Thomson Higher Education.

- Kearney, S., and T. Perkins. 2014. “Engaging Students Through Assessment: The Success and Limitations of the ASPAL (Authentic Self and Peer Assessment for Learning) Model.” Journal of University Teaching and Learning Practice 11 (3): 4–18. https://doi.org/10.53761/1.11.3.2.

- Kearney, S., T. Perkins, and S. Kennedy-Clark. 2016. “Using Self- and Peer-Assessments for Summative Purposes: Analysing the Relative Validity of the AASL (Authentic Assessment for Sustainable Learning) Model.” Assessment & Evaluation in Higher Education 41 (6): 840–853. https://doi.org/10.1080/02602938.2015.1039484.

- Lindberg-Sand, Å, and T. Olsson. 2008. “Sustainable Assessment?” International Journal of Educational Research 47 (3): 165–174. https://doi.org/10.1016/j.ijer.2008.01.004.

- Nicol, D., and D. Macfarlane-Dick. 2006. “Formative Assessment and Self-Regulated Learning: A Model and Seven Principles of Good Feedback Practice.” Studies in Higher Education 31 (2): 199–218. https://doi.org/10.1080/03075070600572090.

- Pintrich, P. 2003. “A Motivational Science Perspective on the Role of Student Motivation in Learning and Teaching Contexts.” Journal of Educational Psychology 95 (4): 667–686. https://doi.org/10.1037/0022-0663.95.4.667.

- Prince, M., and R. Felder. 2006. “Inductive Teaching and Learning Methods: Definitions, Comparisons, and Research Bases.” Journal of Engineering Education 95 (2): 123–138. https://doi.org/10.1002/j.2168-9830.2006.tb00884.x.

- Richardson, M., C. Abraham, and R. Bond. 2012. “Psychological Correlates of University Students’ Academic Performance: A Systematic Review and Meta-Analysis.” Psychological Bulletin 138 (2): 353. https://doi.org/10.1037/a0026838.

- Sanz-Pérez, E. 2019. “Students’ Performance and Perceptions on Continuous Assessment. Redefining a Chemical Engineering Subject in the European Higher Education Area.” Education for Chemical Engineers 28: 13–24. https://doi.org/10.1016/j.ece.2019.01.004.

- Simate, G., and L. Woollacott. 2017. “An Investigation Into the Impact of Changes in Assessment Practice in a Mass Transfer Course.” In Proceedings of the Fourth Biennial Conference of the South African Society for Engineering Education, Cape Town, 277–283. https://open.uct.ac.za/bitstream/handle/11427/27592/Prince_4th%20Biennial%20SASEE%20Conference_2017.pdf?sequence=1#page=280

- Soeiro, A., and J. Cabral. 2004. “Engineering Students’ Assessment at University of Porto.” European Journal of Engineering Education 29 (2): 283–290. https://doi.org/10.1080/0304379032000157240.

- Tormos, M., H. Climent, P. Olmeda, and F. Arnau. 2014. “Use of New Methodologies for Students Assessment in Large Groups in Engineering Education.” Multidisciplinary Journal for Education, Social and Technological Sciences 1 (1): 121–134. https://doi.org/10.4995/muse.2014.2198.

- Tuunila, R., and M. Pulkkinen. 2015. “Effect of Continuous Assessment on Learning Outcomes on Two Chemical Engineering Courses: Case Study.” European Journal of Engineering Education 40 (6): 671–682. https://doi.org/10.1080/03043797.2014.1001819.

- Villarroel, V., S. Bloxham, D. Bruna, C. Bruna, and C. Herrera-Seda. 2018. “Authentic Assessment: Creating a Blueprint for Course Design.” Assessment & Evaluation in Higher Education 43 (5): 840–854. https://doi.org/10.1080/02602938.2017.1412396.

- Villarroel, V., D. Boud, D. Bruna, and C. Bruna. 2019. “Using Principles of Authentic Assessment to Redesign Written Examinations and Tests.” Innovations in Education and Teaching International 57 (1): 1–12. https://doi.org/10.1080/14703297.2018.1564882.

- Yan, Z., and G. Brown. 2017. “A Cyclical Self-Assessment Process: Towards a Model of how Students Engage in Self-Assessment.” Assessment & Evaluation in Higher Education 42 (8): 1247–1262. https://doi.org/10.1080/02602938.2016.1260091.

- Yorke, M. 2003. “Formative Assessment in Higher Education: Moves Towards Theory and the Enhancement of Pedagogic Practice.” Higher Education 45 (4): 477–501. https://doi.org/10.1023/A:1023967026413.