ABSTRACT

Effectively working in an engineering workplace requires strong teamwork skills, yet the existing literature across disciplines reveals discrepancies in how these skills are evaluated. This complicates the design of a generic teamwork peer evaluation tool for engineering students. This study addresses this gap by introducing the DRIVE teamwork rubric, a simple, valid, and reliable tool designed to assess fundamental teamwork skills in engineering students. Informed by a comprehensive literature review and experiential learning theory, the rubric incorporates five dimensions that encapsulate crucial attributes for successful teamwork. Integrating these dimensions into the rubric aims to streamline the peer evaluation process and improve student awareness of fundamental teamwork skills. Analysis of data from an initial implementation, including exploratory and confirmatory factor analyses alongside McDonald's Omega, supports the rubric's reliability and validity. A comparison between Teaching Assistants’ (TAs’) and peer evaluations indicates that students can assess their peers on par with TAs, underscoring the rubric's simplicity and effectiveness.

Introduction

Teamwork is an essential skill that engineering professionals require to work effectively in the twenty-first century. Modern engineers need not only strong technical knowledge, critical thinking, and problem-solving skills, but also professional skills such as teamwork, leadership, and communication (Elayyan 2021). Qadir et al. (2020) identified the top five skills required of electrical and computer science engineering graduates in the twenty-first century as teamwork and collaboration, communication, innovation, metacognition, and critical thinking. Furthermore, the Accreditation Board for Engineering and Technology (ABET) has added teamwork skills as a criterion, requiring universities to ensure that students possess the skills to operate in high-performing teams (National Academy of Engineering 2004). These skills are recognised not only by employers and accreditation bodies: studies (Grocutt et al. 2020; Hill et al. 2019) show that university students also regard teamwork skills as important for their career progression. Some students believe they lack these skills and therefore request more training in them. The language used around teamwork skills is also changing, from ‘soft skills’ to ‘professional skills’, reflecting their growing importance (Berdanier 2022). Moreover, a systematic literature review by Ebrahiminejad (2017) found that teamwork, communication and problem-solving are skills in which engineering graduates are deficient.

The above evidence suggests that teamwork is an essential part of engineering practice and that students need these skills to improve their employability and career progression. Therefore, a key goal for engineering education is to prepare students for employment through educational programs that focus on teamwork skill development. Skill development and progression can only be observed if there is a sound method for measuring these skills. Hence, assessment methods that measure the development of these skills play a crucial role in engineering education. In a team setting, the people who most closely observe teamwork skills are the members of the team involved in the collaborative activity. Peer assessments therefore provide useful opportunities to facilitate reflection on teamwork skill development and its assessment in higher education settings (Sridharan, Muttakin, and Mihret 2018). Peer assessment of teamwork is most commonly performed using tools such as assessment rubrics (Planas-Lladó et al. 2021). Assessment rubrics are widely used in the literature and have been shown to support the development of professional skills such as teamwork and communication (Pang, Fox, and Pirogova 2022). This study therefore focuses on rubric-based assessment methods that measure students’ behaviour in a live team environment.

To reflect on the effectiveness of teamwork and to perform peer assessment, students require a simple rubric that is easily understood and effective for evaluating the teamwork skills of each team member (Planas-Lladó et al. 2018). An effective rubric comprises four critical components: (1) a task description (i.e. the assignment); (2) dimensions (i.e. the criteria for assessment); (3) performance indicators (descriptions of performance expectations); and (4) a scale (i.e. standards or levels of achievement) (Stevens and Levi 2013). For simplicity, teamwork is often assessed with a scoring guide rubric, which lists only the highest possible performance for each dimension. In this rubric type, the dimension provides an overview of the relevant skills being measured, the performance indicators describe the specific, measurable actions towards attaining these skills, and the scale outlines how a grade or mark is given for those actions, telling students how completely they did or did not meet the dimension (Stevens and Levi 2013). Ideally, the dimensions of a rubric should effectively represent the wider skills that the rubric aims to evaluate. The dimensions of a well-designed rubric should not only define the relevant skills but also enable students to assess their strengths and weaknesses within each dimension (Stevens and Levi 2013). Rubrics play a crucial role in enhancing consistency among raters; however, in an educational context their significance extends beyond this function. A well-designed educational rubric should not only facilitate consistency among evaluators but also serve as a valuable tool for learners, giving them a transparent and comprehensive understanding of the criteria for full achievement.
In essence, it becomes a guide that paints a clear picture of the expectations and benchmarks associated with complete success in a given task or assessment (Chowdhury 2018).
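The four rubric components described above map naturally onto a small data structure. The sketch below is a minimal illustration, assuming Python 3.9 or later; the class names, the example task and the three-level scale are hypothetical and are not part of the DRIVE rubric or of Stevens and Levi's (2013) description. The two indicators are borrowed from the DRIVE information-sharing dimension described later.

```python
from dataclasses import dataclass


@dataclass
class Dimension:
    """A rubric dimension: the skill being assessed and its performance indicators."""
    name: str
    indicators: list[str]  # in a scoring guide rubric, descriptions of full achievement only


@dataclass
class Rubric:
    """The four rubric components listed by Stevens and Levi (2013)."""
    task_description: str        # (1) the assignment being assessed
    dimensions: list[Dimension]  # (2) criteria, each carrying (3) its performance indicators
    scale: list[str]             # (4) levels of achievement, ordered lowest to highest

    def validate(self) -> None:
        """Raise ValueError if any of the four components is missing or empty."""
        if not self.task_description.strip():
            raise ValueError("rubric has no task description")
        if not self.dimensions:
            raise ValueError("rubric has no dimensions")
        for dim in self.dimensions:
            if not dim.indicators:
                raise ValueError(f"dimension {dim.name!r} has no performance indicators")
        if len(self.scale) < 2:
            raise ValueError("scale needs at least two levels")


# Hypothetical example: one dimension with two indicators and a three-level scale.
example = Rubric(
    task_description="Semester-long team design project",
    dimensions=[
        Dimension(
            name="Information sharing",
            indicators=[
                "Restates views to clarify if not clearly understood.",
                "Listens intently without interrupting.",
            ],
        )
    ],
    scale=["Not met", "Partially met", "Fully met"],
)
example.validate()  # passes silently: all four components are present
```

Keeping the scale as an ordered list reflects the scoring guide idea that the scale only says how completely the single top-level description was met.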

The assessment of teamwork skills is complex and multifaceted, influenced by various factors such as attitudes and behaviours (Britton et al. 2017; Hughes and Jones 2011). Consequently, there is a lack of consensus across and within disciplines regarding the dimensions used to evaluate teamwork (Britton et al. 2017). Several widely recognised rubrics, including the American Association of Colleges and Universities (AAC&U) VALUE Teamwork Rubric (available from https://www.aacu.org/initiatives/value-initiative/value), CATME, Team Up, and Team Q, have shown good reliability and validity in assessing student behaviours using behavioural rubric dimensions in healthcare settings (Rhodes 2010; Britton et al. 2017; Hastie, Fahy, and Parratt 2014; Loughry, Ohland, and Woehr 2014; Parratt et al. 2016). In engineering education, however, individual courses often employ locally developed, context-specific dimensions and rubrics, leading to inconsistent conceptualisations of teamwork and a lack of fundamental rubric dimensions for evaluating teamwork skills (Dorado-Vicente et al. 2020; Ercan and Khan 2017; Hadley, Beckmann, and Reid 2016; Jones and Abdallah 2013; Lingard and Barkataki 2011; Plumb and Sobek 2007). A standardised approach to evaluating teamwork skills is therefore needed. Fundamental skills can be described as the essential abilities that constitute a required foundation or core (Barnett et al. 2016). Teamwork as a whole encompasses certain fundamental skills, which are developed over time through practical experience (Ercan and Khan 2017). These fundamental teamwork skills are the critical competencies and qualities necessary for successful collaboration within a team.
Therefore, using fundamental teamwork skills as the dimensions of a peer evaluation rubric has the potential to simplify the peer evaluation process and to raise students’ awareness of the fundamental teamwork skills they develop over time. This approach aims to make students aware of their strengths and weaknesses in these crucial skills, offering a generalised evaluation of fundamental teamwork skills within engineering education. Although existing teamwork rubrics from healthcare settings could readily be incorporated into engineering courses, they do not use fundamental teamwork skills as rubric dimensions, which have the potential to enable self-reflection and to standardise the assessment of teamwork skills. There is therefore a need for a teamwork rubric that uses fundamental teamwork skills as its dimensions. Hence, the research question for this study is: ‘What are the fundamental teamwork skills that can be used as dimensions to design a simple, novel, valid, and reliable engineering teamwork rubric that could help students’ peers to evaluate exhibited teamwork skills when working collaboratively?’

To address this question, this study presents a novel rubric named DRIVE, whose dimensions represent the fundamental teamwork skills, identified through a comprehensive literature review, that students should exhibit when working on a team project. The dimensions in the DRIVE rubric are designed to help students evaluate their peers’ teamwork skills. DRIVE is also designed to be easily understood by students, reducing the complexity of and time required for peer assessment. Finally, data from an initial implementation were used to test the rubric's reliability and validity.

A theoretical framework for situating teamwork skills

Understanding how students learn is important when designing any educational tool or teaching method (Khalil and Elkhider 2016). While a theoretical understanding of teamwork skills can give students awareness of the basic concepts, teamwork is, as noted above, predominantly an experiential learning activity (Al-Hammoud et al. 2017). By actively participating in team efforts, individuals not only contribute their expertise but also observe diverse approaches to a solution, expanding their own problem-solving knowledge base through experiential learning. For instance, a marketing specialist collaborating with a software developer may gain insights into coding complexities, fostering a cross-disciplinary understanding that enhances both individual and team capabilities.

Experiential Learning Theory (ELT) explains the process of acquiring knowledge through practical experience (McCarthy 2016). According to Kolb (1984), ELT is described as ‘the process by which knowledge is generated through the conversion of experience, with knowledge arising from the integration of grasping and transforming experience.’ The theory outlines a cyclical four-stage process comprising Concrete Experience, Reflective Observation, Abstract Conceptualisation and Active Experimentation (McCarthy 2016). A learner moves through these four phases depending on the circumstances and stage of a learning endeavour. Applied to teamwork, ELT suggests that in every team experience a learner engages, reflects, and connects the lessons learned from the current experience with previous ones to create new knowledge. Reflection is a crucial phase of ELT in the context of teamwork, as team members must collectively reflect on their shared experience to derive valuable insights from it.

Constructivism, grounded in the notion that learners actively build knowledge on their existing understanding, aligns closely with the four phases of ELT (Wang 2022). As individuals engage with experiences in the world, they reflect on these encounters and actively integrate new information into their pre-existing knowledge. This underscores the pivotal role of reflective observation in the constructive process of knowledge formation.

In the context of teamwork, the focal point is collaborative knowledge creation through active participation and reflection. Through the assessment of their peers’ contributions, students actively engage in the construction of knowledge related to effective teamwork behaviours. This process not only heightens students’ awareness but also refines their understanding, empowering them to execute desired behaviours effectively in subsequent teamwork activities.

The emphasis on reflective observation, common to both experiential learning theory and the constructivist framework, forms the cornerstone of the DRIVE rubric's design. Because it recognises the critical importance of reflection in building knowledge and skills, the rubric aligns well with the principles of both ELT and constructivism.

Literature review

Fundamental teamwork skills

Katzenbach and Smith (2015) define a team as a set of individuals committed to a common cause, goals, and approach, who possess complementary skills and hold each other mutually accountable. Teamwork is defined as the process by which a distinct group of individuals accomplishes a common objective (Isabella 2003). Like any other skills, teamwork skills develop through every team experience. Researchers categorise teamwork as an experiential learning activity that requires adequate reflection to promote learning (Al-Hammoud et al. 2017). To understand teamwork skills in depth, a thorough review of the literature was conducted.

A comprehensive literature review was conducted in May 2023 using the ProQuest Education, Scopus and Wiley Online Library databases, supplemented by searches on Google Scholar. All three primary databases have been verified as principal databases suitable for comprehensive literature reviews (Gusenbauer and Haddaway 2020), and together they gave access to the most complete and influential education journals across multiple disciplines. Because the literature on teamwork is very diverse, teamwork concepts from all disciplines were studied rather than limiting the search to engineering. The search criteria therefore had to be very specific to retrieve the papers most relevant to the research question. The title was required to contain either ‘teamwork skill’ or ‘teamwork competency’, and all fields were then searched for keywords such as teamwork skill, teamwork competency and teamwork rubric. Because skills and competencies are used as synonyms, both terms were searched to capture more journal articles. The following Boolean search query was used for ProQuest Education and Scopus: TITLE (‘teamwork skill’ OR ‘teamwork competencies’) AND ALL (‘teamwork skill’ OR ‘teamwork competencies’ OR ‘teamwork rubric’). For Wiley Online Library, the search criterion was ‘teamwork skill’ OR ‘teamwork competencies’ OR ‘teamwork rubric’, as Wiley’s database is significantly smaller than Scopus and ProQuest (Gusenbauer and Haddaway 2020). The search was further restricted to peer-reviewed publications written in English. This search procedure returned a total of 445 articles.
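To make the Boolean logic of the two queries concrete, the sketch below evaluates them against a single record. It is a simplification under stated assumptions: matching is case-insensitive substring matching (so ‘teamwork skills’ also matches ‘teamwork skill’), and the ‘all fields’ text is approximated as the title plus a hypothetical body string. Real databases apply their own phrase-matching and field rules, and the function names are illustrative only.

```python
TITLE_TERMS = ("teamwork skill", "teamwork competencies")
ALL_TERMS = TITLE_TERMS + ("teamwork rubric",)


def contains_any(text: str, phrases: tuple[str, ...]) -> bool:
    """Case-insensitive substring test, standing in for database phrase search."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in phrases)


def matches_proquest_scopus(title: str, body: str) -> bool:
    """TITLE('teamwork skill' OR 'teamwork competencies')
    AND ALL('teamwork skill' OR 'teamwork competencies' OR 'teamwork rubric')."""
    all_fields = f"{title} {body}"  # assumption: ALL covers the title plus the remaining text
    return contains_any(title, TITLE_TERMS) and contains_any(all_fields, ALL_TERMS)


def matches_wiley(title: str, body: str) -> bool:
    """'teamwork skill' OR 'teamwork competencies' OR 'teamwork rubric', in any field."""
    return contains_any(f"{title} {body}", ALL_TERMS)


# A record passes the Wiley query but fails ProQuest/Scopus when only the body matches.
title, body = "Peer assessment in capstone design", "We describe a teamwork rubric."
print(matches_proquest_scopus(title, body), matches_wiley(title, body))  # False True
```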

The next phase was to remove duplicates and screen titles and abstracts to exclude studies that did not address the research question. Full texts were retrieved for every study retained after this first round of screening. In the second round, the full texts were screened against the following criteria:

Identifies the teamwork skills or competencies relevant to the discipline of study or

Includes the design of a rubric or a theoretical framework or model that defines the teamwork skills or

Includes a literature review of the teamwork skills in the disciplines of psychology, healthcare, business, science and engineering.

These four disciplines (psychology, healthcare, business, and science and engineering) were chosen because each has a substantial literature on teamwork skills and offers a distinct viewpoint. Psychology provides an understanding of the science behind teamwork skills, making it a natural starting point for research on teamwork. Healthcare places a conscious emphasis on efficiency and accuracy, as time is crucial and errors have significant consequences for patients’ health. Business emphasises the development of leadership traits, which is crucial for career progression. Science and engineering prioritise effective and succinct communication so that all stakeholders are adequately informed, recognising that seamless coordination is crucial. Beyond these distinct viewpoints, each discipline also covers the basic teamwork skills it needs. The papers gathered in this literature review were later grouped by these disciplines.

Where multiple reports of the same study had been published (e.g. dissertation, journal article, conference proceedings), the journal version was used, as it has typically gone through a more rigorous peer review process. Relevant information was extracted from all studies that met the inclusion criteria. After the above inclusion and exclusion criteria were applied, twenty-eight articles remained. Literature on teamwork skills and competencies varies with the unique requirements of specific disciplines, so the teamwork competencies defined in each discipline are examined before teamwork skills are defined.
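The screening rules above (deduplication, preferring the journal version of a study, and inclusion when any one of the three full-text criteria holds) can be sketched as a small filter. This is an illustrative sketch, not the tooling used in this study: the `Report` fields, the `study_id` key linking reports of the same study, and the venue ranking are hypothetical assumptions, and the title/abstract screening step is folded into the three criterion flags.

```python
from dataclasses import dataclass

# Assumption: lower rank = preferred; journal versions win over other report types.
VENUE_RANK = {"journal": 0, "conference": 1, "dissertation": 2}


@dataclass(frozen=True)
class Report:
    study_id: str                  # hypothetical key shared by all reports of one study
    venue: str                     # "journal", "conference" or "dissertation"
    identifies_skills: bool        # criterion 1: identifies discipline-relevant teamwork skills
    defines_rubric_or_model: bool  # criterion 2: rubric, framework or model defining the skills
    reviews_disciplines: bool      # criterion 3: reviews teamwork skills across the disciplines


def screen(reports: list[Report]) -> list[Report]:
    """Keep one report per study (journal version preferred), then apply the OR-criteria."""
    best: dict[str, Report] = {}
    for report in reports:
        kept = best.get(report.study_id)
        # Exact duplicates (same study, same venue) are dropped by the strict comparison.
        if kept is None or VENUE_RANK[report.venue] < VENUE_RANK[kept.venue]:
            best[report.study_id] = report
    return [
        r for r in best.values()
        if r.identifies_skills or r.defines_rubric_or_model or r.reviews_disciplines
    ]


reports = [
    Report("A", "conference", True, False, False),
    Report("A", "journal", True, False, False),        # journal version of study A
    Report("B", "dissertation", False, False, False),  # meets no criterion
    Report("C", "conference", False, False, True),
    Report("C", "conference", False, False, True),     # duplicate from a second database
]
print([(r.study_id, r.venue) for r in screen(reports)])  # [('A', 'journal'), ('C', 'conference')]
```

Because the three criteria are joined by ‘or’, a study only needs to satisfy one of them to be retained.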

In business, team attributes focus on teamwork structure, with a management plan to achieve the required goals (Katzenbach and Smith 2015). Varela and Mead (2018) conducted a theoretical review to identify the teamwork dimensions required in business, which include mission analysis, strategy formulation, situation monitoring, backup behaviours, coordination, conflict management, motivation and confidence building, and affect management. Kotlyar and Krasman (2022) created a virtual simulation method of teamwork assessment in which they modelled teamwork competencies within the dimensions of team building, engaging team members, problem solving, leading, communication, and dealing with frustration. Jackson, Sibson, and Riebe (2014) surveyed undergraduate students’ perceptions of the teamwork skills in two frameworks, ‘Working Effectively with Others’ and the ‘Employability Skills Framework’. These frameworks include teamwork competencies such as communicating effectively, social responsibility and accountability, conflict resolution, and self-management. The teamwork competencies instrument of Halfhill and Nielsen (2007) covers conflict resolution, communication, planning and goal setting, and performance management. Overall, conflict resolution, communication, accountability, management, and leadership are the most recurring teamwork skills in business.

Healthcare teamwork demands quick decision-making, requiring clear and concise communication to execute a task (Olupeliyawa, Hughes, and Balasooriya 2022). Furthermore, a study of dental students’ perceptions of teamwork found that accountability, mutual support, interprofessional communication, time, feedback, adaptability, trust and coordination were rated highly (Raponi et al. 2023). In emergency medicine, researchers developed a standard taxonomy of core teamwork competencies and found closed-loop communication, team cognition, leadership, conflict resolution, debriefing and process feedback, goal specification and coordination to be essential (Fernandez et al. 2008). A study in primary care designed a conceptual model of team competencies that identified listening actively, building trust, accountability, managing conflict, giving and receiving constructive feedback, managing emotions and supporting change in others as important teamwork dimensions (Warde, Giannitrapani, and Pearson 2020). The Team FIRST framework (Greilich et al. 2023) is another model of teamwork competencies in interprofessional healthcare; its competencies are structured communication, closed-loop communication, asking clarifying questions, sharing unique information, mutual trust, reflection, mutual performance, recognising the criticality of teamwork and creating a psychologically safe environment. According to Salas et al. (2008) and Leasure et al. (2013), the big five teamwork competencies are adaptability, team leadership, shared mental models, mutual trust and closed-loop communication. Many healthcare frameworks and models overlap in their view of teamwork skills, which broadly comprise communication, mutual trust, leadership, adaptability, feedback, managing conflict and accountability.

In psychology, the priority is to understand the processes behind how a team works and the behaviours that lead to its outcomes. In their multidisciplinary study, Salas, Sims, and Burke (2005) described the big five of teamwork: adaptability, team leadership, shared mental models, mutual trust and closed-loop communication. In another study, Salas, Burke, and Cannon-Bowers (2000) organised teamwork competencies by team type. For competencies that are both task-generic and team-generic, they identified morale building, conflict resolution, assertiveness, task motivation, intra-team feedback, co-operation and information exchange. Ellis et al. (2005) evaluated team training in the teamwork skills of communication, conflict resolution, planning and performance management. They argued that these skills are more transferable, and can be more impactful, than task-specific teamwork competencies. Overall, the most recurring themes in psychology are conflict resolution, communication, feedback, leadership, mutual trust, and adaptability.

In science and engineering, project-based environments are common, and teamwork and management structure, critical thinking (brainstorming), conflict management and communication are particularly critical to achieving milestones. Chowdhury and Murzi (2019) conducted a literature review and found that teamwork skills for engineers include shared goals and values, commitment to team success, motivation, interpersonal skills, open and effective communication, constructive feedback, ideal team composition, leadership, accountability, interdependence, adherence to team process, and performance. Tejedor Herrán et al. (2022) found that the teamwork competencies required of engineers were group dynamics, internal team management or conflict resolution, communication, brainstorming/mind maps and design thinking. In geoscience, a focus group study identified four categories of teamwork skills: transitional skills, action skills, interpersonal skills and teamwork ethics. These categories comprise skills such as communication, trustworthiness, integrity, information synthesis, mentoring, planning and interpretation of the team mission (Nyarko and Petcovic 2023). Koh, Hong, and Seah (2014) ran an exploratory study to develop an analytical framework for teamwork competency that included team emotional support, coordination, decision making, mutual performance monitoring, constructive conflict, and team commitment. Another exploratory study (Chaiwichit and Samart 2022) investigated teamwork competencies and their impact on student performance, identifying competencies such as building a team relationship, supporting a team, participation in team exchanges and team relationship.
Hebles Ortiz, Alonso-Dos-Santos, and Yániz-Álvarez-de-Eulate (2022) designed a teamwork competency scale for undergraduate students with nine dimensions: group goal setting, communication, learning orientation, collective effectiveness, problem solving, planning and coordination, conflict management, performance monitoring and supportive behaviour. Recurring teamwork competencies in science and engineering include conflict management, communication, accountability and trust or teamwork ethics, feedback, and leadership.

Table 1 summarises the teamwork competencies identified in the disciplines presented above.

Table 1. Summary of discipline-specific teamwork competencies.

After analysing the literature and evaluating the skills and competencies that recur across disciplines, five fundamental teamwork skills were selected for inclusion in the DRIVE rubric: (1) Dispute management (conflict management), (2) Reflection & feedback, (3) Information sharing (communication), (4) Versatile leadership (leadership), and (5) Ethical behaviour (accountability and trust). These skills form the foundation of the DRIVE rubric's design and are explained in detail in the Rubric design section. Henceforth, they are referred to as ‘fundamental teamwork skills’.
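The selection can be illustrated by tallying how many of the four disciplines name each skill among their recurring themes. The sketch below uses the ‘overall’ skill lists stated at the end of each discipline paragraph above; the mapping of discipline-specific labels onto DRIVE dimensions (for example, treating ‘mutual trust’ and ‘accountability’ as Ethical behaviour) is an illustrative assumption, and this simple count is not the analysis procedure reported in this study.

```python
from collections import Counter

# Recurring skills per discipline, as summarised in the discipline paragraphs.
DISCIPLINE_THEMES = {
    "business": ["conflict resolution", "communication", "accountability",
                 "management", "leadership"],
    "healthcare": ["communication", "mutual trust", "leadership", "adaptability",
                   "feedback", "managing conflict", "accountability"],
    "psychology": ["conflict resolution", "communication", "feedback", "leadership",
                   "mutual trust", "adaptability"],
    "science and engineering": ["conflict management", "communication", "accountability",
                                "trust or teamwork ethics", "feedback", "leadership"],
}

# Illustrative assumption: which discipline labels fall under which DRIVE dimension.
TO_DRIVE = {
    "conflict resolution": "Dispute management",
    "managing conflict": "Dispute management",
    "conflict management": "Dispute management",
    "feedback": "Reflection & feedback",
    "communication": "Information sharing",
    "leadership": "Versatile leadership",
    "accountability": "Ethical behaviour",
    "mutual trust": "Ethical behaviour",
    "trust or teamwork ethics": "Ethical behaviour",
}


def discipline_coverage(themes: dict[str, list[str]]) -> Counter:
    """Count, for each skill, how many disciplines list it (at most once per discipline)."""
    coverage = Counter()
    for skills in themes.values():
        # Unmapped labels such as "adaptability" are counted under their own name.
        coverage.update({TO_DRIVE.get(skill, skill) for skill in skills})
    return coverage


for skill, n in discipline_coverage(DISCIPLINE_THEMES).most_common():
    print(f"{skill}: {n}/4 disciplines")
```

Under this mapping, Dispute management, Information sharing, Versatile leadership and Ethical behaviour each appear in all four disciplines, Reflection & feedback in three, adaptability in two and management in one, so the five most widely shared skills coincide with the DRIVE dimensions.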

A well-designed rubric dimension should not only outline the relevant skills but also empower students to evaluate their strengths and weaknesses within that dimension (Stevens and Levi 2013). Consequently, rubric dimensions that focus specifically on fundamental teamwork skills are expected to enhance students’ ability to evaluate and reflect on their own teamwork capabilities. In this study, the identified fundamental teamwork skills are therefore defined as the dimensions of the DRIVE rubric.

Rubric design

Rubrics outline the requirements for an assessment by detailing the dimensions and the levels of quality, from exceptional to poor. As described earlier, beyond the task description a rubric has three major components: rubric dimensions, performance indicators (measurement items) and a scale. Overall, a rubric evaluates the degree of competency achieved by the person being assessed, such as a student (Reddy and Andrade 2010).

In rubric design, it is essential to follow a methodical approach to ensure that the rubric addresses the required criteria efficiently. The DRIVE rubric proposed in this study follows the rubric design methodology outlined by Reddy (2011):

Determine the learning outcome and dimensions that must be displayed in a student's work to demonstrate proficient performance.

Identify the performance indicators for each of the dimensions.

Select an appropriate scale for scoring.

Collect feedback on the rubric from external stakeholders or verify the validity via thorough literature review.

Implement the rubric and collect data.

Test the reliability and validity of the rubric.

Use the results to improve the rubric further.

The DRIVE rubric was developed following this process. A comprehensive literature review was conducted to identify the fundamental teamwork skills and appropriate behavioural scales. Performance indicators relevant to the identified fundamental teamwork dimensions, and an effective rubric scale, were then adapted to the objectives of the rubric to make it suitable for peer assessment. Finally, the rubric was implemented, and the results were used to perform reliability and validity tests to assess its quality. This study reports the results of the first iteration of the DRIVE rubric implementation.

In developing the DRIVE rubric, the merits of established teamwork rubrics were drawn upon, integrating elements such as performance indicators derived from the simplified behavioural attributes of the VALUE and Team UP rubrics, and the frequency-based marking scale of the Team Q rubric (Rhodes 2010; Hastie, Fahy, and Parratt 2014; Britton et al. 2017). A study examining the teamwork Knowledge, Skills and Abilities (KSA) test highlighted that enhancing teamwork behaviours is pivotal for improving overall team performance (McClough and Rogelberg 2003). Consequently, teamwork behaviours, organised under the fundamental teamwork skills, were employed as performance indicators in the DRIVE rubric. The DRIVE rubric also adopted a frequency-based rating scale, as such scales are recommended for evaluating performance indicators such as behaviours and work habits (Brookhart 2013). What distinguishes the DRIVE rubric is its novel approach of identifying fundamental teamwork skills and using them as the dimensions of the rubric. This design is intended to raise students’ awareness of fundamental teamwork skills in the context of engineering education.
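A frequency-based scale asks each rater how often a behaviour was observed rather than how well it was performed. The sketch below shows one plausible way to turn such peer ratings into dimension scores for a single student. The five frequency labels, their 0 to 4 values, and the rule of averaging over indicators and then over peers are assumptions for illustration only; the DRIVE scale points and aggregation rule are not specified in this section.

```python
from statistics import mean

# Hypothetical five-point frequency scale (not the published DRIVE scale points).
FREQUENCY_SCALE = {"never": 0, "rarely": 1, "sometimes": 2, "often": 3, "always": 4}


def dimension_scores(ratings: dict[str, dict[str, list[str]]]) -> dict[str, float]:
    """Score one student from peer ratings.

    ratings maps peer -> dimension -> one frequency label per performance indicator.
    Each peer's labels are averaged within a dimension, then peers are averaged,
    so every peer carries equal weight.
    """
    peer_means: dict[str, list[float]] = {}
    for by_dimension in ratings.values():
        for dimension, labels in by_dimension.items():
            values = [FREQUENCY_SCALE[label.lower()] for label in labels]
            peer_means.setdefault(dimension, []).append(mean(values))
    return {dimension: float(mean(scores)) for dimension, scores in peer_means.items()}


peer_ratings = {
    "peer_1": {"Information sharing": ["Always", "Often"], "Versatile leadership": ["Often"]},
    "peer_2": {"Information sharing": ["Sometimes", "Often"], "Versatile leadership": ["Always"]},
}
print(dimension_scores(peer_ratings))
# {'Information sharing': 3.0, 'Versatile leadership': 3.5}
```

Averaging within each peer first means a peer who rates more indicators does not outweigh one who rates fewer; another aggregation, such as a median across peers, would be an equally defensible choice.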

The DRIVE rubric has five teamwork dimensions, and it does not rank the fundamental teamwork skills hierarchically. A key step in designing the rubric is to identify the appropriate positive behavioural attributes within each dimension. The definitions and theoretical frameworks behind the selection of these attributes for each rubric dimension are discussed below.

Dispute resolution (conflict management)

Conflict is an inherent aspect of teamwork. It is defined as the process in which a group or a person perceives the actions of others as having a negative impact on their own interests. Conflict management is a process that aims to resolve conflicts such that positive outcomes are prioritised and negative aspects are minimised. The Thomas and Kilmann conflict management model presents five approaches to resolving conflict: (i) avoiding, (ii) dominating, (iii) accommodating, (iv) collaborating and (v) compromising (Correia 2005). Each style suits different circumstances; however, to maintain harmony, team members should strive for a win-win outcome where possible without disrupting team cohesion (Gitlow and McNary 2006). Hence, the following behavioural indicators were adopted in the DRIVE rubric:

Possesses good negotiation skills and routinely achieves a win-win outcome.

Resolves destructive conflict constructively and maintains team cohesiveness.

Consistently adapts to the changing demands of the task.

Contributes appropriately to a healthy debate.

Feedback

Feedback is an essential sub-skill of the fundamental teamwork skills (Al-Hammoud et al. 2017; Ercan and Khan 2017; Thite et al. 2021). Providing and receiving feedback without taking offence can positively impact team performance (Hardavella et al. 2017; Jug, Jiang, and Bean 2019). Hence, the following behavioural indicators were adopted in the DRIVE rubric:

Provides constructive feedback without being offensive.

Accepts feedback in a non-protective and positive way.

It is worth noting that a student's ability to receive feedback, offer feedback in return, and then reflect to develop actionable steps based on that feedback is considered a reflective skill (Anseel, Lievens, and Schollaert 2009).

Information sharing (effective communication)

Information can be shared within a team through basic communication methods such as speaking, listening, writing, and reading. Effective communication is crucial for conveying information accurately and hence for a team’s progress. The ability to provide and receive effective feedback is also linked with effective communication (Potosky, Godé, and Lebraty 2022). A deeper understanding of communication is therefore key to a team’s success. This study adopts the transactional model of communication, a comprehensive approach that takes contextual factors into account when constructing the meaning of exchanged words. Factors such as culture, background, and non-verbal cues such as body language, facial expressions and tone can influence the meaning of the words expressed (Kuznar and Yager 2020). Furthermore, attentive listening and clarifying questions play a key role in avoiding misunderstandings (Brewer and Holmes 2016). Therefore, the following positive behavioural descriptors were adopted in the DRIVE rubric:

Uses positive:

Vocal/written tone

Facial expression

Body language

Maintains a respectful, polite, and constructive approach in communication.

Restates views to clarify if not clearly understood.

Listens intently without interrupting.

Versatile leadership

Situational leadership theory emphasises adapting leadership style to suit different situations (Fiedler 2015). It promotes versatility, suggesting that leaders should adjust their approach to the specific circumstances they encounter, and this adaptability can lead to greater innovation and effectiveness. Leaders who embrace this theory can adapt readily to different situations, transition efficiently between phases, and equip themselves with the skills and knowledge needed to handle challenging circumstances (Fiedler 2015). The following behavioural attributes were therefore adopted for the DRIVE rubric:

Motivates teammates by showing faith in the team’s ability to complete important tasks.

Articulates merits of proposed ideas.

Constructively builds on others’ contributions.

Brings inactive members into discussion.

Helps and motivates team members to complete tasks to high quality.

Provides assistance and/or encouragement to team members.

Ethical behaviour (accountability and trust)

Each team member is expected to contribute and perform to achieve the team’s objectives. To do so, each member must be accountable for the tasks they take responsibility for. Poor accountability leads to various problems within organisations, such as low job satisfaction, blaming, and victimisation. When team members are accountable, trust develops within the team, which boosts performance (Stewart, Snyder, and Kou 2021). Accountability and trust develop as consequences of individual behaviours and actions within a team. Therefore, a team member who acts accountably and in a trustworthy manner displays behaviour that correlates with a strong work ethic. Hence, the following behavioural indicators were adopted in the DRIVE rubric:

Completes all assigned tasks comprehensively by the deadline.

Earns trust of team members by timely completion and quality contributions.

The DRIVE rubric is shown in Table 2. Its marking levels reflect how often the student displays each simplified behavioural attribute, on a five-point Likert scale: 0 = Never, 1 = Sometimes, 2 = Usually, 3 = Regularly, 4 = Always.

Table 2. DRIVE rubric.

showcases how these scoring criteria appear during peer evaluation. The task description provided to students was: ‘In your experience, how often does your peer demonstrate the behaviours mentioned in the rubric?’ Altogether, the DRIVE rubric comprised the four components integral to an effective rubric: a task description, dimensions, performance indicators, and an assessment scale (Stevens and Levi 2013).

Implementation

The DRIVE rubric was used to mark students’ fundamental teamwork skills in a large (202 students) electrical engineering postgraduate course in 2021. This implementation was conducted to gauge how effectively students could evaluate their peers’ fundamental teamwork skills. Both peers and teaching assistants (TAs) used the DRIVE rubric for evaluation, and comparing the peers’ and TAs’ marks provided insights into internal reliability and into how well students understood the marking rubric. This postgraduate engineering course was deliberately selected because about 90% of its students are international students from various countries. The diverse makeup of the cohort poses a significant challenge for effective teamwork; therefore, measuring students’ understanding of fundamental teamwork skills in such courses would be a significant outcome of this work. At the end of the teamwork activities, a reflection exercise was conducted in which students were prompted with two questions: (i) which skills they had improved and which areas required further development, and (ii) which aspects of their teamwork experience worked well and which did not. These questions encouraged students to revisit their teamwork experiences, fostering greater awareness of their proficiency in fundamental teamwork skills.

Teaching was delivered online because of the COVID-19 pandemic. Moodle and Microsoft Teams were used as learning management tools to deliver the course and run the team activities. The teamwork activity was a Case Study Analysis (CSA), in which students were required to find an electrical incident and analyse it in detail to propose relevant engineering and administrative solutions to prevent similar incidents. The teams were also required to prepare a presentation video showcasing their findings at the end of the task. This analysis was performed as a team over eight weeks. The student teams were supported by TAs, who also assessed students’ fundamental teamwork skills using the DRIVE rubric. The students met weekly to discuss the project, and the TAs attended all their meetings. This TA presence scaffolded students’ learning experience, as prompt feedback was available via Microsoft Teams when needed. Each weekly meeting began with a small team-building activity followed by CSA discussions; this structured team-building approach enhanced students’ teamwork experience (Thite et al. 2020). Teams of four or five students were formed so that, with a cohort exceeding 200, all teams could be managed by the available TAs. The individualised guidance and attention that TAs gave their teams throughout the term received positive feedback. Because the TAs attended every weekly team meeting throughout the term, they were well placed to assess students’ teamwork; these meetings were intentionally structured to involve various team activities and tasks, giving the TAs valuable insight into each team's dynamics. In addition, the TAs were trained on the rubric before the course began to ensure effective assessment.
In addition to TA marking, students were evaluated by their peers (team members). Because students did not receive the comprehensive training given to TAs, peer marking provided insights into the reliability and simplicity of the DRIVE rubric. The data collected consisted of weekly teamwork marks awarded by peers and TAs, which were used for subsequent data analysis. Before the study, ethics approval was obtained from the university ethics committee. In addition, all students were given a participant information sheet, available on the course page, to ensure informed consent and transparency about their participation.

Table 3 summarises the key information for the 2021 course offering in which the DRIVE rubric was implemented.

Table 3. Summary of Implementation.

Methodology

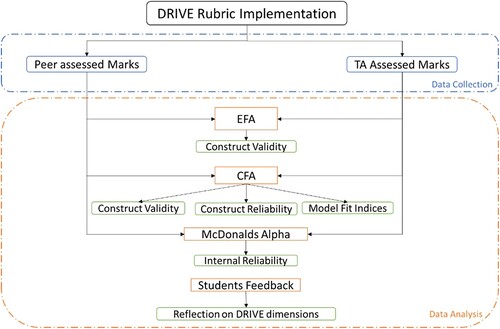

The data collection and analysis process adopted in this study is shown in the flowchart above and explained in detail below.

Data collection

The participants in this study were students in the postgraduate electrical engineering course described in the previous section, whose teamwork activity was evaluated with the DRIVE rubric. When using the DRIVE rubric, markers (TAs or peers) assigned a score to every behavioural attribute within each dimension, and these scores were averaged to give a single mark for the whole dimension. In principle, averaging behavioural attribute marks within a dimension raises compensability issues, where marks in one dimension make up for marks in another. However, the DRIVE rubric dimensions covary positively, so the fundamental teamwork dimensions tend to develop in parallel; for example, improvement in communication skills may lead to a slight improvement in dispute management. A sample of this positive covariance is shown in the covariance matrix of TA teamwork marks in Table 4. Therefore, compensability was considered acceptable for the DRIVE rubric.
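The averaging step and the positive-covariance check described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the helper names (`dimension_mark`, `covariance_matrix`, `all_positive_covariances`) are hypothetical, the marks are invented for illustration rather than taken from this study, and the covariance uses the sample (n - 1) denominator, which is an assumption about how the published matrix was computed.

```python
from statistics import mean

# DRIVE frequency scale: 0 = Never ... 4 = Always.
SCALE = {"Never": 0, "Sometimes": 1, "Usually": 2, "Regularly": 3, "Always": 4}


def dimension_mark(attribute_labels):
    """Average the 0-4 scores given to every behavioural attribute in one dimension."""
    return mean(SCALE[label] for label in attribute_labels)


def covariance(xs, ys):
    """Sample covariance (n - 1 denominator) of two equal-length lists of marks."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)


def covariance_matrix(columns):
    """columns maps dimension name -> per-student dimension marks (same student order)."""
    names = list(columns)
    return {(a, b): covariance(columns[a], columns[b]) for a in names for b in names}


def all_positive_covariances(cov):
    """True when every off-diagonal entry is positive, i.e. all dimensions covary positively."""
    return all(value > 0 for (a, b), value in cov.items() if a != b)


# Hypothetical marks for three students (illustrative only, not study data).
marks = {
    "Feedback": [dimension_mark(["Usually", "Always"]),      # 3
                 dimension_mark(["Sometimes", "Usually"]),   # 1.5
                 dimension_mark(["Always", "Always"])],      # 4
    "Ethical behaviour": [dimension_mark(["Regularly", "Regularly"]),  # 3
                          dimension_mark(["Usually", "Usually"]),      # 2
                          dimension_mark(["Always", "Regularly"])],    # 3.5
}
print(all_positive_covariances(covariance_matrix(marks)))  # True
```

With real data, each list would hold one dimension mark per student, and the full five-by-five matrix would be checked in the same way.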

Table 4. Sample covariance matrix of TA evaluation.

Students’ teamwork was marked by TAs and peers from week 5 to week 9 of a 10-week term. Evaluation intentionally began in week 5 to allow enough time for team interactions to build cohesion before peer evaluation commenced; the recommended duration for teams to interact before evaluating each other is four weeks (Strom and Strom 2011). Additionally, because students could join or withdraw from the course until week 4, team membership could not be fixed for teamwork evaluation until week 5. A student's peer mark was calculated by averaging the marks the student received from all other team members. The weekly teamwork marks (week 5 to week 9) from TA and peer assessments were then averaged to produce the data analysed in this study. These quantitative data were used to conduct reliability and validity tests of the DRIVE rubric. In addition, qualitative feedback was collected from students during the reflection exercise at the end of the course to evaluate the impact on their fundamental teamwork skills. In this exercise, students were asked to consider the strengths and weaknesses of their teamwork skills, identify which aspects of their teamwork worked effectively, and pinpoint areas needing improvement.
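The two aggregation steps described above (averaging the marks a student receives from the other team members, then averaging weekly marks over weeks 5 to 9) can be sketched as follows. This is a hedged illustration: the data layout (a per-week dictionary of rater to ratee marks), the function names `peer_mark` and `term_mark`, and the example ratings are all hypothetical, and the sketch assumes one dimension at a time.

```python
from statistics import mean


def peer_mark(week_ratings, student):
    """Mean mark that `student` received from every other team member in one week.

    week_ratings maps rater -> {ratee: mark}; any self-rating is ignored.
    """
    received = [given[student] for rater, given in week_ratings.items()
                if rater != student and student in given]
    return mean(received)


def term_mark(weekly_marks, weeks=range(5, 10)):
    """Average a student's weekly marks over the evaluated weeks (5 to 9 inclusive)."""
    return mean(weekly_marks[week] for week in weeks)


# Hypothetical one-dimension ratings for a three-member team in one week.
week5 = {"A": {"B": 3, "C": 4}, "B": {"A": 2, "C": 3}, "C": {"A": 4, "B": 4}}
print(peer_mark(week5, "A"))  # 3  (mean of 2 from B and 4 from C)
print(term_mark({5: 3, 6: 3.5, 7: 4, 8: 3.5, 9: 4}))  # 3.6
```

Excluding self-ratings explicitly keeps the peer mark consistent with the description of averaging marks from "all other team members".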

Data analysis

The validity of an assessment tool is the degree to which it captures what it is intended to measure (Heale and Twycross 2015). Two types of validity are relevant here: (i) construct validity, which identifies whether the rubric criteria sufficiently address the relevant skills, and (ii) content validity, which confirms whether the definitions within the rubric are valid according to the literature or experts in the field (Heale and Twycross 2015).

Reliability tests measure consistency across areas such as dimensions and raters (Heale and Twycross 2015). Two types of reliability are distinguished: (i) internal reliability, which measures how consistently internal elements such as criteria and raters assess the students, and (ii) external reliability, which measures how well the rubric performs in other types of course (Heale and Twycross 2015). To test the DRIVE rubric’s validity and reliability, exploratory factor analysis, confirmatory factor analysis, and additional reliability tests were performed.

Exploratory factor analysis (EFA) is a statistical technique frequently used to explore the theoretical constructs underlying a survey instrument (Shrestha 2021; Sridharan, Muttakin, and Mihret 2018). It is also widely used in validation studies of measurement instruments such as rubrics (Baryla, Shelley, and Trainor 2012; Britton et al. 2017; Patac, Adriano, and Bactil 2021). EFA analyses the variances and covariances of all elements in a data set to identify criteria that share a substantial amount of variance; these criteria are then collapsed into a construct. A construct is a general idea, overarching theme, or subject matter that one wants to quantify through survey questions or a marking instrument, whereas a factor is a set of standards used to evaluate a construct (Janssen, Meier, and Trace 2015). In this study, the DRIVE rubric dimensions are referred to as factors when conducting EFA. EFA can help to determine the number of underlying constructs in a data set, understand the relationship between criteria and a construct, assess the construct validity of a marking tool, identify whether a construct is unidimensional, and build, support, or refute theoretical constructs.

In this study, the theoretical model of the DRIVE rubric, comprising the rubric’s dimensions and scales, was specifically designed to measure a theoretical construct called ‘fundamental teamwork skills’. Hence, EFA was conducted to evaluate whether the DRIVE rubric’s theoretical model has construct validity, as in other studies (Britton et al. 2017; Lara, Loya, and Tobón 2020). If the EFA yielded multiple constructs, the design process of the DRIVE rubric would need to be re-evaluated. If instead a single construct were extracted, it would align with the construct of ‘fundamental teamwork skills’ derived through the comprehensive literature review and the rubric design methodology used to create the DRIVE rubric. Single-construct solutions, that is, models with a single theoretical construct, are common in the literature on EFA of measurement tools (Britton et al. 2017; Patac, Adriano, and Bactil 2021).

Confirmatory factor analysis (CFA) is another statistical method used to analyse the validity and reliability of a theoretical model, survey, or measurement instrument (DiStefano and Hess 2005). CFA is conducted when the rubric dimensions and the resulting constructs are already known to the researcher. If the EFA validated the DRIVE rubric’s theoretical model and construct, the next step would be a CFA using structural equation modelling to undertake further construct validity and reliability tests. Conducting EFA first and following it with CFA is recommended when designing measurement instruments, as EFA can verify whether the theoretical design is accurate before CFA is conducted (Gerbing and Hamilton 1996). Both EFA and CFA were conducted on two independent data sets, TA marks and peer marks; obtaining consistent results across separate, independent data sets makes the findings more reliable. SPSS (Statistical Package for the Social Sciences) and SPSS Amos were used to conduct the EFA and CFA, respectively.

Cronbach’s alpha is the most commonly used, yet often misunderstood, reliability measure. It is sensitive to outliers and rests on assumptions that are often unrealistic, such as normally distributed data and essential tau-equivalence (Cho and Kim 2015; Cronbach and Shavelson 2004). Given these limitations, McDonald’s omega is a better estimate of internal consistency, as its assumptions are more realistic; in particular, it allows items to load unequally on the construct (Bonniga and Saraswathi 2020; Goodboy and Martin 2020; Kalkbrenner 2021). Hence, reliability was assessed using McDonald’s omega and the composite reliability obtained from the structural equation model in the CFA.
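For reference, Cronbach's alpha can be computed directly from item scores, as in the minimal sketch below (standard library only; the function name `cronbach_alpha` and the toy scores are illustrative assumptions). The formula sums unweighted item variances, so every item is treated as contributing equally to the total score; this is why alpha depends on tau-equivalence, whereas omega weights items by their factor loadings.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for k items scored on the same n students.

    items is a list of k lists, each holding one item's (dimension's) scores.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]     # each student's total score
    item_variance = sum(variance(col) for col in items)  # sample variances of each item
    return k / (k - 1) * (1 - item_variance / variance(totals))


# Toy checks: identical items are perfectly consistent; unrelated items give zero.
print(round(cronbach_alpha([[1, 2, 3, 4]] * 3), 6))              # 1.0
print(round(cronbach_alpha([[1, 2, 1, 2], [1, 1, 2, 2]]), 6))     # 0.0
```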

Results

Descriptive statistics

Descriptive statistics offer insights into the key characteristics of a dataset. The data initially gathered with this rubric were ordinal by definition, as they were collected on a Likert scale (0 = Never, 1 = Sometimes, 2 = Usually, 3 = Regularly, 4 = Always) (Boone and Boone 2012). However, averaging the scores within each dimension transformed the ordinal data into continuous data. Therefore, means and standard deviations were calculated for the TA and peer datasets, as shown in Table 5. The means of the TA assessments ranged from 3.14 to 3.568, whereas the means of the peer assessments ranged from 3.665 to 3.825, slightly higher than the TA means. This could indicate a slight peer bias. The standard deviations ranged from 0.286 to 0.517, indicating that the data were clustered fairly closely around the mean. In addition to the standard deviation, the range, calculated as the difference between the maximum and minimum values, provides a valuable measure of data variability (McCluskey and Lalkhen 2007).
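The three descriptive measures used above can be sketched per dimension as follows. This assumes the sample standard deviation (n - 1 denominator), which is what SPSS reports by default; the function name `describe` and the three example marks are hypothetical.

```python
from statistics import mean, stdev


def describe(marks):
    """Mean, sample standard deviation (n - 1) and range of one dimension's marks."""
    return {
        "mean": mean(marks),
        "sd": stdev(marks),
        "range": max(marks) - min(marks),  # maximum minus minimum
    }


print(describe([2.0, 3.0, 4.0]))  # {'mean': 3.0, 'sd': 1.0, 'range': 2.0}
```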

Table 5. Descriptive statistics of the DRIVE rubric.

Table 5 shows a range of 2.2–2.8 across the fundamental teamwork dimensions for TA ratings, indicating noteworthy variability in the ratings provided by TAs. By contrast, the range for peer ratings was 1.75–2.2, somewhat narrower than for TA ratings. The dimensions of feedback, versatile leadership, and ethical behaviour showed larger differences in range between TA and peer ratings than information sharing and dispute management did. Overall, these descriptive results show relatively low variability in the data, suggesting possible consistency among the raters.

Exploratory factor analysis

Before the EFA, the suitability of the data sets was tested using the Kaiser-Meyer-Olkin (KMO) measure of sampling adequacy and Bartlett's test of sphericity. The KMO values for the TA and peer-assessed data were both above 0.8, indicating that the sample sizes were adequate for EFA (Taherdoost, Sahibuddin, and Jalaliyoon 2022; Williams, Onsman, and Brown 2010). Bartlett's test of sphericity was significant (p < 0.05), indicating that the correlation matrix differs significantly from an identity matrix and is therefore suitable for EFA (Williams, Onsman, and Brown 2010).
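Both suitability checks can be computed from the correlation matrix of the dimension marks. The sketch below uses NumPy and the standard formulas: Bartlett's statistic is -(n - 1 - (2p + 5)/6) ln|R| with p(p - 1)/2 degrees of freedom, and the overall KMO compares squared correlations with squared partial correlations obtained from the inverse of R. The function names and the simulated one-factor data are assumptions for illustration; SPSS output may differ slightly in rounding.

```python
import numpy as np


def bartlett_sphericity(data):
    """Bartlett's test statistic for H0: the correlation matrix is an identity matrix.

    data has shape (n_students, p_dimensions). Returns (chi_square, degrees_of_freedom).
    """
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, log_det = np.linalg.slogdet(corr)             # natural log of det(R)
    chi_square = -(n - 1 - (2 * p + 5) / 6) * log_det
    return chi_square, p * (p - 1) // 2


def kmo(data):
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)          # partial correlations
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return r2 / (r2 + p2)


# Simulated (hypothetical) marks: 200 students, 5 dimensions driven by one latent skill.
rng = np.random.default_rng(0)
skill = rng.normal(size=(200, 1))
data = 0.9 * skill + 0.4 * rng.normal(size=(200, 5))
chi_square, df = bartlett_sphericity(data)
print(round(kmo(data), 2), round(chi_square, 1), df)
```

The p-value for Bartlett's test can then be obtained from the chi-square survival function, for example `scipy.stats.chi2.sf(chi_square, df)` if SciPy is available.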

The principal components extraction method was used to retain factors with eigenvalues greater than one (Taherdoost, Sahibuddin, and Jalaliyoon 2022). Only one component had an eigenvalue above one, implying that a single construct was extracted. As discussed earlier, this study aims to design a rubric that measures only one construct: fundamental teamwork skills. To achieve this, the study conducted a comprehensive literature review on teamwork skills and used a rubric design methodology to design the DRIVE rubric. EFA was conducted to verify whether the rubric had been designed to measure the intended construct. As expected, only one construct was extracted, which is interpreted as fundamental teamwork skills. Hence, these results show that the DRIVE rubric measures a single theoretical construct, fundamental teamwork skills, supporting its construct validity.

The higher the cumulative variance (CV) explained by the construct, the fewer the measurement errors in the data set and the more reliable the results (Williams, Onsman, and Brown 2010). The CV of the extracted construct was 81.1% for TA assessment and 77.36% for peer assessment. There is no universally accepted limit for CV, and acceptable ranges vary; however, the literature states that the accepted limit in the humanities is a CV above 60% (Williams, Onsman, and Brown 2010). The cumulative variances and other key EFA indicators obtained from SPSS are summarised in Table 6.

Table 6. Summary of EFA results – DRIVE rubric.

Table 6 shows that, for both TA and peer marking, the factor loadings are above 0.8, indicating that the dimensions load strongly on the construct of fundamental teamwork skills. As a rule of thumb, factor loadings above 0.5 indicate a strong factor (Taherdoost, Sahibuddin, and Jalaliyoon 2022).
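The extraction steps reported above (eigenvalues of the correlation matrix, the eigenvalue-greater-than-one rule, variance explained, and component loadings) can be sketched with NumPy as follows. This is an assumed reconstruction of what principal components extraction does, not the SPSS procedure itself; the function name and simulated data are hypothetical, and eigenvector signs are arbitrary, so the sketch flips them to make loadings positive.

```python
import numpy as np


def principal_components(data):
    """PCA on the correlation matrix with the eigenvalue-greater-than-one (Kaiser) rule.

    Returns eigenvalues (descending), the proportion of variance each explains,
    the number of components retained, and the loadings of the retained components.
    """
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)          # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    retained = eigvals > 1.0                         # Kaiser criterion
    explained = eigvals / eigvals.sum()              # eigenvalues sum to p for a correlation matrix
    loadings = eigvecs[:, retained] * np.sqrt(eigvals[retained])
    loadings *= np.sign(loadings.sum(axis=0))        # eigenvector sign is arbitrary; make loadings positive
    return eigvals, explained, int(retained.sum()), loadings


# Simulated (hypothetical) one-factor data: 200 students, 5 dimensions.
rng = np.random.default_rng(1)
skill = rng.normal(size=(200, 1))
data = 0.9 * skill + 0.4 * rng.normal(size=(200, 5))
eigvals, explained, n_factors, loadings = principal_components(data)
print(n_factors, np.cumsum(explained)[:n_factors], loadings.ravel())
```

For the DRIVE data, a single retained component, a cumulative variance above 60%, and loadings above 0.5 would correspond to the criteria discussed in the text.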

In summary, the EFA revealed one dominant construct, fundamental teamwork skills, with a strong relationship between the rubric dimensions and the teamwork construct. These results indicate that the theoretical model, including the rubric dimensions and the DRIVE rubric's behavioural indicators, effectively evaluates fundamental teamwork skills.

Confirmatory factor analysis

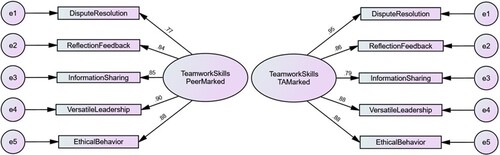

The EFA validated the DRIVE rubric's theoretical foundation, revealing a single theoretical construct, fundamental teamwork skills. With this foundation established, CFA was used to confirm whether the proposed model fits the data and to obtain further validity and reliability measures for the DRIVE rubric. The path diagram above shows the CFA model for both the TA and peer marking data in SPSS Amos. In this model, the rectangles contain the known rubric dimensions, into which the respective data sets from the implementation are entered; the oval contains the construct, ‘fundamental teamwork skills’, for both the TA and peer evaluation data sets; and the circles contain the measurement error terms. Running the model produced standardised factor loadings, which are shown on the arrows of the path diagram.

Model fit indices (CMIN/df, GFI, TLI, SRMR, and RMSEA) were computed to assess the model’s overall goodness of fit, and all values were within their acceptance levels (Kline 1998; Schumacker and Lomax 2016). This implies that the TA and peer assessment data fit the proposed DRIVE rubric model appropriately, indicating good construct validity. These model fit indices and their recommended values for good fit are given in Table 7.
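Two of the reported indices follow directly from the model chi-square, as the sketch below illustrates. It assumes the common N - 1 convention for RMSEA (some software uses N instead), and that the one-factor model fixes the factor variance to 1, so a five-indicator model has 15 observed variances and covariances minus 10 free parameters, giving 5 degrees of freedom. The chi-square value of 7.2 is hypothetical and is not taken from Table 7; GFI, TLI, and SRMR require the full model output and are not reproduced here.

```python
import math


def cmin_df(chi_square, df):
    """Relative chi-square (reported as CMIN/DF in Amos output)."""
    return chi_square / df


def rmsea(chi_square, df, n):
    """Root mean square error of approximation, assuming the N - 1 convention."""
    return math.sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


def one_factor_df(p):
    """Degrees of freedom of a one-factor CFA with p indicators (factor variance fixed to 1):
    p(p + 1)/2 observed variances and covariances minus 2p free parameters."""
    return p * (p + 1) // 2 - 2 * p


df = one_factor_df(5)                       # 5 for the five DRIVE dimensions
print(df, round(cmin_df(7.2, df), 2), round(rmsea(7.2, df, 202), 3))  # 5 1.44 0.047
```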

Table 7. CFA model fit indices.

CFA also allows several types of validity to be assessed, such as convergent validity and discriminant validity (Said, Badru, and Shahid 2011). Convergent validity indicates whether the indicators, through their standardised factor loadings, contribute to the construct being measured. A model demonstrates high convergent validity if (i) the standardised factor loadings are above 0.7, (ii) the average variance extracted (AVE) is above 0.5, and (iii) the construct reliability (CR) is greater than the AVE (Said, Badru, and Shahid 2011; Tentama and Anindita 2020). CR is calculated as the square of the sum of the standardised factor loadings divided by that same quantity plus the sum of the measurement errors, where each indicator's measurement error is one minus the square of its standardised factor loading; that is, CR = (Σλ)² / [(Σλ)² + Σ(1 − λ²)], where λ are the standardised loadings of the k indicators. AVE is calculated as the mean of the squared standardised factor loadings, AVE = Σλ² / k. As seen in Table 8, the standardised factor loadings, CR, and AVE for the model in this study satisfy these convergent validity conditions. Discriminant validity is not applicable here, as it is assessed only when multiple constructs are extracted. The implications of CR for reliability are discussed further in the reliability analysis section.
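The CR and AVE formulas and the three convergent validity conditions can be sketched as follows. The loadings below are hypothetical and are not the values in Table 8; the function names are illustrative. For a single factor with standardised loadings, this CR expression has the same form as McDonald's omega computed from the same loadings.

```python
def composite_reliability(loadings):
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    where each standardised indicator's error variance is 1 - loading^2."""
    squared_sum = sum(loadings) ** 2
    error = sum(1 - lam ** 2 for lam in loadings)
    return squared_sum / (squared_sum + error)


def average_variance_extracted(loadings):
    """AVE = mean of the squared standardised loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def convergent_validity(loadings):
    """Apply the three conditions: all loadings > 0.7, AVE > 0.5 and CR > AVE."""
    ave = average_variance_extracted(loadings)
    cr = composite_reliability(loadings)
    return all(lam > 0.7 for lam in loadings) and ave > 0.5 and cr > ave


# Hypothetical standardised loadings for the five DRIVE dimensions (not Table 8 values).
lams = [0.85, 0.88, 0.90, 0.82, 0.86]
print(round(composite_reliability(lams), 3), round(average_variance_extracted(lams), 3),
      convergent_validity(lams))  # 0.935 0.744 True
```

Note that squaring the sum of loadings (rather than summing the squares) is what distinguishes CR from AVE; summing the squares in the numerator would make the two quantities identical.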

Table 8. CFA (TA and peer marking): standardised factor loadings, AVE & CR.

Reliability analysis

Reliability indicates how consistently a measuring instrument measures. To obtain measures of internal consistency, an SPSS macro was applied to the TA and peer-marked datasets, giving McDonald's omega values of 0.921 and 0.923, respectively. As a rule of thumb, a McDonald's omega above 0.7 indicates a reliable instrument with good internal consistency (McNeish 2018). Additionally, the CR calculated during CFA indicates how consistently the items of a measurement tool measure the required construct (Said, Badru, and Shahid 2011). The CR for the ‘fundamental teamwork skills’ construct was 0.941 for TA marking and 0.920 for peer marking. A CR above 0.7 is considered to indicate good internal consistency (Tentama and Anindita 2020). These results demonstrate that the DRIVE rubric displayed good internal consistency.

In summary, the standardised factor loadings from EFA and CFA, the model fit indices, and the reliability measures are very close for the TA and peer assessment marks. This suggests that students were able to mark their peers on par with the trained TAs using the DRIVE rubric, a significant outcome that demonstrates the rubric’s ease and simplicity of use.

Discussion

In the teamwork literature, most researchers focus primarily on teamwork effectiveness, that is, on the characteristics of effective teams. However, it is equally important to consider individual teamwork skills within a team if the goal is to evaluate or enhance team members' overall teamwork capabilities, because complex factors such as personality traits, educational backgrounds, cultural influences, and past experiences can affect an individual's teamwork skills. To address this, researchers often concentrate on specific factors and investigate their influence on teamwork skills. It therefore becomes necessary to identify individual teamwork skills from the literature across multiple disciplines and break them down into fundamental teamwork dimensions. This task is highly complex, particularly when reviewing literature from diverse disciplines that approach teamwork skills differently. Hence, a significant outcome of this research is the identification and analysis of patterns in the literature from various disciplines, which aids a better understanding of fundamental teamwork skills and facilitated the design of the DRIVE rubric.

The exploratory factor analysis extracted a single construct, meaning that the rubric measured a single theoretical construct, fundamental teamwork skills, consistent with the aim of this study. High factor loadings imply that the rubric's dimensions strongly reflect the proposed fundamental teamwork skills construct (Williams, Onsman, and Brown 2010). Confirmatory factor analysis was then performed with structural equation modelling in SPSS Amos. The CFA of the TA and peer-marked data sets revealed: (i) good model fit indices (satisfying the cut-offs from the literature), indicating that the proposed model fitted the data well; (ii) high construct reliability (greater than 0.9), demonstrating good internal consistency; and (iii) high convergent validity, as the CFA model satisfied all the conditions for convergent validity. These CFA statistics demonstrate good construct validity, high internal consistency, and high construct reliability of the DRIVE rubric.

McDonald’s omega was preferred over Cronbach’s alpha as a measure of internal consistency because of the limitations of Cronbach’s alpha. McDonald’s omega was above 0.9 for both the TA and peer-marked data sets, indicating good internal consistency of the DRIVE rubric, and the composite reliability obtained from CFA (greater than 0.9) likewise indicated good internal consistency. Another significant outcome of this study was that the peer and TA evaluations yielded very similar results in terms of reliability, validity, EFA, and CFA. This suggests that students found the rubric easy to understand and could mark their peers on par with the trained teaching assistants. This was further supported by the qualitative feedback from the reflective exercise conducted at the conclusion of the teamwork activities, in which participants made comments such as ‘The DRIVE rubric was easy to understand’ and ‘The feedback from my peers motivated me to improve my skills further’.

As discussed in the literature review, teamwork skills are best developed through experiential learning activities. Consequently, educators are recommended to offer students opportunities for self-reflection through exercises or activities such as surveys, forms, and reports. Such reflection brings out the most from the DRIVE rubric, as it allows students to reflect on the five teamwork dimensions identified in the rubric and assess their progress, strengths, and weaknesses in each of these fundamental teamwork skills. This was reflected in students' qualitative feedback, with statements such as ‘I have worked in the industry earlier; hence my communication skills are fine; the DRIVE rubric emphasised the need to provide good feedback to my teammates’ and ‘The feedback from my peers motivated me to improve my skills further’.

Future investigations should consider tests not used in the current study. One such test is inter-rater reliability analysis, which checks the instrument's internal reliability. The intraclass correlation coefficient (ICC) indicates inter-rater reliability; however, such analysis requires multiple raters to mark the same students so that the consistency of their marking can be checked. Because of the large class size and the high workload involved in a single teaching assistant marking two separate sets of students, this analysis could not be carried out in this implementation. Further studies could be designed so that multiple markers mark the same set of students to obtain inter-rater reliability results. Another limitation of this initial implementation was that the analysis averaged the scores of the performance indicators within each rubric domain, so a detailed breakdown and individual validation of these performance indicators were not feasible. To address this, future iterations could conduct an 18-variable confirmatory factor analysis (CFA) using the scores of each performance indicator within each rubric domain, allowing a more in-depth exploration of the reliability of each performance indicator. The current study also did not consider gender and cultural influences on the rubric's performance, a potential avenue for future investigation. Furthermore, since the rubric addresses fundamental teamwork skills, future studies could evaluate its impact on students from other disciplines; the rubric has the potential to reach students outside engineering, and further work in this direction should be considered.
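If a future implementation has several markers rate the same students, one common inter-rater statistic is the Shrout and Fleiss ICC(2,1) (two-way random effects, single rater, absolute agreement). The standard-library sketch below is an illustration under that assumption, not a prescribed analysis for the DRIVE rubric; the function name is hypothetical, and the example ratings are the six-target, four-judge data commonly used to illustrate ICCs (Shrout and Fleiss 1979), for which ICC(2,1) is about 0.29.

```python
from statistics import mean


def icc_2_1(ratings):
    """ICC(2,1): ratings is a list of n rows (students), each with k raters' marks."""
    n, k = len(ratings), len(ratings[0])
    grand = mean(x for row in ratings for x in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(col) for col in zip(*ratings)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between-student
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between-rater
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols                  # residual
    msr = ss_rows / (n - 1)
    msc = ss_cols / (k - 1)
    mse = ss_error / ((n - 1) * (k - 1))
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


example = [[9, 2, 5, 8], [6, 1, 3, 2], [8, 4, 6, 8],
           [7, 1, 2, 6], [10, 5, 6, 9], [6, 2, 4, 7]]
print(round(icc_2_1(example), 2))  # 0.29
```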
A further potential limitation of the rubric is the combination of verbal and non-verbal elements within a single indicator. Qualitative feedback also suggested that a potential enhancement could be to have peers explicitly mark the roles that individuals were nominated to take within their teamwork activities. Subsequent iterations of the rubric could benefit from further refinement based on feedback from this initial implementation. Future efforts could also use focus groups to assess the influence of the DRIVE rubric on students’ awareness of, and self-reflection on, their strengths and weaknesses in fundamental teamwork skills, enabling a comprehensive evaluation of the rubric's impact on students’ ability to recognise and analyse their own fundamental teamwork skills.

Conclusion

This study addressed the research question: ‘What are the fundamental teamwork skills that can be used as dimensions to design a simple, novel, valid, and reliable engineering teamwork rubric that could help students’ peers evaluate exhibited teamwork skills when working collaboratively?’ A comprehensive literature review on teamwork skills identified the five rubric dimensions, namely Dispute management, Reflection & feedback, Information sharing (Effective communication), Versatile leadership, and Ethical behaviour. These fundamental teamwork skills were used to design a simple but novel teamwork rubric named DRIVE.

The DRIVE rubric was implemented in an electrical engineering postgraduate course, where TAs and peers used it to mark students’ fundamental teamwork skills. These two datasets were used to perform validity and reliability tests, including EFA, CFA, McDonald's Omega, and composite reliability. The results indicated good construct validity, convergent validity, and internal consistency for the DRIVE rubric. Furthermore, the feedback collected during the reflection exercise at the conclusion of the team activity indicated that students were able to reflect, without prompting, on their own strengths and weaknesses in terms of the five teamwork dimensions defined in the DRIVE rubric. The similar validity and reliability statistics obtained for the TA and peer markings suggest that students were able to assess their peers’ performance on par with the trained TAs. This finding is particularly promising given that the students initially lacked prior knowledge of teamwork attributes, and it highlights the potential of the DRIVE rubric as a valid and reliable teamwork assessment tool.
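As a rough illustration of how the omega, composite reliability, and convergent-validity statistics referred to above relate to CFA output, the sketch below computes them from the standardized loadings of a single-factor model. For a single factor with uncorrelated residuals, McDonald's omega and composite reliability share the form ω = (Σλ)² / ((Σλ)² + Σ(1 - λ²)), and the average variance extracted (AVE) is the mean squared loading. The function name `omega_and_ave` and the loading values are hypothetical and are not results from this study.

```python
def omega_and_ave(loadings: list[float]) -> tuple[float, float]:
    """Return (omega / composite reliability, AVE) for a single-factor model.

    Assumes standardized loadings in [0, 1] and uncorrelated residuals,
    so each indicator's residual variance is 1 - loading**2.
    """
    if not loadings or any(not 0.0 <= lam <= 1.0 for lam in loadings):
        raise ValueError("expected standardized loadings in [0, 1]")

    common = sum(loadings) ** 2                        # (sum of loadings)^2
    residual = sum(1.0 - lam ** 2 for lam in loadings) # sum of residual variances
    omega = common / (common + residual)

    # AVE: average share of each indicator's variance explained by the factor.
    ave = sum(lam ** 2 for lam in loadings) / len(loadings)
    return omega, ave


# Hypothetical standardized loadings for five domain scores on one factor.
loadings = [0.80, 0.75, 0.70, 0.85, 0.65]
omega, ave = omega_and_ave(loadings)
print(f"omega/CR = {omega:.2f}, AVE = {ave:.2f}")  # omega/CR = 0.87, AVE = 0.57
```

AVE is commonly compared against a 0.5 threshold when judging convergent validity. If a CFA model allowed correlated residuals, the denominator of omega would also need the residual covariances, which this sketch deliberately omits.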

The main contribution of this study to engineering education is the DRIVE rubric, a simple, valid, reliable, and novel teamwork tool that can be used in courses where evaluating students’ fundamental teamwork skills is a key learning outcome. This research could be extended to a more comprehensive implementation across various disciplines with large student cohorts.

Ethics statement

Participants were protected by concealing their personal information throughout the research process. They were informed that their participation was voluntary and that they could withdraw from the study at any time. Formal ethics approval (HC210223) was obtained from UNSW.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The de-identified data can be obtained by e-mailing a request to the corresponding author.

Additional information

Notes on contributors

Swapneel Thite

Swapneel Thite is a PhD student and an Education Developer of Innovation at UNSW, Sydney. Swapneel's research focuses on improving teamwork skills for students in engineering education. As a major goal of his research, he is designing a framework for enhancing teamwork skills in engineering students. Swapneel has won the PGC research candidate award for his outstanding contributions to the research community at UNSW.

Jayashri Ravishankar

Dr Jayashri Ravishankar is a Professor with the School of Electrical Engineering and Telecommunications and Engineering Associate Dean (Education) at the University of New South Wales (UNSW), Sydney. She is a multi-award-winning educator who has been institutionally and internationally recognised for the impact of her innovative, research-led, and highly effective teaching and leadership. Esteem indicators include Senior Fellowship of the Higher Education Academy awarded by Advance HE (UK), Scientia Education Fellow of UNSW, the Teaching Excellence Award from UNSW, and the Australian Awards for University Teaching (citation). Ravishankar has a deep interest in engineering education research and consistently implements evidence-based strategies using technology innovations and industry partnerships to improve students’ active learning. She regularly publishes her teaching innovations at flagship international education conferences and in Q1 journals.

Inmaculada Tomeo-Reyes

Dr Inmaculada Tomeo-Reyes is an education-focussed academic in the School of Electrical Engineering and Telecommunications at the University of New South Wales (UNSW), Australia. Her innovative education approaches have led to increased attendance, engagement, and sense of belonging, as well as recognition at institutional and international levels, including various teaching awards and Senior Fellowship of the Higher Education Academy (UK). Tomeo-Reyes is very interested in applying engineering education research to the courses she designs and runs, and consistently implements strategies to improve student engagement, collaboration, and active learning. She usually delivers very large first-year courses, so she has developed a passion for teaching first-year students and for teaching effectively at scale. She is also very committed to student retention and wellbeing, as well as to developing the teaching capabilities of casual staff.

Araceli Martinez Ortiz

Dr Araceli Martinez Ortiz holds the UTSA Microsoft President's Endowed Professorship in Engineering Education in the Department of Biomedical and Chemical Engineering. In this role, she serves as the Director of the Engineering Education department, where she is expanding her engineering education research portfolio and will lead a new graduate degree program in engineering education. Through her endowment from Microsoft, Araceli represents the University of Texas at San Antonio as an advisor on national and international intervention and research efforts involving women and other historically underrepresented populations in engineering. Araceli collaborates with NASA as a selected Faculty Fellow, serving as a policy advisor with NASA's MUREP and Office of STEM Engagement. Araceli was also recently named Director of the UTSA Pre-Engineering Education Program (PREP). Her research interests include studying the role of engineering as a curricular context and problem/challenge-based learning as an instructional strategy to facilitate students’ mathematics and science learning. She works with students from traditionally under-served populations and seeks to understand challenges and frame solutions for improving student motivation and academic readiness for college and career success.

References

- Al-Hammoud, Rania, Ada Hurst, Andrea Prier, Mehrnaz Mostafapour, Chris Rennick, Carol Hulls, Erin Jobidon, Eugene Li, Jason Grove, and Sanjeev Bedi. 2017. “Teamwork for Engineering Students: Improving Skills through Experiential Teaching Modules.” Proceedings of the Canadian Engineering Education Association (CEEA).

- Anseel, Frederik, Filip Lievens, and Eveline Schollaert. 2009. “Reflection as a Strategy to Enhance Task Performance After Feedback.” Organizational Behavior and Human Decision Processes 110 (1): 23–35. https://doi.org/10.1016/j.obhdp.2009.05.003.

- Barnett, Lisa M., David Stodden, Kristen E. Cohen, Jordan J. Smith, David Revalds Lubans, Matthieu Lenoir, Susanna Iivonen, Andrew D. Miller, Arto Laukkanen, and Dean Dudley. 2016. “Fundamental Movement Skills: An Important Focus.” Journal of Teaching in Physical Education 35 (3): 219–225. https://doi.org/10.1123/jtpe.2014-0209.

- Baryla, Ed, Gary Shelley, and William Trainor. 2012. “Transforming Rubrics Using Factor Analysis.” Practical Assessment, Research & Evaluation 17: 4.

- Berdanier, Catherine G. P. 2022. “A Hard Stop to the Term ‘Soft Skills’.” Journal of Engineering Education 111 (1): 14–18. https://doi.org/10.1002/jee.20442.

- Bonniga, Ravinder, and A. B. Saraswathi. 2020. “Literature Review of Cronbach Alpha Coefficient and McDonald's Omega Coefficient.” European Journal of Molecular & Clinical Medicine 7 (06): 2943–2949.

- Boone, Harry N., Jr., and Deborah A. Boone. 2012. “Analyzing Likert Data.” The Journal of Extension 50 (2): 48.

- Brewer, Edward C., and Terence L. Holmes. 2016. “Better Communication = Better Teams: A Communication Exercise to Improve Team Performance.” IEEE Transactions on Professional Communication 59 (3): 288–298. https://doi.org/10.1109/TPC.2016.2590018.

- Britton, Emily, Natalie Simper, Andrew Leger, and Jenn Stephenson. 2017. “Assessing Teamwork in Undergraduate Education: A Measurement Tool to Evaluate Individual Teamwork Skills.” Assessment & Evaluation in Higher Education 42 (3): 378–397. https://doi.org/10.1080/02602938.2015.1116497.

- Brookhart, Susan M. 2013. How to Create and Use Rubrics for Formative Assessment and Grading. ASCD.

- Chaiwichit, Chianchana, and Swangjang Samart. 2022. “The Empirical Research of Teamwork Competency Factors and Prediction on Academic Achievement Using Machine Learning for Students in Thailand.” Perspectives of Science and Education 4 (58): 540–556.

- Cho, Eunseong, and Seonghoon Kim. 2015. “Cronbach’s Coefficient Alpha: Well Known But Poorly Understood.” Organizational Research Methods 18 (2): 207–230. https://doi.org/10.1177/1094428114555994.

- Chowdhury, Faieza. 2018. “Application of Rubrics in the Classroom: A Vital Tool for Improvement in Assessment, Feedback and Learning.” International Education Studies 12 (1): 61–68. https://doi.org/10.5539/ies.v12n1p61.

- Chowdhury, Tahsin, and Homero Murzi. 2019. “Literature Review: Exploring Teamwork in Engineering Education.” Paper presented at the Proceedings of the 8th Research in Engineering Education Symposium, REES 2019-Making Connections, Cape Town, South Africa.

- Correia, Ana Paula. 2005. Understanding Conflict in Teamwork: Contributions of a Technology-rich Environment to Conflict Management. Citeseer.

- Cronbach, Lee J., and Richard J. Shavelson. 2004. “My Current Thoughts on Coefficient Alpha and Successor Procedures.” Educational and Psychological Measurement 64 (3): 391–418. https://doi.org/10.1177/0013164404266386.

- DiStefano, Christine, and Brian Hess. 2005. “Using Confirmatory Factor Analysis for Construct Validation: An Empirical Review.” Journal of Psychoeducational Assessment 23 (3): 225–241. https://doi.org/10.1177/073428290502300303.

- Dorado-Vicente, Rubén, Eloisa Torres-Jiménez, José Ignacio Jiménez-González, Rocío Bolaños-Jiménez, and Cándido Gutiérrez-Montes. 2020. “Methodology for Training Engineers Teamwork Skills.” Paper presented at the 2020 IEEE Global Engineering Education Conference (EDUCON), Porto, Portugal.

- Ebrahiminejad, Hossein. 2017. “A Systematized Literature Review: Defining and Developing Engineering Competencies.” Paper presented at the 2017 ASEE Annual Conference & Exposition, Columbus, Ohio.

- Elayyan, Shaher. 2021. “The Future of Education According to the Fourth Industrial Revolution.” Journal of Educational Technology and Online Learning 4 (1): 23–30. https://doi.org/10.31681/jetol.737193.

- Ellis, Aleksander P.J., Bradford S. Bell, Robert E. Ployhart, John R. Hollenbeck, and Daniel R. Ilgen. 2005. “An Evaluation of Generic Teamwork Skills Training with Action Teams: Effects on Cognitive and Skill-based Outcomes.” Personnel Psychology 58 (3): 641–672. https://doi.org/10.1111/j.1744-6570.2005.00617.x.

- Ercan, M. Fikret, and Rubaina Khan. 2017. “Teamwork as a Fundamental Skill for Engineering Graduates.” Paper presented at the 2017 IEEE 6th International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Tai Po, Hong Kong.

- Fernandez, Rosemarie, John A. Vozenilek, Cullen B. Hegarty, Ivette Motola, Martin Reznek, Paul E. Phrampus, and Steve W.J. Kozlowski. 2008. “Developing Expert Medical Teams: Toward an Evidence-Based Approach.” Academic Emergency Medicine 15 (11): 1025–1036. https://doi.org/10.1111/j.1553-2712.2008.00232.x.

- Fiedler, Fred. 2015. “Contingency Theory of Leadership.” Organizational Behavior 1: Essential Theories of Motivation and Leadership 232:1-2015.

- Gerbing, David W., and Janet G. Hamilton. 1996. “Viability of Exploratory Factor Analysis as a Precursor to Confirmatory Factor Analysis.” Structural Equation Modeling: A Multidisciplinary Journal 3 (1): 62–72. https://doi.org/10.1080/10705519609540030.

- Gitlow, Howard, and Lisa McNary. 2006. “Creating Win-Win Solutions for Team Conflicts.” Journal for Quality & Participation 29 (3): 20–26.

- Goodboy, Alan K., and Matthew M. Martin. 2020. “Omega Over Alpha for Reliability Estimation of Unidimensional Communication Measures.” Annals of the International Communication Association 44 (4): 422–439. https://doi.org/10.1080/23808985.2020.1846135.

- Greilich, Philip E., Molly Kilcullen, Shannon Paquette, Elizabeth H. Lazzara, Shannon Scielzo, Jessica Hernandez, Richard Preble, Meghan Michael, Mozhdeh Sadighi, and Scott Tannenbaum. 2023. “Team FIRST Framework: Identifying Core Teamwork Competencies Critical to Interprofessional Healthcare Curricula.” Journal of Clinical and Translational Science 7 (1): e106. https://doi.org/10.1017/cts.2023.27.

- Grocutt, A., A. Barron, M. Khakhar, T. A. O'Neill, W. D. Rosehart, R. Brennan, and S. Li. 2020. “Development of the Individual and Team Work Attribute Among Undergraduate Engineering Students: Trends Across 4 Years of Assessment.” Proceedings of the Canadian Engineering Education Association (CEEA).

- Gusenbauer, Michael, and Neal R. Haddaway. 2020. “Which Academic Search Systems are Suitable for Systematic Reviews or Meta-Analyses? Evaluating Retrieval Qualities of Google Scholar, PubMed, and 26 Other Resources.” Research Synthesis Methods 11 (2): 181–217. https://doi.org/10.1002/jrsm.1378.

- Hadley, Kevin, Laura Beckmann, and Kenneth Reid. 2016. “General Strategy for Development of Teamwork Skills.” Paper presented at the 2016 IEEE Frontiers in Education Conference (FIE), Erie, Pennsylvania.

- Halfhill, Terry R., and Tjai M. Nielsen. 2007. “Quantifying the ‘Softer Side’ of Management Education: An Example Using Teamwork Competencies.” Journal of Management Education 31 (1): 64–80.

- Hardavella, Georgia, Ane Aamli-Gaagnat, Neil Saad, Ilona Rousalova, and Katherina B. Sreter. 2017. “How to Give and Receive Feedback Effectively.” Breathe 13 (4): 327–333. https://doi.org/10.1183/20734735.009917.

- Hastie, Carolyn, Kathleen Fahy, and Jenny Parratt. 2014. “The Development of a Rubric for Peer Assessment of Individual Teamwork Skills in Undergraduate Midwifery Students.” Women and Birth 27 (3): 220–226. https://doi.org/10.1016/j.wombi.2014.06.003.