?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.

?Mathematical formulae have been encoded as MathML and are displayed in this HTML version using MathJax in order to improve their display. Uncheck the box to turn MathJax off. This feature requires Javascript. Click on a formula to zoom.ABSTRACT

We propose an adaptive sequential testing procedure for clinical trials that test the efficacy of multiple treatment options, such as dose/regimen, different drugs, sub-populations, endpoints, or a mixture of them in one trial. At any interim analyses, sample size re-estimation can be conducted, and any option can be dropped for lack of efficacy or unsatisfactory safety profile. Inference after the trial, including p-value, conservative point estimate and confidence intervals, are provided.

1. Introduction

Clinical trials are expensive. Moore et al. (Citation2018) analyzed the costs for the pivotal trials for novel therapeutic agents approved by the US Food and Drug Administration during the period of 2015–2016: “Trials designed with placebo or active drug comparators had an estimated mean cost of $35.1 million (95% CI, $25.4 million-$44.8 million)”. The cost for the most expansive trial in this report was $346.8 million. Many efforts have been made to reduce the cost. One category of such efforts is the multi-arm-multi-stage (MAMS) design. Such a design essentially combines several trials into one, and multiple treatments are compared to a shared single control arm, hence eliminating the need for several control arms in separate trials and reduces the total sample size.

The main advantage of MAMS is cost efficiency (Freidlin et al. Citation2008 ; Jaki and Hampson Citation2016; Jaki and Wason Citation2018) from this sample size reduction. Other benefits of reduced sample size include the shortening of trial duration and the reduction of burden of patient recruitment, especially in trials that require a large number of patients per treatment arm, or in rare diseases where the enrollment is extremely difficult due to small patient population. For example, the European Medicines Agency issued a “Call to pool research resources into large multi-centre, multi-arm clinical trials to generate sound evidence on COVID-19 treatments” (19 March Citation2020). The MAMS design has been adopted in practice, e.g., i) The multi-arm optimization of stroke thrombosis (National Institute of Health Citationn.d.); ii) A 3-arm multi-center, randomized controlled study comparing transforaminal corticosteroid, transforaminal etanercept and transforaminal saline for lumbosacral radiculopathy (clinicaltrials.gov, NCT00733096).

The main statistical challenges in such trials include: i) Family-wise error (FWE) control with simultaneous testing of multiple hypotheses at multiple time points. An optimal control is such that the FWE never exceeds the nominal error level but can be equal to the nominal level under certain conditions. A conservative control is such that the FWE never reaches the nominal level under any situation. The Bonferroni method is an example of conservative control. Conservative control will lead to reduced power. Hence, methods that achieve less conservative or optimal control are more preferable; ii) Sample size and power calculation (as always, such calculations involve assumptions on the effect size) which are important to trial planning; iii) The assumptions for sample size calculation could be inaccurate (due to uncertainty about effect sizes) and adaptive measures, such as sample size re-estimation (SSR), may be desirable to achieve adequate power. To obtain a proper new sample size, a method for calculating conditional power will be necessary. Further, FWE must be adequately controlled when adaptations are applied to the trial; iv) Inference must be made after the completion of the trial. Hence, p-values, estimates of the effect sizes, confidence intervals are desirable. v) When adaptations are made to the trial, inferences will be more complicated, but their relevance and importance is not reduced; vi) Computations with multiple tests at multiple time points could be complicated and very time consuming. A MAMS procedure won’t be practical without efficient means of computations (e.g., Ghosh et al. Citation2017).

In addition to the need for investigating multiple treatments in one trial as in MAMS, there are also other types of trials that share the same statistical challenges as MAMS. These include trials that investigate multiple sub-populations (FDA guidance [population enrichment], 2019), and/or multiple endpoints (FDA guidance [adaptive design], 2019), in one trial. Statistical solutions for MAMS can also be applied to such trials. Sequential designs and adaptive sequential designs that include multiple options such as multiple treatment (e.g., doses/regimens), multiple sub- populations (FDA guidance [population enrichment], 2019), and/or multiple endpoints (FDA guidance [adaptive design], 2019), may be more generally named as (adaptive) multiple comparison sequential designs (AMCSD/MCSD). In this article, we propose such a procedure that addresses all of the above-mentioned statistical issues.

2. Background and features of proposed method

2.1. Background

Take a trial that tests multiple doses as an example for MAMS, other situations can be dealt with similarly. Suppose that the trial includes dose groups and a control arm, and a total of

interim/final analyses. Let

(m = 1,…, M) be the efficacy for each dose being considered, larger

indicates better efficacy. Let

. Let the related hypotheses for each dose comparison be: null hypothesis

:

, and one-sided alternative hypothesis

:

. The overall null hypothesis is

:

. Or equivalently,

. The overall alternative hypothesis is

, at least one

, or equivalently,

. At the

-th interim analysis, each of the

parameters

, is associated with an estimate

, and the standard error

,

. The comparison of each dose and the control is associated with a Wald statistic

, or equivalently, a score function

. At each interim analysis, these Wald statistics or score functions form an

-dimensional random vector, with a multi-variate normal distribution. Hence, there will be a total of

Wald statistics or score functions. Thus a MAMS trial involves the testing of these

test statistics and the conditional probabilities associated with the adaptive decisions during the trial. A fundamental challenge is to control family-wise error (FWE). A major distinction between the Wald statistics and the score functions is that the score function has independent increment and is approximately a Brownian motion (Jennison and Turnbull Citation1997). The score function vectors form a multi-dimensional Markov process with independent increment. The research into the MAMS design can be traced at least back to 1989 (Bauer et al. Citation2015). Several methods employ Dunnett’s multiple-testing procedure (on each

-dimensional random vector of the Wald statistics) in some form (Magirr et al. Citation2012; Wason et al. Citation2016, Citation2017). Stallard and Friede (Citation2008) uses the score function and Dunnett’s procedure (on each

-dimensional random vector of the score functions) to construct a group-sequential design where a set number of treatment options are dropped at each interim analysis. The null hypothesis is rejected if the test statistic is above a predefined efficacy threshold. It requires the number of doses to be dropped at any given interim analysis to be pre-specified, hence limiting its flexibility. Bretz et al. (Citation2006) used closed testing procedures and combination tests to control the type-I error whilst allowing many modifications to be made at the interim. The control of FWE in these methods is a two-level procedure, one level controls the FWE for each

-dimensional random vector at each interim analysis, then the combined FWE at all interim/final analyses is controlled. A drop-the-loser approach proposed by Chen et al. (Citation2010) utilizes the multi-variate normality of the Wald statistics and constructed a sequential design with interim analyses that is parallel to the usual group sequential design for comparing one experimental treatment and a control (e.g., O’Brien and Fleming Citation1979; Pocock Citation1977). It requires the assumption that the covariance coefficient to be ½, this limits the types of trials the method can be applied to. This method does not use multi-testing procedures, such as the Dunnett’s procedure or closed testing procedure to control the FWE. All the above-mentioned methods do not provide p-values and estimates, and there are no sample-size re-estimations. Gao et al. (Citation2014) considers an adaptive group sequential design using a Markov process (formed by the score functions) approach. The procedure does not use the Dunnett method or closed testing procedure. Instead, it uses Markov transition probabilities for the calculations of the critical boundaries, type I error control and sample size re-estimation. It provided exact FWE control in the strong sense, sample size calculation, and sample size re-estimation. It is limited to cases in which the covariance matrix of the score functions are known (it does include cases in which the covariance coefficient is ½ such as in Stallard and Friede Citation2008; or; Chen et al. Citation2010). Gao et al. (Citation2014) only included p-values but not parameter estimates after the trial. Both Chen et al. (Citation2010) and Gao et al. (Citation2014) use the correlations of the

- dimensional random vectors to control the FWE, instead of the two level FWE control such as those by Bauer et al. (Citation2015),Wason et al. (Citation2016), Wason et al. (Citation2017), Magirr et al. (Citation2012), Stallard and Friede (Citation2008), Bretz et al. (Citation2006). All of previous literature, including Chen et al. (Citation2010) and Gao et al. (Citation2014) investigates the properties of

separate

- dimensional random vectors (Chen et al. Citation2010 uses the Wald statistics, and Gao et al. Citation2014 uses the score functions). The algorithm in Gao et al. (Citation2014) uses the Markov transition probabilities which involves iterative multi-dimensional integrals and is very time-consuming (see Ghosh et al. Citation2017). Ghosh et al. (Citation2017) proposed new computing algorithm which linearized the multi-dimensional integrals and greatly reduced the time for the computations, but the computing code is very complicated.

2.2. Features of proposed method

In the theory of GSD that compares a test treatment and a control, the recursive algorithm of Armitage et al. (Citation1969), which involves iterative integrals, has been essential for the derivation of critical boundary, sample size/power calculation (e.g., Jennison and Turnbull Citation2000). In a GSD, a score function (is the information time) can be created, which is approximately a Brownian motion (Jennison and Turnbull Citation1997) and also a one-dimensional Markov process. All the methodologies regarding the GSD can be explained using intuitions about the trajectories of the score function as a Brownian motion/Markov process (e.g., Gao et al. Citation2008, Citation2013). With this intuition, a Brownian trajectory corresponds to the score function curve of an infinitely long clinical trial. A GSD involves

information time points

, score function values

can be obtained, which has a joint multivariate normal distribution, with a

covariance matrix. The entire theory of GSD can be described using this multivariate normal distribution and some calculus (see online supporting material, section 1), without using the recursive algorithm. We refer to this as the Brownian motion approximation (BMA) approach for GSD. In this article, we use a parallel BMA approach on MCSD/AMCSD and improve upon Gao et al. (Citation2014). For the comparison of the

-th dose (suppose that there are

doses) and the control, a score function

can be created. Suppose that there are

interim analysis/final analyses at information time points

. There will be

score functions,

,

. These score functions have a joint multivariate normal distribution, with an

dimensional covariance matrix. All previous methods on MAMS in the literature use some kind of iterative algorithm (e.g., see citations of literature in previous section). By using the multivariate normal distribution of these

score functions, our proposed method does not use any iterative algorithm, and is thus different from all methods in the literature. The proposed procedure is entirely parallel to that of the BMA approach for GSD/ASD (see online supporting material, section 1): i) Similar sample size and power calculations (i.e., using integration over multivariate normal distribution); ii) similar type I error control using critical boundaries(i.e., using integration over multivariate normal distribution); iii) Similar SSR using conditional power; iv) Similar type I error/FWE control when there is sample size change (i.e. maintaining conditional type I error/FWE, as in Gao et al. Citation2008; The conditional type I error/FWE are obtained by using integration over multivariate normal distribution); v) Similar logic for calculating inferences (i.e., using the inverse of a monotone function to obtain point estimate, p-value, and confidence interval, as in Gao et al. Citation2013); vi) Similar logic (using backward image, Gao et al. Citation2013) for obtaining inferences when there was sample size change.

With (and because of) this algorithm, the proposed procedure possesses the following features: i) FWE control is optimal. The FWE equals to the nominal error level in the special case when the off-diagonal elements of the covariance matrix are known (e.g., off diagonal elements are 0.5, or zero) and the “play the winner” rule (i.e., selecting the best observed treatment option) is applied for adaptation and for hypothesis testing. The FWE is less than the nominal level (i.e., conservative) in all other situations. ii) Sample size and power calculation are exact for the special case and conservative in other situations; iii) Sample size re-estimation is precise in the special case when the observed efficacy equals the true efficacy. Conditional FWE is controlled exactly in the special case and conservative otherwise, hence the overall FWE is controlled; iv) Conservative inferences: Exact inferences require knowledge of the configuration of the vector and the covariance matrix. But neither is known in actual trials and exact inferences are not possible. Conservative estimates such as p-values (larger than the true value), point estimate (smaller than the true value), and confidence interval (wider than the exact interval) can be obtained with the proposed procedure to make valid conclusions about the efficacy. v) Conservative inferences with adaptation (such as SSR and dropping some comparisons (e.g., ineffective doses) can also be obtained; vi) Computation efficiency: Gao et al. (Citation2014) used iterative multiple integration to calculate probabilities. The iterations are very time consuming (see Ghosh et al. Citation2017, for discussions). Because the new proposed algorithm does not use iterations, it does not require long computation time. Sample size and power calculation takes about 2–3 seconds. The algorithm is also much simpler than that of Ghosh et al. (Citation2017). vii) By using Slepian’s lemma (Huffer Citation1986; Slepian Citation1962), the design can be applied to cases in which the covariance coefficients are not known. Hence, the design can be applied not only to dose selections, but also to trials involving different regimens, drugs, or population enrichment. Further, conservative point estimates and confidence intervals, with or without sample size change, are provided. viii) Lastly, all previous methods only test the overall null hypothesis

that at least one experimental treatment is superior to the control. Our method includes a closed testing procedure (similar to an example that uses a modified Dunnett’s testing in Marcus et al. Citation1976) that can test all the

-level null hypotheses

(a

contains

-elements) for all

, and can be used to reject multiple individual null hypotheses

. All

-level testing will use a common

-level critical boundaries.

We refer our method as the Brownian motion approximation (BMA) approach for MCSD, which reduces to GSD/ASD when . The BMA approach does not require in-depth knowledge of either Brownian motion or Markov processes. It only uses calculus and four basic (widely known) properties of Brownian motion: i) It is additive:

; ii) It has independent increment; iii)

; iv) For

,

has a joint multivariate normal distribution (e.g., Jennison and Turnbull Citation2000; Whitehead Citation1997). An MCSD/AMCSD design is an adaptive design. The FDA guidance on adaptive designs (Citation2019) summarized the major potential advantages of adaptive designs: a) Statistical efficiency; b) Ethical considerations; c) Improved understanding of drug effects; d) Acceptability to stakeholders. In the same guidance, the FDA (2019) recommended that three statistical principles should be satisfied: a) Controlling the Chance of Erroneous Conclusions (type I error control); b) Estimating Treatment Effects (valid inference, including point estimate, two-sided confidence interval, and p-value); c) Trial Planning (including sample size/power calculation); (The FDA guidance (Citation2019) also included a fourth principle, which is operational: d) Maintaining Trial Conduct and Integrity). Our method, the MCSD/AMCSD, satisfies all the three statistical principles in the FDA guidance (2019). The MCSD/AMCSD is supported by the DACT (Design and Analysis for Clinical trials) software at https://www.innovativstat.com/software.html (The software is free for academic researchers). Computation codes are available upon request.

3. Method

3.1. Score function and GSD

To demonstrate the similarities between GSD and MCSD, we first describe the GSD using BMA. In a GSD, one experimental treatment is compared with a control. Let be the parameter of interest, larger

indicates better efficacy, and the null hypothesis is

:

, and the alternative hypothesis is

:

, Let

be an unbiased estimate, and

the standard error. Then,

is the Wald statistics, and the score function is

. The Fisher’s information time is

. Approximately,

(Jennison and Turnbull Citation1997). The GSD (O’Brien and Fleming Citation1979; Pocock Citation1977) was based on the Wald statistics. Interim analyses are planned, to be performed at information time points

. Critical boundaries

are chosen such that

is rejected in favor of

if the event

is observed (

is the Wald statistics at

). Type I error is controlled by requiring

.With the BMA, the score functions are

. Let

and name the

‘s as the “exit” boundaries. The rules of a GSD can be equivalently stated as

. The random vector

has a multivariate normal distribution, with a

covariance matrix. All probabilities such as sample size and power calculation, type I error, can be calculated using multivariate integration on

without using the recursive algorithm (Armitage et al, Citation1969). A brief description is provided in the online supporting material.

3.2. The proposed MCSD

3.2.1. The vector of the score functions

Each of the parameters

, is associated with an estimate

(at any information time

) and a score function

.

3.2.2. Hypothesis testing and type I error control

The trial will include a total of K analyses including the interim analyses and the final analysis. Let be the information time for the

th component

at the i-th interim analysis. For each,

. This forms an information vector at each interim analysis,

, i = 1, …, K. Type I error control is achieved by selecting critical boundaries

, …,

such that the null hypothesis

is rejected if

is observed. The type I error is controlled if

, …,

satisfy the condition that

.

3.2.3. The closed testing procedure

3.2.3.1. The L-level tests and critical boundaries

The closed testing procedure has been used to control FWE for testing (e.g., Bretz et al. Citation2006). We propose the use of the closed testing procedure for a different purpose. Marcus et al. (Citation1976) included an example for closed testing procedure by modifying Dunnett (Citation1955) in which multiple individual null hypotheses can be rejected using different levels of rejection criteria. Such a procedure is more powerful than a single criterion that only tests

. The proposed closed testing procedure is similar to this example. With this closed testing procedure, our proposed design is more powerful than the methods in the literature (e.g., Bretz et al. Citation2006; Chen et al. Citation2010; Gao et al. Citation2014; Stallard and Friede Citation2008). The closed testing procedure is a multi-step procedure: Step 1: Test

(level M test); Step 2: Test

for all Q that contains M −1 numbers from {1, …, M} (level M −1 test); ….; Step M −1: Test

, for all Q that contains 2 numbers from {1, …, M} (level 2 test); Step M: Test

:

(level 1 test) for all

. These tests will be referred as level

tests for each 1 ≤ l ≤ M. If

is rejected, the overall alternative hypothesis is claimed that

. A null hypothesis

can be rejected if and only if all l- level (1 ≤ l ≤ M) tests containing

have been rejected. At each level

, the l -level FWE is controlled by the sequential testing critical boundaries

, …,

, derived with BMA procedure. All of the published literature test

only.

For simplicity of notations, we assume that for each i, . Such an assumption may not always hold (e.g., if the efficacy endpoint is time to event, or binary), those situations result in more complicated notations, but does not invalidate the procedure. Interim analysis will be held at

. Let

. For a level l test, type I error control is achieved by selecting critical boundaries

, …,

such that the null hypothesis

is rejected if for any 1 ≤ i ≤ K such that

for any m ∈ Q. The

, …,

satisfy the condition that

, where each

(t) ~ N(0, t) is a Brownian motion. So M groups of critical boundaries

, … ,

will be selected, with

being the critical boundaries for each l level testing. The critical values can be chosen such that

. So if (

≥

for a particular m at some

, then (

) ≥

, for all q < l.

3.2.3.2. Using the closed testing procedure to potentially reject multiple null hypotheses

Similar to the example in Marcus et al. (Citation1976), it is possible to reject more than one individual null hypotheses with the closed testing procedure. For example, assume M = 3, and the trial is terminated at the I -th interim analysis, with . Let

be the Wald statistics. Assume that

, and

,

, and

. Without the closed testing procedure, only

:

can be rejected (and

, implicitly maybe), the individual null hypotheses

,

could not have been rejected. With the closed testing procedure, all of

,

, and

can be rejected (this is very similar to the example in Marcus et al. Citation1976). For simplicity, we will only discuss the M level test. Other l level tests are similar. To simplify the notations, we denote

.

3.2.4. Critical/Exit boundary determination

3.2.4.1. General requirement

Let . Let

, i = 0, 1, …, K. The

’s are “information fractions”. Usually, for large samples, sample sizes are proportional to the information times (e.g., Whitehead Citation1997). Let

,

, and

. Then

is an M × K dimensional vector with multivariate normal distributions. Let the joint density function for

be

(where

). The construction of the

dimensional covariance matrix

is discussed in the online supporting material. Under the null hypothesis,

. To control the type I error, it is sufficient that

)} be satisfied. Then

The use of the M × K dimensional vector is motivated by the Markov process theory and independent increment property of the score functions. It converts the use of Markov process theory into the use of multi-variate normal distribution. It is the foundation of all the calculations used in the proposed method. It greatly simplifies the algorithms of those used in Gao et al. (Citation2014) and Ghosh et al. (Citation2017). The integral over the multi-variate normal distribution can be easily carried out using the R-function pmvnorm. Details of the calculations are provided in the online supporting material. The l -level critical boundaries for the closed testing procedure,, …,

, are derived similarly.

3.2.4.2. O’Brien-Fleming boundary

The O’Brien-Fleming boundaries satisfy , which is equivalent to

). Let

. Then

is a decreasing function of e. Let

. Then

, i = 1, …, K are the desired O’Brien-Fleming boundaries.

3.2.4.3. α-spending functions

Let . This is the event that the critical boundaries were not crossed at any interim analysis before

, and was crossed at

. Note that the events

are mutually exclusive, or that

for

. Hence,

. Under the null hypothesis, observing an

means the occurrence of type I error. Let

. Then

is the type I error “spent” at

. Therefore, the

’s need to be chosen such that

. The critical boundaries

can be selected successively as detailed in the online supporting material.

3.2.5. Power calculation and sample size determination

Suppose that the “information fraction” ’s, i = 1, …, K, and the critical boundaries

, i = 1, …, K, have been determined. Let the information time at the final analysis be denoted as T, and let

,

. Let the assumed efficacy parameters under the alternative hypothesis be

. The power with information time T is

Details of the calculation are provided in the online supporting material. is an increasing function of T. The desired power of the trial can be obtained by choosing

. Sample size

can then be determined by the relationship that

for some distribution specific constant

.

3.3. The general situation and Slepian’s lemma

The above discussed calculations of type I error, exit/critical boundaries, sample size/power assume that the covariance matrix is known, which is possible only in the special situation of treatment selection with a common control, and the variable is normally distributed. We refer all other situations as the general situation, such as treatment selection with non-normally distributed variable (e.g., time-to-event), population selection, endpoint selection, or mixed selections (2019). The FDA guidance noted that “It may be particularly difficult to estimate Type I error probability and other operating characteristics for designs that incorporate multiple adaptive features.” The FDA guidance (adaptive designs, 2019) hypothesized that, for the general situation, “it can be argued that assuming independence among multiple endpoints will provide an upper bound on the Type I error probability.” A mathematically rigorous proof for this hypothesis is provided by using the Slepian’s lemma (Huffer Citation1986; Slepian Citation1962). Hence, conservative statistics can be obtained that satisfy the three statistical principles in the FDA guidance (Citation2019): upper bound of type I error that does not exceed the desired level, conservative lower confidence limit and upper bound of p-value. These calculations are confirmed through simulations shown in later sections.

3.3.1. Slepian’s lemma

Slepian’s (Citation1962) (Huffer Citation1986; Slepian Citation1962) : Suppose that and

are Gaussian vectors and that

,

, i = 1, … ,n, and

for i ≠ j. Then the following inequality holds for all real numbers

:

.

3.3.2. Applications

For multiple dose comparison with normally distributed endpoint and a common control, the covariance matrix is known (see online supporting material). In all other cases (such as in multiple treatment comparison in which the distribution of the efficacy variable is not normally distributed, or trials with population enrichment, and/or multiple primary endpoint comparison), the covariance matrix is generally unknown. Let where

for m ≠ l (i.e.

’s are mutually independent Brownian motions). Let

. Let

be the

’s and

the

’s in Slepian’s lemma. For all potential options/comparisons to be considered in a multiple comparison trial, it is reasonable to assume that

, or that the endpoints are non-negatively correlated. This assumption is in agreement with the opinion that (FDA guidance,Citation2019) “Most secondary endpoints in clinical trials are correlated with the primary endpoint, often very highly correlated). Then

. Hence, the conditions for Slepian’s lemma are met, and

. So if the critical boundaries

are chosen such that

, then

as well. Hence the type I error is controlled for the MCSD.

3.4. Adaptive Multi-comparison Sequential Design (AMCSD)

3.4.1. Sample size modification

To facilitate the sample size change discussion and dose dropping (or dropping options for lack of efficacy), we use the superscript(1) to indicate statistics, parameters in the original design, and the superscript(2) to indicate statistics, parameters after the sample size change. For example, the information time points for interim analysis in the original design will be ,

,

, critical boundaries will be

, etc. Information times and critical boundaries after sample size change will be

,

, respectively. The original number of comparisons will be

, and the new number will be

.

3.4.2. Conditional power and conditional type I error

The conditional power and type I error calculation follow almost exactly as in Gao et al. (Citation2008), except the notations between multi-dimensional vector (in this article) and a single Brownian motion (Gao et al. Citation2008). And suppose that at the -th interim analysis,

has been observed. Let

. Let

, and

. The conditional power under the alternative hypothesis

can be calculated as,

This can be calculated as an integration with a multi-variate normal distribution. The details of the calculation are provided in the online supporting material. The conditional type I error is obtained by setting .i.e.

.

3.4.3. New conditional power, new conditional type I error, and new sample size

The new conditional power and type I error calculation follow almost exactly as in Gao et al. (Citation2008), except the notations. Suppose that after the -th interim analysis, the number of options (e.g., doses, sub-groups, regimens, sub-populations, or endpoints) will be reduced from

to

, and the sample size will be modified. Denote the remaining options as

. This is a rearranged subset of

. For simplicity of notations, we assume that the remaining indices are 1, …,

. Suppose that the remaining interim analysis are re-scheduled at information times

… <

. Let

… ,

be the new exit boundaries. Let

. Let

. The new conditional power is

This can be calculated as an integration with a multi-variate normal distribution. The details of the calculation are provided in the online supporting material.

is an increasing function of

. Let

. Then, the conditional power will be 1 − β if a new sample size corresponding to

is selected. The conditional type I error is obtained by setting

. Let

. To control type I error, it is sufficient to choose new critical boundaries

,such that

. Hence, the new boundaries (which depends on the choice of

) and

should satisfy two conditions: i)

; ii)

should provide conditional power 1 − β. Both the new critical boundaries and the new sample size can be obtained through an iteration procedure.

3.5. Inference

3.5.1. Point estimate and lower confidence limit for the option with the best efficacy

The parameter and confidence limit calculation follow the same logic as in Gao et al. (Citation2013), except the notations (i.e., Gao et al. Citation2013 used the inverse of the monotone function , while the monotone function

is used in this article). Suppose that the trial terminated at I-th interim analysis (information time

), with

. Let

. Let

, which is the maximum of the observed Wald statistics at

. Let

(The details of the calculation are provided in the online supporting material). Let’s rearrange such that

. For purpose of estimation, it’s reasonable to assume that

. Thus, it would be desirable to estimate

. An unbiased estimate of

requires the knowledge of the configuration of

which is generally unknown. We attempt to obtain a conservative estimate of

, which would be sufficient for regulatory purposes. Let

. Then

is an increasing function of θ.

is the p-value for the M-level testing (It can be shown that

is uniformly distributed on

). Let

.

would be a conservative estimate for

, since this estimate assumes

. For the same reason,

is a conservative estimate for the lower confidence limit for

. For convenience of discussion, we denote

.We note that

is consistent with the hypothesis test, in the sense that

0 if and only if

. A conservative estimate of

can be obtained similarly using the M −1 level test critical boundary. If desired, this process can continue until

is obtained.

3.5.2. Upper confidence limit

The calculation of the upper limit of the confidence interval uses the inverse of the monotone function . For the upper limit of the confidence interval, conservativeness means an over-estimate (which then results in a wider confidence interval). Unlike the lower confidence limit, which was obtained using an

-dimensional random vector, the upper confidence limit will be obtained using a one-dimensional Brownian motion. For this purpose, let W(t) = B(t) + θt, and

,

be the exit and critical boundaries for the M-level test. Let

(The details of the calculation are provided in the online supporting material.) Then is an increasing function of θ. Note that

is the function for estimating unbiased estimate for a usual group sequential procedure between two arms (Gao et al. Citation2013). Since

are smaller than

,

, and

. Therefore,

is a “conservative” estimate for the upper confidence limit.

3.6. Inference with adaptation

3.6.1. Lower confidence interval limit and p-value

Suppose that the trial terminated at -th interim analysis (information time

), with

. Let max

. Let

be ranked such that

. Let

. There is a unique

, and a unique

, such that

The pair is the (

level) multi-dimensional backward image of

, given

. Let

. Let

. Then

is the Hayter (Citation1986) type lower limit boundary for

, and

is a conservative point estimate for

. For convinience of discussion, we denote

. The p-value is obtained as

.

3.6.2. Upper confidence limit

Let W(t) = B(t) + θt, and ,

be the planned exit and critical boundaries for the

-level test, and

be the new exit boundaries. There is a unique

, and a unique

, such that

The pair is the one-dimensional backward image of

, given

. Let

(The details of the calculation are provided in the online supporting material.) Where is the covariance matrix of

. Then

is an increasing function of θ. Similar to the estimation without sample size change,

is a “conservative” estimate for the upper confidence limit.

3.7. Repeated sample size modification

Repeated sample size modifications can be conducted. Successive backward images will need to be obtained for inference. Details are provided in the online supporting material.

4. Examples and simulations

We provide examples of the design of multiple dose comparisons, simulation results for type I error control, point estimate and two-sided confidence interval coverage. We also provide examples on how the AMCSD procedure may be applied in clinical trials. All calculations, such as critical boundaries, sample size, conditional power and conditional type I error, point estimate, confidence interval, sample size re-estimation, power and type I error simulations are available with DACT.

4.1. Designing examples and inference simulations

4.1.1. Critical boundary selection and type I error control

We consider a design for a trial that compares two doses of a new drug with a common control (), with normally distributed efficacy endpoint (), and another example with non-normally distributed endpoint (). There will be one interim analysis at information fraction = 1 ⁄ 2, and a final analysis at

= 1. The boundary is an O’Brien-Fleming type critical boundary.

Table 1. Two doses, two looks, common control and normally distributed endpoint.

Table 2. Critical boundary with two treatments and two looks, any distribution.

Table 3. Type I error control with two doses and with two looks.

Type I error simulations are performed for both normally distributed endpoint and survival analysis using the O’Brien-Fleming boundaries from , for a study that enrolls 100 patients randomized to the two dose groups and the control group in a ratio of 1:1. For the normally distributed variables, the control has an N(0, 1) distribution, the dose groups have an N(, 1) and an N(

,1) distribution, respectively. The repetition for the simulation is 100,000. The type I error is the rejection rate when the Z-score associated with a dose with

= 0 crossed the critical boundary. For survival analysis, the planned number of events is 300. The efficacy of the different dose groups are (

,

). Type I error is the rejection rate when the Z-score associated with a dose with

crossed the critical boundary. The repetition for the simulation is 10,000.

4.1.2. Point estimate and confidence interval simulations

Simulations are performed for dose selection studies. The selection rule for the simulations in is for a two (active) doses (with one control) design. is estimate for the dose with the largest Wald statistics at the termination of the trial.

is the true efficacy corresponding to the selected

. The bias is

. The point estimates and confidence interval coverage for

are presented. In our simulations, sample size re-estimation is performed at the interim analysis.

Table 4. Point estimates and confidence interval coverage.

All the simulation results agree with the mathematical derivations: i) The rate of rejecting the null hypothesis is consistent with the lower boundary of the confidence interval (not shown in the table); ii) The point estimate is conservative (the median bias is negative); iii) the coverage of the two-sided CI is conservative; vi) The upper limit of the confidence interval is conservative; v) The lower limit of the confidence interval is conservative.

4.2. Two trial examples

We use one trial design and two possible scenarios as examples. We use a hypothetical trial to demonstrate how to perform sample size re-estimation and final analysis. Suppose that an oncology trial is being conducted, in which two regimens are compared to a common control. It is noted that the covariance matrix is not known. Hence the trial is designed according to . Note that . Suppose that the efficacy endpoint is time to event (say, time to progression free survival, PFS), the efficacy is the hazards ratio HR, and

. Suppose that the initial sample size is 300 events for the three arms. Suppose that a sample size re-estimation is conducted at the first look. Hence,

, and the total number of events at the interim analysis is 150. Suppose that larger values of

(smaller HR) indicates better efficacy, and an effect size of

is considered to be clinically insufficient. In the example, we assume that combined number of events between an active arm and the control at the interim analysis is 100 (in an actual trial, this number should be the observed number). All the calculations were performed using the DACT software, and users can repeat the calculations using the DACT software.

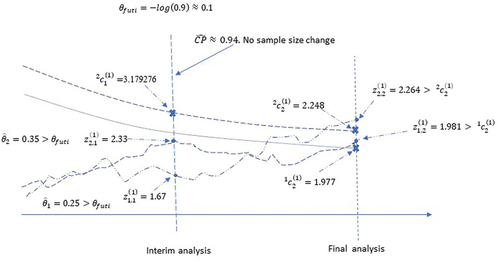

4.2.1. Scenario 1: no sample size change

Suppose that are observed at the interim analysis, with common standard error

. Accordingly,

; and

. Since

3.179276 and

3.179276, the null hypothesis is not rejected. Also, since

and

, the efficacy from both regimens are potentially clinically beneficial. The estimated conditional power

under the assumption of

is 0.943916. Since this is greater than 0.9, a sample size reduction (from 300) to 264 events between an active arm and the control (and with adjusted final critical boundary 2.303584) is an option (see in the online supporting material).

Suppose that the trial continues as planned without sample size change (hence no change in the final critical boundary). Suppose that at the final analysis, were observed with common standard error

. Then

,

. Note that

, and

. Hence, level 2 hypothesis testing rejects the null hypothesis of

:

. Since also

, the level 1 test rejects the null hypothesis

:

as well. Since

,

:

can’t be rejected with the level 2 test. However, since the null hypothesis of

has been rejected by

,

only needs to be compared with

per the closed testing procedure. Since

,

:

is rejected. The rejection of

would not be possible without the closed testing procedure. The

-value for the overall test (level 2) is

. The conservative point estimate for

is

, and the conservative two-sided 95% confidence interval is (0.0017,0.4475) (see in the online supporting material). Note that because

, it is assumed that

. The estimate and confidence interval for

needs to be calculated separately using the level 1 boundaries. Two imaginary curves of Wald statistics are used in for illustration. The two curves illustrate the trajectories of the Wald statistics

,

.

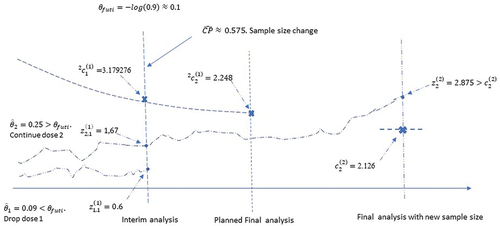

4.2.2. Scenario 2: sample size change and regimen dropping

Suppose that are observed, with common standard error

. Accordingly,

; and

. Since

3.179276 and

3.179276, the null hypothesis is not rejected. Also, since

and hence did not meet the criteria for clinically meaningful benefit, regimen 1 will be dropped from further enrollment. After dropping regimen 1, the conditional power with the planned sample size is 0.575. Sample size re-estimation suggests that the total number of events for the two remaining arms should increase to 374 (this number excludes the events in the dropped regimen before the interim analysis) to achieve a conditional power of 90%. No more interim analysis is planned except a final analysis. The new critical boundary for the final analysis is

2.126. Suppose that at the final analysis,

were observed with standard error

.

. Hence the null hypothesis of

:

is rejected. Since regimen 1 has been dropped, this suggests that

. The final p-value is

.The point estimate and the conservative two-sided 95% confidence interval are

(0.047616,0.393584). figure Two imaginary curves of Wald statistics are used in for illustration. The two curves illustrate the trajectories of the Wald statistics

,

.

5. Discussion

The properties of the score function are well known (Jennison and Turnbull Citation1997). It is approximately a Brownian motion. Because of this property, the multi-dimensional Markov process, formed by the vector of the score functions, is a precise mathematical model for describing the accumulative data for an MCSD/AMCSD procedure. The type I error is exact and sample size/power calculation is precise if the covariance matrix is known. In more general cases, the type error control, sample size/power calculation is conservative. In this sense, the procedure is optimally conservative. The method allows flexible decision rule for adaptive changes.

By utilizing Slepian’s lemma, the procedure can be applied to any distribution for which the score function is approximately a Brownian motion (e.g., Jennison and Turnbull Citation1997), such distributions include the normal distribution, binary distribution, survival analysis, Poisson distribution, and the negative binomial distribution. In a dose selection trial with a normal endpoint, the correlations between any two score functions can be derived as 0.5. For all other situations, the correlation is considered to be unknown, and Slepian’s lemma is applied, which conservatively assumes the correlation coefficients to be zero. All these distributions are supported by the DACT software. The procedure can be applied to a wide range of trials, including dose, treatment, end point selection, or a mixture of these selections. It can also be applied to population enrichment. To apply the procedure, score functions need to be created. Suppose that there are

active doses/treatments in a dose/treatment selection trial, the comparison of each active dose/treatment arm with the control corresponds to a score function. In a trial that involves one active treatment arm and a control arm with

endpoints, each endpoint corresponds to a score function. In a population enrichment trial that is randomized between an active treatment arm and a control arm, and in which a total of

(sub) populations are considered, the comparison between the active treatment and the control for each subpopulation corresponds to a score function. Such an association between a comparison and a score function can be applied to a trial that involves a mixture of dose/treatment, endpoint. population enrichment comparison in the same manner. The method is complete in the sense that it includes sample size and power calculation, sample size re-estimation and it provides inference which includes point estimate, confidence interval and p-values.

Supplemental Material

Download MS Word (50.8 KB)Acknowledgements

The authors would like to thank the reviewers for helpful and constructive comments.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Supplementary material

Supplemental data for this article can be accessed online at https://doi.org/10.1080/10543406.2023.2233590.

Additional information

Funding

References

- Armitage, P., C. K. McPherson, and B. C. Rowe. 1969. Repeated significance tests on accumulating data. Journal of the Royal Statistical Society (General) 132 (2):235–244.

- Bauer, P., F. Bretz, V. Dragalin, F. König, and G. Wassmer. Twenty-five years of confirmatory adaptive designs: Opportunities and pitfalls. Statistics in Medicine 35(3):325–347. 15 Mar 2015. doi:10.1002/sim.6472.

- Bretz, F., H. Schmidli, F. Knig, A. Racine, and W. Maurer. 2006. Confirmatory seamless phase II/III clinical trials with hypotheses selection at interim: General concepts. Biometrical Journal 48 (4):623–634. doi:10.1002/bimj.200510232.

- Chen, Y. H., D. L. DeMets, and K. K. G. Lan. 2010. Some drop-the-loser designs for monitoring multiple doses. Statistics in Medicine. 29(17):1793–1807. 10.1002/sim.3958.

- clinicaltrials.gov. A 3-arm multi-center, randomized controlled study comparing transforaminal corticosteroid, transforaminal etanercept and transforaminal saline for lumbosacral radiculopathy. https://clinicaltrials.gov/ct2/show/NCT00733096

- Dunnett, C. W. 1955. A multiple comparison procedure for comparing several treatments with a control. Journal of the American Statistical Association. 50 (272):1096–1121. doi:10.1080/01621459.1955.10501294.

- European medicines agency. 2020. Call to pool research resources into large multi-centre, multi-arm clinical trials to generate sound evidence on COVID-19 treatments. Media and Public Relations. (March 19).

- Freidlin, B., E. L. Korn, R. Gray, and A. Martin. Jul 15, 2008. Multi-arm clinical trials of new agents: Some design considerations. Clinical Cancer Research: An Official Journal of the American Association for Cancer Research 14 (14):4368–4371. doi:10.1158/1078-0432.CCR-08-0325.

- Gao, P., L. Y. Liu, and C. Mehta. 2013. Exact inference for adaptive group sequential designs. Statistics in Medicine 32 (23):3991–4005. 15 October 2013. doi:10.1002/sim.5847.

- Gao, P., L. Y. Liu, and C. Mehta. 2014. Adaptive sequential testing for multiple comparisons. Journal of Biopharmaceutical Statistics 24 (5):1035–1058. doi:10.1080/10543406.2014.931409.

- Gao, P., J. H. Ware, and C. Mehta. 2008. Sample size re-estimation for adaptive sequential designs. Journal of Biopharmaceutical Statistics 18 (6):1184–1196. 2008. doi:10.1080/10543400802369053.

- Ghosh, P., L. Y. Liu, P. Senchaudhuri, P. Gao, and C. Mehta. December 2017. Design and monitoring of multi-arm multi-stage clinical trials. Biometrics 73(4):1289–1299. doi: 10.1111/biom.12687.

- Hayter, A. J. 1986. The maximum familywise error rate of Fisher's least significant difference test. Journal of the American Statistical Association 81:1001–1004.

- Huffer, F. 1986. Slepian’s inequality via the central limit theorem. Canadian Journal of Statistics 367–370. doi:10.2307/3315195.

- Jaki, T., and L. V. Hampson. 2016. Designing multi-arm multi-stage clinical trials using a risk–benefit criterion for treatment selection. Statistics in Medicine 35 (4):522–533. doi:10.1002/sim.6760.

- Jaki, T., and J. M. S. Wason. Multi-arm multi-stage trials can improve the efficiency of finding effective treatments for stroke: A case study. BMC Cardiovascular Disorders 18(1):215. 2018 Nov 27. doi:10.1186/s12872-018-0956-4.

- Jennison, C., and B. W. Turnbull. 1997. Group sequential analysis incorporating covariance information. Journal of the American Statistical Association 92 (440):1330–1441. doi:10.1080/01621459.1997.10473654.

- Jennison, C., and B. W. Turnbull. 2000. Group sequential methods with applications to clinical trials. Boca Raton, FL: Chapman & Hall/CRC. doi:10.1201/9781584888581.

- Magirr, D., T. Jaki, and J. Whitehead. 2012. A generalized Dunnett test for multi-arm multi-stage clinical studies with treatment selection. Biometrika (2012) 99 (2):494–501. doi:10.1093/biomet/ass002.

- Marcus, R., E. Peritz, and K. R. Gabriel. 1976. On closed testing procedures with special reference to ordered analysis of variance. Biometrika 63 (3):655–660. doi:10.1093/biomet/63.3.655.

- Moore, T. J., H. Zhang, G. Anderson, and G. C. Alexander. 2018. Estimated costs of pivotal trials for novel therapeutic agents approved by the us food and drug administration, 2015-2016. JAMA Internal Medicine 178 (11):1451–1457. doi:10.1001/jamainternmed.2018.3931.

- National Institute of Health. n.d. The multi-arm optimization of stroke thrombosis. https://www.nihstrokenet.org/most/most

- O’Brien, P. C., and T. R. Fleming. Sep 1979. A multiple testing procedure for clinical trials. Biometrics 35(3):549–556. doi: 10.2307/2530245.

- Pocock, S. J. 1977. Group sequential methods in the design and analysis of clinical trials. Biometrika 64 (2):191–199. doi:10.1093/biomet/64.2.191.

- Slepian, D. 1962. The one-sided barrier problem for Gaussian Noise. Bell System Technical Journal 41 (2):463–501. doi:10.1002/j.1538-7305.1962.tb02419.x.

- Stallard, N., and T. Friede. 2008. A group-sequential design for clinical trials with treatment selection. Statistics in Medicine 27 (29):6209–6227. doi:10.1002/sim.3436.

- U.S. Department of Health and Human Services Food and Drug Administration (CDER and CBER). 2019. Enrichment strategies for clinical trials to support determination of effectiveness of human drugs and biological products guidance for industry. https://www.fda.gov/regulatory-information/search-fda-guidance-documents/enrichment-strategies-clinical-trials-support-approval-human-drugs-and-biological-products

- U.S. department of health and human services food and drug administration (CDER and CBER). 2019. Guidance for Industry Adaptive Design Clinical Trials for Drugs and Biologics.

- Wason, J., D. Magirr, M. Law, and T. Jaki. 2016. Some recommendations for multi-arm multi-stage trials. Statistical Methods in Medical Research 25 (2):716–727. doi:10.1177/0962280212465498.

- Wason, J., N. Stallard, J. Bowden, and C. Jennison. 2017. A multi-stage drop-the-losers design for multi-arm clinical trials. Statistical Methods in Medical Research 26 (1):508–524. doi:10.1177/0962280214550759.

- Whitehead, J. 1997. The design and analysis of sequential clinical trials. Rev. 2nd ed. England, West Sussex: John Wiley & Sons Ltd.