ABSTRACT

Organisations increasingly invest in resilience to better deal with future uncertainties and change. An organisation’s training service is one of the critical ingredients of this effort. However, its role in positioning the organisation for sustainment in a volatile operational environment, and in the wider resilience-building effort, is rarely considered in its own right and is often overlooked. This paper reports the development, verification and validation of a new survey instrument for assessing the resilience performance of an organisation’s training systems. The instrument is based on six resilience attributes juxtaposing organisational ability and capacity to allow management to compare its resilience expectations with the actual resilience and make trade-off decisions. The efficacy of the training service policy is also considered to enable appropriate attribution of the survey findings to the training policy issues or its poor implementation. The survey incorporated a robust mixed-method, multi-attribute and multi-perspective approach that has been applied extensively with 1,403 respondents from more than 20 military training establishments over three years. This research provides organisational leadership with a focused diagnostic instrument for assessing the performance of their training systems against resilience metrics, where such training is often a dynamic enabler for change and, thus, for overall organisational sustainability and evolutionary competitiveness.

1. Introduction

Organisations are characterised by increasing complexity and interdependencies in their constituent capabilities and processes. They often encounter new uncertainties, new emergent threats, technological advances, dramatically increased availability of information, and scarce resources. State-based and commercial conflicts are becoming more public, scrutinised in real time and less remote. The information domain and the speed of information distribution, the power of data, and the rise of artificial intelligence have become our new reality (Alberts, Garstka, and Stein 2000).

Organisations must continuously review and adapt their priorities and requirements, in the hope that their decisions will secure an adequate response to perturbations and position them for sustainability and continuous improvement. In this study, sustainability is conceptually located between ‘survival’ and ‘success’. It represents an optimal positioning of the organisation to overcome the volatility and future uncertainty of its operational environment, so that it can continue to achieve its required outputs and evolve to adapt to these challenges. Managing these challenges whilst anticipating and addressing capability gaps and needs during uncertain and often adverse operational environments is associated with resilience. Recently, Ayoko (2021) reminded us that:

… Resiliency and leadership may be able to buffer the stress and uncertainty that are associated with organisational crisis, turbulence, and disruptions more broadly… Future research should continue to tease out the relationship between leadership and resiliency and at multiple levels.

the major hardware and software products, the organisation within which it will be fielded, the personnel that will interact with it, the collective training systems required, as well as facilities, data, support, and the operating procedures and organisational policies.

Common system responses are used to form resilience question sets. Resilience questions are supported by quantitative questions about the efficacy of the extant training system design and qualitative free-form questions to further analyse the system’s performance.

The instrument was implemented across more than 20 military training establishments at various levels of aggregation in and outside Australia. Although the instrument and its applications are all in a military organisational training context, they can be contextualised and implemented in any significant organisational training system facing resilience challenges. The comprehensive methodology developed includes tailorable strategies for survey design, conduct, training, data analysis, reporting and management. The survey instrument received rigorous verification and validation of its design at every project phase. This paper also presents indicative results from the data analysis of one of the survey applications and outlines the research contributions to the resilience body of knowledge, together with its benefits, limitations and constraints for future implementations.

2. Literature review

The new resilience measurement instrument reported in this paper was developed based on an extensive literature review across system engineering, complex system management and organisational governance methodologies. Four areas of the literature are briefly covered: resilience definitions and attributes, resilience mechanisms, resilience measurement, and resilience surveys in organisations.

2.1. Resilience definitions and attributes

Resilience emerges as a desirable system characteristic demanded at all organisational levels due to future uncertainties and the never-ending need to change and adapt quickly to adversity while retaining a required performance and functionality level. The resilience concept is genuinely multidisciplinary, with examples of resilience definitions available in the disciplines of ecology, psychology, engineering, organisational or enterprise supply chain management, economics, systems engineering, computer science, material science, disaster management, organisational theory, risk management, sociology, and Defence; see, for example, Uday and Marais (2015); Erol et al. (2010); Park et al. (2013); Laprie (2008); and the INCOSE Resilient Systems Working Group (2021).

Resilience is often represented as a complex function of multiple attributes. Erol et al. (2009) state that extended enterprise resilience and flexibility is a function of agility, efficiency, and adaptability. Their 2010 paper formulates resilience as a function of vulnerability, flexibility, adaptability and agility (Erol, Henry, and Sauser 2010). Ponomarov and Holcomb (2009) select readiness and preparedness, response and adaption, and recovery or adjustment as resilience attributes.

Tierney and Bruneau (2007) propose their ‘R4’ model of resilience consisting of robustness, redundancy, rapidity and resourcefulness, while Ma, Xiao, and Yin (2018) argue that attributes such as robustness, redundancy and recovery overlap with resilience but are not resilience per se.

Jnitova et al. (2020) consider resilience in an organisational context. Their extensive analysis of existing resilience definitions across multidisciplinary academic publications enabled the definitions to be grouped into increments. These increments trace the evolution of the resilience concept, which started from the organisational response to adverse events and gradually added organisational preparation, anticipation and, ultimately, evolution, as exemplified in Table 1.

Table 1. Examples of resilience definition increments.

The organisational evolution for resilience relies upon the organisational ability and capacity to learn from change. The evolution enables the organisational transition to its modified state to adapt to the changed or a new operational environment, in order to continue to perform its required functions and deliver its required outputs. In this context, organisational resilience was presented as an emergent property of a continuously evolving system triggered by changes in the environment that are only partially predictable.

Some authors go beyond definitions and propose resilience frameworks to support their systemic approaches to achieving resilience. For example, Andersson et al. (2021) highlight a lack of consensus in resilience considerations and propose a conceptual resilience framework to ‘support systematic design, generation, and validation of resilient, software-intensive, socio-technical systems with assurances’. The main pillars of their framework are (1) the fundamental change types that affect system resilience; (2) the different facets of resilience based on a dynamic characterisation of resilience; (3) the mapping of each of these facets to design strategies; and (4) metrics that can be used to assess its achievement. In addition, they propose a set of four system descriptions that contribute to the notion of system resilience: robustness, graceful degradability, recoverability and flexibility. The INCOSE Resilient Systems Working Group also pursue a holistic approach to the system’s resilience that covers the concept’s definition, means [or mechanisms] of resilience, description of adversity, three-level taxonomy for resilience and strategies for achieving resilience.

In their extensive resilience literature review, Jnitova et al. (2020) propose a comprehensive resilience framework that is also used in the research reported in this paper and is discussed further in Section 3, Methodology.

2.2. Resilience mechanisms

Yılmaz Börekçi et al. (2021) argue that organisational resilience is ‘a linchpin against the possibility of breakdowns within and between organisations’ that may occur in organisational operations or relations with the various stakeholders within and outside the organisation under consideration. They use a multiple-case study methodology based on interviews, observations and analysis of organisational document sets in multiple organisations operating in Istanbul, Turkey, to support their categorisation of organisational resilience into operational and relational resilience. They define operational resilience as ‘the survival and sustainability of an organisation’s operations including task completion, work performance, and product delivery in case of operational disruption’. In contrast, relational resilience is defined as ‘survival and sustainability of internal and external organisational relationships against adversity’. The authors also developed a conceptual model depicting the combined effort of operational and relational resilience in achieving overall organisational resilience.

Duchek (2020) develops a resilience framework combining the concepts of ‘resilience capabilities’ and ‘stages’ to represent resilience as a process before, during and after the event triggering resilience response. Pritchard and Gunderson (2002) and Dalziell and McManus (2004) similarly distinguish ‘ecological’ and ‘engineering’ resilience, the former focusing on the magnitude of a disturbance the system can withstand before it is modified and the latter on the speed of return to equilibrium. This research follows a transitional ‘state machine’ resilience model that features prominently in the reviewed literature, such as in Bhamra et al. (2011); Buchanan et al. (2020); Burnard and Bhamra (2011); Carpenter et al. (2001); Holling et al. (1995); Limnios et al. (2014); Mafabi et al. (2012); Park et al. (2013); Sawalha (2015); Tran et al. (2017); and Jackson et al. (2015). For example, Henry and Ramirez-Marquez (2010) develop a conceptual model of ‘resilience mechanisms’ of a system that consists of transitions between five states of resilience in response to adversity. The resilience aspect of organisational performance management is also considered by complex systems management (Gorod, Hallo, and Ireland 2019) and governance (Keating and Katina 2019), including pathological diagnosis using established metafunctions.

Jnitova et al. (2021) argued that, to continue achieving their desired effect, organisations must be capable of a resilience response to adversity across a spectrum of agile environments, and that this response is performed by resilience mechanisms. The authors defined resilience mechanisms as ‘performers that enable resource(s) to continue to perform capability realisation activity after experiencing an unplanned disturbance’. Hefner (2020) grouped resilience mechanisms into the following four types: (1) avoidance: resisting disturbance conditions by retaining the equilibrium state in which the resource can fully realise the capability; (2) withstanding: resisting disturbance conditions by retaining the equilibrium state in which the resource can fully realise the capability; (3) recovery: returning to the resource’s original desired state after a partial or complete loss of capability in which a capability realisation continues; and (4) evolution: assuming a new desired state after a partial or complete loss of capability in which a capability realisation continues, because the original desired state is no longer able to support the required capability realisation levels.

2.3. Resilience measurement approaches

Organisational performance is traditionally measured under defined conditions using Key Performance Indicators (KPIs) to determine how well organisations achieve their critical requirements under the specified conditions (Jnitova et al. 2022). The KPIs are then subjected to risk assessment to determine if the performance stays within the acceptable performance margins, beyond which the risk needs to be managed. An organisation’s ability to continue to perform its required functions outside the specified conditions in the context of adversity and uncertainty falls outside the organisational performance and risk management practices described above and manifests as resilience. Organisational resilience is risk dependent, and as risks constantly change, so should our organisational resilience assumptions (Ferris 2019). The authors also state that organisations that pursue resilience need a continuity plan to be created, tested, and monitored for currency, integrity, reliability, and completeness to derive meaningful assumptions about their systems’ operational capability to continue. Resilience aspects of organisational performance management are considered by complex systems management (Gorod, Hallo, and Ireland 2019) and governance (Keating and Katina 2019).

A growing pool of academic literature is devoted to developing and applying resilience measurement methodologies. For example, Ferris (2019) states that resilience measures should be helpful to guide management choices during the system life cycle. Methodologies for the systematic design and validation of resilience for capability systems are thus essential and require contributions from different fields (Andersson et al. 2021).

An example of earlier work on measuring resilience and its associated metrics can be found in the Adaptive Cycle Model by Holling et al. (1995), continued by Carpenter et al. (2001) and Cumming et al. (2005). Andersson et al. (2021) propose measuring failure prevention, cumulative degradation amount and severity, and recoverability to support system resilience strategies. Ferris (2019) tailors variations of the system’s Generic State-Machine Model by Jackson et al. (2015) to a specific context of the system to measure resilience by enumerating events that could cause state transitions, and then selects the number of the system’s functional capability levels, followed by determining the number of system states. This research also uses the Jackson et al. (2015) Generic State-Machine Model and incorporates it into its training systems’ resilience considerations.

2.4. Resilience surveys

One of the biggest challenges in organisational resilience performance measurement lies in the ‘potential’ nature of resilience, because its actual response is manifested only during adverse events that are uncertain and only partially predictable (Hollnagel, Woods, and Leveson 2006). Brtis and Jackson (2020) identify multiple sources and types of adversity; for example, from environmental sources, due to typical failure, as well as from opponents, friendlies, and neutral parties. The authors continue that adversities may be expected or unexpected and may include ‘unknown unknowns.’ Ferris (2019) stated that the measurement method for resilience should incorporate the effect of uncertainty on the system when only the potential for resilience response could be considered.

One of the approaches to dealing with this challenge is to determine the system’s resilience performance potential indirectly, using a survey instrument. A survey instrument collects the opinions that the inhabitants of the system’s milieu have formed through direct interaction with the surveyed system and its environment; these opinions are later analysed and reported as required. Our academic literature review has not identified any examples of such surveys focused on organisational training resilience. However, we identified two recent examples of organisational resilience surveys that influenced the design of the reported survey instrument. These are briefly described below.

In the first example, Rodríguez-Sánchez et al. (2021) developed their survey to test their hypothesis that ‘corporate social responsibility for employees can enhance organisational resilience’ and ‘how resilience results in organisational learning capability and firm performance’, where resilience is defined from the perspective of the workforce, as ‘the capacity employees have, which is promoted and supported by the organisation’. Their survey design is based on 19 items, five focusing on resilience and the remaining 14 on organisational learning capability. Resilience questions are based on scale questions that focus on employee response to adversity. Questions are grouped into five workplace behaviour categories or scales: experimentation, risk-taking, interaction with the external environment, dialogue, and participation. The results were statistically analysed using various methods, including Cronbach’s alpha reliability test for internal consistency. The authors believe that they proved their initial hypothesis, stating that the analysis showed how corporate social responsibility for employees positively impacts organisational resilience, which positively affects organisational learning capability.

The second example outlines the work by Morales et al. (2019), who researched their predictor model between the organisational strategy and outcomes using survey methodology. The authors identified 33 variables ‘that may explain the development of [organisational] resilience’ in an extensive academic literature review on organisational development, behaviour and complex adaptation. The variables are organised into seven groups (attributes) on two levels: four groups on the organisational level and three on the individual level. Gradually, the authors build a complex relationship model between the seven attributes and their sub-attributes loosely based on the strategy-outcome predictor model by Zahra (1996). The model is then used to develop a questionnaire to determine the relative importance of attributes in several industrial sectors to facilitate future organisational performance prediction. A Likert-type scale with six categories is used from ‘totally disagree’ to ‘totally agree’. The results were validated using Cronbach’s alpha coefficient, and the sample size was validated using the criteria recommended by Burnard and Bhamra (2011).

Overall, the review of the literature suggested that:

resilience performance management is challenged by the uncertainty of future adverse events and their organisational impacts that are only partially predictable,

there are many types of organisations and many constituent elements of organisations on which resilience depends, but resilience attributes, mechanisms and measures are organisation’s type-agnostic and can be contextualised to any type as required,

survey work to measure organisational resilience with such scientific rigour as demonstrated in this paper is relatively rare, and

no research has been done to survey the resilience of an organisation’s training systems.

As such, applied research in organisational resilience, analogous to the applied studies in efficiency and effectiveness of previous decades, would be very beneficial, especially for the training system. The new resilience instrument reported in this paper was developed to support such applied research. It is backed by an original multi-attribute resilience framework grounded in the resilience conceptualisation reported in the relevant academic literature. The framework and its survey instrument need to evolve and remain practical for the interdependent resilience attributes that manifest across organisations, particularly when those attributes are juxtaposed and balanced across an organisation’s ability and capacity.

3. Methodology

The paper reports a survey instrument and its new resilience metrics, which are grounded in the research’s original resilience framework and were developed in the context of training organisations.

3.1. Resilience framework

Resilience is often discussed in various disciplines, but the plethora of interpretations of the concept presents a challenge for defining a meaningful set of resilience measures for a given system. There is little consensus regarding what resilience is, what it means for organisations, and how greater resilience can be achieved in the face of increasing threats. Although it was not intended to provide an in-depth review of such diverse literature, more than 90 papers were selected from the multidisciplinary resilience literature to form a holistic view of resilience to support this research (Handley and Tolk 2020). The papers for this review were selected using combinations of the following keywords: military training, workforce planning, system, resilience, measuring resilience, future proofing, resilience engineering, enterprise architecture, and others. Despite the differences in terminology and lack of agreement between the academics on resilience attributes, six attributes were identified that featured most prominently in the reviewed publications. These attributes formed the basis of the resilience framework depicted in Figure 2, which represents resilience as a complex function. The higher a system’s resilience, the lower its vulnerability to perturbations such as threats or changes in the system’s environment.

Figure 2. Capability systems’ resilience framework (Jnitova et al. 2022).

Each attribute in the framework displays two distinctive characteristics: ability and capacity. Ability denotes an actual, as opposed to a potential, skill that can be native or acquired: ‘natural aptitude or acquired proficiency’. In contrast, capacity denotes the potential to develop a skill, as well as the maximum amount or number that the system can contain or accommodate (Mish 2003). When applying the framework, both characteristics of ability and capacity are considered for all six attributes. Resilience attribute relationships, however, are not straightforward: targeting an improved attribute performance in any system could have an unpredictable effect on its overall resilience. Therefore, designing resilient capability systems must account for these interdependencies, which are unique to each capability system.

The proposed framework aligns with some founding organisational resilience research, such as Kantur and İşeri-Say (2015), who developed a scale for measuring organisational resilience. The approach is founded in the authors’ earlier work that pioneered academic research into understanding these complex relationships (Kantur and İşeri-Say 2012). Their novel integrative framework for organisational resilience, shown in Figure 3, and the research it has spawned were an inspiration for, and a clear link to, the resilience framework in Figure 2.

Figure 3. Integrative resilience framework by Kantur and İşeri-Say (2012, 765).

3.2. Survey design considerations

Many of the influences on the resilience framework in Figure 2 are rooted in cybernetics (Han, Marais, and DeLaurentis 2012) and complex systems governance (Keating and Katina 2019) where:

‘governance is concerned with the design for “regulatory capacity” to provide appropriate controls capable of maintaining system balance’ and therefore, ‘governance acts in the cybernetic sense of “steering” a system by invoking sufficient controls (regulatory capacity) to permit continued viability’

The link between a complex system or organisation’s resilience and evolvability is ‘organismic’. Therefore, the proposed resilience framework is also inherently pathological and meets the Keating and Katina (2019) criteria to qualify as a governance pathology, which is explained by the authors as follows:

Understanding of system performance involves discovery of conditions that might act to limit that performance. … These aberrant conditions have been labelled as pathologies, defined as ‘a circumstance, condition, factor, or pattern that acts to limit system performance, or lessen system viability <existence>, such that the likelihood of a system achieving performance expectations is reduced’.

3.3. Survey development

The relevant Defence and Academic Ethics Boards approved a proposal for the design and conduct of the training organisation’s resilience survey (TORS). The TORS used the Defence-approved Survey Manager Platform accessible in both Defence-protected and private environments, with only anonymous responses collected. The TORS design was extensively developed, verified, and validated in four main applications conducted in different military training system contexts, harvesting more than 1,400 survey responses for various purposes. Although all applications were completed in military training establishments, these were very diverse in their training, from basic induction through initial trade training and professional advancement courses. The reported training included technical, medical, chaplaincy, logistics (supply), leadership and many others. This diversity should mean that, despite the Defence context, the metrics and survey could be readily adapted in many civilian organisations with structured in-house training.

The first two applications, titled ‘Pilot’ and ‘Verification’, were conducted in the same central training establishment with eight diverse lodger units participating. They aimed to improve the organisational monitoring and governance of their training systems under consideration.

The ‘Pilot’ study involved 107 questions, initially conceptualised by the first author and refined with the co-authors in a series of survey design online sessions. Following the ‘Pilot’ study, the questions were re-balanced to an equal number of nine questions per attribute and reduced to 54 based on validation workshops with the key stakeholders and statistical tests, as further detailed in Section 4.1.

The integrity of the survey design was preserved during the subsequent applications through a similar series of three to five workshops, which ensured that the resilience questions were accurate and appropriate for the purpose and that the questions on the efficacy of the extant training system policy were right for each context. The validation workshops were commonly held online, lasted one hour each, and comprised three to five participants.

Once the 54-question instrument was verified in a second major study, ‘Verification’, at the same service training units as the pilot, the instrument design was validated in a ‘Broadscale’ and an ‘International’ validation application. The ‘Broadscale’ application was conducted across several diverse training establishments nominated by the second service to undergo a large-scale training reform. The purpose of the Broadscale survey was two-fold: (1) to inform the reform’s decision-making and (2) to establish a baseline for pre-reform resilience performance to compare to the post-reform performance. The ‘International’ application was conducted in a large, allied service training establishment with four lodger units participating. There was great interest in the survey’s potential to support local decision-making targeting continuous improvement initiatives.

Another aspect of the Defence training context is that these services have policies for a ‘systems approach’ to training, intended to feed back on training needs and efficacy for continuous improvement. These policies date back at least three decades and seek to guide training feedback and adaptation at the organisation’s micro-, meso-, and macro-levels. They are intended to ensure that training staff capture course feedback from students, workplace supervisors’ feedback on students’ competencies post-training, and how well the training is adapted to new technologies and procedures or generational and societal change in the student population. A ‘systems approach’ policy is already an investment in training resilience, and it is not unique to the military.

Our resilience survey instrument was designed, first, to assess the chosen resilience attributes without reference to extant training system compliance or effectiveness. Second, it included specific questions and ratings for the efficacy of that policy. Most civilian enterprises will also have extant training feedback and feed-forward policies that will need to be assessed alongside new survey work. This aspect is the nuance of answering ‘whether our training is appropriately resilient and to what extent our extant management change policies are effective or need to adapt’.

3.4. Target population, survey content and structure

The TORS is multi-perspective: it comprises six target populations, five reporting their ‘actual’ training system experience. The sixth consists of key system stakeholders invited to set up a ‘desired performance’ benchmark for the resilience performance of their training system under survey. Table 2 lists the survey target populations and their descriptions.

Table 2. Survey target populations.

Each target population is represented by its specific questionnaire template to capture the language differences between different audiences. For example, the wording ‘in your experience’ used in the quantitative questions for trainees, instructors and training specialists is replaced with ‘to your knowledge’ in the workplace supervisor and capability manager templates to accurately reflect their position outside the training system.

After the primary introductory and demographic sections, the main quantitative questions have a ‘multi-attribute design’ based on our evolved resilience framework (Figure 2). The validated instrument represents resilience as a complex function of six resilience attributes. Each attribute is defined by nine multiple-choice questions to ascertain desired and actual performance of the training system under consideration, with 54 quantitative resilience questions in Annex A. The quantitative section of the survey in Annex B also contains seven training service policy questions to enable appropriate attribution of the survey findings either to the training policy issues or its poor implementation.
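The comparison of desired and actual performance can be illustrated with a minimal Python sketch. It assumes that both the stakeholder benchmark and the reported experience have already been reduced to one score per attribute on a 0 to 1 scale. The attribute labels, the example values and the function name `resilience_gaps` are hypothetical and are not taken from the instrument.

```python
def resilience_gaps(desired, actual):
    """Return desired minus actual score for each attribute.

    A positive gap suggests a shortfall against the benchmark; a negative
    gap suggests that actual performance exceeds the stated expectation.
    """
    if set(desired) != set(actual):
        raise ValueError("desired and actual must cover the same attributes")
    # Round to two decimals to suppress floating-point noise in reporting.
    return {attr: round(desired[attr] - actual[attr], 2) for attr in desired}


# Hypothetical scores for two placeholder attributes (the instrument has six).
desired = {"attribute_1": 0.8, "attribute_2": 0.6}
actual = {"attribute_1": 0.5, "attribute_2": 0.7}
print(resilience_gaps(desired, actual))
# {'attribute_1': 0.3, 'attribute_2': -0.1}
```

A gap table of this kind is one possible way to support the trade-off decisions the instrument is intended to inform; the actual reporting format used in the survey applications may differ.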

The organisational training resilience performance score is calculated and reported separately for each resilience attribute. There is no separate question for the overall resilience performance; it is calculated as a function of the six attributes, where each contribution is considered equal. The extant training policy performance is calculated slightly differently. Each training service process outlined in the organisational training policy, as well as the overall performance of this policy, is considered independently and is represented by a separate question. This enables the influences of each training service process and of the extant training policy on the training service performance and, ultimately, on the organisational resilience to be separated.
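The aggregation described above can be sketched as follows. The sketch assumes that item scores are already expressed on a 0 to 1 scale and that the equal-contribution function is an arithmetic mean; the exact function is not stated here, so the mean, the attribute labels and the function names are illustrative assumptions only.

```python
from statistics import mean


def attribute_scores(item_scores):
    """Average the item scores (each in [0, 1]) for every attribute."""
    return {attr: mean(scores) for attr, scores in item_scores.items()}


def overall_resilience(scores_by_attribute):
    """Equal-weight mean of the attribute scores (an assumed aggregation)."""
    return mean(list(scores_by_attribute.values()))


# Hypothetical data: two placeholder attributes with two items each, for
# brevity. The instrument itself has six attributes of nine questions each.
example = {
    "attribute_1": [0.5, 1.0],
    "attribute_2": [0.0, 0.5],
}
per_attribute = attribute_scores(example)
print(per_attribute)                      # {'attribute_1': 0.75, 'attribute_2': 0.25}
print(overall_resilience(per_attribute))  # 0.5
```

Keeping the per-attribute scores separate from the overall figure mirrors the reporting approach in the text, where the attributes are reported individually and the overall score is derived from them.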

The identical ‘Likert Scale’ is used across all quantitative questions: Never 0.00–0.19 [red], Rarely 0.20–0.39 [orange], Sometimes 0.40–0.59 [orange], Often 0.60–0.79 [yellow], Always 0.80–1.00 [green]. The questions have a balanced mix of positive and negative logic, to which the opposite ‘Likert Scale’ scoring directions apply.
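A minimal sketch of how such scoring might be implemented is given below. The score bands and colours are those listed above; the numeric value assigned to each response option (0.0, 0.25, 0.5, 0.75, 1.0) and the names `score_response` and `band_for` are assumptions made for illustration and are not taken from the TORS implementation.

```python
# Assumed numeric value of each response option (not stated in the paper).
OPTION_VALUES = {"Never": 0.0, "Rarely": 0.25, "Sometimes": 0.5,
                 "Often": 0.75, "Always": 1.0}

# Score bands from the text, as (lower bound, label, colour), highest first.
BANDS = [(0.80, "Always", "green"),
         (0.60, "Often", "yellow"),
         (0.40, "Sometimes", "orange"),
         (0.20, "Rarely", "orange"),
         (0.00, "Never", "red")]


def score_response(option, negative_logic=False):
    """Convert a response option to a 0-1 score.

    Negative-logic questions are scored in the opposite direction.
    """
    value = OPTION_VALUES[option]
    return 1.0 - value if negative_logic else value


def band_for(score):
    """Return the (label, colour) band that a 0-1 score falls into."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must lie between 0 and 1")
    for lower, label, colour in BANDS:
        if score >= lower:
            return label, colour


# Invented responses to three questions; the second uses negative logic.
responses = [("Often", False), ("Rarely", True), ("Always", False)]
scores = [score_response(opt, neg) for opt, neg in responses]
average = sum(scores) / len(scores)
print(scores, band_for(average))
```

Reversing the score for negative-logic questions before averaging keeps every question pointing in the same direction, so a higher score always indicates better resilience performance.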

The reliability and validity of this resilience performance measurement were supported by a sufficient Cronbach’s alpha coefficient and the evidence of content and face validities. There are additional quantitative questions about the organisation’s training policy efficacy and whether there are any issues in its implementation. An example of the extant training policy question set is in Annex B.

Qualitative freeform questions sought respondents’ opinions and feedback on their training systems. These questions are also given in Annex B and were a vital source of verification of quantitative measures and for diagnosis informing managers of why quantitative differences may exist.

3.5. Survey conduct

The survey conduct in training organisations is reported as it was performed in the four survey applications completed during the research project. It was conducted in the following key steps: (1) Planning; (2) Preparation of the survey conduct; (3) Distribution and Data Collection; (4) Data Analysis and Reporting; (5) Closure of the survey’s application. The steps, their implementation, and opportunities for improvement are discussed below.

Step 1 – Planning. It commenced upon receipt of the request to conduct the survey from key stakeholders of a training organisation. The requirements and procedures for the remaining four conduct steps were determined during the planning step in consultation with the key stakeholders.

Step 2 – Preparation of the survey conduct. The survey conduct’s stakeholders and their roles and responsibilities defined in the planning step were allocated during Step 2 and are as follows:

Champions: the success of a survey application relies on securing support and commitment from the key organisational stakeholders who can encourage their communities to participate, advocate the importance of the survey outcomes for organisational success, and make the decisions on the survey conduct and on commitments to implement its results. They commonly trigger the survey commencement via an executive directive or wide-distribution email explaining the survey purpose, importance, time to complete, and duration of the survey conduct period.

Design Validation Workshop Participants: the survey design was still evolving during the project and underwent verification and validation reviews via validation workshops with the nominated organisational stakeholders. Although the survey design has been verified and validated, similar workshops are intended to continue for all organisations participating in the survey for the first time, to contextualise the survey instrument to better suit these organisations without changing its resilience measures or the structure and intent of its questions.

Benchmarkers: a critical group of survey participants that sets the desired resilience performance levels for their organisation. In the project’s four applications, they were identified before the commencement of the survey to secure their agreement to participate and their understanding of the survey’s purpose. The number of benchmarkers ranged from one to 20. They were selected by the organisational decision makers and comprised internal stakeholders, external stakeholders (commonly represented by high-level organisational management), or a combination of both.

Survey Distribution and Collection Points of Contact (POCs): all participating units within the surveyed organisation allocated POCs responsible for identifying the survey respondent contact mechanisms (emails in the case of this project), distributing the survey in a timely manner, monitoring the survey completion rate during its allocated conduct periods, and issuing reminder emails to the groups with a low response rate to encourage participation in the survey.

Survey participants: the survey participants were identified in five target groups to determine whether there were different perspectives of the actual organisational resilience performance within the same surveyed system, or whether the experience of all respondents was homogeneous.

Research team: the research project team coordinated the activities performed by the other groups, implemented validation workshops’ feedback into the survey design, and informed the POCs of the completion rates.

Based on the stakeholder feedback and research team experience, the survey conduct would benefit from a more formal approach to training the different survey user categories in preparation for the survey conduct, to improve their understanding of the survey tool’s design and purpose. It is envisioned that training will be developed for different stakeholders and different levels of engagement. Examples of such training approaches are as follows:

Familiarisation training: a 5–10-minute PowerPoint presentation or a video based on the presentation outlining the resilience concepts related to the survey; resilience metrics used in the survey; survey aim, design, methodology limitations, and potential benefits to the participating organisations

Benchmarker training: like the Familiarisation package, with the addition of the Benchmarker roles and responsibilities

Distribution and data collection online course: an online self-paced learning package [of approximately two hours duration] for the training organisation’s managers and nominated survey conduct POCs to learn about their roles and responsibilities for the survey distribution and responses’ collection

Data analysis and results reporting workshop: a one- to two-day interactive workshop, delivered face-to-face or online, that introduces its participants to the quantitative and qualitative data analysis techniques used in the survey and to the survey results reporting templates. The workshop contains several lectures and interactive group activities using simulated and/or actual data samples.

Step 3 – Survey Distribution and Data Collection. It was conducted within a specified survey application period across the participating organisation. The survey conduct POCs in collaboration with the research team coordinated the survey distribution, response rate monitoring, issue of reminder emails, and the survey closure.

Step 4 – Data Analysis and Reporting. This step was performed by the research team. Results of the quantitative analysis were supported by the analysis of the qualitative responses with a final mixed method report containing the survey’s findings and recommendations presented to the key organisational stakeholders.

The extracted quantitative and qualitative responses were analysed and reported separately, followed by a mixed methods analysis and a complete report. The performance score is calculated and reported separately for each resilience attribute. There is no separate question for the overall resilience performance. Instead, it is calculated as a function of six attributes, each contribution being considered equal. The responses to qualitative freeform questions were analysed using the following methods as outlined by Clarke and Braun (Citation2013):

identification and analysis of the themes and sub-themes

response clustering according to their depth, breadth (how many themes are covered), and strength of themes’ affiliation

response allocation to the resilience attributes

response sentiment analysis

Some reporting automation has been successful but is still in development and will be separately reported in our future publications.

Step 5 – Closure of survey application. Distribution of the final survey report was followed by debriefing sessions between the surveyed organisation’s stakeholders and the research team. These sessions served to collect feedback on the survey content and conduct from the participating organisations; to investigate the organisational requirement for a deeper data analysis using the nine additional demographic filters available in the survey design, or by individual attributes or questions; and to discuss the organisation’s intent for future survey applications and other collaboration opportunities targeting improvement of organisational resilience.

4. Research results

This section discusses the indicative quantitative, qualitative and mixed-methodology results achieved during the four TORS applications and reports the approach to the survey’s design verification and validation.

4.1. Survey responses, reliability and validity

The ‘Pilot’ and ‘Verification’ applications were conducted at eight units in September-October 2020 and April-May 2021, respectively. The ‘Broadscale’ application was conducted across five large training establishments in May-July 2021. The ‘International’ TORS saw four lodger units from an extensive training establishment participating in April-June 2022.

For the ‘Pilot’ and ‘Verification’ applications, the project aimed for and achieved an ambitious target of around 30 per cent of the survey population responding from the surveyed establishment. For the two validation applications, the target was set at a much more modest 10 per cent, subject to a minimum of five responses from each target population. The lower target sample in the two validation applications was justified by the use of the already verified survey design: the integrity of the design was preserved in the subsequent applications, which were therefore expected to yield reliable results. Furthermore, the return rate achieved was sufficient to enable flexible data analysis using demographic filters and various statistical tests.

The data collection achieved during the four applications is summarised in Table 3.

Table 3. Summary of TORS responses.

The TORS content validity was achieved by grounding its design in the resilience framework in , underpinned by an extensive literature review. Face validity was addressed through validation workshops with stakeholders, which checked that the instrument appeared valid to them. The issues, consequences, and recommendations of the post-pilot design validation workshops are described in Table 4 and were implemented in the subsequent survey versions.

Table 4. Issues identified in the ‘Pilot’ TORS design.

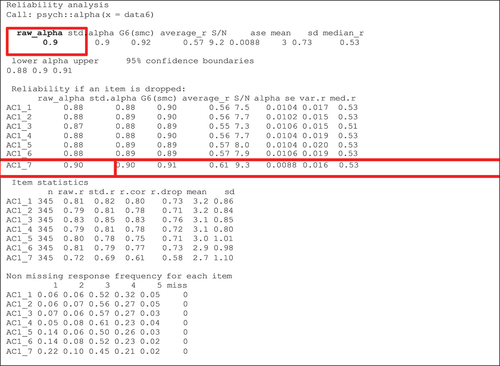

The survey design review was also supported by Cronbach’s alpha reliability test for internal consistency of its quantitative resilience responses by attribute. The alpha score for the ‘Pilot’ application was 0.963. The maximum alpha value is recommended to be 0.90 (Tavakol and Dennick Citation2011). A very high score can be attributed to the redundancy or duplication in the initial 107 quantitative resilience questions, revealed during the deeper alpha reliability test conducted within the attribute question sets (see the example in ). At the same time, the number of questions per set was also inconsistent, ranging from three to seven in different attributes.

The ‘Likert Scale’-based quantitative response options detailed in Section 3.4 were standardised for all 54 resilience questions. The response trends in the ‘Pilot’ survey quantitative resilience questions were further analysed to determine to what extent the different target populations reported their experience of their respective training systems similarly or differently, where the dominant response option selection was expressed as a percentage of the total number of responses. An example of homogeneity analysis is depicted in Table 5.

Table 5. Example of homogeneity analysis for the ‘Pilot’ application.

Different homogeneity trends were identified. Where responses showed heterogeneity, as depicted in , this was further analysed to determine whether heterogeneity can be usefully discriminated. If the answer was yes, then the question was preserved. Otherwise, the wording was refined to address the risk of uncertainty and verified and validated in the later survey runs.
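The dominant-response measure used in the homogeneity analysis can be sketched as follows. This is an illustrative reading of the description above, not the project’s analysis code: the function name `dominant_option_share` and the sample responses are invented, and the paper does not state a threshold for deciding when responses count as homogeneous.

```python
from collections import Counter


def dominant_option_share(responses):
    """Return the most frequently selected option and its share (per cent)
    of the total number of responses to one question."""
    if not responses:
        raise ValueError("no responses supplied")
    counts = Counter(responses)
    option, count = counts.most_common(1)[0]
    return option, 100.0 * count / len(responses)


# Invented responses to a single question, grouped by target population.
by_population = {
    "trainees": ["Often", "Often", "Sometimes", "Often"],
    "instructors": ["Rarely", "Rarely", "Often", "Sometimes", "Always"],
}

for population, answers in by_population.items():
    option, share = dominant_option_share(answers)
    print(f"{population}: dominant option '{option}' at {share:.0f}% of responses")
```

A high share for the same option across populations suggests a homogeneous experience, whereas low shares or differing dominant options point to the heterogeneity that was examined further.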

The Cronbach’s alpha reliability scores for the ‘Verification’, ‘Broadscale’ and ‘International’ applications are 0.808, 0.822, and 0.827, respectively. This outcome indicates that the project has achieved a reliable design of our TORS instrument because all scores are above 0.7 (Tavakol and Dennick Citation2011).
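For readers unfamiliar with the statistic, the standard Cronbach’s alpha formula, $\alpha = \frac{k}{k-1}\left(1 - \frac{\sum_i s_i^2}{s_T^2}\right)$ for $k$ items with item variances $s_i^2$ and total-score variance $s_T^2$, can be sketched as below. The data are invented and the helper names are hypothetical; the interpretation thresholds (above 0.7 as reliable, above 0.90 as a sign of possible redundancy) are those quoted in this section.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a list of respondents, each a list of k item scores."""
    n = len(item_scores)
    k = len(item_scores[0]) if n else 0
    if n < 2 or k < 2:
        raise ValueError("need at least two respondents and two items")
    if any(len(row) != k for row in item_scores):
        raise ValueError("every respondent must answer the same k items")
    # Sample variance of each item (column) and of the per-respondent totals.
    item_variance_sum = sum(variance(column) for column in zip(*item_scores))
    total_variance = variance([sum(row) for row in item_scores])
    return (k / (k - 1)) * (1 - item_variance_sum / total_variance)


def interpret_alpha(alpha):
    """Label alpha using the thresholds quoted in the text."""
    if alpha > 0.90:
        return "possible redundancy"
    if alpha > 0.70:
        return "acceptable"
    return "below 0.70"


# Invented scores: three respondents answering two items.
sample = [[1, 2], [2, 1], [3, 3]]
print(round(cronbach_alpha(sample), 3), interpret_alpha(0.808))
```

Applied per attribute question set, the same calculation would flag sets whose questions overlap so heavily that alpha rises above the recommended maximum.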

4.2. Organisational results

This section exemplifies indicative results from the TORS applications and briefly discusses the identified general resilience performance and associated extant training policy trends. The overview content and visualisations comprise three distinctive areas: (1) respondents’ demographics overview, (2) resilience performance overview, and (3) extant training policy efficacy overview.

4.2.1. Demographic results

The high-level overview report generated on completion of each survey application focuses on two demographic variables: the number of experience responses by (1) target populations and (2) participating organisations and their lodger units, visualised in side-by-side tables and pie-charts. The actual target population result was reported on the front page of the Level 1 Overview report. This reporting gave the key stakeholders confidence that all five training population perspectives and all participating units were appropriately represented.

The benchmark response numbers ranged from one to 20. In cases where the desired performance responses produced conflicting benchmarks, moderation of the results was carried out by the key decision-makers or, in one case, by moderation sessions between the benchmarkers.

The demographic section also contains nine additional variables for the reported respondents, providing an excellent opportunity for future analysis.

4.2.2. Indicative quantitative results of resilience performance

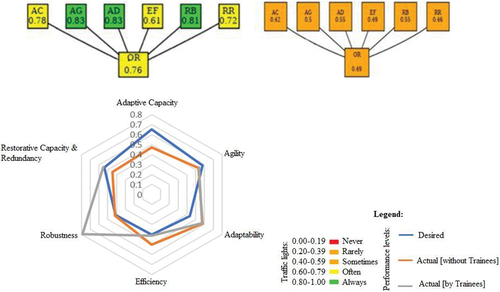

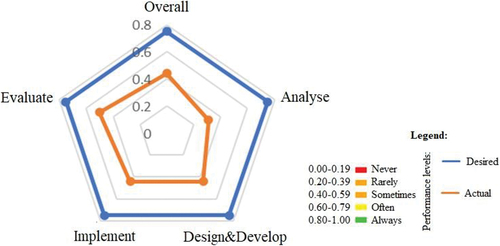

The high-level overview report generated after each survey application to key stakeholders offers a selection of visualisations of the resilience performance results, , including overall actual resilience performance, the overall actual performance of each resilience attribute and overall actual resilience performance from the target population perspectives compared to the desired performance set by the benchmarker population. Note that these resilience attribute views highlight that trainees responded to only 34 of 54 resilience questions and were exempt from the questions on Adaptive Capacity. The trainee perspective, however, is critical wherever it can be meaningfully ascertained, for example, to inform the development of learner-focused strategies tailored to the actual learners or to obtain the trainee’s first-hand experience of training disruptions and best practices.

1 The resilience attribute titles were abbreviated as follows: Adaptive capacity – ‘AC’, Agility – ‘AG’, Adaptability – ‘AD’, Efficiency – ‘EF’, Robustness – ‘RB’, Restorative capacity and Redundancy – ‘RR’ and overall resilience – ‘OR’.

The radar plot in (lower left) has the desired resilience performance by attribute from the benchmarker perspective represented as a blue line; the actual performance experienced by the four target populations, namely instructors, training specialists, workplace supervisors and capability managers, is represented by a red line. A grey line represents the actual performance experienced by the trainees. This radar plot shows a deficit in adaptive capacity and an excess in efficiency, indicating how training governors should adjust their decision-making emphasis. For trainees, the main point to note in this example is that they do not see many challenges in robustness compared to other populations.
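The comparison of desired and actual performance that the radar plot visualises can be expressed as a simple per-attribute gap. The sketch below is illustrative only: the scores are invented, the function names are hypothetical, and the 0.05 tolerance used to call an attribute ‘on target’ is an assumption that does not come from the paper.

```python
def resilience_gaps(desired, actual):
    """Actual minus desired score per attribute.

    Negative values indicate a deficit, positive values an excess.
    """
    return {attr: round(actual[attr] - desired[attr], 2) for attr in desired}


def classify_gap(gap, tolerance=0.05):
    """Label a gap; the tolerance is a hypothetical illustration value."""
    if abs(gap) <= tolerance:
        return "on target"
    return "deficit" if gap < 0 else "excess"


# Invented benchmark (desired) and experienced (actual) scores by attribute.
desired = {"AC": 0.85, "AG": 0.80, "AD": 0.75, "EF": 0.60, "RB": 0.70, "RR": 0.80}
actual = {"AC": 0.55, "AG": 0.70, "AD": 0.72, "EF": 0.78, "RB": 0.72, "RR": 0.65}

gaps = resilience_gaps(desired, actual)
for attr, gap in gaps.items():
    print(f"{attr}: {gap:+.2f} ({classify_gap(gap)})")
```

Reporting both deficits and excesses supports the trade-off reading of the plot: an attribute performing above its benchmark may free effort for one that falls short.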

Individual question analysis rather than the aggregate of the resilience attribute could also be revealing, although it was not included in the automated high-level overview report. At this stage, it is performed at the individual stakeholders’ request or to demonstrate various data analysis techniques available for the data sets.

4.2.3. Indicative qualitative results of resilience performance

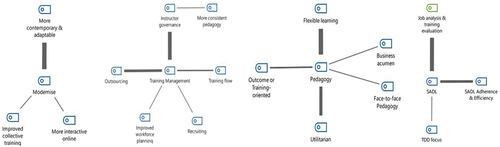

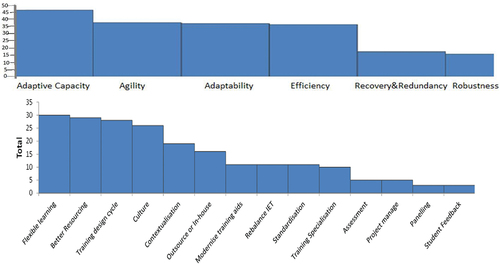

The high-level overview report generated for key stakeholders after each survey application offers a selection of visualisations of respondent opinions and feedback from the qualitative freeform questions, delivering valuable insight into the rationales for the quantitative responses. This reporting also facilitated in-depth deductive and inductive trend analysis, exemplified in Figure 7.

Figure 7. Examples of frequency from deductive (upper) and inductive (lower) thematic analysis of freeform responses.

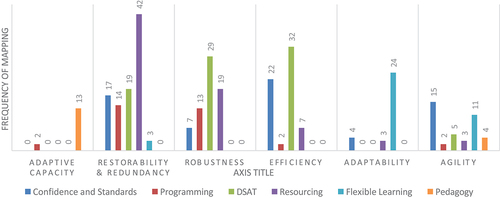

The example Pareto plot in confirms that adaptive capacity is this application’s most discussed resilience attribute. For the inductive themes in , the respondents were most concerned about ‘Flexible Learning’ and ‘(Better) Resourcing’, with both themes easily relating to the deductive findings. The themes were coded using MAXQDA®, with an example of the inductive codes and sub-codes shown in .
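The frequency ordering behind a Pareto plot of coded themes can be sketched as follows. The thematic coding itself was performed in MAXQDA and is not reproduced here; this snippet only tallies themes that have already been coded. The function name `pareto_table` and the theme counts are invented for illustration.

```python
from collections import Counter


def pareto_table(coded_themes):
    """Return (theme, count, cumulative per cent) rows, most frequent first."""
    counts = Counter(coded_themes)
    total = sum(counts.values())
    rows, cumulative = [], 0
    for theme, count in counts.most_common():
        cumulative += count
        rows.append((theme, count, round(100.0 * cumulative / total, 1)))
    return rows


# Invented coded comments: one theme label per coded freeform response.
codes = (["Flexible Learning"] * 5) + (["Resourcing"] * 3) + (["Governance"] * 2)

for theme, count, cumulative_pct in pareto_table(codes):
    print(f"{theme:<18} {count:>3} {cumulative_pct:>6.1f}%")
```

Sorting by frequency and tracking the cumulative share makes it easy to see which few themes account for most of the comments.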

The responses analysed in commented on the need to ‘Modernise’ training approaches, with subthemes on ‘Better Collective Team Training’ and ‘More Interactive Online’ Aspects. ‘Training Management’ theme comments were dominated by ‘Governance of Instructors’ and ‘Outsourcing’ concerns, as shown by the thickness of the connecting lines, which denote the frequency of comments. Comments on the theme of ‘Pedagogy’ were dominated by desires for more ‘Flexible Learning’ and more practical and utilitarian aspects, hence the earlier Pareto charts (). At times, the freeform responses code maps were printed in full with the comments arrayed on nodes. The full code maps enhanced with their respective comment examples helped management to see the weight of comments in the words of the target populations associated with their training systems under survey. It was a powerful way in all survey applications to give meaning to the results and drive decision-making and continuous improvement.

4.2.4. Indicative results of efficacy of extant training policy

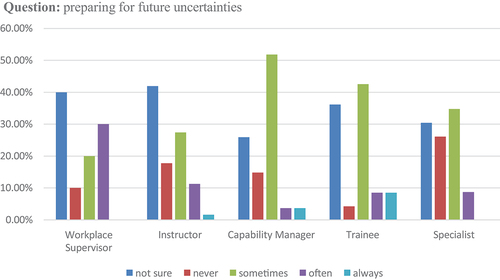

The extant training policy efficacy section in the high-level overview report generated after each survey application contains visualisations of the responses, as shown in . It includes desired and actual performance traffic lights and radar plot diagrams.

The role and importance of such policy performance are evident from the strongly desired expectation (). It is also apparent that the training policy efficacy experienced by the four ‘actual’ target populations is below the desired benchmark, with the actual training analysis being the weakest of the processes. Most comments in the free responses to the training policy qualitative question were on the need to analyse jobs better to align training and workplace requirements and to evaluate the effectiveness of training in the workplace (i.e. close the outer feedback loop). Such trend analysis informs the system stakeholders of the quality of their training policy compliance, areas for improvement and potential trade-off opportunities.

4.2.5. Mixed methods – comparative analysis

Additional depth in reporting the TORS results is achieved through a comparative analysis of the identified quantitative and qualitative trends. An example of such a mapping for one of the validation applications is provided in , showing that the most significant alignment of the inductive theme of Resourcing is to the resilience attributes of Restorability & Redundancy and Robustness.

5. Discussion

The research was triggered by training practitioners’ need for an approach to managing and improving training service resilience in their organisations, with the ultimate purpose of positioning the training service to support organisational sustainment and evolutionary competitiveness. This paper places the concept of resilience at the centre of organisational performance management and continuous improvement strategies for use in training organisations. It reports an extensive body of work to design, implement, verify, and validate the multi-attribute and multi-perspective resilience survey instrument and its associated resilience performance metrics.

The survey is based on the research’s original resilience framework, which represents resilience as a complex function of six attributes. It is designed as a cybernetic instrument as defined by Han et al. (Citation2012), where the reported experience of the organisational resilience performance is calculated for each attribute and compared to a desired organisational performance as reported by the key system stakeholders. This comparative analysis identifies organisational resilience performance gaps and potential trade-off opportunities to achieve the desired performance level and, in the long run, facilitates continuous improvement of the organisational design targeting resilience. The instrument is also inherently pathological, as it targets the identification of a training organisation’s resilience performance gaps. The resilience measures are also supported by quantitative questions on the training system policies, concerned with the extant policy’s efficacy in maintaining an appropriate organisational resilience posture and with whether any issues in its performance [if identified] can be attributed to the policy’s content or to its poor implementation.

The continuous improvement of organisational resilience included a significant expansion of the verification and validation approaches, far beyond those commonly used, across four survey-based workplace projects engaging more than 20 Defence training establishments over a three-year period. These four actual workplace applications of the resilience survey facilitated a gradual verification and validation of the survey design. The research approach to verification and validation of the survey design was further diversified through the use of common statistical tests, design validation and contextualisation workshops with the key stakeholders and survey technical support personnel, and comparative analysis of results within the same application or between different applications. For example, results were compared between the qualitative and quantitative analyses; between the individual attributes or their questions; and across the survey’s nine demographic filters, either individually or in combination. In addition, these research activities facilitated continuous improvement of the survey design and performance management strategies, as well as the reliability of the research results.

The survey’s academic pursuits were extended with organisational purposes, as requested by the organisational stakeholders. These ranged from establishing a system resilience performance baseline to enable its measurement and targeting system design for resilience, through the improvement of the organisational resilience monitoring and governance, to informing the training system reforms. The feedback received from the organisational practitioners indicates that, in most cases, the benefits achieved from implementing the survey recommendations went far beyond their modest goals at the start of the application. These include reported improvements in the local governance and training management, as well as the survey’s contributions to various strategic reform initiatives in the training organisational context.

The four discussion aspects are further detailed below, namely: 1) research significance, 2) how achieved research outcomes benefited the participating organisations, 3) research limitations and 4) future work.

5.1. Research significance

Despite prolific resilience research and great interest among organisational stakeholders in achieving organisational resilience, our review of the relevant academic literature found that the reported organisational approaches to resilience performance management are predominantly reactive and consider resilience as an enabler of other organisational objectives, not as an objective in its own right.

One of the biggest challenges in the organisational resilience performance measurement is ‘to incorporate the effect of uncertainty on the system when only the potential for resilience response could be considered’ (Ferris Citation2019). The resilience survey instruments propose to address this challenge by targeting the survey respondents’ perceptions as an indirect measure of organisational resilience. So long as the fact that perceptions are investigated is clear to the researcher, it is a powerful way of eliciting views about effects that enable the researcher to deduce if the system is resilient through achieving stakeholder purposes under adversity.

Two resilience performance measurement surveys described earlier (Morales et al. Citation2019; Rodríguez-Sánchez et al. Citation2021) are the most advanced examples of survey instruments recently developed for this purpose. Nevertheless, our research and survey have a specific organisational focus on the training in organisations compared to the broader workforce management in the first and the strategic business focus in the second. As such, our research is original and provides organisations with a focused diagnostic instrument in a training system, which is a crucial enabler for overall organisational resilience, ultimately securing its sustainment and co-evolution with its operational environment.

The methodological approach to the survey design and validation of the two recent literature examples (Morales et al. Citation2019; Rodríguez-Sánchez et al. Citation2021) inspired our methodology but with some crucial distinctions. Both examples have in common with our research a solid theoretical background, verified design, detailed description of their implementation and data analysis approaches, and a multi-perspective approach for their resilience questions and measures. Both studies, however, approach resilience slightly differently from our research, arguably, utilising it as a powerful enabler of other organisational purposes. In the first example, resilience is utilised to establish the link between the corporate social responsibility for employees and the organisational learning capability and firm performance, and in the second example – to enable prediction of the organisational outcomes based on the selection of the organisational strategy.

In contrast, our approach focuses on an organisational aspect, training, and resilience alone. Organisational resilience performance management is a pinnacle of this research that amalgamates the organisation and resilience concepts and targets addressing the needs of organisational stakeholders in training establishments.

Using a cybernetics approach brings resilience from the shadows of a potential response to future adversity to a measurable organisational characteristic. Arguably, we might strengthen our survey’s linkage to higher objectives, but our focus is also unique compared with the comparison research. Additionally, the two survey examples used in this paper offer limited or no reporting of the benefit of the research to the participating organisations and share no plans for future implementation. This trend is disappointingly common in cross-domain academic literature. Our research survey instrument is designed to address this research gap with an organisational practitioner audience in mind. Furthermore, it has already achieved a broad spectrum of applications across training establishments, reporting actual benefits to participating organisations. Finally, having both quantitative and qualitative questions in our survey design enables mixed-method data analysis and supports cross-validation of the outcomes from different survey sections.

5.2. Benefits to training organisations

Participating organisations in the four main applications communicated different reasons for using TORS. Some organisations sought to establish a performance baseline to enable resilience measurement and incrementally change the training system for a better balance of resilience. Other organisations openly sought evidence and guidance from the survey results in focusing on anticipated reforms for their training organisation. The reports generated on completion of the four applications containing the survey response analysis findings and recommendations were delivered to the key stakeholders and supported by the debriefing and discussion sessions. The researchers received feedback from the participating training establishments on the benefits their organisations achieved from implementing the TORS recommendations that, in most cases, went far beyond their modest goals at the start of the applications. They included reported improvements in the local governance and training management, as well as the TORS effects and contributions to various strategic reform initiatives in the organisational training context.

Stakeholders highlighted that the Defence Training System needs to respond to changing organisational priorities and skills demands and to support workforce development. Training system structures, sub-systems and processes must evolve to drive the agile, collaborative, flexible and integrated training system needed to grow the emerging workforce. Therefore, the stakeholder feedback on the results was focused on two aspects: (1) desired levels and (2) actual performance of the training system under review. The organisation came into the survey with a significant body of work conducted in the training quality, quantity and timeliness domains, supported by various metrics to gauge optimal production of workforce capacity and capability via training. One of the three leading implementers of the TORS shared that, to them, the significant gap between the desired and actual performance in most of the six resilience attributes was sobering. The survey also highlighted areas within the training system that were ‘over-performing’ where this was not highly desirable or needed. The training system’s adaptive capacity, agility, and restorative capacity and redundancy were most desired and currently underperforming. Most stakeholders deemed the robustness and efficiency of the training system as ‘over-performing’ and not as critical as other resilience attributes. Managers reported that the attribute-by-attribute reporting revealed systemic improvements that were usually quite nuanced and not previously appreciated. Such feedback supports the pathological intent of this survey instrument.

The applied phase of the survey concluded that, overall, measuring the resilience of any capability system, such as a training system, through a resilience framework provides key insights from key stakeholders into actual and desired resilience attributes and hence the optimal balance required to achieve workforce outcomes. The key stakeholders also stated that the TORS report recommendations are in the process of being implemented and expressed their interest in long-term installation of the TORS-enabled resilience measurement in their organisation to enable longitudinal performance studies, inform resilience design and enable system quality assurance and continuous improvement. A similar willingness to continue the TORS application was also expressed by other participating organisations.

In the broader context, the management feedback illustrates that this survey approach, at least for the training aspects of an organisation, focuses leadership on critical organisational resilience. As such, the developed survey approach is an example of the earlier cited bridging research called for by Ayoko (Citation2021). Further, it helps leadership focus on the critical success determinants of organisational change management, such as the following items from research by Errida and Lotfi (Citation2021): 1) clear and shared vision, 2) change readiness and capacity for change, 8) stakeholder engagement and 12) monitoring and measurement. Such in-house training in an organisation is perhaps what Paavola and Cuthbertson (Citation2022) refer to as a second-order dynamic capability for organisational transformation, where they caution that many such capabilities are, at best, configured to support incremental change. Indeed, our applications all found that the policies on a ‘systems approach’ to training were highly ‘throttled’ or ‘resistive’ to change, with bureaucracy and limited adaptive capacity protecting training to achieve efficiency, generally at the expense of agility and adaptability. Research by Mirza et al. (Citation2022) on applying open innovation models to such service functions in organisations is promising as they examine how to moderate and mediate the ‘role of absorptive capacity between open innovation and organisational learning ability.’ The adaptive capacity of an organisation’s training system and its training policies are the first extant points of such moderating and mediating.

5.3. Research limitations

Although the TORS has been applied successfully four times in various organisations, this complex methodology continues to evolve. Three TORS methodology issues, and considerations for their improvement, are as follows:

Capturing the complexity of attribute relationships. The TORS resilience response analysis treats each resilience attribute as contributing equally to the overall resilience performance. Our literature review and feedback from the organisations participating in the survey emphasise that the relationships between the attributes are not straightforward and are unique to each capability system. Ideally, the weighting of questions and attributes could be included in future survey refinement using techniques such as Rasch modelling (Rasch 1980).
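The difference between the current equal-contribution treatment and a weighted alternative can be illustrated with a minimal sketch. The attribute labels (A1 to A6), the scores, and the weights below are hypothetical placeholders and are not TORS data; the weighted mean is only one simple way of expressing unequal contributions and is not Rasch modelling.

```python
from statistics import mean


def overall_score(attribute_scores, weights=None):
    """Combine per-attribute scores into a single overall score.

    With weights=None every attribute contributes equally (a plain mean).
    With weights supplied, a weighted mean is returned instead.
    """
    if weights is None:
        return mean(attribute_scores.values())

    missing = set(attribute_scores) - set(weights)
    if missing:
        raise ValueError(f"no weight supplied for: {sorted(missing)}")

    total_weight = sum(weights[name] for name in attribute_scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")

    weighted_sum = sum(
        score * weights[name] for name, score in attribute_scores.items()
    )
    return weighted_sum / total_weight


# Hypothetical mean Likert scores (1 to 5) for six placeholder attributes.
scores = {"A1": 4.0, "A2": 3.0, "A3": 2.0, "A4": 4.0, "A5": 3.0, "A6": 2.0}

# Equal contribution of every attribute.
equal = overall_score(scores)

# Hypothetical weights that emphasise A1 and A4.
weights = {"A1": 2.0, "A2": 1.0, "A3": 1.0, "A4": 2.0, "A5": 1.0, "A6": 1.0}
weighted = overall_score(scores, weights)

print(f"equal-weight score: {equal:.2f}")
print(f"weighted score:     {weighted:.2f}")
```

In this illustration the overall score changes once the two higher-scoring attributes are given more weight, which is why the choice of weights would need an empirical basis for each capability system.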

Benchmark subjectivity. Survey methodologies that use quantitative approaches often rely on respondents’ subjective opinions, as in the examples from our literature review (Morales et al. 2019; Rodríguez-Sánchez et al. 2019). In addition, problems with survey scale development practices have often led to difficulties in interpreting the results of field research (Cook et al. 1981; Schriesheim et al. 1993; Hinkin 1995). Our selection of the Likert scale for the extant training policy and resilience attribute questions has led to objective responses from the target populations, who report their actual training system experience. The desired performance benchmark, however, currently relies on the subjective perception of the critical stakeholders selected to respond to the benchmarking survey. Our approach to addressing this issue is still under development. It involves 1) automating the desired performance benchmarking based on modelling and simulation of resilience mechanisms and resilience effects, and 2) educating benchmarkers before they participate in the survey to improve their understanding of resilience concepts and the associated system priorities and trade-offs in the context of their training systems.
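To show where the benchmark enters the comparison, the following minimal sketch computes, for each attribute, the gap between a desired benchmark level and the actual responses. It assumes that actual responses are summarised by their median, which is a common choice for ordinal Likert data and is not necessarily how the TORS analysis does it. The attribute labels, responses, and benchmark levels are hypothetical.

```python
from statistics import median


def attribute_gaps(actual_responses, desired_benchmark):
    """Return desired minus actual (median) for each attribute.

    A positive gap means the actual performance reported by respondents
    falls short of the level the benchmarkers asked for.
    """
    gaps = {}
    for name, responses in actual_responses.items():
        actual_level = median(responses)
        gaps[name] = desired_benchmark[name] - actual_level
    return gaps


# Hypothetical Likert responses (1 to 5) from the target population.
actual = {
    "A1": [4, 4, 5, 3, 4],
    "A2": [2, 3, 2, 3, 3],
}

# Hypothetical desired levels set by benchmarkers.
desired = {"A1": 4, "A2": 4}

gaps = attribute_gaps(actual, desired)
for name, gap in gaps.items():
    print(f"{name}: gap = {gap}")
```

Because each gap is computed directly from the benchmark, any subjectivity in the desired level carries straight through to the reported shortfall, which is the concern raised above.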

Implementation sustainability. TORS implementation is currently conducted on a small scale, facilitated by our researchers in consultation with the key stakeholders from the participating training units. Upscaling the TORS implementation and facilitating longitudinal studies requires a skilled workforce and sustainable data collection and management practices.

There are additional challenges outside the training environment. The authors firmly believe that the resilience measurement instrument reported in this paper is inclusive and scalable and can be contextualised to any organisation seeking to measure and improve its resilience. The survey questions, however, are context-specific and can be reused to measure resilience in training organisations only. Questions for any other type of organisation could be formulated as required using the proposed methodology. If the reported resilience survey design principles are adhered to, this could open exciting new research opportunities for like-term cross-organisational resilience performance analysis.

5.4. Future research directions

The survey instrument continues to evolve. The generic nature of the resilience metrics and the survey design makes them suitable for contextualisation to any organisation concerned with its training, as well as for modification for use with other organisational types. Examples include capability systems such as sea platforms, commercial and government enterprises, safety systems, supply chains, and technical systems. The data collection performed in this research, however, did not reach beyond military organisations and needs to be expanded in the future to ensure inclusiveness and to enable cross-organisational data sharing and comparative analysis. The scalability of such data collection in less regimented environments needs to be addressed for this approach to reach beyond Defence organisations.

The data analysis performed was also limited to the trends associated with target populations outlined in , and did not take full advantage of the other nine demographic filters and attribute weighting scores because of resource limitations, the relatively large sample from the four applications, and a tight research timeframe. The collected data are available for future in-depth trend analysis and longitudinal studies, with the current samples serving as a resilience performance baseline against which participating organisations can compare their future performance.

A large body of work is also required to make the proposed organisational resilience performance management approaches sustainable and to enable the transition of the research solutions to organisational management. The research strategies to enable continuous improvement of the research and its conduct under organisational management have been developed with organisational practitioners in mind and are at different stages of implementation. They are as follows: (1) to develop procedural guidance for resilience survey conduct and information management; (2) to simplify and improve the efficiency of the data analysis and reporting approaches; and (3) to develop training and education strategies for organisational resilience survey management, conduct, and reporting.

The survey conduct requires tailoring to the needs of the participating units to ensure that those needs are fully met and not compromised. The survey data management approach requires practical implementation as a digital multi-layer platform owned by the participating organisation. This work continues with the organisations that have participated in the survey in the past and with others willing to conduct the resilience survey in their training systems for the first time. We hope to continue supporting these organisations and propose the development of comprehensive procedural guidance for survey conduct and information management as a direction for future research. The research also presents ample opportunities for longitudinal studies and cross-organisational sharing of organisational resilience improvement efforts.

Other developments underway and proposed for future research include automating the analysis and reporting of survey results to improve efficiency and to minimise the future survey data processing burden on participating organisations. The initial research effort has made progress in automating the survey data analysis and reporting in two areas: (1) qualitative thematic analysis and (2) quantitative results reporting. Both pursuits produced promising results but require a significant research effort to achieve commercially viable products.

The subjectivity commonly associated with survey metrics was partially resolved in this research for actual resilience performance. The research still needs to resolve the subjectivity in reporting desired resilience performance, because benchmarkers express subjective opinions that could be influenced by competing priorities and ill-informed trade-off decisions. Some of this difficulty is likely due to the small population of benchmarking governance leaders in a training organisation and their low familiarity with resilience concepts and attributes. The solution is complex and might require combining several future research activities, such as: (1) developing and implementing benchmarker training, to ensure informed decisions when benchmarkers assess the desired organisational resilience performance; (2) further quantifying resilience aspects and their relationships to improve the objectivity of their assessment; (3) automating the allocation of resilience attribute weightings, or developing other approaches that could withstand the rigour of statistical testing; and (4) automating the survey analysis and reporting.

6. Conclusion

This paper outlines the development, verification, and validation of a new survey instrument for assessing the resilience of an organisation’s training systems. The ultimate purpose is to posture organisational training to support overall organisational sustainment and evolutionary competitiveness. The six resilience attributes that form the basis of this instrument juxtapose organisational ability and capacity. A seventh aspect assessed is the efficacy of extant policies on a systems approach to training (i.e. continuous improvement). Our survey reporting has developed distinct ways for management to compare its expectations of resilience, or its resilience posture, with the survey response and to make trade-off decisions, accepting a significant interrelationship among the resilience attributes (dimensions). Compared to recent peer research measuring organisational resilience, our research has a unique focus; has been applied more extensively; incorporates a more robust mixed-method approach that has been progressively and comprehensively verified and validated over four major applications; and, we are proud to report, has delivered significant benefits to participant organisations. Our research is original and provides organisational leadership with a focused diagnostic instrument for their training aspects, and an opportunity to contextualise this organisational type-agnostic resilience measurement methodology to other organisational aspects as required. An organisation’s training system often plays a pivotal role as a dynamic enabler for change, helping the organisation adapt to the volatility of its operational environment and to future uncertainty and, thus, secure overall organisational sustainment and evolutionary competitiveness. Measuring its resilience is now possible with the survey instrument reported in this paper. We continue to advance this research to address the issues and constraints on future survey applications identified during this effort.

The breadth and depth of our survey applications in a wide variety of Defence training establishments, both in Australia and abroad, provide exciting opportunities for comparative cross-organisational analysis, which is currently underway. Comparing results from surveys conducted in different periods would also enable future longitudinal studies to monitor organisational resilience performance as a truly dynamic characteristic that reflects the nature and quality of the organisational design and its evolution.

We look forward to further questions and feedback from resilience practitioners and academics. We are open to collaboration opportunities and to sharing further details of this approach with those wishing to apply our instrument.

7. Annexes

TORS Quantitative Resilience Questions [Actual Performance (Facilitator) Set]

TORS Quantitative and Qualitative Training Policy Efficacy and Qualitative (Free Response) Questions [Actual Performance (Facilitator) Set]

Disclosure statement

No potential conflict of interest was reported by the author(s).

Supplemental data

Supplemental data for this article can be accessed online at https://doi.org/10.1080/14488388.2024.2307083.

Additional information

Notes on contributors

Victoria Jnitova

Victoria Jnitova is a RAN Training Systems officer. She completed her PhD in June 2023. She also holds Master’s degrees in Systems Engineering, Business and Project Management. This paper is an outcome of her PhD research, written in collaboration with Defence and academia, both Australian and international. It brings together the authors’ combined knowledge and application of multi-disciplinary tools to develop an innovative approach to measuring organisational resilience.

Keith Joiner

Dr. Joiner is a senior lecturer and researcher at the University of New South Wales in Canberra. His degrees include aeronautical engineering, a Master of Aerospace Systems Engineering with distinction through Loughborough University, a Ph.D. in Calculus Education, and a Master of Management. He has extensive experience in test and evaluation, system design, project management, cyber security, and other fields. The last position of his 30-year Defence career, as Director General of Australia’s Test and Evaluation, culminated in the award of a Conspicuous Service Cross.

Adrian Xavier

Adrian Xavier was born in Singapore and joined the Singapore Air Force (RSAF) from school in 1988. He left Singapore in 2003, after his last tour at Hill AFB, Utah, USA, as the RSAF Senior National Representative for the Peace Carvin IV project, the F-16 Block 52+ FMS acquisition project with the USAF and Lockheed Martin. Prior to that, he was Officer Commanding at Tengah Air Base for the A4, E2C and F-16 fleet, overseeing maintenance and engineering issues at Ordinary, Intermediate and Depot Levels. He later joined the APS as Director Training Governance for the Royal Australian Air Force (RAAF) Air Force Training Group in 2010. The position was renamed Director Strategy, Performance & Governance in January 2018. CPCAPT Xavier graduated from the University of Bristol, UK, with a BEng (Hons) in 1995 and holds a Master’s in Aeronautical Science (MSc) from the USA and a Master’s in Systems Engineering from the University of New South Wales (UNSW) at ADFA.

Elizabeth Chang

Professor Chang leads ICT Big Data research at Griffith University, Queensland. She also leads research groups targeting key issues in Logistics ICT, Defence Logistics and Sustainment, Big Data Management, Predictive Analytics, Cyber-Physical Systems, Trust, Security, Risk and Privacy. She has delivered over 50 keynote and plenary speeches at major IEEE conferences in the areas of Semantics, Business Intelligence, Big Data Management, Data Quality, etc. She has published 7 authored books and over 500 international journal and conference papers, with an H-index of 47 (Google Scholar) and over 12,000 citations.