ABSTRACT

Taking a person-centered approach, we explored different constellations of social-psychological characteristics associated with (dis)information belief in order to identify distinct subgroups whose (dis)information belief stems from different social or political motives. Hungarian participants (N = 296) judged the accuracy of fake and real news items with a political (pro/anti-government) and nonpolitical narrative. Two profiles of ‘fake news believers’ and two of ‘fake news non-believers’ emerged, with a high conspiracy mentality being the main marker of the former two. These two ‘fake news believers’ profiles were distinguishable: one exhibited extreme trust in the media and in politicians, and the other deep distrust. Our results suggest that not only political distrust, but also excessive trust can be associated with disinformation belief in less democratic social contexts.

Introduction

Disinformation, fabricated knowledge content for profit or political purposes (Wardle, 2017), is a pressing issue in today’s polarized societies and evolving media environments. Among the most obvious recent examples of the real-life consequences of disinformation was the rise of the anti-vax movement in response to the COVID-19 pandemic (Mahmud et al., 2021). It is also open to weaponization in hybrid wars to initiate, maintain or fuel political and military conflicts such as that between Russia and Ukraine (Mejias & Vokuev, 2017). On a more general level, disinformation threatens democratic processes (Colomnia et al., 2021). To combat it, therefore, it might be helpful to know more about the characteristics and societal contexts that encourage people to believe disinformation.

Disinformation beliefs have been linked to deficiencies in analytical thinking (e.g., Palonen, 2018; Pennycook & Rand, 2017), confirmation bias (Oswald & Grosjean, 2004) and motivated reasoning (Kahan, 2012, p. 201) – the last of which suggests that disinformation is being interpreted in light of existing views and attitudes (Szebeni et al., 2021). Factors like news consumption habits (Calvillo et al., 2021), personality traits (e.g., Barman & Conlan, 2021), and other social-psychological characteristics such as conspiracy mentality (Halpern et al., 2019) and interpersonal trust (Sindermann et al., 2020) influence susceptibility to disinformation.

The standard methodology applied in research on disinformation thus far has been the variable-centered approach, in other words exploring associations between predictor variables and receptivity to disinformation. While calculations in the variable-centered approach are based on each participant’s individual replies, they aim at exploring the associations at the group level that describe the dynamics of an average participant, and so are unable to describe the variety of individual response patterns. The variable-centered approach thus fails to address the potential existence of distinct subgroups or communities within the population, whose members’ belief in disinformation originates in varying experiences or capacities and may serve different social or political motives. In the variable-centered approach, the entire sample is characterized collectively, and usually only one set of averaged parameters is generated (Howard & Hoffman, 2018). The person-centered approach we adopt in this paper, on the other hand, focuses on the individual, not the variable, as the unit of analysis. It identifies subgroups of people who share a set of characteristics that differentiate them from other sub-populations, and as such presents an alternative perspective on the interaction of multiple motivations in people.

Person-centered approaches have proven to be useful in many areas of social psychology; e.g., in research on prejudice and stigmatized minority groups (e.g., Agadullina et al., 2018; Dangubić et al., 2021) and in research on integration profiles among immigrants (Renvik et al., 2020). Most pertinent to the present research, Frenken and Imhoff (2021) used such an approach to identify specific response patterns in claims regarding conspiracy theories, which had been missed in previous research conducted with a variable-centered methodology. Meeusen et al. (2018) called attention to the blindness of variable-centered generalized measures of prejudice to its variability across different social groups. We argue that the person-centered approach allows scrutiny of whether political and nonpolitical (dis)information appeals to different people for different reasons.

We conducted our study in Hungary, an Eastern-European, post-communist country. The political climate in Hungary is highly polarized, with both the government and the opposition spreading disinformation in a constrained media environment. While in the past decades populist (radical) right-wing parties became prominent in Western European countries (see e.g., Bjånesøy & Ivarsflaten, 2016), Hungary – while being an EU country – developed into a prime example of the maintenance of an (authoritarian) populist regime (Ádám, 2019) and exemplifies democratic decline. This sociopolitical context is ideal for the person-centered method, as we expect more heterogeneity in the population with regard to disinformation beliefs, conspiracy mentality, and trust in public bodies.

In this study, therefore, we explore the extent to which trust in the media and in politicians, satisfaction with the government, conspiracy mentality, media-consumption behaviors, and educational level can be used to identify groups of people who differ in how accurately they are able to identify (dis)information in the Hungarian sociopolitical context.

The correlates of disinformation belief

Previous research on disinformation, mainly using a variable-centered approach, identified several correlates relevant to our study. Conspiracy mentality is plausibly associated with disinformation belief (e.g., Frischlich et al., 2021). It implies the acceptance of conspiracy beliefs, frequently defined as explanatory beliefs involving multiple, powerful actors who secretly pursue a hidden goal that is widely considered illegal or malicious (Zonis & Joseph, 1994). Even though disinformation may contain conspiratorial narratives, conspiracy theories differ from mere disinformation in that they are resistant to falsification, complex, and speculative (Douglas et al., 2019). On the one hand, conspiratorial thinking is a relatively stable personality trait describing individual differences in the extent to which people believe in conspiracies or conspiracy theories (e.g., Frenken & Imhoff, 2021; Imhoff & Bruder, 2014). On the other hand, it is also connected to processes of social cognition, such as prejudice against outgroups (Imhoff & Bruder, 2014).

Conspiratorial thinking has been connected to a lack of trust in official institutions such as traditional or legacy media (van der Linden et al., 2021). It has been suggested that mainstream media may play an important role in how citizens perceive societal problems, and that distrust in mainstream sources may encourage people to turn more to alternative media, and to be more inclined to believe the (dis)information that is shared there (Swire et al., 2017). Social media are among the main sources and amplifiers of disinformation (e.g., Singer, 2014). According to a study conducted by Calvillo et al. (2021), participants who spent more hours consuming political news were worse at distinguishing between fake and real information. In the US, Xiao et al. (2021) have shown that social media news use was associated with higher conspiracy beliefs related to the COVID-19 pandemic, and trust in social media news further strengthened the relationship between social media news use and conspiracy beliefs.

Be it mainstream media or social media, a lack of variety in terms of consumption may be associated with encapsulation into a ‘filter bubble’ (Sindermann et al., 2020). This is particularly likely in heavily constricted media environments, in which those in governmental power create ‘echo chambers’ by echoing the same narratives from different government-influenced channels. Encountering disinformation or biased reporting may reduce trust in political institutions – foreign actors might even purposefully employ false information to destabilize democratic institutions (Wardle, 2017). Moreover, distrust in political institutions could foster interest in other, sometimes less reliable sources of information. Nevertheless, although distrust in politicians and political institutions may cause harm, excessive trust – in politicians or media – may not be ideal, either. Xiao et al. (2021) talk about the danger of a ‘blind trust’ in media that stimulates the spread of disinformation and hinders efforts to combat its impact. Some degree of distrust in political institutions and media contents may, thus, facilitate the discerning of fake news from real news in environments with less democratic forms of government.

Research on ideological leaning and disinformation acceptance is inconclusive. Some studies have shown that conservatives may be more likely to believe (e.g., Allcott & Gentzkow, 2017) and share disinformation (Osmundsen et al., 2021; Tucker et al., 2018). However, others have suggested that extreme partisans, regardless of the direction of their political leaning, share the most fake news. This supports the motivated reasoning account (Kahan, 2012; Kunda, 1990), according to which people at both ends of the political spectrum will more easily accept information that is in line with their beliefs (e.g., Pasek et al., 2015), regardless of its accuracy (Faragó et al., 2019). In other words, believing disinformation is related to confirmation bias: people believe information that aligns with their previous knowledge or ideological stance. Another possibility is that it is not ideological leaning per se that matters, but the stance toward the prevailing political reality and governance reflected in support of or opposition to the current government. This may apply particularly in Europe, where the economic left-right divide is complemented with a social-cultural divide that spans from the green/libertarian/alternative to nationalism/authority/traditionalism (Hooghe & Marks, 2018).

Finally, it is implied in most studies that higher levels of education, literacy and analytic thinking may act as a buffer against disinformation. It was found recently that a higher educational level and trust in information from the government were associated with lower beliefs in COVID-19 disinformation (Melki et al., 2021). Similarly, lower levels of health literacy (Scherer et al., 2021) and education (Kim & Kim, 2020) have been connected to higher susceptibility to health disinformation. However, in the US context it was found that educational level was not associated with believing false headlines (Calvillo et al., 2021), and that politically knowledgeable individuals – admittedly not necessarily related to educational level – still consumed fake news (Guess et al., 2018). Pennycook and Rand (2018), in turn, found that susceptibility to fake news is mainly driven by a lack of analytic thinking. It thus seems that the positive association between higher education and a lower propensity to believe disinformation is dependent on both the political context and the content of the news. In sum, given the mixed and conflicting explanations and results reviewed above, a person-centered approach could help to shed more light on the phenomenon of believing disinformation.

The Hungarian context

Humprecht, Esser, and Van Aelst (2020) recognized macro-level factors that may make countries more resilient to disinformation, such as low polarization in society, low levels of populist communication, high trust in news media, strong public-service media, less fragmented, overlapping audiences, smaller ad markets and less use of social media. Although Hungary was not involved in their study, several of the factors listed are important when looking at Hungarian society and its proneness to disinformation belief.

The Viktor Orbán-led Fidesz party has been in power in Hungary since 2010, and with a supermajority in parliament has transformed the media landscape. The government influences or controls both the public- and part of the private-sector media. Whereas in other democratic countries public-service media is considered an effective tool in the fight against disinformation (e.g., Horowitz et al., 2021), the opposite is true in Hungary, in which it has become a government-controlled source of disinformation (e.g., Polyák, 2019); moreover, the same false narratives are to be found in public, private, and social media (Szicherle & Krekó, 2021). Although in theory the public has access to foreign media – in 2021, 91% of Hungarian households had internet access (Eurostat, 2021) – large parts of the population are completely reliant on Hungarian news sources. One reason for this is the language barrier; in 2016, only 41% of the population (aged 25–64) spoke a foreign language (Eurostat, 2016).

False narratives and conspiracies are also spread directly by the government, including prime minister Viktor Orbán. Since 2017, for instance, Fidesz has relied on anti-Soros conspiracy theories that utilize anti-Semitic tropes in which the Hungarian-American financier George Soros is portrayed as a puppet master attempting to undermine the Hungarian government, to finance immigrants, and to fund democratic actors in and outside of the country (Plenta, 2020). Plenta (2020) concluded in his analysis of anti-Soros narratives in Hungary and Slovakia that anti-Soros conspiracies were widely believed in Hungary – unlike in Slovakia – because the narratives were spread not only by Orbán and his government, but also by the government-affiliated mass media. More generally, it is not only political disinformation that has run amok in Hungary: science and technology conspiracy theories have gained more traction in the country than is common in Europe (Special Eurobarometer 516, 2021).

Orbán’s populist logic – the distinction between ‘enemies’ and ‘the people’ – has generated antagonism and has polarized the country (Palonen, 2018). While government communication often features external ‘enemies’ from which the government protects ‘the people’, Orbán is able to renew hegemony – and stay in power – through showing statesmanship, as well as the features of an ordinary man (Szebeni & Salojärvi, 2022). The main cleavage in Hungarian politics currently runs between the Fidesz-led government and the opposition – consisting of various parties across the political spectrum. As such, the political arena resembles a two-party structure, which in turn provides fertile ground for further polarization (Humprecht et al., 2020), and the increased dissemination and production of online disinformation.

Indeed, it is not only the Fidesz-led government, but also the opposition, and the opposition-affiliated media, that engage in the spreading of false information. For instance, the candidate of the united opposition in the 2022 parliamentary elections – Péter Márki-Zay – has claimed that Orbán regularly sought help for a psychiatric condition at a private clinic in Graz – a common but unfounded (Diószegi-Horváth, 2022) disinformation narrative in Hungary. The term ‘fake news’ is used by both sides as a discursive signifier, to delegitimize the other side and to define what is ‘truth’ (the situation is closely analogous to the US context; see Farkas & Schou, 2018). The opposition connects ‘fake news’ fabrication to the government-friendly media, ultimately linking government supporters with irrationality and thereby delegitimizing them (such as accusing the public media of spreading fake news about the war in Ukraine; Szurovecz, 2022). Meanwhile, the Orbán-led government uses the term ‘fake news’ in much the same way as former US president Donald Trump used it, to delegitimize and attack mainstream, independent media. Such a strategy could be used to dismantle critical journalism on the discourse level. A concrete example of this is when Orbán refuses to take questions from independent journalists, claiming that they represent ‘fake news factories’ (Index.hu, 2018). Spreading false information and weaponizing fake news is part of the arsenal of both sides.

The present study

Although there has been a surge of academic interest in what predicts the believing of disinformation, the research so far has been conducted almost exclusively within the variable-centered framework. By way of contrast, we take a person-centered approach to differentiate between people who either believe or contest disinformation. When investigating research questions aimed at (a) classifying people into common subpopulations based on variables and (b) comprehending the relations of these subpopulations with correlates or predictors, the person-centered approach is particularly useful as it identifies the dynamics of sub-populations in the sample (Howard & Hoffman, 2018). As such, instead of asking ‘How are trust in media/politicians, satisfaction with the government, conspiracy mentality, media consumption, and formal education associated with disinformation belief?’, in this study we ask: ‘Are there distinct profiles based on trust in media/politicians, satisfaction with the government, conspiracy mentality, media consumption, and formal education, and if so, how do people belonging to these different profiles also differ in their disinformation belief?’

In addition, we focus on both political and nonpolitical disinformation to explore how specifically the believing of fake news is connected to the content of the (dis)information, i.e., the extent to which the news in question is politically partisan. Some recent findings indicate that the content of the news, even if politically charged, does matter. Erlich and Garner (2021) conducted a study among Ukrainians targeted by Russian disinformation campaigns in 2019. Although, on average, the respondents distinguished between true and fake stories, the content of the disinformation mattered: if it concerned the economy it was more likely to be believed than if it was about politics, historical happenings, or the military. Moreover, as could be expected based on the notion of motivated reasoning, they found that Ukrainians with partisan and ethnolinguistic ties to Russia were more likely to believe pro-Kremlin disinformation across topics. We will expand on the idea that content matters by including disinformation devoid of political meaning.

Moreover, as seen from the literature review above, most previous research on disinformation has either been conducted in Western contexts – mainly in the United States – or has explored Russian disinformation campaigns from a Western perspective. It is therefore important to examine other countries where disinformation is prevalent, such as Hungary. Hungary is an example not only of democratic decline, but also of the maintenance of an authoritarian populist regime, and we know from previous studies that populist communication can make societies more prone to disinformation belief (e.g., Humprecht et al., 2020). Viktor Orbán and the ‘Hungarian model’ – including the political and media sphere – are also receiving increasing international attention; for example, Orbán gave the opening speech at CPAC 2022 (Conservative Political Action Conference) in the US. Hungary may be especially well suited to the person-centered approach, as the relation between trust toward institutions, conspiracy mentality and fake news belief may not be as straightforward as it is in most Western contexts. Because the Hungarian government actively spreads disinformation, we expect more heterogeneity in the society regarding disinformation belief, media use, and trust components, which makes it important to take an approach that can identify these different subpopulations. This may also assist in better tailoring interventions to combat disinformation in Hungary and other similar contexts.

We explored how trust in the media, trust in politicians, satisfaction with the government, conspiracy mentality, media-consumption behaviors, and educational level characterize and help to identify groups of people who differ in their capacity to distinguish between fake and real news. We asked participants to evaluate pro-government, anti-government, and nonpolitical news in terms of their truthfulness.

Method

Participants and procedure

The data was collected in Hungary between 8 April and 20 May 2019 and is publicly available (see Data availability section). Our participants were recruited through social media. Psychology Master’s students from Eötvös Loránd University (Budapest, Hungary) shared the link to the questionnaire on their pages with the goal of recruiting as many participants as possible in the given timeframe. The data collection ended one week before the 2019 European Parliamentary elections. The participants gave their informed consent, were not monetarily or otherwise compensated, and could withdraw at any time. The research was approved by the Ethical Committee of Eötvös Loránd University, Budapest, Hungary.

Participants who did not complete at least 99% of the questionnaire were excluded. The final sample included 296 participants, with a mean age of 36.41 years (SD = 15.86). In terms of gender, 169 (58.7%) identified themselves as female, 115 (39.9%) as male, four as other (1.4%), and eight (2.7%) gave no indication. As regards the educational level, 43.9% of the participants had at least a bachelor’s degree (15.5% of participants had lower education than high school, 38.9% of participants had a high school diploma, and 42.5% had some form of degree in higher education). In terms of socioeconomic status, 33.2% of the participants reported living comfortably, 48% reported coping on their current income, 12.5% were finding it difficult, and 3.4% found it very difficult to live on their present income.

Measures

Beliefs about the news

Participants were asked to rate the accuracy of various news headlines taken from Hungarian news websites in the months prior to the data collection. Responses to the question ‘To what extent do you think this news is true?’ were given on a Likert-type scale ranging from one to seven (1 = I am very sure this news is not true, 4 = the news is as likely to be true as it is likely to be false, and 7 = I am very sure that this news is true). This response scale draws on prior research on accuracy and believability (e.g., Clayton et al., 2020; Faragó et al., 2019; Pennycook & Rand, 2018). A total of 18 news items were presented (fake: 3 pro-government, 3 anti-government, 3 nonpolitical; real: 3 pro-government, 3 anti-government, 3 nonpolitical) in randomized order. All the items were in the format in which they would have been encountered on Facebook, and included the headline, a picture and a byline, but no source. The news items are included among the Supplementary Materials.

In selecting the news items we followed best practices in the literature (e.g., Calvillo et al., 2021; Pennycook & Rand, 2018; Scherer et al., 2021): political news had to have a pro- or anti-government narrative, whereas nonpolitical news had to be strictly nonpolitical. All the items had to make a claim that could be fact-checked, and all coauthors conducted fact-checking individually that included source and context analysis. Some of the fake news items had also either been refuted previously or been challenged in court. Our index of belief related to a particular type of news was computed as the average level of belief across the three news items in that category.
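The index construction described above can be illustrated with a minimal sketch. The category names and ratings below are hypothetical values chosen for illustration, not data from the study; only the rule of averaging the three items in a category comes from the text.

```python
from statistics import mean

# Hypothetical 1-7 accuracy ratings from a single participant.
# Category names and values are illustrative assumptions, not study data.
ratings = {
    "fake_pro_government": [2, 3, 1],
    "fake_anti_government": [5, 4, 6],
    "fake_nonpolitical": [3, 3, 3],
}


def belief_index(item_ratings):
    """Average the ratings of the three news items in one category."""
    return mean(item_ratings)


# One belief index per news category for this participant.
indices = {category: belief_index(items) for category, items in ratings.items()}
```

Averaging rather than summing keeps each index on the original one-to-seven response scale, so indices remain comparable across news types.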

Cronbach’s α and McDonald’s omega were both computed for all types of news scales, with the omega values shown in brackets. The reliability coefficients for believing anti-government fake news were .65 (.65); anti-government real news .41 (.44); nonpolitical fake news .37 (.41); nonpolitical real news .45 (.47); pro-government fake news .71 (.71); and pro-government real news .34 (.42). The reliability coefficient for fake news in general was .66 (.59), and for all real news .47 (.47).
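For readers less familiar with the coefficient, the standard formula for Cronbach’s α can be sketched as follows. This is a generic illustration using the Python standard library, not the software used in the study, and the ratings are made up.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha for a scale.

    items: list of per-item rating lists, each holding one rating per
    participant, in the same participant order.
    """
    k = len(items)                      # number of items in the scale
    n = len(items[0])                   # number of participants
    # Total scale score per participant.
    totals = [sum(item[p] for item in items) for p in range(n)]
    # Sum of the individual item variances (sample variance).
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical ratings of three news items by five participants.
example_items = [
    [2, 4, 3, 5, 1],
    [3, 4, 2, 5, 2],
    [1, 5, 3, 4, 2],
]
alpha = cronbach_alpha(example_items)
```

With only three items per scale, α is sensitive to each inter-item correlation, which is one plausible reason why short scales of heterogeneous news items can show low coefficients.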

Satisfaction with government

The participants indicated their satisfaction with the government by responding to two questions: ‘Thinking about the Hungarian government, how satisfied are you with the way it is doing its job?’ (on a scale ranging from 0–10, 0= extremely dissatisfied, 10= extremely satisfied); ‘On the whole, how satisfied are you with the way democracy works in Hungary?’ (on a scale ranging from 0–10, 0= extremely dissatisfied, 10= extremely satisfied). The questions were taken from the European Social Survey. The average score for these two items constituted our measure of general satisfaction with the government. The Cronbach’s α was .92, the Spearman-Brown coefficient .92. This measure served as our indicator of political orientation – at the time we took the measurements, a more typical conservative/liberal or left/right divide would not have made sense as the all-encompassing cleavage in Hungary’s political arena ran between government and opposition.
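For a two-item measure, the Spearman-Brown coefficient is a simple function of the correlation between the two items. The sketch below shows that general formula; the function names are illustrative, and the inter-item correlation in the usage line is a hypothetical value, not one reported in the study.

```python
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores."""
    mean_x, mean_y = mean(x), mean(y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = sum((a - mean_x) ** 2 for a in x) ** 0.5
    spread_y = sum((b - mean_y) ** 2 for b in y) ** 0.5
    return covariance / (spread_x * spread_y)


def spearman_brown(r):
    """Spearman-Brown reliability of a two-item scale with inter-item correlation r."""
    return 2 * r / (1 + r)


# Hypothetical inter-item correlation, used only to show the arithmetic.
example_reliability = spearman_brown(0.85)
```

Under this formula, a hypothetical inter-item correlation of .85 would correspond to a coefficient of roughly .92.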

Trust in politicians and in the media

The participants indicated their trust in politicians and in the media on a scale ranging from 0 to 10, where 0 indicated no trust and 10 complete trust. The questions and scales were similar to those in the European Social Survey. The average score was 3.04 (SD = 2.04) for trust in politicians, and 3.57 (SD = 1.83) for trust in the media.

Conspiracy mentality

We asked the participants to complete the Hungarian version (translated by Orosz et al., 2016) of the five-item Conspiracy Mentality Questionnaire (Bruder et al., 2013). They rated their agreement with statements such as ‘I think that politicians usually do not reveal the true motives for their decisions.’ on a scale ranging from one to seven (1 = not true at all, 7 = completely true). The average score for these five items was calculated as a score for conspiracy mentality. Cronbach’s α was .79, and the average score was 4.5 (SD = 1.19).

Time spent on media consumption

We also asked the participants to indicate how much time, on average and in minutes, they spent on media consumption daily. This item served as a measure for all types of media content, without further specification.

Education

We measured the participants’ formal educational level on a scale ranging from 1 to 10, on which 1 = completed primary education, 5 = an advanced accredited vocational qualification and 10 = higher education with an academic degree; the participants indicated their highest educational degree. The categories were based on ESS templates. From the 10 educational levels, we created three categories: 1 = a lower education than high school, 2 = a high school diploma, and 3 = some form of degree in higher education.

Sociodemographic variables

The participants were also asked to indicate their age (in years), gender (male, female, other) and socioeconomic status. The measure of socioeconomic status required them to indicate: ‘Which of the descriptions below comes closest to how you feel about your household’s income nowadays? By household we mean all the people with whom you live and share the costs of living.’ The scale ranged from one to four (1 = Living comfortably on my present income, 4 = Finding it very difficult on my present income). The question and scale were similar to those in the European Social Survey.

Results

Descriptive statistics

To detect outliers and unusual response patterns in the data, we inspected responses for straight-lining or alternating patterns and used boxplots to visualize response distributions. We also examined response times, flagging those significantly below average as potential indicators of random responding. In all cases, we found no evidence of outliers or unusual patterns. The absence of unusually quick response times suggests that participants likely did not fact-check the news items presented. Table 1 presents the descriptive statistics of the sample, as well as the means, standard deviations and Cronbach’s α values for all scales. The participants showed relatively low levels of trust in politicians (M = 3.04, SD = 2.04) and in the media (M = 3.57, SD = 1.83) on the 0–10 scales. They were generally also relatively dissatisfied with the government (M = 3.51, SD = 2.55). Conspiracy mentality in the sample was somewhat above the midpoint of the scale (M = 4.5, SD = 1.19). The average daily time spent consuming media was about one hour (M = 57.51, SD = 65.53 minutes). Across the whole sample, belief in the different news types ranged from relatively low (M = 2.98, SD = 1.24) for nonpolitical fake news to moderate (M = 4.16, SD = 1.25) for anti-government real news. Table 2 gives the Pearson correlations between all variables, with and without controlling for age and gender.

Table 1. Descriptive statistics.

Table 2. Descriptive statistics and correlations for study variables.

Clustering and profiles

We conducted a two-step cluster analysis based on the following standardized variables: education, conspiracy mentality, trust in politicians, trust in and time spent on media, and satisfaction with government. We decided to use a two-step cluster analysis because it has been used before in identity-related studies (Meca et al., 2015; Schwartz et al., 2011) and has the capacity to create groups using categorical and continuous variables, while also being one of the most reliable types of analysis in terms of the number of subgroups detected (e.g., Kent et al., 2014). Two-step cluster analysis is a hybrid method that employs a distance metric to first distinguish groups and a probabilistic method (similar to latent class analysis) to select the best subgroup model (Gelbard et al., 2007). We used log likelihood distance as a measure during the cluster analysis.
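Standardizing the clustering variables puts measures with very different ranges (for example, minutes of media use and a 0–10 trust scale) on a common footing before distances are computed. A minimal sketch of z-standardization is given below; the use of the sample standard deviation and the example values are assumptions made for illustration, and the sketch does not reproduce the two-step clustering procedure itself.

```python
from statistics import mean, stdev


def standardize(values):
    """Convert raw scores to z-scores (mean 0, sample standard deviation 1)."""
    center = mean(values)
    spread = stdev(values)  # assumption: sample (n - 1) standard deviation
    return [(value - center) / spread for value in values]


# Hypothetical daily media-use times in minutes for five participants.
media_minutes = [30, 60, 45, 120, 15]
media_z = standardize(media_minutes)
```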

In order to find the final cluster solution we followed the best practice outlined by Weller et al. (2020); that is: (1) we used multiple fit statistics; (2) we used BIC (Bayesian Information Criterion), as it is often identified as the most reliable indicator of model fit; and (3) we finally considered theoretical interpretability.

We present the model fit indices (BIC and AIC – Akaike Information Criterion) in Table 3, where lower BIC and AIC indicate a better fit. We further report entropy in Table 4 with respect to the news belief scales. Entropy is a diagnostic statistic, which indicates how accurately the model defined the clusters (Celeux & Soromenho, 1996) and how homogeneous the clusters are in terms of a variable. Generally, entropy above .8 is ideal. When choosing the final cluster solution, we also considered the number of participants in each cluster, seeking to avoid clusters smaller than 5% of the sample. The model fit indices and our theoretical interpretations fit best with the 4-cluster structure, even if this meant that 2.7% of our participants did not fit into any cluster.
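As a reminder of why lower values indicate better fit, the textbook definitions of the two criteria are sketched below. Both penalize the number of estimated parameters, with BIC penalizing more heavily as the sample grows. This is a generic illustration: the statistical software used for the analysis may compute the criteria in a cluster-specific way, and the BIC values in the example are invented.

```python
import math


def aic(log_likelihood, n_params):
    """Akaike Information Criterion: 2k - 2 ln(L)."""
    return 2 * n_params - 2 * log_likelihood


def bic(log_likelihood, n_params, n_obs):
    """Bayesian Information Criterion: k ln(n) - 2 ln(L)."""
    return n_params * math.log(n_obs) - 2 * log_likelihood


# Invented BIC values keyed by number of clusters (illustration only).
candidate_bic = {2: 1500.0, 3: 1420.0, 4: 1450.0, 5: 1480.0}

# The solution with the lowest BIC is preferred on statistical grounds.
best_by_bic = min(candidate_bic, key=candidate_bic.get)
```

A purely statistical choice of this kind is only one input; as described above, cluster size and theoretical interpretability were also considered.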

Table 3. Model fit indices (BIC and AIC) for different cluster solutions.

Table 4. Entropy for the different cluster models and news belief variables.

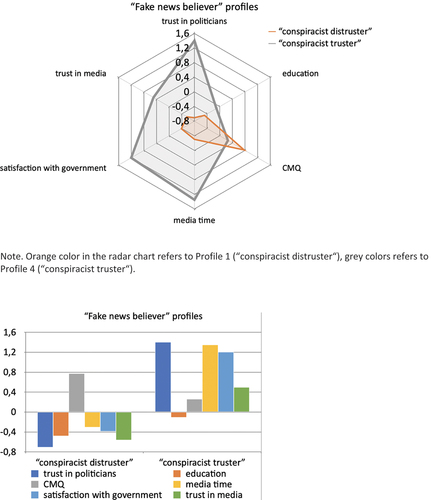

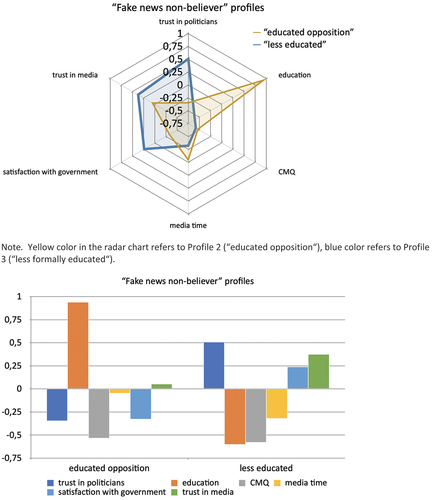

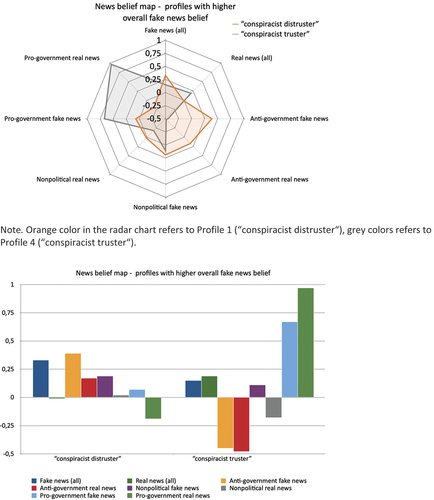

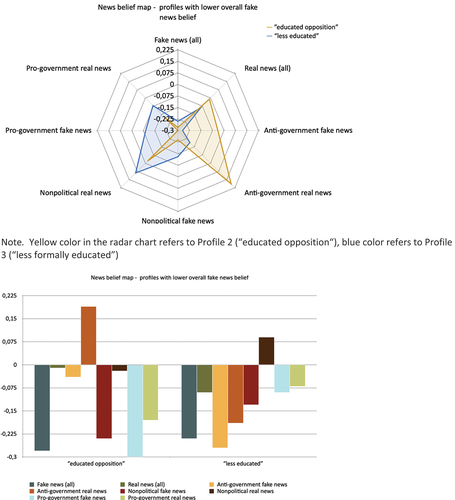

Following the clustering, we compared the mean values for the different fake and real news items in the different clusters. Apart from establishing the sub-categories of fake and real news, we also compiled all fake news and all real news in separate variables so that we could study the extent to which general fake/real news was believed. Table 5 shows the standardized cluster centroid values and the demographics of the cluster profiles (age, gender, and socioeconomic status). Table 6 shows the standardized means in the four clusters or profiles of participants. Figures 1 and 2 show the identified profiles in terms of the clustered variables. Figures 3 and 4 show the ‘news belief map’ of the above profiles. Table 7 shows the highest educational level of the profiles compared with the national average (KSH, Citation2016).

Figure 1. Cluster profile means of ‘fake news believer’ profiles on radar and bar chart.

Figure 2. Cluster profile means of ‘fake news nonbeliever’ profiles on radar and bar chart.

Figure 3. News belief map of profiles with higher overall fake news belief on radar and bar chart.

Figure 4. News belief map of profiles with lower overall fake news belief on radar and bar chart.

Table 5. Cluster centroids (standardized) and demographics of cluster profiles.

Table 6. Descriptive statistics of the four cluster profiles in terms of news belief (standardized).

Table 7. Highest education of profiles in our study and the national average (KSH, Citation2016).

We identified four profiles in total, two of which (Profiles 1 – ‘conspiracist distruster’ and 4 – ‘conspiracist truster’) could be characterized by a higher overall level of belief in fake news, and two (Profiles 2 – ‘educated opposition’ and 3 – ‘less formally educated’) by lower levels of fake news belief. Primarily, a high conspiracy mentality distinguished believers of fake news (Profiles 1 and 4) from those who questioned it (Profiles 2 and 3). One-way ANOVAs were used to compare the means of the different news belief items across the clusters (Table 8). To control for the inflation of Type I error (false positives) due to multiple comparisons, a Bonferroni correction, which adjusts the significance level (alpha), was applied to the results of the ANOVAs. Since there were no significant differences for the nonpolitical (fake) news items in the initial ANOVAs, there was no need to adjust the significance level or perform further comparisons for those items.
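For readers less familiar with the procedure, the following is a minimal sketch of a one-way ANOVA F statistic and a Bonferroni-adjusted significance level. The scores and the number of tests are invented for illustration and are not the study's data; obtaining a p-value would additionally require the F distribution, which is omitted here.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    all_scores = [x for g in groups for x in g]
    grand_mean = sum(all_scores) / len(all_scores)
    group_means = [sum(g) / len(g) for g in groups]

    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of individual scores around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def bonferroni_alpha(alpha, n_tests):
    """Per-test significance level after Bonferroni correction."""
    return alpha / n_tests


# Hypothetical belief ratings for one news category in three clusters.
toy_groups = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_anova_f(toy_groups)
print(f_stat, df_b, df_w)

# Hypothetical: six news categories tested at an overall alpha of .05.
print(bonferroni_alpha(0.05, 6))
```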

Table 8. One-way analyses of variance between groups in news belief items.

The results after Bonferroni correction are shown in Table 9 for four news item categories: anti-government fake and real news, and pro-government fake and real news (there was no difference between clusters in terms of nonpolitical fake and real news; see Table 8). In the Bonferroni-corrected comparisons, we found significant differences in beliefs across the different clusters for anti-government and pro-government news items. Notably, for both anti-government fake and real news, as well as pro-government fake and real news, there were consistent differences in beliefs between cluster 1 and the other clusters. In particular, significant differences in beliefs were observed between cluster 1 and all other clusters (2, 3, and 4) for pro-government fake and real news. We found similar patterns for anti-government fake news. In contrast, there were no significant differences for nonpolitical fake and real news, suggesting that beliefs regarding these types of news items did not differ across the clusters. While the Bonferroni correction may be conservative and could lead to Type II errors, it allows for greater confidence in the statistically significant results reported after the correction.
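The logic of Bonferroni-corrected pairwise comparisons among four clusters can be sketched as below. The raw p-values are made up for illustration and are not the values reported in the study; with four clusters there are six pairwise comparisons, so each raw p-value is multiplied by six and capped at 1.

```python
from itertools import combinations


def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of comparisons, capped at 1.0."""
    m = len(p_values)
    return {pair: min(1.0, p * m) for pair, p in p_values.items()}


clusters = [1, 2, 3, 4]
pairs = list(combinations(clusters, 2))  # all unordered cluster pairs

# Hypothetical raw p-values for one news category (NOT the study's results).
raw_p = {
    (1, 2): 0.001,
    (1, 3): 0.004,
    (1, 4): 0.0005,
    (2, 3): 0.20,
    (2, 4): 0.03,
    (3, 4): 0.60,
}

adjusted = bonferroni_adjust(raw_p)
significant = [pair for pair, p in adjusted.items() if p < 0.05]
print(len(pairs), significant)
```

In this invented example the comparison between clusters 2 and 4 is significant before correction but not after it, which illustrates the conservativeness noted above.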

Table 9. Results for Bonferroni’s multiple comparisons between different clusters in terms of news belief.

Discussion

Employing a person-centered approach, we identified four distinct profiles of news consumers based on their socio-demographic background and socio-political orientation (conspiracy mentality, trust in politicians and the media, satisfaction with the current government, time spent on media consumption and formal education). This method revealed that news consumers exhibit varying degrees of susceptibility to fake news, which is shaped by their formal education on the one hand and by socio-political attitudes and behaviors on the other hand. We discuss our results in relation to the Hungarian sociopolitical context.

The two more disinformation-resistant profiles (2 and 3, ‘educated opposition’ and ‘less formally educated’, respectively) differed substantially in their education level and, although they performed best of the four profiles, neither of them perfectly recognized fake and real news. Profile 2 (‘educated opposition’) was the most ‘fake news resistant’ profile, best at discerning fake and real news overall. However, they were skeptical toward pro-government real and anti-government false information. Despite being highly educated, they were likely to have been influenced by their opposition to the government. Formal education – especially at the higher level – may have equipped these participants with the information literacy skills that helped them discern false information from real. On the other hand, some of the processes underlying self-selection into higher education could also account for this pattern. It is known from previous research that knowledge alone may not suffice to recognize false information (Guess et al., Citation2018), but that analytical thinking may be related to the ability to discern false from real information (Faragó et al., Citation2023). Hence, interventions aimed at improving information literacy may be effective, given that those with deeper media knowledge are more skeptical of the messages (Jeong et al., Citation2012).

Pantazi et al. (Citation2021) suggest that people fall for disinformation because they are either too gullible toward false information or display too much epistemic vigilance; that is, are too skeptical of true, reliable information. It could be that although Profile 2 benefitted from their analytic skills in the context of nonpolitical topics, they became ‘too vigilant’ of pro-government (true) information and anti-government false information; these types of information would be the most likely to be fabricated by the pro-government media.

Meanwhile, those in Profile 3 (‘less formally educated’) showed a general disbelief in both real and fake news. What also set the two profiles apart is that Profile 2 represented the highest, and Profile 3 (‘less formally educated’) the lowest educational group. It is worth noting here that all the profiles differed from the Hungarian average in terms of education (see Table 7).

In the case of Profile 3 (‘less formally educated’), a moderate stance on the government, low media consumption and rating all news as inaccurate reflect general disengagement from public issues. These people may be less affected by partisan narratives, and thus more skeptical toward all news, including fake news. On the other hand, retreating from public issues and discussions as well as from political participation could hamper the ability to identify real news. This phenomenon serves to illustrate the double-sided nature of media consumption: online consumption may increase political participation (Boulianne, Citation2020), but because of the proliferation of disinformation and hyper-partisan content, it could also drive people from politics and news consumption (Tucker et al., Citation2018).

To understand these dynamics, one should acknowledge that politics in Hungary is marked by increasing polarization between the government and the opposition. Polarization has been associated with lower country-level resilience toward disinformation (Humprecht, Esser, and Van Aelst, Citation2020). Furthermore, Hungary has come to resemble a two-party system (government vs. a united opposition), which could further drive the production of online disinformation (Allcott & Gentzkow, Citation2017). Polarization is visible in our results in two respects. First, we found a clear difference between government (Profile 4, ‘conspiracist truster’) and opposition (Profile 2, ‘educated opposition’) supporters in terms of their trust in political (pro- or anti-government) fake and real news, both groups accepting and contesting news contents that best fitted their view of the government. Second, the two groups of ‘believers’ (Profiles 1 and 4, ‘conspiracist distruster’ and ‘conspiracist truster’, respectively) could also be distinguished based on their satisfaction with the government. This pattern points to the possible importance of motivated reasoning, which has been shown to play a role in many previous studies (e.g., Baptista & Gradim, Citation2022; Faragó et al., Citation2019; Szebeni et al., Citation2021). Motivated reasoning may be particularly important in the Hungarian context, as societal polarization has been suggested to amplify motivated reasoning – individuals may become more entrenched in their political stances and increasingly resistant to information that challenges their perspectives (Hart et al., Citation2009).

Previous research has shown that conspiracy beliefs go hand in hand with low institutional trust, e.g., distrusting those in power, distrusting experts (Imhoff et al., Citation2018), and distrusting legacy media (Stempel et al., Citation2007). These characteristics are also evident in Profile 1 (‘conspiracist distruster’). On the other hand, Profile 4 (‘conspiracist truster’) was marked by institutional trust, which is contrary to what has been reported in some previous studies (Agley & Xiao, Citation2021; Linden et al., Citation2021). Whereas previous research has documented associations between distrust in the media and susceptibility to disinformation (e.g., Hameleers, Citation2022), our results show that excessive trust may also be harmful. Research on the system justification motive, i.e., people’s desire to view the system or status quo as legitimate and fair, has shown that both extremes of the system justification continuum are associated with political passivity (Cichocka & Jost, Citation2014). Analogously, the relationship between trust in institutions and susceptibility to disinformation may also be U-shaped, in the sense that both extreme cynicism and blind trust with regard to political institutions and media may induce susceptibility to disinformation (e.g., Xiao et al., Citation2021): either one trusts nothing that the government and its institutions communicate, or one trusts all of it. Especially in a less democratic environment, some degree of distrust in political institutions may be useful for the purposes of discerning fake news from real news. Profile 4 is also characterized by a conspiratorial mind-set, hence the conspiratorial narratives espoused by government may appeal to them (e.g., Plenta, Citation2020). The heavy consumption of pro-government partisan media could have influenced these participants’ evaluations of the fake news we presented (Broockman & Kalla, Citation2022).

It is worth mentioning that previous research has shown that older individuals interact with (e.g., Loos & Nijenhuis, Citation2020) and share (Guess et al., Citation2018) fake news more than younger age groups. Two of the four cluster profiles were around 30 (mean age 29 and 34 for Profiles 1 and 3), while the other two were around 40 (40 and 42 for Profiles 2 and 4), the latter being close to the median age in Hungary, 43.3 as of 2020. Based on our results, there were no differences between these two age groups in terms of fake news belief.

A large body of scholarship is currently preoccupied with testing interventions against fake news targeted at consumers, while platforms and governments are also introducing changes aimed at stopping the spread of disinformation. Although inoculation (Lewandowsky & van der Linden, Citation2021), media literacy training (e.g., Guess et al., Citation2020) and interventions including accuracy prompts (e.g., Pennycook & Rand, Citation2021) have yielded promising results, several limitations have also been identified, such as a possible lack of long-term effects (van der Linden et al., Citation2021). Our results contribute to this literature by suggesting that a one-size-fits-all approach may not be effective – different people may believe the same disinformation for entirely different reasons. Studies on interventions have also tended to ignore the importance of the sociocultural context, thus exaggerating their generalizability across contexts. For example, an intervention that seeks to increase trust in government and media will be counterproductive in an environment in which the government and media spread unreliable information. However, encouraging general media-skepticism can also be fruitless, as it may discourage people from trusting reliable and legitimate sources as well. Our results indicate that interventions should be developed with different types of people and different contexts in mind. Through the person-centered approach it also becomes clear that all individuals possess a certain amount of gullibility and attentiveness – a narrative that can serve to reduce the polarized discourse around the topic of ‘fake news’. Furthermore, as opposed to the variable-centered approach, here we can better capture how some individuals have specific biases when it comes to (dis)information belief. Adopting a person-centered approach can also be helpful in other contexts in (re-)evaluating existing theories in disinformation research.

Besides intervention and study methods, our results also have implications for the Hungarian, as well as for other national contexts. Populist parties have moved from the fringes to the mainstream and have become government-forming parties. Disinformation and conspiracy theories may be advantageous for populist leaders in constructing the ‘enemies’ of ‘the people’, whom they claim to represent. As populist parties and leaders increasingly rise into governmental power, it is important to (re)consider how the voters’ attitudes may shift in terms of trust in government and public bodies. As our research shows, excessive trust in media and government is possible and can go hand in hand with a conspiratorial mind-set under extreme circumstances. This trust, coupled with belief in disinformation, can contribute to the maintenance of ‘post-truth’ populist regimes. Such governance can potentially polarize populations further, as it ‘removes the basis’ of political conversations and can yield distrust in the citizens who are not in favor of the current government. As disinformation can contain emotional narratives – and especially if it is present on both political sides – it can create affective polarization, besides political polarization. Such polarization can affect democratic processes through people supporting an increasingly undemocratic regime (exemplified by Profile 4, ‘conspiracist truster’) or by people turning away from politics (exemplified by Profile 3, ‘less formally educated’). While the Hungarian context is extreme, the described phenomena are also relevant for other country contexts where there are leaders in the subnational context who embrace conspiracy theories (e.g., in the US), or actively disseminate disinformation to maintain an autocratic system (e.g., Russia).

Limitations and future directions

Some limitations warrant mention. It would, in retrospect, have been worthwhile analyzing media consumption across platforms. The profiles we identified may have relied on distinct platforms or sources for their news, and this would have been associated with different levels of information literacy. This should be investigated in future research. We further acknowledge that we cannot be certain if the participants had already encountered the news items in our study, or whether they had any prior knowledge of these topics. This limitation is common in many studies that deal with current events, as it is hard to ensure that participants have not been exposed to the news items before.

Another limitation was our reliance on a convenience sample gathered via snowballing on social media. This did not give us a representative view of the Hungarian population at large. It should be noted, however, that sampling from populations who are active on social media, and potentially come across fake news on the internet, could in itself be valuable. Some scales that were similar to those found in the ESS (Citation2018) allowed a comparison with our sample: our participants scored 3.04 on the trust-in-politicians scale, compared with 3.73 in the ESS (both on a scale ranging from 1 to 11). A larger proportion of people in our sample than in the ESS sample claimed to live comfortably or to cope on their present income, whereas those in our sample were less satisfied with the government than those in the ESS representative sample. Given the influential role that education played in our results, we should point out that our participants were more highly educated, on average, than the general population. As such, it would be worth investigating the profiles of citizens with less education as well. The final cluster solution excluded 2.7% of our participants. With a larger sample size, a cluster that would also have included these people could perhaps have been identified. A larger sample size would also have allowed us to employ more complex models – we could, for instance, have looked at the interactions between different characteristics of the news items. This further emphasizes the need for more research among larger populations. As a final limitation, the study was not preregistered; however, all data and stimulus materials are openly available.

Conclusion

In conclusion, our study highlighted the benefits of adopting a person-centered approach in the study of disinformation – an approach that could give valuable insights into different subpopulations who may vary not only in the news they believe but also in why they believe it. Recognizing such differences between subgroups could be of value when developing and employing various interventions, and it could also help to explain why interventions regarding disinformation yield conflicting results.

Our study also highlights the fact that, although social and political trust – in the media or in public bodies – is often assumed to be negatively associated with a belief in conspiracy theories or in disinformation, it may also be detrimental, particularly if the sociopolitical context is highly polarized and politically constrained.

In themselves, our results indicate that research on disinformation, and on citizens’ capacities and motivations for information processing, should place a stronger emphasis on the sociopolitical context and the heterogeneity of populations. Because most theories and studies on these topics are developed and conducted in Western, mostly US, contexts and apply a variable-centered approach, many do not consider the context, nor do they break down the population into different subgroups. This can easily lead to overgeneralization. It would be worth testing purportedly universal theories in more varied contexts. Most studies also put forward individual-level solutions, aiming to enhance the capacities of the individual to resist false information. However, macro-level factors may seriously impair such solutions – structural problems require structural resolution. Nevertheless, our results do emphasize the need to develop interventions that are specific to the wider sociopolitical context, and to different subgroups. More generally, we call for more person-centered research, and more research in non-Western societies.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Data availability statement

The data that support the findings of this study and the supplementary materials are openly available in OSF at https://doi.org/10.17605/OSF.IO/QSKFM

Additional information

Funding

References

- Ádám, Z. (2019). Explaining Orbán: A political transaction cost theory of authoritarian populism. Problems of Post-Communism, 66(6), 385–27. https://doi.org/10.1080/10758216.2019.1643249

- Agadullina, E. R., Lovakov, A. V., & Malysheva, N. G. (2018). Essentialist beliefs and social distance towards gay men and lesbian women: A latent profile analysis. Psychology and Sexuality, 9(4), 288–304. https://doi.org/10.1080/19419899.2018.1488764

- Agley, J., & Xiao, Y. (2021). Misinformation about COVID-19: Evidence for differential latent profiles and a strong association with trust in science. BMC Public Health, 21(1), 89. https://doi.org/10.1186/s12889-020-10103-x

- Allcott, H., & Gentzkow, M. (2017). Social media and fake news in the 2016 election. Journal of Economic Perspectives, 31(2), 211–236. https://doi.org/10.1257/jep.31.2.211

- Baptista, J. P., & Gradim, A. (2022). Who believes in fake news? Identification of political (A)symmetries. Social Sciences, 11(10), 460. https://doi.org/10.3390/socsci11100460

- Barman, D., & Conlan, O. (2021). Exploring the links between Personality traits and susceptibility to disinformation. Proceedings of the 32nd ACM Conference on Hypertext and Social Media, 291–294. https://doi.org/10.1145/3465336.3475121

- Bjånesøy, L. L., & Ivarsflaten, E. (2016). What kind of challenge? right-wing populism in contemporary Western Europe. In Y. Peters & M. Tatham (Eds.), Democratic Transformations in Europe (0 ed.). Routledge. https://doi.org/10.4324/9781315657646

- Boulianne, S. (2020). Twenty years of digital media effects on civic and political participation. Communication Research, 47(7), 947–966. https://doi.org/10.1177/0093650218808186

- Broockman, D., & Kalla, J. (2022). The manifold effects of partisan media on viewers’ beliefs and attitudes: A field experiment with fox news viewers [preprint]. Open Science Framework. https://doi.org/10.31219/osf.io/jrw26

- Bruder, M., Haffke, P., Neave, N., Nouripanah, N., & Imhoff, R. (2013). Measuring individual differences in generic beliefs in conspiracy theories across cultures: Conspiracy mentality questionnaire. Frontiers in Psychology, 4, 4. https://doi.org/10.3389/fpsyg.2013.00225

- Calvillo, D. P., Garcia, R. J. B., Bertrand, K., & Mayers, T. A. (2021). Personality factors and self-reported political news consumption predict susceptibility to political fake news. Personality and Individual Differences, 174, 110666. https://doi.org/10.1016/j.paid.2021.110666

- Calvillo, D. P., Rutchick, A. M., & Garcia, R. J. B. (2021). Individual differences in belief in fake news about election fraud after the 2020 U.S. Election. Behavioral Sciences, 11(12), 175. https://doi.org/10.3390/bs11120175

- Celeux, G., & Soromenho, G. (1996). An entropy criterion for assessing the number of clusters in a mixture model. Journal of Classification, 13(2), 195–212. https://doi.org/10.1007/BF01246098

- Cichocka, A., & Jost, J. T. (2014). Stripped of illusions? Exploring system justification processes in capitalist and post-Communist societies. International Journal of Psychology, 49(1), 6–29. https://doi.org/10.1002/ijop.12011

- Clayton, K., Blair, S., Busam, J. A., Forstner, S., Glance, J., Green, G., Kawata, A., Kovvuri, A., Martin, J., Morgan, E., Sandhu, M., Sang, R., Scholz-Bright, R., Welch, A. T., Wolff, A. G., Zhou, A., & Nyhan, B. (2020). Real solutions for fake news? Measuring the effectiveness of general warnings and fact-check tags in reducing belief in false stories on social media. Political Behavior, 42(4), 1073–1095. https://doi.org/10.1007/s11109-019-09533-0

- Colomnia, C., Sánchez Margalef, H., & Youngs, R. (2021). The Impact of Disinformation on Democratic Processes and Human Rights in the World (P. 54) [Study]. Directorate-General for external policies - Policy department. https://www.europarl.europa.eu/RegData/etudes/STUD/2021/653635/EXPO_STU(2021)653635_EN.pdf

- Dangubić, M., Verkuyten, M., & Stark, T. H. (2021). Understanding (in)tolerance of Muslim minority practices: A latent profile analysis. Journal of Ethnic and Migration Studies, 47(7), 1517–1538. https://doi.org/10.1080/1369183X.2020.1808450

- Diószegi-Horváth, N. (2022). ORBÁN ÁLLÍTÓLAGOS GRAZI ÚTJAIT SEM MÁRKI-ZAY, SEM AZ INTERNETEN KERINGŐ FOTÓK NEM TUDJÁK BIZONYÍTANI. lakmusz.hu. https://www.lakmusz.hu/orban-allitolagos-grazi-utjai/

- Douglas, K. M., Uscinski, J. E., Sutton, R. M., Cichocka, A., Nefes, T., Ang, C. S., & Deravi, F. (2019). Understanding conspiracy theories. Political Psychology, 40(S1), 3–35. https://doi.org/10.1111/pops.12568

- Erlich, A., & Garner, C. (2021). Is pro-kremlin disinformation effective? Evidence from Ukraine. The International Journal of Press/politics, 28(1), 5–28. https://doi.org/10.1177/19401612211045221

- European Social Survey. (2018). European Social Survey Round 9 data. ESS ERIC. https://ess.sikt.no/en/?tab=overview

- Eurostat. (2016). Foreign Language Skills Statistics. https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Foreign_language_skills_statistics

- Eurostat. (2021). Digital Economy and Society Statistics—Households and Individuals. https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Digital_economy_and_society_statistics_-_households_and_individuals

- Faragó, L., Kende, A., & Krekó, P. (2019). We only believe in news that we doctored ourselves: The connection between partisanship and political fake news. Social Psychology, 51(2), 77–90. https://doi.org/10.1027/1864-9335/a000391

- Faragó, L., Krekó, P., & Orosz, G. (2023). Hungarian, lazy, and biased: The role of analytic thinking and partisanship in fake news discernment on a Hungarian representative sample. Scientific Reports, 13(1), 178. https://doi.org/10.1038/s41598-022-26724-8

- Farkas, J., & Schou, J. (2018). Fake news as a floating signifier: Hegemony, antagonism and the politics of falsehood. Javnost - the Public, 25(3), 298–314. https://doi.org/10.1080/13183222.2018.1463047

- Frenken, M., & Imhoff, R. (2021). A uniform conspiracy mindset or differentiated reactions to specific conspiracy beliefs? Evidence from latent Profile analyses. International Review of Social Psychology, 34(1), 27. https://doi.org/10.5334/irsp.590

- Frischlich, L., Hellmann, J. H., Brinkschulte, F., Becker, M., & Back, M. D. (2021). Right-wing authoritarianism, conspiracy mentality, and susceptibility to distorted alternative news. Social Influence, 16(1), 24–64. https://doi.org/10.1080/15534510.2021.1966499

- Gelbard, R., Goldman, O., & Spiegler, I. (2007). Investigating diversity of clustering methods: An empirical comparison. Data & Knowledge Engineering, 63(1), 155–166. https://doi.org/10.1016/j.datak.2007.01.002

- Guess, A. M., Lerner, M., Lyons, B., Montgomery, J. M., Nyhan, B., Reifler, J., & Sircar, N. (2020). A digital media literacy intervention increases discernment between mainstream and false news in the United States and India. Proceedings of the National Academy of Sciences, 117(27), 15536–15545.

- Guess, A., Nyhan, B., & Reifler, J. (2018). Selective exposure to misinformation: Evidence from the consumption of fake news during the 2016 US presidential campaign. 9; European Research Council.

- Halpern, D., Valenzuela, S., Katz, J., & Miranda, J. P. (2019). From belief in conspiracy theories to trust in others: Which factors influence exposure, believing and sharing fake news. In G. Meiselwitz (Ed.), Social computing and social media. Design, human behavior and analytics (Vol. 11578, pp. 217–232). Springer International Publishing. https://doi.org/10.1007/978-3-030-21902-4_16

- Hameleers, M. (2022). Separating truth from lies: Comparing the effects of news media literacy interventions and fact-checkers in response to political misinformation in the US and Netherlands. Information, Communication & Society, 25(1), 110–126. https://doi.org/10.1080/1369118X.2020.1764603

- Hart, W., Albarracín, D., Eagly, A. H., Brechan, I., Lindberg, M. J., & Merrill, L. (2009). Feeling validated versus being correct: A meta-analysis of selective exposure to information. Psychological Bulletin, 135(4), 555–588. https://doi.org/10.1037/a0015701

- Hooghe, L., & Marks, G. (2018). Cleavage theory meets Europe’s crises: Lipset, Rokkan, and the transnational cleavage. Journal of European Public Policy, 25(1), 109–135. https://doi.org/10.1080/13501763.2017.1310279

- Horowitz, M., Cushion, S., Dragomir, M., Gutiérrez Manjón, S., & Pantti, M. (2021). A framework for assessing the role of public service media organizations in countering disinformation. Digital Journalism, 10(5), 843–865. https://doi.org/10.1080/21670811.2021.1987948

- Howard, M. C., & Hoffman, M. E. (2018). Variable-centered, person-centered, and person-specific approaches: Where theory meets the method. Organizational Research Methods, 21(4), 846–876. https://doi.org/10.1177/1094428117744021

- Humprecht, E., Esser, F., & Van Aelst, P. (2020). Resilience to online disinformation: A framework for cross-national comparative research. The International Journal of Press/politics, 25(3), 493–516. https://doi.org/10.1177/1940161219900126

- Imhoff, R., & Bruder, M. (2014). Speaking (un-)truth to power: Conspiracy mentality as a generalised political attitude. European Journal of Personality, 28(1), 25–43. https://doi.org/10.1002/per.1930

- Imhoff, R., Lamberty, P., & Klein, O. (2018). Using power as a negative cue: How conspiracy mentality affects epistemic trust in sources of historical knowledge. Personality and Social Psychology Bulletin, 44(9), 1364–1379. https://doi.org/10.1177/0146167218768779

- Index.hu (Director). (2018). Hungarian PM Calls Country’s Leading News Website Fake. https://www.youtube.com/watch?v=lGFzBWNTKD8

- Jeong, S.-H., Cho, H., & Hwang, Y. (2012). Media literacy interventions: A meta-analytic Review. Journal of Communication, 62(3), 454–472. https://doi.org/10.1111/j.1460-2466.2012.01643.x

- Kahan, D. M. (2012). Ideology, motivated reasoning, and cognitive reflection: An experimental study. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.2182588

- Kent, P., Jensen, R. K., & Kongsted, A. (2014). A comparison of three clustering methods for finding subgroups in MRI, SMS or clinical data: SPSS TwoStep cluster analysis, latent gold and SNOB. BMC Medical Research Methodology, 14(1), 113. https://doi.org/10.1186/1471-2288-14-113

- Kim, S., & Kim, S. (2020). The Crisis of public health and infodemic: Analyzing belief structure of fake news about COVID-19 pandemic. Sustainability, 12(23), 9904. https://doi.org/10.3390/su12239904

- KSH. (2016). A magyar népesség iskolázottsága. https://www.ksh.hu/interaktiv/storytelling/iskolazottsag/index.html

- Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108(3), 480–498. https://doi.org/10.1037/0033-2909.108.3.480

- Lewandowsky, S., & van der Linden, S. (2021). Countering misinformation and fake news through inoculation and prebunking. European Review of Social Psychology, 32(2), 348–384. https://doi.org/10.1080/10463283.2021.1876983

- Linden, S., Panagopoulos, C., Azevedo, F., & Jost, J. T. (2021). The paranoid style in American politics revisited: An ideological asymmetry in conspiratorial thinking. Political Psychology, 42(1), 23–51. https://doi.org/10.1111/pops.12681

- Loos, E., & Nijenhuis, J. (2020). Consuming fake news: A matter of age? The perception of political fake news stories in Facebook Ads. In Q. Gao & J. Zhou (Eds.), Human aspects of IT for the aged population. Technology and society (Vol. 12209, pp. 69–88). Springer International Publishing. https://doi.org/10.1007/978-3-030-50232-4_6

- Mahmud, M. R., Bin Reza, R., & Ahmed, S. M. Z. (2021). The effects of misinformation on COVID-19 vaccine hesitancy in Bangladesh. Global Knowledge, Memory & Communication, 72(1/2), 82–97. https://doi.org/10.1108/GKMC-05-2021-0080

- Meca, A., Ritchie, R. A., Beyers, W., Schwartz, S. J., Picariello, S., Zamboanga, B. L., Hardy, S. A., Luyckx, K., Kim, S. Y., Whitbourne, S. K., Crocetti, E., Brown, E. J., & Benitez, C. G. (2015). Identity centrality and psychosocial functioning: A person-centered approach. Emerging Adulthood, 3(5), 327–339. https://doi.org/10.1177/2167696815593183

- Meeusen, C., Meuleman, B., Abts, K., & Bergh, R. (2018). Comparing a variable-centered and a person-centered approach to the structure of prejudice. Social Psychological and Personality Science, 9(6), 645–655. https://doi.org/10.1177/1948550617720273

- Mejias, U. A., & Vokuev, N. E. (2017). Disinformation and the media: The case of Russia and Ukraine. Media, Culture & Society, 39(7), 1027–1042. https://doi.org/10.1177/0163443716686672

- Melki, J., Tamim, H., Hadid, D., Makki, M., El Amine, J., Hitti, E., & Gesser-Edelsburg, A. (2021). Mitigating infodemics: The relationship between news exposure and trust and belief in COVID-19 fake news and social media spreading. PLOS ONE, 16(6), e0252830. https://doi.org/10.1371/journal.pone.0252830

- Orosz, G., Krekó, P., Paskuj, B., Tóth-Király, I., Bőthe, B., & Roland-Lévy, C. (2016). Changing conspiracy beliefs through rationality and ridiculing. Frontiers in Psychology, 7, 1525. https://doi.org/10.3389/fpsyg.2016.01525

- Osmundsen, M., Bor, A., Vahlstrup, P. B., Bechmann, A., & Petersen, M. B. (2021). Partisan polarization is the primary psychological motivation behind political fake news sharing on Twitter. American Political Science Review, 115(3), 999–1015. https://doi.org/10.1017/S0003055421000290

- Oswald, M. E., & Grosjean, S. (2004). Confirmation bias. In R. F. Pohl (Ed.), A handbook on fallacies and biases in thinking, judgement and memory (1st ed., pp. 79–96). Psychology Press.

- Palonen, E. (2018). Performing the nation: The Janus-faced populist foundations of illiberalism in Hungary. Journal of Contemporary European Studies, 26(3), 308–321. https://doi.org/10.1080/14782804.2018.1498776

- Pantazi, M., Hale, S., & Klein, O. (2021). Social and cognitive aspects of the vulnerability to political misinformation. Political Psychology, 42(S1), 267–304. https://doi.org/10.1111/pops.12797

- Pasek, J., Stark, T. H., Krosnick, J. A., & Tompson, T. (2015). What motivates a conspiracy theory? Birther beliefs, partisanship, liberal-conservative ideology, and anti-black attitudes. Electoral Studies, 40, 482–489. https://doi.org/10.1016/j.electstud.2014.09.009

- Pennycook, G., & Rand, D. G. (2017). Who falls for fake news? The roles of analytic thinking, motivated reasoning, political ideology, and bullshit receptivity. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3023545

- Pennycook, G., & Rand, D. G. (2018). Lazy, not biased: Susceptibility to partisan fake news is better explained by lack of reasoning than by motivated reasoning. Cognition, 188, 39–50. https://doi.org/10.1016/j.cognition.2018.06.011

- Pennycook, G., & Rand, D. G. (2021). The psychology of fake news. Trends in Cognitive Sciences, 25(5), 388–402. https://doi.org/10.1016/j.tics.2021.02.007

- Plenta, P. (2020). Conspiracy theories as a political instrument: Utilization of anti-Soros narratives in Central Europe. Contemporary Politics, 26(5), 512–530. https://doi.org/10.1080/13569775.2020.1781332

- Polyák, G. (2019). Media in Hungary: Three pillars of an illiberal democracy. In E. Połońska & C. Beckett (Eds.), Public service broadcasting and media systems in troubled European democracies (pp. 279–303). Springer International Publishing. https://doi.org/10.1007/978-3-030-02710-0_13

- Renvik, T. A., Manner, J., Vetik, R., Sam, D. L., & Jasinskaja-Lahti, I. (2020). Citizenship and socio-political integration: A person-oriented analysis among Russian-speaking minorities in Estonia, Finland and Norway. Journal of Social and Political Psychology, 8(1), 53–77. https://doi.org/10.5964/jspp.v8i1.1140

- Scherer, L. D., McPhetres, J., Pennycook, G., Kempe, A., Allen, L. A., Knoepke, C. E., Tate, C. E., & Matlock, D. D. (2021). Who is susceptible to online health misinformation? A test of four psychosocial hypotheses. Health Psychology, 40(4), 274–284. https://doi.org/10.1037/hea0000978

- Schwartz, S. J., Luyckx, K., & Vignoles, V. L. (Eds.). (2011). Handbook of identity theory and research. Springer New York. https://doi.org/10.1007/978-1-4419-7988-9

- Sindermann, C., Cooper, A., & Montag, C. (2020). A short review on susceptibility to falling for fake political news. Current Opinion in Psychology, 36, 44–48. https://doi.org/10.1016/j.copsyc.2020.03.014

- Singer, J. B. (2014). User-generated visibility: Secondary gatekeeping in a shared media space. New Media & Society, 16(1), 55–73. https://doi.org/10.1177/1461444813477833

- Special Eurobarometer 516. (2021). European citizens’ knowledge and attitudes towards science and technology. https://europa.eu/eurobarometer/surveys/detail/2237

- Stempel, C., Hargrove, T., & Stempel, G. H. (2007). Media use, social structure, and belief in 9/11 conspiracy theories. Journalism & Mass Communication Quarterly, 84(2), 353–372. https://doi.org/10.1177/107769900708400210

- Swire, B., Berinsky, A. J., Lewandowsky, S., & Ecker, U. K. H. (2017). Processing political misinformation: Comprehending the Trump phenomenon. Royal Society Open Science, 4(3), 160802. https://doi.org/10.1098/rsos.160802

- Szebeni, Z., Lönnqvist, J.-E., & Jasinskaja-Lahti, I. (2021). Social psychological predictors of belief in fake news in the run-up to the 2019 Hungarian elections: The importance of conspiracy mentality supports the notion of ideological symmetry in fake news belief. Frontiers in Psychology, 12, 790848. https://doi.org/10.3389/fpsyg.2021.790848

- Szebeni, Z., & Salojärvi, V. (2022). “Authentically” maintaining populism in Hungary – visual analysis of Prime Minister Viktor Orbán’s Instagram. Mass Communication & Society, 25(6), 812–837. https://doi.org/10.1080/15205436.2022.2111265

- Szicherle, P., & Krekó, P. (2021, June 7). Disinformation in Hungary: From Fabricated News to Discriminatory Legislation. https://eu.boell.org/en/2021/06/07/disinformation-hungary-fabricated-news-discriminatory-legislation

- Szurovecz, I. (2022). Az EBESZ-hez fordul az ellenzék a közmédia álhírei miatt [The opposition turns to the OSCE over the public media’s fake news]. 444.hu. https://444.hu/2022/02/28/az-ebesz-hez-fordul-az-ellenzek-a-kozmedia-alhirei-miatt

- Tucker, J., Guess, A., Barbera, P., Vaccari, C., Siegel, A., Sanovich, S., Stukal, D., & Nyhan, B. (2018). Social media, political polarization, and political disinformation: A review of the scientific literature. SSRN Electronic Journal. https://doi.org/10.2139/ssrn.3144139

- Wardle, C. (2017). Information disorder (DGI(2017)09). Council of Europe. https://rm.coe.int/information-disorder-toward-an-interdisciplinary-framework-for-researc/168076277c

- Weller, B. E., Bowen, N. K., & Faubert, S. J. (2020). Latent class analysis: A guide to best practice. Journal of Black Psychology, 46(4), 287–311. https://doi.org/10.1177/0095798420930932

- Xiao, X., Borah, P., & Su, Y. (2021). The dangers of blind trust: Examining the interplay among social media news use, misinformation identification, and news trust on conspiracy beliefs. Public Understanding of Science, 30(8), 977–992. https://doi.org/10.1177/0963662521998025

- Zonis, M., & Joseph, C. M. (1994). Conspiracy thinking in the Middle East. Political Psychology, 15(3), 443. https://doi.org/10.2307/3791566