Abstract

Purpose

This study aimed to improve the accuracy of brain-computer interface (BCI) systems based on motor imagery (MI) and mental arithmetic (MA) by utilizing functional near-infrared spectroscopy (fNIRS) and an improved dilation CapsuleNet (ID-CapsuleNet) model.

Methods

The study focused on the characteristics of fNIRS and employed large-kernel dilation convolution to extract hemodynamic features from fNIRS data. Inspired by CapsuleNet’s success in image classification, an ID-CapsuleNet model was designed, combining large-kernel dilation convolution and CapsuleNet. Four publicly available datasets (A, B, C, and D) were utilized for evaluating the proposed model. Datasets A and B were MA type, while datasets C and D were MI type. Ablation experiments were conducted to assess the usefulness of large-kernel convolution, dynamic routing, and dilation convolution.

Results

The average accuracies for each dataset were 95.01%, 76.88%, 74.03%, and 80.29% respectively. Cross-subject average accuracies were 88.72%, 75.80%, 75.78%, and 80.34%. Ablation experiments confirmed the importance of large-kernel convolution, dynamic routing, and dilation convolution in the ID-CapsuleNet model.

Conclusion

The developed ID-CapsuleNet model demonstrated promising potential for enhancing the performance of BCI systems based on MI and MA. The findings contribute to the advancement of BCI technology, offering improved assistive tools for disabled individuals.

1. Introduction

Brain-computer interface (BCI) technology is a cutting-edge field that enables direct communication with the brain for controlling external devices or performing various tasks [Citation1,Citation2]. Functional near-infrared spectroscopy (fNIRS) is a non-invasive brain imaging technique that uses near-infrared light to measure changes in the concentrations of oxygenated hemoglobin (HbO) and deoxygenated hemoglobin (HbR) in brain tissue, thereby detecting alterations in cerebral blood flow [Citation3,Citation4]. This allows brain activity to be studied with real-time information about brain function. Compared with other brain imaging techniques such as electroencephalography (EEG) and functional magnetic resonance imaging (fMRI), fNIRS has several advantages. It is non-invasive, resistant to interference, and portable [Citation5–9]. It is suitable for individuals of different ages, including infants and the elderly, because it does not require significant cooperation or immobility. In contrast, EEG may need additional attention and preparation for infants and young children [Citation10], while fMRI may require sedation for children and young participants and may not be suitable for certain populations, such as pregnant women or individuals with metal implants [Citation11,Citation12]. Moreover, although fNIRS has lower spatial resolution than fMRI, fNIRS devices are more convenient and better suited to mobile scenarios [Citation13]. Compared with EEG, fNIRS offers higher spatial resolution and is less susceptible to electrical noise and motion artifacts [Citation14,Citation15].

Motor Imagery (MI) is a brain activity in which neural signals related to actual movements are generated when individuals imagine performing a specific action [Citation16,Citation17]. This mental activity is accompanied by physiological changes in brain blood oxygenation levels [Citation18]. Using fNIRS technology, it is possible to capture changes in blood flow in brain regions triggered by MI, allowing for control of external devices or task execution through mental imagery [Citation19–21]. Mental Arithmetic (MA) is a cognitive activity unrelated to physical movements. BCI systems that combine fNIRS technology can identify different MA activities and use them to control external devices [Citation22]. The fNIRS-based BCI has potential applications in various fields, including rehabilitation medicine, human-computer interaction, and neuroscience research [Citation23]. In the field of rehabilitation medicine, fNIRS-based BCI for MI/MA can assist stroke, spinal cord injury, and other motor impairment patients in regaining their motor abilities [Citation24,Citation25]. Additionally, researchers can use fNIRS and BCI to explore cognitive processes and understand brain functions related to movement, decision-making, and concentration [Citation26]. In the field of BCI based on fNIRS for MA, there have been advancements in both personalized adaptation and real-time feedback. Personalized BCI systems are under development, optimizing according to each user’s brain characteristics and the nature of the MA task. Real-time and effective feedback mechanisms are aiding users in better control and execution of MA tasks [Citation14, Citation27].

fNIRS classification primarily involves two families of approaches: traditional machine learning and deep learning. In the traditional approach, statistical features such as the mean, peak, and slope extracted from fNIRS signals are used to train Support Vector Machines (SVM), Linear Discriminant Analysis (LDA), and Hidden Markov Models (HMM) [Citation15, Citation22, Citation28–32]. Additionally, vector-based features such as the change in cerebral blood volume (ΔCBV) and the vector magnitude can also be used for training [Citation33]. However, traditional machine learning relies heavily on manually crafted features and prior knowledge. In recent years, deep learning has become the mainstream approach in fNIRS classification research. Deep learning models can learn and extract diverse features directly from raw fNIRS signals, providing an end-to-end approach [Citation34,Citation35]. Specifically, Convolutional Neural Networks (CNNs) and Long Short-Term Memory networks (LSTMs) have been applied in multiple fNIRS-based BCI applications [Citation31, Citation35]. Because multi-channel near-infrared signals can be organized into multiple matrices, they have image-like characteristics and can be processed as image data [Citation14]. This approach, which uses convolutional layers for spatial and channel-level feature extraction followed by classification with a Transformer, has demonstrated high performance [Citation14]. fNIRSNet, an efficient and concise knowledge-driven model, incorporates delayed hemodynamic responses as domain knowledge into the model design [Citation36]. This streamlined model has achieved performance nearly comparable to that of larger models.

When individuals perform MI/MA tasks, different brain regions exhibit varying levels of activity. The associated neural activation changes brain oxygenation, which gives rise to the cerebral hemodynamic response. Therefore, extracting features related to the hemodynamic response of fNIRS is crucial for fNIRS-based BCI for MI/MA [Citation36]. Due to the inherent delay in the hemodynamic response of the brain, long-range dependencies are observed in the temporal dimension [Citation37,Citation38]. Convolution is typically used to extract image features, and large-kernel convolutions can capture these long-range dependencies, but they introduce parameter redundancy [Citation39]. Dilation convolutions can address this issue by reducing the number of parameters. CapsuleNet [Citation40], a type of deep learning architecture, offers some interpretability and exhibits a degree of resistance to transformations compared with traditional convolutional neural networks (CNNs). Because this approach has not yet been applied to fNIRS-based BCI, we propose a network architecture that combines CapsuleNet structures with large-kernel dilation convolutions for classification tasks using fNIRS signals.

2. Datasets and participants

In this study, four datasets are adopted to validate the proposed method. Details of the datasets are described below.

Dataset A was designed to investigate how the levels of HbR and HbO in the prefrontal cortex change when individuals perform MA [Citation41–43]. Dataset B is a publicly accessible dataset that primarily concentrates on analyzing visual instructions related to MA tasks [Citation44]. Dataset C is an open-access three-class classification dataset [Citation45]. This dataset comprises three types of movements: right-hand finger-tapping (RHT), left-hand finger-tapping (LHT), and foot-tapping (FT). Dataset D is an open-access binary classification dataset that includes left and right hand grip data [Citation46].

Table 1 lists the number of subjects, experimental paradigms, and task specifications for each dataset.

Table 1. Details of the four datasets.

3. Method

3.1. Signal preprocessing

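According to the Beer-Lambert law [Citation47], the concentration changes of cerebral hemoglobin ($\Delta HbR$ and $\Delta HbO$) can be inferred by measuring the change in optical density ($\Delta OD$) of light during a specific time interval ($\Delta t$), along with known values for the absorption coefficient ($\varepsilon$) and the path length ($d$):

$$
\begin{bmatrix} \Delta HbR \\ \Delta HbO \end{bmatrix}
= \frac{1}{d}
\begin{bmatrix} \varepsilon_{HbR}^{\lambda_1} & \varepsilon_{HbO}^{\lambda_1} \\ \varepsilon_{HbR}^{\lambda_2} & \varepsilon_{HbO}^{\lambda_2} \end{bmatrix}^{-1}
\begin{bmatrix} \Delta OD^{\lambda_1}(\Delta t) / DPF^{\lambda_1} \\ \Delta OD^{\lambda_2}(\Delta t) / DPF^{\lambda_2} \end{bmatrix}
\tag{1}
$$

In this context, $\lambda_1$ and $\lambda_2$ represent different illumination wavelengths, and $DPF^{\lambda}$ refers to the differential path length factor for wavelength $\lambda$.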
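As an illustration of how the Beer-Lambert relation is inverted in practice, the following minimal sketch solves the two-wavelength system for the two chromophores. The function name `mbll` and all numeric values are hypothetical and chosen only for the round-trip check; real extinction coefficients, DPF values, and optode distances depend on the device and wavelengths.

```python
def mbll(delta_od, eps, dpf, d):
    """Solve the 2x2 Beer-Lambert system for (delta_HbR, delta_HbO).

    delta_od: (dOD at lambda1, dOD at lambda2)
    eps:      ((eps_HbR_l1, eps_HbO_l1), (eps_HbR_l2, eps_HbO_l2))
    dpf:      (DPF at lambda1, DPF at lambda2)
    d:        source-detector path length
    """
    (a, b), (c, e) = eps
    det = a * e - b * c
    if det == 0:
        raise ValueError("extinction matrix is singular")
    # Normalise each optical density change by the effective path length.
    y1 = delta_od[0] / (d * dpf[0])
    y2 = delta_od[1] / (d * dpf[1])
    # Apply the closed-form inverse of the 2x2 extinction matrix.
    d_hbr = (e * y1 - b * y2) / det
    d_hbo = (-c * y1 + a * y2) / det
    return d_hbr, d_hbo


# Round trip with made-up (hypothetical) coefficients.
eps = ((1.5, 0.6), (0.8, 1.2))
dpf = (6.0, 5.0)
d = 3.0
true_hbr, true_hbo = -0.2, 0.5
od = (
    (eps[0][0] * true_hbr + eps[0][1] * true_hbo) * d * dpf[0],
    (eps[1][0] * true_hbr + eps[1][1] * true_hbo) * d * dpf[1],
)
est_hbr, est_hbo = mbll(od, eps, dpf, d)
```

The round trip recovers the concentration changes that generated the simulated optical densities, which is a quick sanity check on the sign and index conventions.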

To remove noise and motion artifacts, we applied filtering techniques to each of the four datasets based on previous research. Dataset A was filtered using a fourth-order low-pass Butterworth filter with a cutoff frequency of 0.009 Hz [Citation41]. Dataset B utilized a bandpass filter with a frequency range of 0.01–0.1 Hz [Citation42], while Dataset C employed a third-order Butterworth filter with a frequency range of 0.01–0.1 Hz [Citation45]. Dataset D employed a seventh-order elliptical low-pass filter with a cutoff frequency of 0.25 Hz [Citation46].
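The band-pass step described for Dataset C could be sketched as follows, assuming SciPy is available. The sampling rate of 10 Hz, the synthetic test signal, and the use of zero-phase filtering (`sosfiltfilt`) are assumptions made for this example; the source states only the filter order and pass band.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(x, fs, low=0.01, high=0.1, order=3):
    """Zero-phase Butterworth band-pass along the last (time) axis."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)


fs = 10.0                                   # hypothetical sampling rate in Hz
t = np.arange(0, 600, 1 / fs)               # 10 minutes of samples
x = 5.0 + np.sin(2 * np.pi * 0.05 * t) + 0.5 * np.sin(2 * np.pi * 2.0 * t)
y = bandpass(x, fs)                         # keeps the 0.05 Hz component
```

Second-order sections are used here because very low normalized cutoffs can make the transfer-function form numerically fragile.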

3.2. Feature extraction

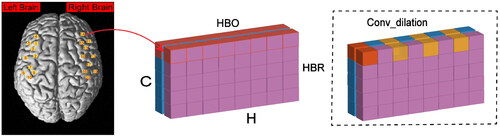

Selecting features relevant to the task paradigm is crucial for effective classification in fNIRS. fNIRS data consists of information from both HbO and HbR, represented as a C × H × W matrix, where C represents the two chromophores (HbO and HbR), H is the number of channels, and W is the number of sampling points. The fNIRS signals are normalized by z-score standardization so that fNIRS features are preserved effectively in this arrangement [Citation14]. Taking into account the task paradigm and the characteristics of the brain’s hemodynamic response, we use large-kernel dilation convolution (Conv_dilation) to focus on the hemodynamic response features of brain regions, aiming to extract the long-range dependency features of fNIRS (Figure 1). The CapsuleNet we have introduced, incorporating Conv_dilation, is referred to as improved dilation CapsuleNet (ID-CapsuleNet).
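To make the idea of a dilation convolution along the time axis concrete, here is a minimal pure-Python sketch of a one-dimensional dilated convolution without padding. The function name, kernel, and dilation rate are illustrative assumptions and are not the settings used in ID-CapsuleNet.

```python
def dilated_conv1d(x, w, dilation):
    """Valid (no padding) 1-D dilated cross-correlation of x with kernel w."""
    # A kernel with len(w) taps spaced `dilation` apart covers this many samples.
    span = dilation * (len(w) - 1) + 1
    out = []
    for t in range(len(x) - span + 1):
        out.append(sum(w[k] * x[t + k * dilation] for k in range(len(w))))
    return out


signal = list(range(10))                      # toy time series 0..9
result = dilated_conv1d(signal, [1, 1, 1], dilation=3)
```

With three taps and a dilation of 3, each output sample looks at inputs spread over seven time steps while only three weights are learned.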

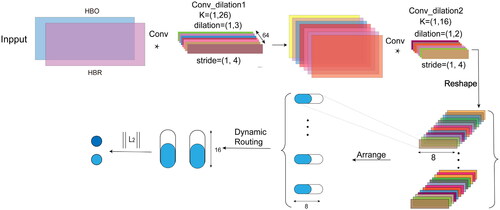

3.3. Structure of ID-CapsuleNet

In the ID-CapsuleNet, we start by using two Conv_dilation layers to extract long-range dependencies from fNIRS data; the dilation parameters are given in Table 2. Following the first dilation convolution and ReLU activation, we apply the second dilation convolution to complete feature extraction. Next, we partition the extracted features into p segments, each of size 1 × 8. The number of segments, p, for each of the four datasets is specified in Table 2. Subsequently, we transform these feature segments into primary capsule vectors, each of length 8. The number of primary capsule vectors matches the number of feature segments. These primary capsule vectors are then fed into dynamic routing [Citation48], which produces q 16-dimensional output capsules, where q is the number of classes. The size of each of the q output capsules is obtained by calculating its L2 norm and then applying softmax activation. The sizes of these q output capsules correspond to the probabilities of belonging to the respective classes. The class with the highest probability among these q output capsules is selected as the class of the input data (Figure 2).
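The partition of extracted features into primary capsules can be sketched as below. This is a simplified illustration that assumes the features have already been flattened into a one-dimensional sequence; the helper name `to_primary_capsules` and the toy input are hypothetical.

```python
def to_primary_capsules(features, dim=8):
    """Split a flat feature sequence into p capsule vectors of length `dim`."""
    flat = list(features)
    if len(flat) % dim != 0:
        raise ValueError("feature length must be a multiple of the capsule dimension")
    return [flat[i:i + dim] for i in range(0, len(flat), dim)]


features = [0.1 * i for i in range(24)]       # toy flattened feature map
capsules = to_primary_capsules(features)      # p = 24 / 8 = 3 primary capsules
```

The number of capsules p is therefore fixed by the size of the feature map, which is why it differs between datasets with different channel counts and window lengths.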

Figure 2. The ID-CapsuleNet includes two Conv_dilations for feature extraction. After dynamic routing, the size of the output vectors is computed.

Table 2. Parameter settings of the model.

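Dynamic routing is an iterative learning algorithm in CapsuleNet used to learn the routing logits between PrimaryCaps and DigitCaps in order to obtain the representation of DigitCaps. In our capsule network, there are PrimaryCaps $u \in \mathbb{R}^{m \times d_1}$ and DigitCaps $v \in \mathbb{R}^{n \times d_2}$, where $m$ and $n$ represent the number of capsules, and $d_1$ and $d_2$ represent the dimensions of the capsules. The routing logit $b_{ij}$ can be expressed as:

$$
b_{ij} \leftarrow b_{ij} + (W_{ij} u_i) \cdot v_j
\tag{2}
$$

where $W_{ij}$ is the transformation matrix used to calculate the candidate vector $s_j$ for high-level capsule $j$ as a weighted sum of all the PrimaryCaps, based on the routing logit:

$$
s_j = \sum_{i=1}^{m} c_{ij} W_{ij} u_i
\tag{3}
$$

The weights $c_{ij}$ are calculated by applying softmax to $b_{ij}$:

$$
c_{ij} = \frac{\exp(b_{ij})}{\sum_{k=1}^{n} \exp(b_{ik})}
\tag{4}
$$

The vector for the high-level capsule is obtained through a non-linear compression of $s_j$:

$$
v_j = \frac{\lVert s_j \rVert^2}{1 + \lVert s_j \rVert^2} \, \frac{s_j}{\lVert s_j \rVert}
\tag{5}
$$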

The dynamic routing process is typically iterated three times to achieve convergence [Citation48,Citation49]; hence, we obtain the output vector after three rounds of dynamic routing.
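A compact NumPy sketch of routing-by-agreement with three iterations is given below. It follows the standard formulation of dynamic routing from the original CapsuleNet work rather than the authors' implementation; the array shapes, random inputs, and function names are assumptions made for illustration.

```python
import numpy as np


def squash(s, eps=1e-9):
    """Non-linear compression: keeps direction, maps length into [0, 1)."""
    sq = np.sum(s * s, axis=-1, keepdims=True)
    return (sq / (1.0 + sq)) * s / np.sqrt(sq + eps)


def dynamic_routing(u, W, n_iter=3):
    """u: (m, d1) primary capsules; W: (m, n, d2, d1). Returns v: (n, d2)."""
    u_hat = np.einsum("ijkl,il->ijk", W, u)           # predictions, (m, n, d2)
    b = np.zeros(u_hat.shape[:2])                     # routing logits, (m, n)
    for it in range(n_iter):
        e = np.exp(b - b.max(axis=1, keepdims=True))
        c = e / e.sum(axis=1, keepdims=True)          # softmax over output capsules
        s = np.einsum("ij,ijk->jk", c, u_hat)         # weighted sum, (n, d2)
        v = squash(s)
        if it < n_iter - 1:
            b = b + np.einsum("ijk,jk->ij", u_hat, v)  # agreement update
    return v


rng = np.random.default_rng(0)
u = rng.normal(size=(6, 8))            # 6 primary capsules of dimension 8
W = rng.normal(size=(6, 2, 16, 8))     # maps to 2 output capsules of dimension 16
v = dynamic_routing(u, W)
```

Because the squash function bounds each output length below 1, the lengths can be compared directly across classes.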

The size of each capsule vector is calculated by computing its L2 norm, where $n$ here denotes the number of elements in the vector, that is, the dimension of the output vectors, which is 16. The L2 norm is as follows:

$$
\lVert v \rVert_2 = \sqrt{\sum_{i=1}^{n} v_i^2}
\tag{6}
$$
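The step from output capsules to a predicted class can be sketched in a few lines: compute the L2 norm of each capsule, apply softmax to the norms, and take the largest probability. The helper names and the two toy 16-dimensional capsules are hypothetical.

```python
import math


def capsule_lengths(capsules):
    """L2 norm of each output capsule vector."""
    return [math.sqrt(sum(x * x for x in v)) for v in capsules]


def softmax(xs):
    m = max(xs)                                  # subtract max for stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def predict(capsules):
    """Return (predicted class index, class probabilities)."""
    probs = softmax(capsule_lengths(capsules))
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs


toy = [[0.3, 0.4] + [0.0] * 14, [0.6, 0.8] + [0.0] * 14]   # lengths 0.5 and 1.0
label, probs = predict(toy)
```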

3.4. Training strategy

Label smoothing is a technique used to improve the classification performance and generalization of deep learning models [Citation50,Citation51]. When training deep learning models on fNIRS data, overfitting is often a concern. Therefore, we modify the loss function using label smoothing. For $K$ labels, the probability of each label $k$ is first calculated as

$$
p_k = \frac{\exp(z_k)}{\sum_{i=1}^{K} \exp(z_i)}
\tag{7}
$$

where $z_i$ are the unnormalized log-probabilities. The cross-entropy loss function is:

$$
L = -\sum_{k=1}^{K} y_k \log p_k
\tag{8}
$$

where the label $y_k$ equals 1 for the correct class and 0 otherwise. The smoothing parameter $\alpha$ is typically set to 0.1, and $y_k$ is smoothed to

$$
y_k^{LS} = y_k (1 - \alpha) + \frac{\alpha}{K}
\tag{9}
$$

The loss with label smoothing is written as:

$$
L^{LS} = -\sum_{k=1}^{K} y_k^{LS} \log p_k
\tag{10}
$$
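A minimal sketch of cross-entropy with label smoothing, written in plain Python so that each term is visible, is shown below. The function names and the example logits are assumptions; in practice a deep learning framework's built-in loss would normally be used.

```python
import math


def softmax(z):
    m = max(z)                                   # subtract max for stability
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def smooth_labels(target, num_classes, alpha=0.1):
    """One-hot target mixed with a uniform distribution over the classes."""
    return [
        (1.0 - alpha) * (1.0 if k == target else 0.0) + alpha / num_classes
        for k in range(num_classes)
    ]


def label_smoothing_ce(z, target, alpha=0.1):
    """Cross-entropy between smoothed labels and softmax probabilities."""
    p = softmax(z)
    y = smooth_labels(target, len(z), alpha)
    return -sum(yk * math.log(pk) for yk, pk in zip(y, p))


logits = [2.0, 0.5, -1.0]                        # toy unnormalized scores
loss_smooth = label_smoothing_ce(logits, target=0, alpha=0.1)
loss_plain = label_smoothing_ce(logits, target=0, alpha=0.0)
```

Setting `alpha` to zero recovers the ordinary cross-entropy, so the smoothed loss can be seen as a small penalty on overconfident predictions.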

3.5. Classifiers for comparison

In our comparative experiments, we employed both a traditional machine learning classifier and deep neural networks (DNNs). The traditional classifier is an Artificial Neural Network (ANN) that comprises 128 hidden layer units and is trained for a maximum of 1000 iterations [Citation14]. We compared the proposed model not only with two existing DNN models, CNN and LSTM, but also with the original CapsuleNet and the efficient and concise knowledge-driven model, fNIRSNet [Citation36]. The CNN model consists of three 1-dimensional convolutional layers, two fully connected layers, and a softmax layer. Each convolutional layer includes 32 filters with a kernel size of 3 [Citation52]. The LSTM model consists of 4 LSTM layers and 2 fully connected layers, with each LSTM layer containing 64 hidden units and the fully connected layers having 512 and 128 hidden nodes, respectively [Citation35]. CapsuleNet comprises three convolutional layers and dynamic routing, with each convolutional layer having a kernel size of 9. The primary capsules are 8-dimensional, and the output capsule vectors have a dimension of 16 [Citation40]. fNIRSNet is a model that uses delayed hemodynamic responses as domain knowledge and consists of only three convolutional layers and one fully connected (FC) layer [Citation36].

3.6. Training parameters

We set the batch size to 32, trained for 120 epochs, and maintained a learning rate of 0.0001. Because the four datasets (two MA paradigms and two MI paradigms) differ in data size, we applied the AdamW optimizer [Citation53] with a weight decay of 0.01. We utilized label smoothing to accelerate training and improve performance.

To assess the classification accuracy on the test set, we conducted 3 runs × 5-fold cross-validation. To evaluate the model’s generalization capability on individual subjects, we implemented a leave-one-subject-out cross-validation strategy (LOSO-CV) [Citation54]. This method uses one subject’s data as the evaluation set and the data from all other subjects as the training set. We selected multiple metrics to evaluate the classification outcomes, including accuracy, recall, F1 score (macro F1 score for Dataset C), and the Kappa coefficient.
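The leave-one-subject-out scheme can be sketched as a small generator over trial indices. The function name and the toy subject identifiers are hypothetical; any real pipeline would attach the corresponding trials and labels to these indices.

```python
def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for LOSO-CV."""
    for held_out in sorted(set(subject_ids)):
        train = [i for i, s in enumerate(subject_ids) if s != held_out]
        test = [i for i, s in enumerate(subject_ids) if s == held_out]
        yield held_out, train, test


subject_ids = [1, 1, 2, 2, 3]          # subject label of each trial (toy data)
splits = list(loso_splits(subject_ids))
```

Each subject appears in the evaluation set exactly once, so no trial from the evaluated subject is ever seen during training for that fold.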

4. Results

4.1. Classification results

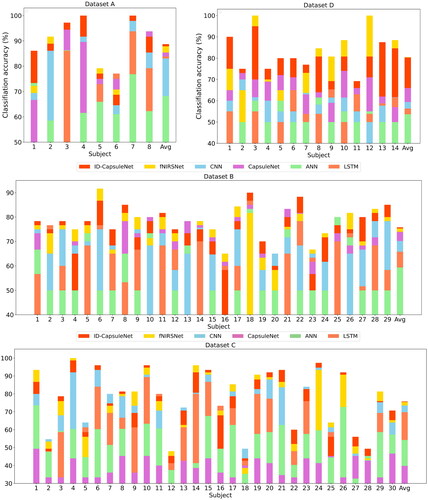

To assess the classification accuracy of our model on MI and MA tasks based on fNIRS data, we conducted three rounds of 5-fold cross-validation on the MI and MA datasets (Table 3). ID-CapsuleNet shows the best classification performance, surpassing CNN, LSTM, and CapsuleNet by a significant margin. CNN and LSTM exhibit significantly better classification performance than the traditional classifier ANN. Our model achieved the highest average classification accuracy across the three datasets, outperforming the latest knowledge-driven model, fNIRSNet, with a noticeable advantage. These results indicate that our model possesses robust classification capabilities for fNIRS data based on MI and MA tasks, showing significant improvements over the original CapsuleNet and highlighting the effectiveness of our enhanced model.

Table 3. Average accuracy of the test sets.

To further evaluate the model’s individual-level generalization ability, we employed a LOSO-CV strategy. Figure 3 displays the classification accuracy for each individual. Our model achieves the highest accuracy for the majority of individuals across the four datasets. Table 4 lists the detailed results. The F1 score and Kappa coefficient show that our model exhibits the best generalization ability at the individual level.

Table 4. Average classification results of all individual subjects.

4.2. Dilation convolution

To validate the effectiveness of dilation convolutions, we conducted classification experiments in ID-CapsuleNet using both dilation convolutions and normal convolutions while ensuring the same receptive field. The experimental results indicate that, under the same receptive field, dilation convolutions significantly outperform normal convolutions in terms of classification accuracy (Table 5), and dilation convolutions have approximately half the parameters of normal convolutions (Table 6). Additionally, dilation convolutions can reduce parameters and improve processing speed [Citation39, Citation55].
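The parameter saving at equal receptive field can be checked with simple arithmetic. The sketch below counts the weights of a dilated kernel and of the dense kernel that covers the same span; the channel counts, kernel size, and dilation rate are hypothetical values picked for illustration and are not the settings reported in Table 6.

```python
def conv_weights(c_in, c_out, kernel_h, kernel_w, bias=True):
    """Number of learnable parameters in a 2-D convolution layer."""
    return c_in * c_out * kernel_h * kernel_w + (c_out if bias else 0)


def dense_kernel_for(k, dilation):
    """Size of the normal kernel with the same receptive field as a dilated one."""
    return dilation * (k - 1) + 1


k, dilation = 7, 2                                     # hypothetical settings
dense_k = dense_kernel_for(k, dilation)                # 13 samples along time
dilated_params = conv_weights(2, 8, 1, k)              # 2 input, 8 output channels
normal_params = conv_weights(2, 8, 1, dense_k)
ratio = dilated_params / normal_params
```

For a dilation rate of 2 the dilated kernel needs a little over half the weights of its dense counterpart, which is consistent in spirit with the roughly twofold reduction described above.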

Table 5. Average classification results of different convolution.

Table 6. The parameters of different convolution.

The fNIRS measurements of brain hemodynamic responses based on MI and MA exhibit time-delay characteristics, which indicates long-range dependencies in the data [Citation37,Citation38]. Because this dependence spans a long time interval, it may lead to data redundancy. Dilation convolutions can capture the long-range dependencies while reducing feature redundancy, ultimately enhancing the network’s classification performance.

5. Discussion

In this study, we propose a new method that utilizes fNIRS signals for MI and MA classification, combining dilation convolution and CapsuleNet. Our results mainly focus on comparing the classification accuracy of our proposed method with other prevalent techniques. Furthermore, we compared the parameter differences and classification accuracy between using dilation convolution and regular convolution in our model.

In the following discussion, we will delve into the structural significance of ID-CapsuleNet and the possibilities for achieving a fast and robust BCI system in more detail.

5.1. Margin loss and reconstruction loss for ID-CapsuleNet

Margin loss encourages correct dynamic routing between DigitCaps, with its main goal being to ensure that the correct DigitCaps receive higher probabilities while incorrect ones receive lower probabilities [Citation48]. On the other hand, reconstruction loss primarily aims to measure the difference between input data and the reconstructed data generated by the model [Citation48], thus encouraging the model to learn effective data representations.
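For reference, the margin loss from the original CapsuleNet formulation can be sketched as follows. The margins 0.9 and 0.1 and the down-weighting factor 0.5 are the defaults from that original work and are assumed here; the source does not state which values were used, and the reconstruction term is omitted.

```python
def margin_loss(lengths, target, m_pos=0.9, m_neg=0.1, lam=0.5):
    """Sum of per-class margin terms given output capsule lengths."""
    total = 0.0
    for k, length in enumerate(lengths):
        if k == target:
            # Penalise the correct capsule when its length falls below m_pos.
            total += max(0.0, m_pos - length) ** 2
        else:
            # Penalise wrong capsules when their length rises above m_neg.
            total += lam * max(0.0, length - m_neg) ** 2
    return total


confident = margin_loss([0.95, 0.05], target=0)   # both margins satisfied
uncertain = margin_loss([0.5, 0.5], target=0)     # both margins violated
```

The loss is zero once the correct capsule is long enough and the others are short enough, so it stops pushing on examples that are already well separated.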

To encourage correct dynamic routing between DigitCaps and promote effective data representation learning, we used margin loss and reconstruction loss as the overall loss (CapsuleLoss) for model training [Citation40, Citation49, Citation56]. However, the results indicated that cross-entropy loss with label smoothing outperformed CapsuleLoss (Table 7). This suggests that cross-entropy loss with label smoothing is a better match for fNIRS data and ID-CapsuleNet.

Table 7. Average classification results of different loss.

The difference in classification accuracy may be attributed to the fact that the primary functions of margin loss and reconstruction loss are not directly related to classification. Therefore, CapsuleLoss may not be well-suited for the specific task of classification. Cross-entropy loss with label smoothing [Citation51, Citation57], on the other hand, is designed to optimize classification tasks and appears to be a more suitable choice for this particular scenario. In summary, the choice of loss function depends on the specific task and dataset. While margin loss and reconstruction loss have their advantages in certain contexts, they may not always be the best choice for classification tasks, as demonstrated in the results.

5.2. Ablation study

In order to validate the importance of Conv_dilation and dynamic routing in our model, we conducted ablation experiments on ID-CapsuleNet. ID-CNN replaced the dynamic routing in ID-CapsuleNet with pooling layers and fully connected layers while retaining the Conv_dilation. We compared ID-CapsuleNet with ID-CNN to assess the importance of dynamic routing. Additionally, since CapsuleNet includes normal convolutions, we compared ID-CapsuleNet with CapsuleNet to evaluate the effectiveness of Conv_dilation.

The experimental results showed that both Conv_dilation and dynamic routing are crucial for our model, and the absence of either component led to a decrease in model performance (Table 8). Conv_dilation extracts long-range dependency features, while dynamic routing captures feature invariance, resulting in better feature representation and, consequently, improved classification performance. Furthermore, we compared ID-CNN with CNN to verify the performance gain achieved by Conv_dilation compared with convolutions with a kernel size of 3. The results demonstrated that Conv_dilation led to significant improvements on certain datasets, with Dataset A and Dataset D showing improvements of 6% and 10%, respectively. Some improvements were also observed on the other two datasets (Table 8), indicating that Conv_dilation is more effective at feature extraction. In summary, both Conv_dilation and dynamic routing are essential components of our model, and they work synergistically to enhance feature extraction and classification performance.

Table 8. Average accuracy of the test sets.

In the context of MA and MI tasks, specific brain regions are known to become activated, and opposite changes in HbO and HbR are often observed [Citation58]. When participants engage in MI, the concentration of HbO associated with active brain regions usually increases, while the concentration of HbR decreases. In MA tasks, studies have found that HbO responses are stronger in the left dorsolateral prefrontal cortex (DLPFC) and anterior prefrontal cortex (APFC), whereas HbR responses are relatively weaker and less pronounced [Citation59,Citation60]. In the context of MI, the brain activation regions can differ depending on the specific MI task. For example, left and right hand MI tasks are associated with activation in contralateral brain regions, whereas foot MI tasks activate both brain regions [Citation61–63]. These active brain regions are closely related to cerebral hemodynamic responses, and the long-range dependencies extracted by our model represent the cerebral hemodynamic responses. Therefore, in some respects, this provides an approach to understanding neural physiological reactions in the brain. These findings have important practical implications in the field of fNIRS-based classification.

5.3. Limitation

The receptive field of Conv_dilation may vary for each dataset and may require adjustments for different data lengths, which can be time-consuming. Additionally, CapsuleNet has a high computational complexity, leading to longer training times, which may hinder its scalability and deployment in certain practical applications. Further research is needed to validate its performance in multi-class classification problems. In terms of interpretability, the internal workings of CapsuleNet are relatively complex, making them challenging to explain and visualize.

6. Conclusion

In this study, we utilized Conv_dilation to extract features from fNIRS-based brain hemodynamic responses and employed dynamic routing to capture feature invariance. Conv_dilation combined with dynamic routing was thus employed for MI and MA classification based on fNIRS. Our experiments were conducted on four publicly available datasets. Label smoothing was employed to address the overfitting problem. The proposed network architecture demonstrated strong performance in terms of classification accuracy, and ablation studies confirmed the importance of Conv_dilation and dynamic routing. Therefore, the proposed network architecture not only offers an effective approach for classifying MI and MA based on fNIRS but also provides ideas for designing network models specifically tailored to fNIRS. It may also help in gaining insights into specific neural activities within the brain.

Disclosure statement

No potential competing interest was reported by the authors.

References

- Abougarair AJ, Gnan HM, Oun A, et al. Implementation of a brain-computer interface for robotic arm control. 2021 IEEE 1st International Maghreb Meeting of the Conference on Sciences and Techniques of Automatic Control and Computer Engineering MI-STA. 2021; 58–63. doi: 10.1109/MI-STA52233.2021.9464359.

- Zhou Z, Yin E, Liu Y, et al. A novel task-oriented optimal design for P300-based brain-computer interfaces. J Neural Eng. 2014;11(5):056003. doi: 10.1088/1741-2560/11/5/056003.

- Villringer A, Dirnagl U. Coupling of brain activity and cerebral blood flow: basis of functional neuroimaging. Cerebrovasc Brain Metabol Rev. 1995;7(3):240–276.

- Jöbsis FF. Noninvasive, infrared monitoring of cerebral and myocardial oxygen sufficiency and circulatory parameters. Science. 1977;198(4323):1264–1267. doi: 10.1126/science.929199.

- Tinga AM, Clim M-A, de Back TT, et al. Measures of prefrontal functional near-infrared spectroscopy in visuomotor learning. Exp Brain Res. 2021;239(4):1061–1072. doi: 10.1007/s00221-021-06039-2.

- Wang H, Tang C, Xu T, et al. An approach of one-vs-rest filter bank common spatial pattern and spiking neural networks for multiple motor imagery decoding. IEEE Access. 2020;8:86850–86861. doi: 10.1109/ACCESS.2020.2992631.

- Paulmurugan K, Vijayaragavan V, Ghosh S, et al. Brain-computer interfacing using functional near-infrared spectroscopy (fNIRS). Biosensors. 2021;11(10):389. doi: 10.3390/bios11100389.

- Lazarou I, Nikolopoulos S, Petrantonakis PC, et al. EEG-based brain-computer interfaces for communication and rehabilitation of people with motor impairment: a novel approach of the 21st century. Front Hum Neurosci. 2018;12:14. doi: 10.3389/fnhum.2018.00014.

- Gratton E, Toronov V, Wolf U, et al. Measurement of brain activity by near-infrared light. J Biomed Opt. 2005;10(1):11008. doi: 10.1117/1.1854673.

- DeBoer T, Scott LS, Nelson CA. Methods for acquiring and analyzing infant event-related potentials. 2013;5–38.

- Shellock FG, Kanal E. SMRI safety committee. Policies, guidelines, and recommendations for MR imaging safety and patient management. J Magn Reson Imaging. 1991;1(1):97–101. doi: 10.1002/jmri.1880010114.

- Kozel FA, Padgett TM, George MS. A replication study of the neural correlates of deception. Behav Neurosci. 2004;118(4):852–856. doi: 10.1037/0735-7044.118.4.852.

- Kleinschmidt A, Obrig H, Requardt M, et al. Simultaneous recording of cerebral blood oxygenation changes during human brain activation by magnetic resonance imaging and near-infrared spectroscopy. J Cereb Blood Flow Metab. 1996;16(5):817–826. doi: 10.1097/00004647-199609000-00006.

- Wang Z, Zhang J, Zhang X, et al. Transformer model for functional near-infrared spectroscopy classification. IEEE J Biomed Health Inform. 2022;26(6):2559–2569. doi: 10.1109/JBHI.2022.3140531.

- Naseer N, Hong KS. fNIRS-based brain-computer interfaces: a review. Front Hum Neurosci. 2015;9:3. doi: 10.3389/fnhum.2015.00003.

- Guillot A, Di Rienzo F, et al. The neurofunctional architecture of motor imagery. Advanced brain neuroimaging topics in health and disease-methods and applications. 2014; 433–456.

- Wang HT, Li T, et al. A motor imagery analysis algorithm based on spatio-temporal-frequency joint selection and relevance vector machine. Kongzhi Lilun Yu Yingyong/Control Theory Appl. 2017;34:1403–1408.

- Raichle ME. Behind the scenes of functional brain imaging: a historical and physiological perspective. Proc Natl Acad Sci U S A. 1998;95(3):765–772. doi: 10.1073/pnas.95.3.765.

- Pfurtscheller G, Neuper C. Motor imagery and direct brain-computer communication. Proc IEEE. 2001;89(7):1123–1134. doi: 10.1109/5.939829.

- Wang H, Bezerianos A. Brain-controlled wheelchair controlled by sustained and brief motor imagery BCIs. Electron Lett. 2017;53(17):1178–1180. doi: 10.1049/el.2017.1637.

- Wang H, Li T, Bezerianos A, et al. The control of a virtual automatic car based on multiple patterns of motor imagery BCI. Med Biol Eng Comput. 2019;57(1):299–309. doi: 10.1007/s11517-018-1883-3.

- Hong KS, Naseer N, Kim YH. Classification of prefrontal and motor cortex signals for three-class fNIRS-BCI. Neurosci Lett. 2015;587:87–92. doi: 10.1016/j.neulet.2014.12.029.

- Hsu WY, Sun YN. EEG-based motor imagery analysis using weighted wavelet transform features. J Neurosci Methods. 2009;176(2):310–318. doi: 10.1016/j.jneumeth.2008.09.014.

- Ang KK, Guan C, Chua KSG, et al. A large clinical study on the ability of stroke patients to use an EEG-based motor imagery brain-computer interface. Clin EEG Neurosci. 2011;42(4):253–258. doi: 10.1177/155005941104200411.

- Li C, Xu Y, He L, et al. Research on fNIRS recognition method of upper limb movement intention. Electronics. 2021;10(11):1239. doi: 10.3390/electronics10111239.

- Gero JS, Milovanovic J. A framework for studying design thinking through measuring designers’ minds, bodies and brains. Des Sci. 2020;6:e19. doi: 10.1017/dsj.2020.15.

- Ghaffar MSBA, Khan US, Iqbal J, et al. Improving classification performance of four class FNIRS-BCI using mel frequency cepstral coefficients (MFCC). Infrared Phys Technol. 2021;112:103589. doi: 10.1016/j.infrared.2020.103589.

- Liu R, Walker E, Friedman L, et al. fNIRS-based classification of mind-wandering with personalized window selection for multimodal learning interfaces. J Multimodal User Interface. 2021;15(3):257–272. doi: 10.1007/s12193-020-00325-z.

- Xu T, Zhou Z, Yang Y, et al. Motor imagery decoding enhancement based on hybrid EEG-fNIRS signals. IEEE Access. 2023;11:65277–65288. doi: 10.1109/ACCESS.2023.3289709.

- Wang H, Xu T, Tang C, et al. Diverse feature blend based on filter-bank common spatial pattern and brain functional connectivity for multiple motor imagery detection. IEEE Access. 2020;8:155590–155601. doi: 10.1109/ACCESS.2020.3018962.

- Janani A, Sasikala M, Chhabra H, et al. Investigation of deep convolutional neural network for classification of motor imagery fNIRS signals for BCI applications. Biomed Signal Process Control. 2020;62:102133. doi: 10.1016/j.bspc.2020.102133.

- Schudlo LC, Chau T. Dynamic topographical pattern classification of multichannel prefrontal NIRS signals: II. Online differentiation of mental arithmetic and rest. J Neural Eng. 2013;11(1):016003. doi: 10.1088/1741-2560/11/1/016003.

- Nazeer H, Naseer N, Khan RA, et al. Enhancing classification accuracy of fNIRS-BCI using features acquired from vector-based phase analysis. J Neural Eng. 2020;17(5):056025. doi: 10.1088/1741-2552/abb417.

- Ho TKK, Gwak J, Park CM, et al. Discrimination of mental workload levels from multi-channel fNIRS using deep leaning-based approaches. IEEE Access. 2019;7:24392–24403. doi: 10.1109/ACCESS.2019.2900127.

- Asgher U, Khalil K, Khan MJ, et al. Enhanced accuracy for multiclass mental workload detection using long short-term memory for brain-computer interface. Front Neurosci. 2020;14:584. doi: 10.3389/fnins.2020.00584.

- Wang Z, Fang J, Zhang J, et al. Rethinking delayed hemodynamic responses for fNIRS classification. IEEE Trans Neural Syst Rehabil Eng. 2023;31:4528–4538. doi: 10.1109/TNSRE.2023.3330911.

- Frederick BD, Nickerson LD, Tong Y, et al. Physiological denoising of BOLD fMRI data using regressor interpolation at progressive time delays (RIPTiDe) processing of concurrent fMRI and near-infrared spectroscopy (NIRS). Neuroimage. 2012;60(3):1913–1923. doi: 10.1016/j.neuroimage.2012.01.140.

- Shibasaki H. Human brain mapping: hemodynamic response and electrophysiology. Clin Neurophysiol. 2008;119(4):731–743. doi: 10.1016/j.clinph.2007.10.026.

- Guo M-H, Lu C-Z, Liu Z-N, et al. Visual attention network. Comp Visual Media. 2023;9(4):733–752. doi: 10.1007/s41095-023-0364-2.

- Van Quang N, Chun J, Tokuyama T. CapsuleNet for micro-expression recognition. 2019 14th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2019). 2019; p. 1–7. doi: 10.1109/FG.2019.8756544.

- Bauernfeind G, Scherer R, Pfurtscheller G, et al. Single-trial classification of antagonistic oxyhemoglobin responses during mental arithmetic. Med Biol Eng Comput. 2011;49(9):979–984. doi: 10.1007/s11517-011-0792-5.

- Pfurtscheller G, Bauernfeind G, Wriessnegger SC, et al. Focal frontal (de)oxyhemoglobin responses during simple arithmetic. Int J Psychophysiol. 2010;76(3):186–192. doi: 10.1016/j.ijpsycho.2010.03.013.

- Bauernfeind G, Steyrl D, Brunner C, et al. Single trial classification of fNIRS-based brain-computer interface mental arithmetic data. Annu Int Conf IEEE Eng Med Biol Soc. 2014;2014:2004–2007. doi: 10.1109/EMBC.2014.6944008.

- Shin J, von Luhmann A, Blankertz B, et al. Open access dataset for EEG + NIRS single-trial classification. IEEE Trans Neural Syst Rehabil Eng. 2016;25(10):1735–1745. doi: 10.1109/TNSRE.2016.2628057.

- Bak S, Park J, Shin J, et al. Open-access fNIRS dataset for classification of unilateral finger-and foot-tapping. Electronics. 2019;8(12):1486. doi: 10.3390/electronics8121486.

- Ortega P, Zhao T, Faisal AA, et al. HYGRIP: full-stack characterization of neurobehavioral signals (fNIRS, EEG, EMG, force, and breathing) during a bimanual grip force control task. Front Neurosci. 2020;14:919. doi: 10.3389/fnins.2020.00919.

- Cope M, Delpy DT. System for long-term measurement of cerebral blood and tissue oxygenation on newborn infants by near infra-red transillumination. Med Biol Eng Comput. 1988;26(3):289–294. doi: 10.1007/BF02447083.

- Sabour S, Frosst N, Hinton GE. Dynamic routing between capsules. Adv Neural Inform Proces Syst. 2017;30:3856–3866.

- Yin J, Li S, Zhu H, et al. Hyperspectral image classification using CapsNet with well-initialized shallow layers. IEEE Geosci Remote Sensing Lett. 2019;16(7):1095–1099. doi: 10.1109/LGRS.2019.2891076.

- Szegedy C, Vanhoucke V, Ioffe S, et al. Rethinking the inception architecture for computer vision. Proceedings of the IEEE conference on computer vision and pattern recognition. 2016; p. 2818–2826.

- Müller R, Kornblith S, Hinton GE. When does label smoothing help? Adv Neural Inform Proces Syst. 2019;32:5.

- Trakoolwilaiwan T, Behboodi B, et al. Convolutional neural network for high-accuracy functional near-infrared spectroscopy in a brain-computer interface: three-class classification of rest, right-, and left-hand motor execution. Neurophotonics. 2018;5(1):011008.

- Loshchilov I, Hutter F. Decoupled weight decay regularization. arXiv Preprint arXiv:1711.05101. 2017.

- Zhao X, Zhang H, Zhu G, et al. A multi-branch 3D convolutional neural network for EEG-based motor imagery classification. IEEE Trans Neural Syst Rehabil Eng. 2019;27(10):2164–2177. doi: 10.1109/TNSRE.2019.2938295.

- Yu F, Koltun V. Multi-scale context aggregation by dilated convolutions. arXiv Preprint arXiv:1511.07122. 2015.

- Wang Z, Chen C, Li J, et al. ST-CapsNet: linking spatial and temporal attention with capsule network for P300 detection improvement. IEEE Trans Neural Syst Rehabil Eng. 2023;31:991–1000. doi: 10.1109/TNSRE.2023.3237319.

- Zhang Z, Sabuncu M. Generalized cross entropy loss for training deep neural networks with noisy labels. Adv Neural Inform Proces Syst. 2018;31:154.

- Villringer A, Planck J, Hock C, et al. Near infrared spectroscopy (NIRS): a new tool to study hemodynamic changes during activation of brain function in human adults. Neurosci Lett. 1993;154(1–2):101–104. doi: 10.1016/0304-3940(93)90181-j.

- Menon V, Rivera SM, White CD, et al. Dissociating prefrontal and parietal cortex activation during arithmetic processing. Neuroimage. 2000;12(4):357–365. doi: 10.1006/nimg.2000.0613.

- Ischebeck A, Zamarian L, Schocke M, et al. Flexible transfer of knowledge in mental arithmetic—an fMRI study. Neuroimage. 2009;44(3):1103–1112. doi: 10.1016/j.neuroimage.2008.10.025.

- Hardwick RM, Caspers S, Eickhoff SB, et al. Neural correlates of action: comparing meta-analyses of imagery, observation, and execution. Neurosci Biobehav Rev. 2018;94:31–44. doi: 10.1016/j.neubiorev.2018.08.003.

- Kilintari M, Narayana S, Babajani-Feremi A, et al. Brain activation profiles during kinesthetic and visual imagery: an fMRI study. Brain Res. 2016;1646:249–261. doi: 10.1016/j.brainres.2016.06.009.

- Fulford J, Milton F, Salas D, et al. The neural correlates of visual imagery vividness – an fMRI study and literature review. Cortex. 2018;105:26–40. doi: 10.1016/j.cortex.2017.09.014.