ABSTRACT

In recent years, short-form social media videos have emerged as an important source of health-related advice. In this study, we investigate whether experts or ordinary users in such videos are more effective in debunking the common misperception that talking about suicide should be avoided. We also explore a new trend on TikTok and other platforms, in which users attempt to back up their arguments by displaying scientific articles in the background of their videos. To test the effect of source type (expert vs. ordinary user) and scientific references (present or absent), we conducted a 2 × 2 between-subjects experiment with an additional control group (n = 956). In each condition, participants were shown a TikTok video approximately 30 seconds long. Our findings show that participants in all four treatment groups reduced their misperceptions on the topic. The expert was rated as more authoritative on the topic than the ordinary user. However, the expert was also rated as less credible than the ordinary user. The inclusion of a scientific reference neither increased content credibility nor further reduced misperceptions. Thus, experts and ordinary users may be similarly persuasive in a short-form video environment.

Mental health problems have seen a notable increase in recent years, particularly among the younger population (CDC, Citation2021). Concurrently, the use of social media has surged, with short-form videos emerging as a popular content type shared on these platforms. One of the first platforms to make extensive use of this format is TikTok. The platform is used by 67% of US teens (Vogels et al., Citation2022), and an increasing proportion of its users use it for news purposes (Matsa, Citation2022). It has also become a common place for general health and mental health advice (Basch et al., Citation2022). While the negative mental health effects of social media use are often discussed, these platforms also provide an opportunity to disseminate accurate public health information to a young audience that may be more difficult to reach through other channels (Engel et al., Citation2023).

While we have gained extensive insight into how misinformation and corrections are received in traditional social media formats such as text and static images, our understanding of how to apply this prior research to short-form videos shared on platforms such as TikTok remains limited. Furthermore, little attention has been paid to understanding the multiple credibility cues present in these videos. Specifically, authenticity is often highlighted as a central component of credibility (Barta & Andalibi, Citation2021), while other research suggests that formal expertise is an important determinant of credibility (O’Sullivan et al., Citation2022). In the social media domain, these two aspects often compete, but there is a lack of research that elucidates which cue, authenticity or expertise, has greater influence on users’ credibility judgments. Moreover, some evidence suggests that references to scientific evidence are persuasive (Boothby et al., Citation2021). However, research has yet to examine how all of these elements (short videos, correction of social media misinformation, perceived expertise, and scientific evidence) interact in a holistic manner. The present study seeks to address this knowledge gap.

Using unique short-form videos that mimic the style of TikTok and were created and recorded specifically for this study, we examined two different aspects of credibility – self-described medical expertise and reference to a scientific study – to assess their effectiveness in correcting a common misconception about mental health.

Mental health information on social media

With the increasing rates of mental health issues reported among the younger population (CDC, Citation2021), social media have become vital platforms for discussing mental health topics (Basch et al., Citation2022). One of the most prominent is TikTok, which rose to popularity primarily because of its short-form video feature; many other platforms have since adopted this format. For example, Instagram introduced “Reels,” Facebook launched “Lasso,” and YouTube introduced “YouTube Shorts.” While most users use these features for entertainment, some also seek mental health information (Basch et al., Citation2022). At the same time, social media use is correlated with depression, eating disorders, body dissatisfaction, and anxiety (Blanchard et al., Citation2023). However, the impact of social media on mental health may depend on how young people use social media and what kind of content they are exposed to (Valkenburg et al., Citation2022). Therefore, the type of mental health information and advice that social media users encounter matters.

From a pessimistic perspective, platforms like TikTok may provide another breeding ground for misinformation. For example, it is difficult to identify the original source of many videos because of extensive reposting and the limited visibility of user identities and posting dates (Nilsen et al., Citation2022). Moreover, the vast majority of health-related content producers on social media are laypeople rather than experts (McCashin & Murphy, Citation2022; O’Sullivan et al., Citation2022), and these non-expert users are more prone to disseminating misleading health information. For instance, a recent study found that 84% of mental health advice on TikTok was misleading (PlushCare, Citation2022). Additionally, the app prompts users to “passively consume and swipe through hundreds of short videos subject to clickbait” (O’Sullivan et al., Citation2022, p. 373), potentially encouraging them to accept information at face value without verifying it.

From a more optimistic perspective, social media platforms provide new low-threshold avenues for health promotion (Engel et al., Citation2023; Pretorius et al., Citation2022). For example, social media is often used by individuals with lived mental health experience, who can offer health information that helps people cope with their own conditions (Engel et al., Citation2023; Heiss & Rudolph, Citation2022). Furthermore, social media also provide spaces for corrective information (Walter et al., Citation2021). The crucial question, however, is under which circumstances these efforts succeed.

This study focuses on a specific aspect of health promotion: correcting a common misconception about suicide conversations. Recent research suggests that suicide-related content is prevalent on social media (e.g., Arendt, Citation2019). Therefore, it is important to provide counter-narratives and also disseminate information about how to interact with people who may be at risk, such as those who share or engage with suicide-related content.

The study tests 30-second short-form videos in which the person debunking a common misperception appears either as an expert or as an ordinary individual and either does or does not reference a scientific study.

Correction of misinformation

At first glance, we might expect that people are unlikely to be swayed by corrections of misinformation on social media, especially considering that they are broadly skeptical of information encountered there, with 59% expecting most information to be inaccurate (Shearer & Mitchell, Citation2021). However, a broad body of research now points to the effectiveness of corrections on social media (Bode & Vraga, Citation2021; Walter et al., Citation2021). While correction has been tested with static content on Facebook (Bode & Vraga, Citation2015; Borah et al., Citation2022; Smith & Seitz, Citation2019), Twitter (Vraga & Bode, Citation2018), and Instagram (Vraga et al., Citation2020), as well as with Facebook Live (Vraga & Bode, Citation2022), it has rarely been tested using short-form audiovisual content. An important exception is a study by Bhargava et al. (Citation2023), which tested a correction video both on its own and following a misinformation video and found that the correction was most effective when it followed the misinformation. Here, we test a slightly different approach: we focus on how scientific credibility cues in videos may modify the effect of a correction video. However, based on existing research on the correction of health information, we expect that any type of correction can reduce misperceptions.

In addition, corrective information may also increase an individual’s self-efficacy in the area of mental health. Self-efficacy is defined as “beliefs in one’s ability to organize and execute the courses of action necessary to produce given achievements” (Bandura, Citation1997, p. 3). Effectively debunking mental health misinformation should not only promote more accurate beliefs, but may also increase an individual’s agency (Gesser-Edelsburg et al., Citation2018). Thus, our first hypothesis is:

H1:

Compared to the control group, participants in any condition featuring a correction will a) reduce misperception and b) increase mental health efficacy.

Experts vs. ordinary users

Source credibility models highlight communicators’ positive characteristics which may influence whether or not a receiver accepts a message (Ohanian, Citation1990; Vrontis et al., Citation2021). One of these characteristics is perceived expertise. Generally, there is evidence that experts, such as medical scientists, are highly trusted compared to other social elites, for example, politicians (Kennedy et al., Citation2022). In line with this, increasing the perceived source expertise of health-promoting messages was found to reduce vaccine hesitancy (Xu et al., Citation2020). Furthermore, Vraga and Bode (Citation2017) showed that experts are particularly effective correctors of health misinformation. This finding was supported by a meta-analysis, indicating that corrections are more effective when misinformation is debunked by experts as compared to peers or other non-expert sources (Walter et al., Citation2021).

However, the same meta-analysis found that correcting misinformation spread by peers is more challenging than correcting misinformation spread by news organizations (Walter et al., Citation2021). In fact, individuals tend to trust information provided by people they perceive as similar to themselves, even if they lack medical expertise (Walter et al., Citation2021, p. 7; see also Wang et al., Citation2008). Moreover, emerging research suggests that corrections from close ties, such as family members and friends, are more effective than those from weak ties (Heiss et al., Citation2023; Pasquetto et al., Citation2022). More broadly, trust may matter more than expertise in creating an effective correction (Guillory & Geraci, Citation2013). These findings have important implications for social media, where ordinary users can influence large audiences through perceived authenticity and closeness, even if they lack relevant expertise (Engel et al., Citation2023). These users, often referred to as influencers, frequently post about health-related topics and compete with expert sources like psychologists or medical doctors.

The existing evidence suggests that, in addition to close tie contacts (family and friends), experts may be the most influential correctors. Social media influencers may mimic close tie relationships. However, in our experimental design, we compare the effect of first-time exposure to an ordinary user and a self-described expert. In such a first-time exposure situation, where deeper para-social relationships with the ordinary user are absent, we assume that the self-described expert will be more persuasive in delivering the corrective message than the ordinary social media user.

H2:

A person presenting herself as an expert will be more likely to be perceived a) as an expert and b) as credible, compared to the ordinary user.Footnote1

H3:

Content presented by the self-described expert is a) more likely to be perceived as credible and b) more likely to be believed (i.e., reduce misperception) as compared to content presented by the ordinary user.

Referring to scientific evidence

Incorporating scientific validation by citing a relevant study can also enhance the credibility of a message. Consistent with information processing theories, citing a scientific study can serve as a heuristic cue for individuals to evaluate the strength of the argument being made (Chaiken, Citation1987; Knoll et al., Citation2020). This influence may be independent of the perceived expertise of the person delivering the message. Indeed, there is evidence that some people trust scientific methods and principles much more than scientific institutions or representatives (Achterberg et al., Citation2017). Thus, there is reason to believe that the communicators themselves may be less important than the evidence being presented. In other words, if people assume that the evidence was generated scientifically, they may find ordinary users as believable as experts.

One way to emphasize that evidence is based on scientific inquiry is by citing scientific sources. Putnam and Phelps have observed the existence of a “citation effect,” meaning that people are more likely to believe evidence presented with a citation than without one (Putnam & Phelps, Citation2017). This finding was derived from a series of six experiments where participants were asked to evaluate the veracity of trivia claims with and without in-text citations. Putnam and Phelps theorize that these citations support claims because they direct the reader to previous research, increasing the perceived “truthfulness” of the statement (Putnam & Phelps, Citation2017, p. 121).

This citation effect may also apply to the assessment of web-based information. Freeman and Spyridakis (Citation2009) investigated the credibility of web pages by randomly assigning participants to evaluate the credibility of web articles. The study found that the presence of citations on websites enhanced credibility and trustworthiness. This effect may also be observed in short video messages on TikTok, provided that the scientific source is embedded in a compelling narrative (Allen et al., Citation2000; Jones & Crow, Citation2017). Building on this rationale, our next hypotheses are:

H4:

Content including a scientific study is a) more likely to be perceived as credible and b) more likely to be believed (i.e., reduce misperception) than content which does not include a scientific study.

H5:

A person who cites a scientific study is more likely to be perceived as a) credible and b) an expert than a person who does not.

We are also interested in how source (expert vs. ordinary user) interacts with a content credibility cue (reference to a scientific study). Given that we lack evidence on this question, we formulate a research question.

RQ1:

How do the presence of an expert and the presence of a scientific study interact?

There is reason to assume that the effects of source and content cues may not be homogeneous across all individuals. Individuals with higher trust in science, higher education, greater mental health knowledge, and higher media literacy may be more strongly affected by the expert and scientific cues in the videos than those with lower values on these variables.

H6:

The treatment effects will be stronger for individuals with a) greater trust in science, b) higher levels of education, c) increased self-reported knowledge of mental health, and d) enhanced self-reported media literacy.

Finally, treatment effects may also vary by use of and attitudes toward TikTok as well as age. This is because people who use TikTok regularly or perceive it as a reliable source may process these video formats differently than those who are relatively new to the format. In addition, younger people in general may process the information differently because they are heavy users of TikTok and similar short video platforms such as Instagram or YouTube. In our pre-registration, we asked the following questions:

RQ2:

How do experimental treatment effects vary by a) TikTok use, b) perceived credibility of TikTok, and c) age?

Methods

This research followed a 2 × 2 between-subjects design with an additional control group. Participants were randomized using block randomization within the Qualtrics platform, which assigned each participant to one of five equally sized blocks: one for each of the four treatment videos and one for the control video. Ethical approval for the study was granted by the Institutional Review Board (IRB) at Georgetown University. The design, assumptions, and analyses were pre-registered on November 7, 2022. The pre-registration, the associated questionnaire, and the data are publicly available in our supplemental online material.Footnote2 Some deviations from the pre-registration should be noted. First, the order of the hypotheses in this article deviates slightly from the pre-registration, and some hypotheses have been reworded for readability. Second, the preregistered hypotheses treated perceived trustworthiness and credibility as distinct constructs. However, after pre-registration we slightly revised the wording of our survey items, and a factor analysis suggested consolidating these two constructs into a single dimension. Thus, we do not differentiate between credibility and trustworthiness in the hypotheses presented in this article (H2 and H5).
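The idea of block randomization can be illustrated with a short Python sketch. It is a hypothetical illustration, not the Qualtrics implementation: it assumes a permuted-block scheme in which every run of five consecutive participants receives each of the five conditions once in random order, and the condition labels and the function `block_randomize` are our own names. Such a scheme keeps group sizes within one of each other, whereas the reported groups ranged from 177 to 208 participants, so the sketch shows the principle only.

```python
import random
from collections import Counter

# Hypothetical labels for the five cells (2 x 2 design plus control).
CONDITIONS = [
    "control",
    "expert",
    "expert_with_reference",
    "ordinary_user",
    "ordinary_user_with_reference",
]


def block_randomize(n_participants, conditions=CONDITIONS, seed=None):
    """Assign participants to conditions using permuted blocks.

    Each consecutive block of len(conditions) participants receives every
    condition exactly once, in a freshly shuffled order, so group sizes
    never differ by more than one.
    """
    rng = random.Random(seed)
    assignments = []
    while len(assignments) < n_participants:
        block = list(conditions)
        rng.shuffle(block)  # random order within the block
        assignments.extend(block)
    return assignments[:n_participants]  # trim a partial final block


if __name__ == "__main__":
    groups = block_randomize(956, seed=2022)
    print(Counter(groups))
```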

Sample

We sampled 956 participants from Amazon Mechanical Turk via the CloudResearch platform (Douglas et al., Citation2023; Litman et al., Citation2017). Participants were paid $2.50; all were at least 18 years old, were located in the United States, and had completed at least 100 HITs with a 95% approval rate. Participants who failed the attention check (N = 1) were excluded from the data.

The mean age was 40.33 years (SD = 11.13). The youngest participant was 20 years old and the oldest was 85 years old. (We originally asked for the birth year and calculated the age by subtracting the birth year from 2022, the year of the study.) Educational backgrounds were diverse, with 35% reporting at least some high school education, 49% reporting a Bachelor’s degree, 13% reporting a Master’s degree, and 2% reporting a PhD (1% preferred not to answer). Of the participants, 55% identified as male, 44% as female, and less than 1% identified as non-binary/third gender (3 individuals) or preferred not to answer (4 individuals). Regarding race, 74% identified as White, 12% as Black or African American, 7% as Asian American, 4% as Hispanic or Latino, 3% reported two or more races, and the remaining participants identified as American Indian or Alaskan Native (3 participants) or Native Hawaiian or Pacific Islander (1 participant), or preferred not to say (8 participants). Reflecting on the past three months, 25% of participants reported daily use of TikTok, 17% used it 2–3 times per week, 20% used it weekly, 14% used it monthly, and 24% used it less frequently or never at all.

Experimental design

Participants were randomly assigned to one of the five groups: a control group (n = 208), an expert-only group (n = 192), an expert-with-scientific-reference group (n = 187), an ordinary user group (n = 192), and an ordinary user-with-scientific-reference group (n = 177). A randomization check indicated that there were no significant differences between the groups in terms of gender, age, education, and other pretest variables.

In each group, participants were shown a TikTok video approximately 30 seconds long. All five videos featured the same speaker. We wrote the scripts, created private TikTok accounts for the videos, and recorded the videos using the TikTok app. All four treatment videos debunked the following misperception: Talking with depressed people about potential suicidal thoughts should be avoided because this could trigger them to actually commit suicide. According to a number of meta-analyses, this misperception is untrue (Blades et al., Citation2018; Dazzi et al., Citation2014; Polihronis et al., Citation2022) – in fact, asking people whether they are thinking about hurting themselves is considered the appropriate course of action. The control group saw the same person in the video, but talking about an unrelated topic (the field of astrobiology).

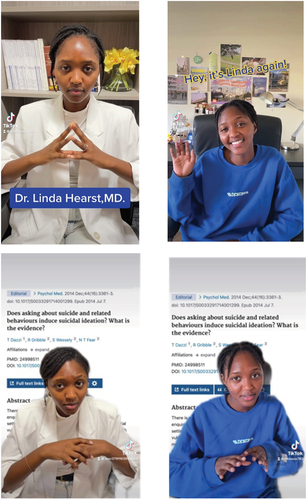

In the two expert conditions, the speaker in the TikTok video identified herself as a clinical psychologist and mental health expert and was dressed in business casual attire (a white suit resembling a doctor’s white coat). The account name included the title “Dr.” The other two treatment groups saw an ordinary TikTok user without any expertise in mental health, who wished to share her knowledge on TikTok. The ordinary user wore casual attire and appeared in a standard bedroom setting. The account name did not include any professional title.

In addition, each of the two treatment conditions was split into two subgroups (with or without a scientific reference). In one of those two subgroups, a scientific study was displayed in the background of the video as a reference to support the claim that talking about suicide is actually helpful in certain situations. The study was titled “Does asking about suicide and related behaviours induce suicidal ideation? What is the evidence?” (the study is Dazzi et al., Citation2014). For the other subgroup condition, the speaker used no reference.

The control group saw a video similar in length to the treatment videos, featuring a student speaking about astrobiology, with an outdoor scene as the background. This astrobiology video was posted on a third account we created on TikTok. All videos are available in our supplemental online material.

Measures

Misperception Q1 and Q2

We used two questions in which participants judged the accuracy of the targeted misinformation. These two questions are referred to as misperception Q1 and misperception Q2. Misperception Q1 (M = 1.72, SD = 0.82, Median = 2) presented participants with the false statement, “Asking someone who is depressed about whether they’re thinking about suicide will make them more likely to commit suicide,” and asked them to rate the statement as “very inaccurate,” “somewhat inaccurate,” “somewhat accurate,” or “very accurate,” with numerical values (1–4) assigned to each category. Higher values indicate greater misperceptions.

For misperception Q2 (M = 1.79, SD = 0.67, Median = 2), participants were shown the accurate information presented in the TikTok videos, which stated that “openly talking about suicide is a good suicide prevention strategy for people who suffer from depression.” Participants were then asked to rate the extent to which they believed this was a good strategy, choosing from the options “very good strategy,” “somewhat good strategy,” “somewhat bad strategy,” and “very bad strategy,” with numerical values (1–4) assigned to each choice. Again, higher values indicate greater misperceptions.

Mental health efficacy

Mental health efficacy (Spearman–Brown ρ = .91, M = 5.29, SD = 1.12) was assessed by asking participants to indicate their level of agreement with the following statements: 1) I feel well-informed about mental illness and 2) I am confident that I know where to seek information about mental illness. Both items were measured using a 7-point labeled scale (from “Strongly disagree” to “Strongly agree”). Higher values indicate stronger agreement with the statements.
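For two-item scales like this one, ρ is presumably the Spearman–Brown coefficient, which the media literacy measure names explicitly. Assuming the standardized form, it is computed from the Pearson correlation r between the two items as ρ = 2r / (1 + r). The Python sketch below illustrates that computation; the function names and example responses are hypothetical.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of item scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def spearman_brown_two_items(item1, item2):
    """Standardized Spearman-Brown reliability for a two-item scale."""
    r = pearson_r(item1, item2)
    return 2 * r / (1 + r)


# Hypothetical 7-point responses to the two efficacy items.
well_informed = [5, 6, 4, 7, 5, 3, 6]
knows_where_to_look = [5, 7, 4, 6, 6, 3, 6]
print(round(spearman_brown_two_items(well_informed, knows_where_to_look), 2))
```

Unlike Cronbach's alpha, this coefficient depends only on the inter-item correlation, which is why it is often preferred for scales with exactly two items.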

Source and content evaluation

All items were measured using a 7-point labeled scale (from “Strongly disagree” to “Strongly agree”). Prior to creating mean scales, we examined the factor structure of all source and content evaluation items. We used parallel analysis and principal axis factor analysis with oblimin rotation and found support for a three-factor solution. The factors were perceived source expertise (Eigenvalue = 3.95), perceived source credibility (Eigenvalue = 4.14), and perceived content credibility (Eigenvalue = 4.25). All factor loadings were above 0.69, and all cross-loadings were below 0.15. Correlations among the dependent variables are included in the appendix.

Perceived expertise (α = .96, M = 4.60, SD = 1.41) was measured by asking participants to indicate the extent to which they agreed or disagreed with five statements regarding expertise: 1) “I think the woman in the video is knowledgeable about the topic;” 2) “I think the woman in the video is skilled in the topic’s field;” 3) “I think the woman in the video is qualified to talk about the topic;” 4) “I think the woman in the video is experienced in the topic’s field;” 5) “I think the woman in the video is an expert on the topic.”
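The α values for the five-item scales are Cronbach's alpha, α = k/(k − 1) · (1 − Σσᵢ² / σ_X²), where k is the number of items, σᵢ² is the variance of item i, and σ_X² is the variance of the summed score. A minimal Python sketch follows; the function name and the sample ratings are hypothetical. Because the same variance estimator appears in the numerator and denominator, using sample rather than population variances does not change the result.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha.

    `items` is a list of k lists; each inner list holds one item's scores
    for the same respondents, in the same order.
    """
    k = len(items)
    n = len(items[0])
    # Summed scale score for each respondent.
    totals = [sum(item[i] for item in items) for i in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical 7-point ratings on the five expertise items for four respondents.
expertise_items = [
    [5, 6, 3, 7],
    [5, 6, 2, 7],
    [4, 6, 3, 6],
    [5, 7, 3, 7],
    [4, 6, 2, 7],
]
print(round(cronbach_alpha(expertise_items), 2))
```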

For perceived source credibility (α = .97, M = 5.25, SD = 1.24), participants were asked to indicate the extent to which they agree or disagree with the following five statements: 1) “I think the woman in the video is trustworthy;” 2) “I think the woman in the video is reliable;” 3) “I think the woman in the video is credible;” 4) “I think the woman in the video is honest;” 5) “I think the woman in the video is dependable.”

For perceived content credibility (α = .97, M = 5.21, SD = 1.21), participants were asked the same set of questions but in relation to the content, rather than the source: 1) “I think the information conveyed in the TikTok video is credible;” 2) “I think the information conveyed in the TikTok video is trustworthy;” 3) “I think the information conveyed in the TikTok video is reliable;” 4) “I think the information conveyed in the TikTok video is accurate;” 5) “I think the information conveyed in the TikTok video is based on facts” (on the same seven-point agree/disagree scale).

To measure TikTok use (M = 3.06, SD = 1.51, Median = Weekly), we asked participants how often they had used TikTok in the past three months. They responded on a labeled scale with the options “Never or less than monthly,” “Monthly,” “Weekly,” “2–3 times per week,” and “Daily or more often.” Numerical values (1–5) were assigned to each response category.

To measure the perceived credibility of TikTok as a source (M = 2.32, SD = 1.20, Median = Somewhat disagree), we asked participants how strongly they agreed or disagreed with the following statement: “In general, I think TikTok is a reliable source of information.” They could choose between “Strongly disagree,” “Somewhat disagree,” “Neither agree nor disagree,” “Somewhat agree,” and “Strongly agree.”

To measure media literacy (Spearman–Brown ρ = .85, M = 3.51, SD = 0.93, Median = 4.00), we asked participants how difficult or easy they judged the following tasks: 1) Telling if the information I find on social media is trustworthy, 2) Evaluating the evidence that backs up people’s opinions on social media. They responded on a 5-point labeled scale from “Extremely difficult” to “Extremely easy” (for a similar approach, see Kahne & Bowyer, Citation2017).

To measure mental health knowledge (ρ = .80, M = 3.11, SD = 0.81, Median = 3.00), we asked participants: “How much would you say you know about mental health?” and “How much would you say you know about depression?” They responded on a labeled scale with the options “None at all,” “A little,” “A moderate amount,” “A lot,” and “A great deal.” Numerical values (1–5) were assigned to each response category.

To measure trust in science (M = 3.98, SD = 0.90, Median = 4.00), we asked participants: “How much do you trust science in general?” They responded on a labeled scale with the options “None at all,” “A little,” “A moderate amount,” “A lot,” and “A great deal.”

Treatment check questions

Participants were asked two questions. The first question was, “What did the woman in the video say her job was?” We provided four answers (“astrobiologist,” “influencer,” “clinical psychologist,” “she didn’t say”). Depending on the assigned group, the correct answer was either “clinical psychologist” or “she didn’t say.” The second question was, “Which of the following was mentioned in the video?” We provided four answers (“A scientific research study,” “A personal experience,” “A public opinion poll,” “None of the above”). Depending on the assigned group, the correct answer was either “A scientific research study” or “None of the above.”

Statistical analysis

To test our hypotheses, we conducted a series of t-tests, which are presented below. To test the effects on misperception Q1 and Q2, we used the Wilcoxon rank sum test (also known as the Mann-Whitney test), a nonparametric alternative to the t-test. This test is appropriate when the outcome variable is measured on an ordinal scale, especially when the distances between scale points may be unequal, as is the case with the two misperception questions. For example, the distance between the response options “very accurate” and “somewhat accurate” may differ from the distance between “somewhat accurate” and “somewhat inaccurate.” The test transforms the original data into ranks and assesses whether values in one group tend to be higher than those in the other. Because it compares the full rank distributions, it is sensitive to differences in both the location and the spread of the data (Hart, Citation2001). To visualize the treatment effects, we present both means and medians. Group summary statistics are reported in the accompanying tables. All analyses were conducted using R.
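The rank sum test can be sketched in a few lines of Python. This is an illustrative re-implementation, not the authors' code: it assumes W is reported as in R's `wilcox.test` (the rank sum of the first group minus n₁(n₁ + 1)/2) and that the p-value comes from the normal approximation with tie and continuity corrections, which R uses when ties are present, as they inevitably are when hundreds of responses fall on a four-point scale. Function names and data are hypothetical.

```python
import math


def rank_with_ties(values):
    """Return 1-based ranks, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def wilcoxon_rank_sum(x, y, continuity=True):
    """W statistic and two-sided normal-approximation p-value."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = rank_with_ties(pooled)
    w = sum(ranks[:n1]) - n1 * (n1 + 1) / 2

    # Variance of W under the null hypothesis, corrected for ties.
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in counts.values())
    var_w = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))

    z = w - n1 * n2 / 2
    if continuity and z != 0:
        z -= math.copysign(0.5, z)  # shrink |z| by 0.5 toward zero
    z /= math.sqrt(var_w)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, p


# Hypothetical misperception Q1 responses (1 = very inaccurate ... 4 = very accurate).
control = [2, 1, 3, 2, 2, 1, 4, 2]
treatment = [1, 1, 2, 1, 2, 1, 1, 3]
w, p = wilcoxon_rank_sum(control, treatment)
print(w, round(p, 3))
```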

Results

Treatment check

We conducted chi-square tests to compare frequencies across groups. In the expert group, 91% of participants correctly identified the person in the video as a clinical psychologist, while only 5% of participants in the ordinary user condition chose this option (χ2(1) = 552.54, p < .001). Additionally, in the condition where a scientific study was shown, 80% of participants correctly reported that a scientific study was mentioned in the video, while only 39% did so when no scientific study was mentioned (χ2(1) = 185.50, p < .001). Therefore, we concluded that the manipulation was successful.
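Each treatment check compares two proportions in a 2 × 2 table (condition by answer), and the Pearson chi-square statistic for such a table can be sketched as follows. The counts in the example are hypothetical, and the text does not state whether Yates's continuity correction was applied (R's `chisq.test` applies it to 2 × 2 tables by default), so the sketch exposes it as an option. For one degree of freedom, the upper-tail p-value equals erfc(√(χ²/2)).

```python
import math


def chi_square_2x2(table, yates=False):
    """Pearson chi-square statistic and p-value (df = 1) for a 2 x 2 table."""
    (a, b), (c, d) = table
    n = a + b + c + d
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    stat = 0.0
    for i, observed_row in enumerate(table):
        for j, observed in enumerate(observed_row):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(observed - expected)
            if yates:
                diff -= min(0.5, diff)  # Yates continuity correction
            stat += diff ** 2 / expected
    # Upper tail of a chi-square distribution with one degree of freedom.
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# Hypothetical counts: rows = expert / ordinary user condition,
# columns = chose "clinical psychologist" / chose another answer.
table = [[174, 18], [10, 182]]
print(chi_square_2x2(table))
```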

General effect of correction

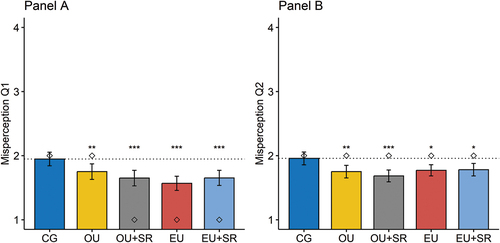

Our first hypothesis proposed that any type of correction would reduce misperceptions (H1a). To test this hypothesis, we visualized mean values with confidence intervals, as well as median locations, in Figure 1. P-values were calculated using Wilcoxon rank sum tests. Overall, we found support for H1a: compared to the control group, every correction video reduced the misperception. There is some evidence that, for misperception Q1, the expert without scientific reference condition (M = 1.57, SD = 0.78, Median = 1) was more successful than the ordinary user without scientific reference condition (M = 1.75, SD = 0.86, Median = 2). An additional test (W = 20594, p = 0.03) indicates that the difference between these two conditions is significant. Adjusting p-values for multiple comparisons across groups did not alter our key findings.Footnote3
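The text does not specify which p-value adjustment was used. As one common choice, the Holm step-down procedure (the default method of R's `p.adjust`) can be sketched as follows; the function name and the example p-values are illustrative only, not results from this study.

```python
def holm_adjust(pvalues):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, i in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * pvalues[i])
        # Enforce monotonicity: an adjusted p-value never falls below
        # the adjusted value of a smaller raw p-value.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical raw p-values from four treatment-vs-control comparisons.
print(holm_adjust([0.001, 0.004, 0.03, 0.20]))
```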

Figure 1. The bars show the mean values across the experimental groups, with 95% confidence intervals. The diamond symbols indicate the location of medians. Asterisks denote the p-value for the difference of each treatment group compared to the control group (CG). The p-values were calculated using Wilcoxon rank sum tests. The dotted line visualizes the benchmark mean value of the CG. The dependent variables represent the common misperception of avoiding talking about suicide with people who seem at risk.

We conducted a series of t-tests to examine group effects on mental health efficacy (H1b). However, we did not find any statistically significant effects of our treatments on mental health efficacy.

Effect of expert source

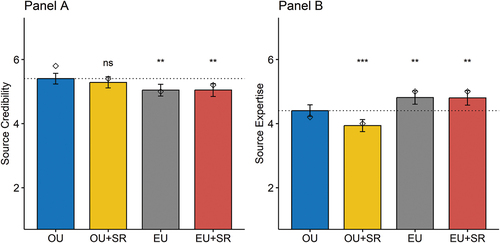

H2a stated that a person presented as an expert is more likely to be perceived as an expert. To test this hypothesis, we combined data from the two expert user conditions (with and without reference to a scientific study) and from the two ordinary user conditions (with and without reference to a scientific study). For mean values across the combined groups, see the appendix. We found support for this hypothesis (t(731.25) = 6.08, p < .001). H2b hypothesized that a person presented as an expert is more likely to be perceived as credible than a lay person. However, we found the opposite pattern: the expert user was perceived as less credible than the ordinary user (t(736.39) = −3.30, p = 0.001).
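The non-integer degrees of freedom reported here (e.g., 731.25) are characteristic of Welch's unequal-variance t-test, the default in R's `t.test`, whose degrees of freedom come from the Welch–Satterthwaite approximation. Assuming that test, the statistic and degrees of freedom for two pooled groups can be sketched as follows. The ratings are hypothetical, and the p-value, which requires the t distribution's CDF, is omitted to keep the sketch dependency-free.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(x, y):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    vx = variance(x) / len(x)  # squared standard error of mean(x)
    vy = variance(y) / len(y)  # squared standard error of mean(y)
    t = (mean(x) - mean(y)) / sqrt(vx + vy)
    df = (vx + vy) ** 2 / (vx ** 2 / (len(x) - 1) + vy ** 2 / (len(y) - 1))
    return t, df


# Pooling: concatenate the two expert cells and the two ordinary-user cells.
expert = [5, 6, 4, 7] + [6, 5, 5, 6]          # hypothetical ratings
ordinary_user = [4, 3, 5, 4] + [3, 4, 4, 2]   # hypothetical ratings
t, df = welch_t(expert, ordinary_user)
print(round(t, 2), round(df, 2))
```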

H3 proposed that content from a person presented as an expert would have higher credibility (a) and be more effective in reducing misperceptions (b) than content from a layperson. Our analysis did not support these predictions. Specifically, we found no significant difference in content credibility between the pooled expert user group and the pooled ordinary user group (t(744.45) = −1.64, p = 0.10). Similarly, there was no significant difference for misperception Q1 (W = 65818, p = 0.12) or Q2 (W = 73542, p = 0.17).

Effect of reference to scientific study

H4 suggested that content including references to scientific studies may receive higher credibility ratings (a) and be more effective in reducing misperceptions (b) than content without such references. To test this hypothesis, we compared the two conditions with scientific references (pooled) against the two conditions without them (pooled). Table A2 in the appendix shows the mean values across the pooled groups. Contrary to H4a, we found no evidence that references to scientific studies enhance content credibility (t(744.81) = −0.18, p = 0.86). Nor did we find any significant difference in misperception Q1 (W = 70159, p = 0.92) or Q2 (W = 71348, p = 0.58); H4b was therefore not supported.

H5 suggested that referencing scientific studies would lead to higher ratings of source credibility (a) and expertise (b). Although we found no effect of the scientific reference condition (pooled) on source credibility (t(739.92) = 0.65, p = 0.52), we did find a significant effect on perceived source expertise, in the opposite direction to our expectation: the presence of a scientific reference decreased perceived source expertise (t(732.85) = 2.15, p = 0.03).

RQ1 aimed to investigate the interaction between the presence of an expert and the presence of a scientific study. To answer this question, we visualized the effects for the unpooled groups in Figure 2. The figure is consistent with the pooled findings. Panel A shows that the mean source credibility values for both ordinary user groups are higher than those for the expert conditions, although an additional analysis indicates that the ordinary user with scientific reference condition does not differ significantly from any other group. Panel B shows the results for perceived source expertise. The group exposed to an ordinary user with a reference to a scientific study scored lowest on perceived source expertise, while the group exposed to the expert user scored highest. The figure also shows that the negative effect of a scientific reference on perceived source expertise occurs within the ordinary user condition but not within the expert condition. Finally, no significant effects were found on content credibility (not visualized in the figure).

Figure 2. This figure presents a comparison of mean values between experimental groups, along with 95% confidence intervals. The diamond symbols indicate the location of medians. Asterisks indicate the p-value for the difference of each group compared to the ordinary user group (OU). P-values are calculated using t-tests. The dotted line represents the benchmark mean value of OU. The dependent variables in this analysis are the perceived credibility of the woman in the video (Panel A) and the perceived expertise of the woman in the video (Panel B). CG = control group, OU = ordinary user, OU + SR = ordinary user with scientific reference, EU = expert user, EU + SR = expert user with scientific reference.
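Figure 2 addresses RQ1 visually. One way to summarize such a 2 × 2 interaction numerically, offered here only as a hypothetical illustration and not as the analysis reported above, is the difference-in-differences contrast of cell means: the effect of adding a scientific reference in the expert conditions minus its effect in the ordinary user conditions. The cell labels follow Figure 2; the function name and ratings are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def interaction_contrast(cells: Dict[str, List[float]]) -> float:
    """(EU + SR - EU) - (OU + SR - OU), computed on cell means.

    Positive values mean the scientific reference helps more (or hurts less)
    in the expert conditions than in the ordinary user conditions; zero means
    no interaction on the mean scale.
    """
    m = {name: mean(values) for name, values in cells.items()}
    sr_effect_expert = m["EU + SR"] - m["EU"]
    sr_effect_ordinary = m["OU + SR"] - m["OU"]
    return sr_effect_expert - sr_effect_ordinary


# Hypothetical perceived-expertise ratings in which the reference lowers
# ratings only for the ordinary user, similar to the pattern described above.
cells = {
    "EU": [6, 5, 6], "EU + SR": [6, 5, 6],
    "OU": [5, 4, 5], "OU + SR": [4, 3, 4],
}
print(interaction_contrast(cells))
```

A formal test of this contrast would additionally require its standard error, for example from a regression with an expert × reference interaction term.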

In an additional analysis, we used the Holm-Bonferroni method to correct the p-values for multiple comparisons. The adjusted p-values do not alter our key findings and are reported in the appendix (Table A4).
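The Holm-Bonferroni step-down adjustment can be sketched in a few lines. This version follows the standard definition: sort the m p-values, multiply the k-th smallest (counting from 1) by m − k + 1, enforce monotonicity, and cap the results at 1. The function name and example p-values are hypothetical.

```python
from typing import List, Sequence


def holm_adjust(pvalues: Sequence[float]) -> List[float]:
    """Holm-Bonferroni adjusted p-values, returned in the input order."""
    m = len(pvalues)
    order = sorted(range(m), key=lambda i: pvalues[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for k, i in enumerate(order):  # k = 0 for the smallest p-value
        # The k-th smallest p-value (0-based) is multiplied by m - k.
        candidate = min(1.0, (m - k) * pvalues[i])
        # Adjusted values must not decrease as raw p-values increase.
        running_max = max(running_max, candidate)
        adjusted[i] = running_max
    return adjusted


# Hypothetical raw p-values from four treatment-vs-control comparisons.
raw = [0.001, 0.030, 0.012, 0.200]
print(holm_adjust(raw))
```

Holm's procedure controls the family-wise error rate like the plain Bonferroni correction but is uniformly less conservative, since only the smallest p-value receives the full factor of m.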

Conditional treatment effects

H6a–d posited that treatment effects would be stronger for individuals with a) greater trust in science, b) higher levels of education, c) greater self-reported knowledge of mental health, and d) greater self-reported media literacy. However, we did not find a consistent pattern supporting these conditional effects. RQ2 asked whether treatment effects vary with a) TikTok use, b) the perceived credibility of TikTok as a source, and c) age. Again, we found no systematic pattern supporting such conditional effects. The complete analysis is available in the Supplemental Online Materials (file: supplemental analysis).
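The text does not specify how the conditional effects were modeled (the full analysis is in the supplemental materials). A common approach, assumed here purely for illustration, is a regression with a treatment × moderator interaction: y = b0 + b1·T + b2·M + b3·T·M, where T indicates exposure to a correction video and M is a moderator such as trust in science. The treatment effect at moderator level M is then b1 + b3·M, so conditional effects of the kind posited in H6 would appear as a non-zero b3. The sketch fits this model by ordinary least squares; all names and data are hypothetical.

```python
from typing import List, Sequence


def ols(X: List[List[float]], y: Sequence[float]) -> List[float]:
    """Least-squares coefficients from the normal equations (X'X) b = X'y,
    solved by Gaussian elimination with partial pivoting."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(col + 1, k):
            factor = A[r][col] / A[col][col]
            for c in range(col, k + 1):
                A[r][c] -= factor * A[col][c]
    b = [0.0] * k
    for i in reversed(range(k)):  # back substitution
        b[i] = (A[i][k] - sum(A[i][j] * b[j] for j in range(i + 1, k))) / A[i][i]
    return b


def moderation_model(treated: Sequence[int], moderator: Sequence[float],
                     outcome: Sequence[float]) -> List[float]:
    """Fit y = b0 + b1*T + b2*M + b3*T*M and return [b0, b1, b2, b3]."""
    X = [[1.0, float(t), float(m), float(t * m)] for t, m in zip(treated, moderator)]
    return ols(X, outcome)


# Hypothetical data: T = 1 for a correction video, M = trust in science.
T = [0, 0, 0, 0, 1, 1, 1, 1]
M = [2, 4, 5, 7, 2, 4, 5, 7]
y = [3.0, 3.1, 2.9, 3.2, 2.6, 2.1, 1.9, 1.4]
b0, b1, b2, b3 = moderation_model(T, M, y)
print(f"effect of T at M = m: {b1:.2f} + {b3:.2f} * m")
```

In practice one would also need standard errors for b3 (for example from a statistics package) before concluding that a moderation effect is present or absent.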

Additional exploratory analysis

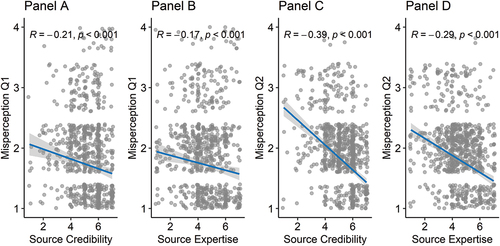

To better understand the small differences in misperceptions and content credibility between the expert and ordinary user conditions, we examined how source credibility and perceived source expertise relate to these outcomes. This analysis was not preregistered. As shown in Figure 3, higher levels of both source credibility and perceived source expertise are associated with lower misperceptions (Q1 and Q2). Because source credibility was higher in the ordinary user condition while perceived source expertise was higher in the expert user condition, the two pathways may offset each other, which could explain the negligible differences in misperceptions between the expert and ordinary user conditions. Even stronger (Pearson) correlations are observed between source credibility and content credibility (r = .81, p < .001) and between perceived source expertise and content credibility (r = .61, p < .001). This may also explain the lack of variation in content credibility between the expert and ordinary user conditions.

Figure 3. The scatterplots show the bivariate correlations (Spearman) between source credibility and misperception Q1 (Panel A), perceived source expertise and misperception Q1 (Panel B), source credibility and misperception Q2 (Panel C), and perceived source expertise and misperception Q2 (Panel D). Spearman’s rank correlation coefficient is shown along with its p-value. The blue line represents the fitted line with its confidence interval. Participants from the control group (CG) were excluded from this analysis.
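The text reports Pearson correlations, whereas Figure 3 reports Spearman's rank correlations. Spearman's coefficient is Pearson's r computed on ranks (with tied values given their average rank), so it captures monotonic rather than strictly linear association, which suits ordinal rating scales. A minimal sketch with hypothetical data and function names; p-values are omitted.

```python
import math
from typing import List, Sequence


def midranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1  # average of ranks i+1..j+1
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson's r: covariance divided by the product of standard deviations."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson's r on the (mid)ranks of x and y."""
    return pearson(midranks(x), midranks(y))


# Hypothetical source-credibility and misperception ratings for six viewers.
credibility = [6, 5, 7, 3, 4, 6]
misperception = [1, 2, 1, 4, 3, 2]
print(f"Spearman rho = {spearman(credibility, misperception):.2f}")
print(f"Pearson r = {pearson(credibility, misperception):.2f}")
```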

Discussion

In this study, we found that debunking a common mental health misperception in short-form videos can be effective regardless of whether it comes from a self-proclaimed expert or a layperson, and regardless of whether a scientific reference is provided. This is consistent with previous research demonstrating the effectiveness of debunking in general (Walter et al., 2021) and in short-form videos in particular (Bhargava et al., 2023).

While this effectiveness is encouraging from a normative perspective for our study and others aimed at debunking misinformation, it also raises concerns that malicious actors or genuinely misinformed individuals could misuse the medium to convince people of false information. Essentially, the impact of any given “corrective” message depends on whether it genuinely shares corrective information or disguises misinformation as correction. This observation is consistent with existing research suggesting that the corrective template can be used to reinforce misperceptions when inaccurate information is shared instead of accurate information (Vraga & Bode, 2022).

Our second finding reveals that while people acknowledged the expertise of a person identified as an expert, they surprisingly perceived this person as less credible and trustworthy. In fact, when the person in the video appeared as an ordinary user, this person was judged as more credible. This observation seems paradoxical at first glance, but it is consistent with the emphasis on authenticity on TikTok, where even advertisements are designed to appear as though they were created by regular users.

This desire for authenticity is particularly pronounced among adolescents (Vogels et al., 2022), who have turned to applications like BeReal that prioritize authenticity over novelty, aesthetics, and other attributes traditionally favored by social media (Davis, 2022). In our experiment, the desire for authenticity seems to have played a role in people trusting ordinary users more than experts. It may also help explain the rise of the social media influencer phenomenon. Influencers play a key role in disseminating health information and advice on social media, which is often linked to their ability to build parasocial relationships over time (Engel et al., 2023). However, our findings suggest that even simple authenticity cues, without a relationship built over time, may be sufficient to give health advice an authority similar to that of a self-described expert. It is worth noting that source credibility and perceived expertise were high in all four conditions that viewed a video about mental health (ranging from 3.94 to 5.40 on the 7-point scales), so the lower credibility of the expert does not merely reflect a general skepticism toward information on social media (Shearer & Mitchell, 2021).

Additionally, we found that referencing a scientific study did not increase belief in accurate information, nor did it improve credibility or increase the perceived expertise of the person sharing the information. In fact, participants rated a non-expert who shared a scientific study as having less expertise than one who did not cite a study. This effect may arise because citing a study reminds viewers that the ordinary user, unlike the study’s authors, is not an expert. It could also be related to a particular genre of TikTok video that purports to share a scientific study but does so in a misleading and “amateurish” way (Morbia, 2021). Perhaps people have come to recognize this trope and accord it less credibility as a result. If so, frequent TikTok users should show this pattern more strongly, yet we did not observe any variation in treatment effects across levels of TikTok use. The experimental manipulation of the scientific study was also relatively weak: while the study was mentioned (and the manipulation checks demonstrate that participants noticed it), it was not discussed in depth. A more thorough treatment of a scientific study might be perceived differently by participants.

Finally, we did not observe a statistically significant effect on mental health efficacy. One explanation is that, although the debunking message provided new information that could have strengthened efficacy, it may also have made participants aware of the inherent complexity of mental health issues, resulting in a neutral net effect.

Limitations

This study is not without its limitations. We included only a single scientific article in the stimulus material, tested a single topic, and used a single actor. Testing multiple versions of each of these features would have increased the generalizability of our findings (Thorson et al., 2012). For example, we cannot determine whether similar effects would emerge for political topics, or whether replacing the female actor with a male actor would yield comparable results.

Another limitation concerns the way we included the reference to the scientific study. Specifically, the title of the study is phrased as a question, which may have influenced participants’ perception of the information conveyed. In addition, we cannot be sure to what extent the effects found would generalize to real-life scenarios and to other video-based platforms. Nor do we know how these videos would be circulated and interpreted in the fast-paced TikTok environment, where users scroll through dozens of short-form videos in a short period of time, or how interactions such as likes and comments would affect the way the content is perceived. Likes and comments are an important way for younger generations to navigate online content, so future research should consider these specific affordances of TikTok.

Moreover, the TikTok algorithm may play an important role in determining what content people see and how they process it. Our research does not shed light on the extent to which typical users are exposed to such content; future research could use audit studies to examine characteristics of the algorithm in this regard. Finally, we used a convenience sample from an online panel, and about 24% of participants reported rarely or never using TikTok. Although we did not find heterogeneous treatment effects conditional on TikTok use, future research could investigate whether active TikTok users process short videos differently, especially in real-world situations. In addition, preexisting biases or perceptions of experts and science may also influence the reception of debunking videos, which warrants more detailed exploration.

Conclusion

The results of this study have direct applications for both social media users and health professionals in terms of how they communicate on social media platforms such as TikTok. First, we should empower laypeople to correct misinformation when they are qualified to do so. Correction is effective not only when it comes from experts but also when it comes from laypeople, and it has the potential to reduce the impact of misinformation by decreasing belief in it. At the same time, we should equip users with the tools to distinguish truthful information from information intended to deceive, since correction can serve nefarious purposes as well as noble ones.

It is important to note that there are two routes to correction: being perceived as an expert or being perceived as credible and trustworthy. This means that ordinary users can be involved in correcting misinformation and can have a meaningful impact if they are perceived as trustworthy sources (Heiss et al., 2023). It also means that public communication campaigns do not necessarily need to hire an expert as a spokesperson, which may open up opportunities for organizations with fewer resources (Mataira et al., 2014) or limited access to expert networks. On the flip side, however, non-experts can also communicate sensitive content effectively, which can contribute to the spread of misinformation (Engel et al., 2023).

Finally, this study can also serve as a call to action for more guidance for users of platforms like TikTok, who may have difficulty judging which sources and information on these platforms to trust. To address this, policymakers need to focus on improving media and health literacy in K-12 education, as young people are the most frequent users of these platforms (Vogels et al., 2022). At the same time, platforms should actively help users identify credibility cues by making these cues more visible and understandable. Informed users and a healthy information environment play a crucial role in disseminating accurate and corrective information while reducing the appeal of misinformation.

Disclaimer

All authors have agreed to the submission and confirm that the article is not currently being considered for publication by any other print or electronic journal.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article was originally published with errors, which have now been corrected in the online version. Please see Correction (http://dx.doi.org/10.1080/10410236.2024.2324488)

Additional information

Funding

Notes

1. In the pre-registration, we initially differentiated between perceived trustworthiness and credibility as distinct constructs. After pre-registering, however, we slightly revised the wording of our survey items and consolidated these two constructs into a single dimension. This change also applies to H5.

2. Link to pre-registration, data, questionnaire, stimulus material, and supplemental analysis: https://osf.io/zm7ew/?view_only=0a0a4c8850394334a5a7310a281c9c96.

3. In our pre-registration, we also predicted that corrections may increase participants’ mental health efficacy. Furthermore, we predicted that the effects of our treatment might be stronger for individuals with higher education, mental health knowledge, self-reported media literacy, and trust in science. We did not find systematic support for any of these expected relationships (see supplemental analysis in the supplemental online materials https://osf.io/zm7ew/?view_only=0a0a4c8850394334a5a7310a281c9c96).

References

- Achterberg, P., de Koster, W., & van der Waal, J. (2017). A science confidence gap: Education, trust in scientific methods, and trust in scientific institutions in the United States, 2014. Public Understanding of Science, 26(6), 704–720. https://doi.org/10.1177/0963662515617367

- Allen, M., Bruflat, R., Fucilla, R., Kramer, M., McKellips, S., Ryan, D. J., & Spiegelhoff, M. (2000). Testing the persuasiveness of evidence: Combining narrative and statistical forms. Communication Research Reports, 17(4), 331–336. https://doi.org/10.1080/08824090009388781

- Arendt, F. (2019). Suicide on Instagram–content analysis of a German suicide-related hashtag. Crisis, 40(1), 36–41. https://doi.org/10.1027/0227-5910/a000529

- Bandura, A. (1997). Self-efficacy: The exercise of control. W. H. Freeman.

- Barta, K., & Andalibi, N. (2021). Constructing authenticity on TikTok: Social norms and social support on the “Fun” platform. Proceedings of the ACM on Human-Computer Interaction, 5(CSCW2), 1–29. https://doi.org/10.1145/3479574

- Basch, C. H., Donelle, L., Fera, J., & Jaime, C. (2022). Deconstructing TikTok videos on mental health: Cross-sectional, descriptive content analysis. JMIR Formative Research, 6(5), e38340. https://doi.org/10.2196/38340

- Bhargava, P., MacDonald, K., Newton, C., Lin, H., & Pennycook, G. (2023). How effective are TikTok misinformation debunking videos? Harvard Kennedy School Misinformation Review. https://doi.org/10.37016/mr-2020-114

- Blades, C. A., Stritzke, W. G., Page, A. C., & Brown, J. D. (2018). The benefits and risks of asking research participants about suicide: A meta-analysis of the impact of exposure to suicide-related content. Clinical Psychology Review, 64, 1–12. https://doi.org/10.1016/j.cpr.2018.07.001

- Blanchard, L., Conway-Moore, K., Aguiar, A., Önal, F., Rutter, H., Helleve, A., Nwosu, E., Falcone, J., Savona, N., Boyland, E., & Knai, C. (2023). Associations between social media, adolescent mental health, and diet: A systematic review. Obesity Reviews, 24(S2), Article e13631. https://doi.org/10.1111/obr.13631

- Bode, L., & Vraga, E. (2021). The Swiss cheese model for mitigating online misinformation. Bulletin of the Atomic Scientists, 77(3), 129–133. https://doi.org/10.1080/00963402.2021.1912170

- Bode, L., & Vraga, E. K. (2015). In related news, that was wrong: The correction of misinformation through related stories functionality in social media. Journal of Communication, 65(4), 619–638. https://doi.org/10.1111/jcom.12166

- Boothby, C., Murray, D., Waggy, A. P., Tsou, A., & Sugimoto, C. R. (2021). Credibility of scientific information on social media: Variation by platform, genre and presence of formal credibility cues. Quantitative Science Studies, 2(3), 845–863. https://doi.org/10.1162/qss_a_00151

- Borah, P., Kim, S., Xiao, X., & Lee, D. K. L. (2022). Correcting misinformation using theory-driven messages: HPV vaccine misperceptions, information seeking, and the moderating role of reflection. Atlantic Journal of Communication, 30(3), 316–331. https://doi.org/10.1080/15456870.2021.1912046

- CDC. (2021). Youth risk behavior surveillance data summary & trends report: 2011-2021. https://www.cdc.gov/healthyyouth/data/yrbs/pdf/YRBS_Data-Summary-Trends_Report2023_508.pdf

- Chaiken, S. (1987). The heuristic model of persuasion. In M. P. Zanna, J. M. Olson & C. P. Herman (Eds.), Social influence: The Ontario symposium (Vol. 5, pp. 3–39). Lawrence Erlbaum Associates, Inc.

- Davis, W. (2022). BeReal is Gen Z’s new favorite social media app. Here’s how it works. National Public Radio. https://www.npr.org/2022/04/16/1092814566/bereal-app-gen-z-download

- Dazzi, T., Gribble, R., Wessely, S., & Fear, N. T. (2014). Does asking about suicide and related behaviours induce suicidal ideation? What is the evidence? Psychological Medicine, 44(16), 3361–3363. https://doi.org/10.1017/S0033291714001299

- Douglas, B. D., Ewell, P. J., Brauer, M., & Hallam, J. S. (2023). Data quality in online human-subjects research: Comparisons between MTurk, Prolific, CloudResearch, Qualtrics, and SONA. PLOS One, 18(3), Article e0279720. https://doi.org/10.1371/journal.pone.0279720

- Engel, E., Gell, S., Heiss, R., & Karsay, K. (2023). Social media influencers and adolescents’ health: A scoping review of the research field. Social Science & Medicine, 340, Article 116387. https://doi.org/10.1016/j.socscimed.2023.116387

- Freeman, K. S., & Spyridakis, J. H. (2009). Effect of contact information on the credibility of online health information. IEEE Transactions on Professional Communication, 52(2), 152–166. https://doi.org/10.1109/TPC.2009.2017992

- Gesser-Edelsburg, A., Diamant, A., Hijazi, R., Mesch, G. S., & Angelillo, I. F. (2018). Correcting misinformation by health organizations during measles outbreaks: A controlled experiment. PLOS One, 13(12), Article e0209505. https://doi.org/10.1371/journal.pone.0209505

- Guillory, J. J., & Geraci, L. (2013). Correcting erroneous inferences in memory: The role of source credibility. Journal of Applied Research in Memory and Cognition, 2(4), 201–209. https://doi.org/10.1016/j.jarmac.2013.10.001

- Hart, A. (2001). Mann-Whitney test is not just a test of medians: Differences in spread can be important. BMJ, 323(7309), 391–393. https://doi.org/10.1136/bmj.323.7309.391

- Heiss, R., Nanz, A., Knupfer, H., Engel, E., & Matthes, J. (2023). Peer correction of misinformation on social media: (In)civility, success experience, and relationship consequences. New Media & Society. Advance online publication. https://doi.org/10.1177/14614448231209946

- Heiss, R., & Rudolph, L. (2022). Patients as health influencers: Motivations and consequences of following cancer patients on Instagram. Behaviour & Information Technology, 42(6), 806–815. https://doi.org/10.1080/0144929X.2022.2045358

- Jones, M. D., & Crow, D. A. (2017). How can we use the ‘science of stories’ to produce persuasive scientific stories? Palgrave Communications, 3(1), Article 53. https://doi.org/10.1057/s41599-017-0047-7

- Kahne, J., & Bowyer, B. (2017). Educating for democracy in a partisan age: Confronting the challenges of motivated reasoning and misinformation. American Educational Research Journal, 54(1), 3–34. https://doi.org/10.3102/0002831216679817

- Kennedy, B., Tyson, A., & Funk, C. (2022). Americans’ trust in scientists, other groups declines. Pew Research Center. https://www.pewresearch.org/science/2022/02/15/americans-trust-in-scientists-other-groups-declines/

- Knoll, J., Matthes, J., & Heiss, R. (2020). The social media political participation model: A goal systems theory perspective. Convergence: The International Journal of Research into New Media Technologies, 26(1), 135–156. https://doi.org/10.1177/1354856517750366

- Litman, L., Robinson, J., & Abberbock, T. (2017). TurkPrime.com: A versatile crowdsourcing data acquisition platform for the behavioral sciences. Behavior Research Methods, 49(2), 433–442. https://doi.org/10.3758/s13428-016-0727-z

- Mataira, P. J., Morelli, P. T., Matsuoka, J. K., & Uehara McDonald, S. (2014). Shifting the paradigm: New directions for non-profits and funders in an era of diminishing resources. Social Business, 4(3), 231–244. https://doi.org/10.1362/204440814X14103454934212

- Matsa, K. E. (2022). More Americans are getting news on TikTok, bucking the trend on other social media sites. Pew Research Center. https://www.pewresearch.org/fact-tank/2022/10/21/more-americans-are-getting-news-on-tiktok-bucking-the-trend-on-other-social-media-sites/

- McCashin, D., & Murphy, C. M. (2022). Using TikTok for public and youth mental health–A systematic review and content analysis. Clinical Child Psychology and Psychiatry. Advance online publication. https://doi.org/10.1177/13591045221106608

- Morbia, R. (2021, November 16). TikTok teaching? U of T researchers study the social media platform’s use in academia. University of Toronto News. https://www.utoronto.ca/news/tiktok-teaching-u-t-researchers-study-social-media-platform-s-use-academia

- Nilsen, J., Fagan, K., Dreyfuss, E., & Donovan, J. (2022). TikTok, the war on Ukraine, and 10 features that make the app vulnerable to misinformation. The Media Manipulation Casebook. https://mediamanipulation.org/research/tiktok-war-ukraine-and-10-features-make-app-vulnerable-misinformation

- O’Sullivan, N. J., Nason, G., Manecksha, R. P., & O’Kelly, F. (2022). The unintentional spread of misinformation on ‘TikTok’: A paediatric urological perspective. Journal of Pediatric Urology, 18(3), 371–375. https://doi.org/10.1016/j.jpurol.2022.03.001

- Ohanian, R. (1990). Construction and validation of a scale to measure celebrity endorsers’ perceived expertise, trustworthiness, and attractiveness. Journal of Advertising, 19(3), 39–52. https://doi.org/10.1080/00913367.1990.10673191

- Pasquetto, I. V., Jahani, E., Atreja, S., & Baum, M. (2022). Social debunking of misinformation on WhatsApp: The case for strong and in-group ties. Proceedings of the ACM on Human-Computer Interaction, 6(CSCW1), 1–35. https://doi.org/10.1145/3512964

- PlushCare. (2022). How accurate is mental health advice on TikTok? https://plushcare.com/blog/tiktok-mental-health/

- Polihronis, C., Cloutier, P., Kaur, J., Skinner, R., & Cappelli, M. (2022). What’s the harm in asking? A systematic review and meta-analysis on the risks of asking about suicide-related behaviors and self-harm with quality appraisal. Archives of Suicide Research, 26(2), 325–347. https://doi.org/10.1080/13811118.2020.1793857

- Pretorius, C., McCashin, D., & Coyle, D. (2022). Mental health professionals as influencers on TikTok and Instagram: What role do they play in mental health literacy and help-seeking? Internet Interventions, 30, 100591. https://doi.org/10.1016/j.invent.2022.100591

- Putnam, A. L., & Phelps, R. J. (2017). The citation effect: In-text citations moderately increase belief in trivia claims. Acta Psychologica, 179, 114–123. https://doi.org/10.1016/j.actpsy.2017.07.010

- Shearer, E., & Mitchell, A. (2021). News use across social media platforms in 2020. Pew Research Center. https://www.pewresearch.org/journalism/2021/01/12/news-use-across-social-media-platforms-in-2020/

- Smith, C. N., & Seitz, H. H. (2019). Correcting misinformation about neuroscience via social media. Science Communication, 41(6), 790–819. https://doi.org/10.1177/1075547019890073

- Thorson, E., Wicks, R., & Leshner, G. (2012). Experimental methodology in journalism and mass communication research. Journalism & Mass Communication Quarterly, 89(1), 112–124. https://doi.org/10.1177/1077699011430066

- Valkenburg, P. M., Meier, A., & Beyens, I. (2022). Social media use and its impact on adolescent mental health: An umbrella review of the evidence. Current Opinion in Psychology, 44, 58–68. https://doi.org/10.1016/j.copsyc.2021.08.017

- Vogels, E. A., Gelles-Watnick, R., & Massarat, N. (2022). Teens, social media and technology 2022. Pew Research Center. https://www.pewresearch.org/internet/2022/08/10/teens-social-media-and-technology-2022/

- Vraga, E. K., & Bode, L. (2017). Using expert sources to correct health misinformation in social media. Science Communication, 39(5), 621–645. https://doi.org/10.1177/1075547017731776

- Vraga, E. K., & Bode, L. (2018). I do not believe you: How providing a source corrects health misperceptions across social media platforms. Information, Communication & Society, 21(10), 1337–1353. https://doi.org/10.1080/1369118X.2017.1313883

- Vraga, E. K., & Bode, L. (2022). Correcting what’s true: Testing competing claims about health misinformation on social media. American Behavioral Scientist. Advance online publication. https://doi.org/10.1177/00027642221118252

- Vraga, E. K., Kim, S. C., Cook, J., & Bode, L. (2020). Testing the effectiveness of correction placement and type on Instagram. The International Journal of Press/Politics, 25(4), 632–652. https://doi.org/10.1177/194016122091908

- Vrontis, D., Makrides, A., Christofi, M., & Thrassou, A. (2021). Social media influencer marketing: A systematic review, integrative framework and future research agenda. International Journal of Consumer Studies, 45(4), 617–644. https://doi.org/10.1111/ijcs.12647

- Walter, N., Brooks, J. J., Saucier, C. J., & Suresh, S. (2021). Evaluating the impact of attempts to correct health misinformation on social media: A meta-analysis. Health Communication, 36(13), 1776–1784. https://doi.org/10.1080/10410236.2020.1794553

- Wang, Z., Walther, J. B., Pingree, S., & Hawkins, R. P. (2008). Health information, credibility, homophily, and influence via the Internet: Web sites versus discussion groups. Health Communication, 23(4), 358–368. https://doi.org/10.1080/10410230802229738

- Xu, Y., Margolin, D., & Niederdeppe, J. (2020). Testing strategies to increase source credibility through strategic message design in the context of vaccination and vaccine hesitancy. Health Communication, 36(11), 1354–1367. https://doi.org/10.1080/10410236.2020.1751400

Appendices

Appendix A.

Additional analyses

Table A1. Correlations among dependent variables (Spearman).

Table A2. Mean values with standard deviation across experimental groups (pooled).

Table A3. Mean values with standard deviation across experimental groups. Additionally, medians are reported for Misperception Q1 and Q2.

Table A4. Adjusted p-values for multigroup comparison.

Appendix B.

Examples of stimulus materials

Figure B1. In the expert videos (left), a caption with the title “Dr. Linda Hearst, MPD” was added. In the ordinary user videos (right), the caption consists only of a greeting message and the person’s name. In the main part of the videos with the scientific article (bottom), the scientific study appears in the background.