ABSTRACT

Purpose

An inferential comprehension intervention addressing reading comprehension difficulties of middle schoolers was tested.

Method

Students in Grades 6 to 8 (n = 145; 53.8% female; 71% White; 24% Black) who failed their state literacy test were randomly assigned to tutor-led, computerized, or business-as-usual (BaU) interventions.

Results

The tutor-led intervention produced significant effects compared to BaU (g = .40) and computer-led (g = .30) on inference types instructed in the intervention. Students with adequate word reading in tutor-led gained more compared to BaU on WIAT-III Reading Comprehension. Boys gained more from tutor-led and BaU vs. computer-led on several measures.

Conclusion

Inferential comprehension is malleable in middle school, but adequate word reading may be important. The effects for boys vs. girls suggest the need to understand intervention factors beyond the content and instructional procedures of interventions. Findings are discussed with reference to theories of inference and reading comprehension as well as the literature on technology-aided literacy interventions.

Reading comprehension difficulties are associated with a host of negative downstream effects not only for academic achievement and high school graduation (e.g., Balfanz et al., 2017), but also for mental and physical health (e.g., Maynard et al., 2015). It is problematic, then, that reading comprehension levels in adolescents are low (and falling) in the U.S., with only 34% of 8th graders scoring at or above the Proficient level on the pre-pandemic National Assessment of Educational Progress (NAEP, 2019), and with even more sobering findings from the 2022 NAEP. Of great concern are findings for students with disabilities: the achievement gap between students with and without disabilities is large and grows across the grades (Gilmour et al., 2019). These findings point to the importance of designing and testing interventions for older students who experience significant difficulties with reading comprehension.

Given the many reading skills, cognitive skills, and sources of knowledge that have been correlated with reading comprehension, determining which of these accounts for the largest differences between skilled and less skilled adolescent readers – what have been called pressure points for reading comprehension (Compton et al., 2014; Perfetti & Stafura, 2014) – is one place to start in terms of informing intervention targets. The purpose of the current study was to test an inferential reading comprehension intervention for middle school students at risk for low reading achievement. Inference-making was targeted based on the centrality of inference to process models of reading comprehension (e.g., Kintsch, 1988; Van den Broek et al., 2005), cross-sectional research demonstrating the significant contribution of inference to reading comprehension for adolescents (e.g., Ahmed et al., 2016; Cromley & Azevedo, 2007), and intervention research suggesting that inference-making skill is malleable to instruction (e.g., Elleman, 2017). Furthermore, given reduced availability of personnel to provide intensive literacy interventions in middle school (Wanzek et al., 2011), the current study also tested two versions of the same intervention: one group received the intervention in traditional, tutor-led small groups and the other received the same intervention content delivered by computer.

Inference-making and reading comprehension

Cognitive process models of reading comprehension (e.g., the Construction-Integration model [Kintsch, 1988]; the Landscape Model [Van den Broek et al., 2005]) privilege knowledge and inference-making as critical contributors to skilled reading comprehension (McNamara & Magliano, 2009). Component skills models such as the Direct and Inferential Mediation (DIME) framework (Cromley & Azevedo, 2007) and the Direct and Indirect Effects Model of Reading (DIER; Kim, 2020) also propose that inferencing and verbal knowledge contribute to reading comprehension. In a test of the DIME model in secondary school students, inference-making followed by verbal knowledge (vocabulary and background knowledge) exerted the largest direct effects on reading comprehension (Ahmed et al., 2016). Ahmed et al. (2016) also found that inference-making mediated the effects of verbal knowledge on reading comprehension (see Silva & Cain, 2015, and Cromley et al., 2010, for similar findings in younger and older students, respectively).

Studies of different types of inference-making have found that inference-making difficulties of middle school students with reading comprehension difficulties are ubiquitous. They have difficulty making inferences that support the accurate interpretation of text through linking of words and sentences (Barth et al., 2015), using knowledge to rapidly fill in gaps in the text (Barnes et al., 2015), and correctly assigning pronouns to their referents (Denton et al., 2015). These adolescents have difficulties making inferences that produce a coherent text-based representation that requires the linking of connected propositions in the text, as well as inferences that are necessary for the construction of a high-quality situation model, in which knowledge is integrated with the text-base to produce a mental representation of the real-world situation described by the text (Kintsch, 1988). These findings suggest that inference-making is a potential target for intervention in middle school students and that several aspects of inference-making would need to be addressed.

Is there any evidence that inference-making interventions are effective in general, and specifically for middle school students with reading comprehension difficulties? Studies of inference-making interventions, systematically reviewed by Elleman (2017) and Hall (2016), indicate that students with reading difficulties across grade levels can improve in their ability to make inferences with small-group inference instruction. However, these reviews revealed several shortcomings of the evidence base that limit conclusions regarding the effects of inference-making interventions for adolescents. Most inference intervention studies are older (i.e., published before 1990), tested interventions of relatively short duration (50% lasting less than 5 hours), and included small numbers of participants (i.e., samples < 50). Both reviews excluded several studies due to problems with study designs, such as inadequate comparison or control groups. Furthermore, most studies with students with reading difficulties were conducted with children below sixth grade (Hall, 2016); of the studies conducted with middle schoolers, only one included students with reading comprehension difficulties (Elleman, 2017). The types of inferences instructed focused primarily on situation model inferences; few addressed inferences important for constructing both text-based and situation model representations. Most studies used only narrative text, even though the ability to comprehend expository text becomes increasingly important with the shift to learning from disciplinary text in the secondary grades (Shanahan & Shanahan, 2012). Given that expository texts are more difficult to remember and comprehend (Mar et al., 2021) and make inferences from (Clinton et al., 2020), it is important that inference-making be taught using the types of texts that students frequently encounter and that are important for their learning. Finally, most studies used researcher-created proximal measures of inference outcomes and few studies assessed general reading comprehension outcomes using standardized measures. Although weaknesses within the inference-making intervention literature make it difficult to draw confident conclusions, findings from these two reviews (also see Barth & Elleman, 2017) suggest that inference-making interventions may be effective for increasing inferential reading comprehension for students with reading comprehension difficulties.

The current study was meant to address some of these gaps in knowledge and the limitations of previous studies. We accomplished this by using an experimental, group design (i.e., a randomized controlled trial); working with middle school students in Grades 6 to 8 who were identified by their schools with difficulties in literacy based on state English Language Arts (ELA) testing; creating an intervention of significant duration (26 lessons) with many opportunities to practice, respond and obtain feedback; using a roughly equal ratio of expository to narrative texts; providing instruction on inferences needed to construct both text-level and situation model representations; and measuring outcomes on both proximal and distal measures of inferential and general reading comprehension.

Technology-based interventions for middle school readers

A major difficulty in working with older students with reading difficulties has to do with the feasibility of providing supplemental interventions (Wanzek et al., 2011), as middle schools in the United States are usually much larger than elementary schools and often have fewer staff available for providing small-group instruction. One potential solution to this problem is computer-assisted intervention. If computer-delivered instruction is at least as effective as teacher-led instruction in middle school, it would offer schools a cost-effective option for providing supplemental reading interventions.

However, the literature on improving reading through technology for middle school students with or at risk for reading disabilities is sparse, and previous findings are mixed. Based on What Works Clearinghouse (WWC) reports, Read 180®, which was designed for students with reading difficulties and integrates both computer and teacher-led components in a blended intervention, had positive effects on adolescents’ comprehension (What Works Clearinghouse, Institute of Education Sciences, 2016), but it is unknown whether the computerized or teacher-led components are more responsible for these effects. A synthesis of secondary-school reading interventions found that the use of technology per se was not associated with significant effects, although there were positive effects for older, more established programs such as Read 180® and Passport Reading Journeys (Baye et al., 2019).

In the middle school context, where technology-based interventions have significant advantages in terms of their feasibility, studies have not compared computerized and small-group, tutor-led versions of the same intervention (but see Xu et al., 2019). As a result, relatively little is known about the contexts and student populations in which teacher-delivered and computerized interventions might be most effective (Edyburn, 2011). For example, although computerized programs may be generally effective for typically achieving students, students with reading difficulties might benefit more from small-group interventions. Tutors may provide individualized supports for students in areas of difficulty (e.g., word reading, vocabulary/background knowledge) not directly addressed during the computerized intervention. Tutors and peers may also provide situation-specific emotional supports for learning. Further, boys and girls may respond differentially to technology-based interventions, an issue that is further discussed below. Finally, a systematic review has found that comprehension is better when reading the same expository texts from paper versus on a computer screen (Clinton, 2019); however, whether this is also true for middle school students with reading difficulties is not known.

Moderators of reading interventions

Despite a general call in the learning disabilities field to test potential moderators of intervention effects (Fuchs & Fuchs, 2019), there are relatively few studies that have done so for adolescents with reading comprehension difficulties. A few studies have tested whether pre-intervention reading skills moderate the effects of comprehension interventions for middle school students. Although it did not include only students with reading difficulties, a study that compared the effects of a weekly computer-based text structure strategy program and business-as-usual teacher-led sessions found that middle school students with higher initial comprehension skill gained more from the computer-based intervention than those with lower initial comprehension skill (Wijekumar et al., 2017). In a secondary analysis of an intervention that blended computer-based, peer-mediated, and teacher-led components for middle school students with reading difficulties, Clemens et al. (2019) found that neither word reading nor vocabulary moderated the effects of the intervention compared to business as usual (BaU); however, students in the intervention who started with lower oral reading fluency made greater gains in reading comprehension compared to similar students in BaU, suggesting a compensatory effect of the intervention for students with lower general reading achievement. Vaughn et al. (2020) found minimal response to an intensive reading comprehension intervention in 4th grade students when word reading proficiency at pretest was very low, suggesting that difficulty in adequately accessing the words in text was detrimental for being able to take advantage of text-based reading comprehension instruction. Wanzek et al. (2019) found that neither initial background knowledge nor reading achievement moderated the effects of a social studies intervention for 8th graders, suggesting more universal effects of their classroom-based intervention on the acquisition of content knowledge and content-based reading comprehension. In the current study we tested whether initial word reading efficiency and reading comprehension moderated intervention effects.

Computerized and tutor-led versions of the same intervention might also be differentially effective depending on other student characteristics, the most obvious one being sex (see Footnote 1). An analysis of data on the Programme for International Student Assessment from 26 countries found, for example, that although boys demonstrated overall lower reading achievement than girls, this achievement gap in reading was smaller for computer-delivered versus paper-based assessments, an effect that was interpreted as reflecting the significantly higher rates with which boys reported playing video games compared to girls (Borgonovi, 2016). Less is known about whether boys and girls respond differentially to computerized interventions, however. Some studies have shown a lack of significant differential effects for boys and girls for some types of technology-based interventions (Papastergiou, 2009), including for literacy interventions (e.g., Cheung & Slavin, 2012), while others have suggested that boys may benefit more from computerized word reading programs than girls (Hughes et al., 2013). Studies using a web-based text structure intervention have variously found no differences between boys and girls (Wijekumar et al., 2014, 2017), as well as differences favoring boys in some cases and girls in others (e.g., Meyer et al., 2018), the reasons for which are unclear. Whether effects of computer delivery compared with tutor delivery of the same intervention differ for boys and girls is a question that we asked in this study.

Current study

The effects of a small-group, tutor-delivered version and a computer-delivered version of a novel inferential comprehension intervention, Connecting Text through Inference and Technology (Connect-IT), were compared to each other and with the effects of schools’ BaU literacy interventions. Pre-intervention word reading efficiency, reading comprehension level, and sex were tested as potential moderators of intervention effects. We hypothesized that the two groups who received the intervention (i.e., either the computer- or the tutor-led version of Connect-IT) would have higher scores on a researcher-created measure of skill generating the types of inferences instructed in the intervention. Given the scarcity of inference intervention studies that have measured standardized reading comprehension outcomes (Elleman, 2017), alongside findings from systematic reviews of intervention research with older students with reading comprehension difficulties showing small or no effects on reading comprehension achievement for a variety of reading comprehension interventions (e.g., Scammacca et al., 2016), we predicted smaller, and perhaps not statistically significant, effects on the standardized measure of general reading comprehension. In terms of moderator effects, we thought that because our intervention was comprehension-focused and did not teach or support word reading (similar to Vaughn et al., 2020), students with relatively better word reading might show larger effects on comprehension of our text-based instruction. Given the few studies with mixed findings on whether starting levels of reading comprehension moderate effects of comprehension-focused interventions, we did not have a directional hypothesis. Similarly, we did not have directional hypotheses about whether sex moderates effects of the two versions of the Connect-IT intervention when compared to each other and to BaU.
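A moderator hypothesis of this kind is often formalized as a treatment-by-moderator interaction in a regression model. The sketch below is an illustrative assumption, not the model reported in this study; the symbols are introduced here only for exposition. For a single contrast (for example, tutor-led vs. BaU), let $T_i$ indicate condition, $M_i$ be the moderator (pretest word reading efficiency, pretest reading comprehension, or sex), and $Y_i^{\text{pre}}$ and $Y_i^{\text{post}}$ be pretest and posttest scores on the outcome:

```latex
\[
Y_i^{\text{post}} = \beta_0 + \beta_1 T_i + \beta_2 M_i
  + \beta_3 \,(T_i \times M_i) + \beta_4 Y_i^{\text{pre}} + \varepsilon_i
\]
```

Under this assumed specification, $\beta_3$ carries the moderation effect: a positive $\beta_3$ with $M_i$ coded as word reading efficiency would correspond to the directional hypothesis that students with better word reading benefit more, whereas the non-directional hypotheses for reading comprehension and sex amount to asking only whether $\beta_3 \neq 0$.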

Method

Research design

The study was conducted in three middle schools (serving Grades 6, 7, and 8) in three school districts in the state of Texas. The study was approved by Institutional Review Boards at the University of Texas at Austin and at Texas A&M University in accordance with US Federal Policy for the Protection of Human Subjects. To be eligible for the study, students had to be identified, in step 1, by their schools as having difficulties in literacy (reading comprehension and writing) based on failing their previous year’s state ELA accountability test. IRB-approved consent forms were distributed to all identified students. Students whose parents provided informed consent and who assented to participate were eligible for screening (step 2). The 145 children who met the screening criteria (at or below the 40th percentile on a standardized test of reading comprehension described in Measures) were then randomized to one of three conditions by an analyst blind to condition: Connect-IT computer, Connect-IT tutor, or business-as-usual (BaU) control. Due to the relatively small sample size across three grades, a stratified randomization procedure was conducted for each school separately by grade level (i.e., three levels, one for each grade). Within each stratum, students were then randomly assigned to one of the three conditions.
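The stratified procedure described above (random assignment to three conditions within each school-by-grade stratum) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' actual allocation script: the function name `stratified_assign`, the condition labels, the seed, and the roster are all hypothetical, and the balancing rule (shuffle within stratum, then deal students across a randomly ordered list of conditions) is one common choice among several.

```python
import random
from collections import defaultdict

# Illustrative condition labels (assumed, not taken from study materials).
CONDITIONS = ("computer", "tutor", "bau")


def stratified_assign(students, conditions=CONDITIONS, seed=0):
    """Randomly assign students to conditions within school-by-grade strata.

    `students` is an iterable of (student_id, school, grade) tuples.
    Returns a dict mapping student_id -> condition.
    """
    rng = random.Random(seed)  # fixed seed makes the allocation reproducible

    # Group student ids by stratum (school, grade).
    strata = defaultdict(list)
    for student_id, school, grade in students:
        strata[(school, grade)].append(student_id)

    assignment = {}
    for key in sorted(strata):  # sorted for a stable processing order
        ids = strata[key]
        rng.shuffle(ids)        # random order of students within the stratum
        order = list(conditions)
        rng.shuffle(order)      # randomize which condition receives any extra student
        for i, student_id in enumerate(ids):
            assignment[student_id] = order[i % len(order)]
    return assignment


# Hypothetical roster: 3 schools x 3 grades x 7 students, with made-up ids.
roster = [(f"s{school}g{grade}n{k}", school, grade)
          for school in "ABC" for grade in (6, 7, 8) for k in range(7)]
allocation = stratified_assign(roster, seed=42)
```

Dealing students across a shuffled condition order keeps the three arms within one student of each other in every stratum, which is the practical reason for stratifying when the sample is small relative to the number of school-by-grade cells.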

The 40th percentile has been used in a number of studies to indicate reading difficulties or as a cut-point for assignment to reading intervention (e.g., Al Otaiba et al., 2014; Hock et al., 2009; Spencer et al., 2019; Vellutino et al., 1996). The 40th percentile has also been used by some states to operationally define the lower bound of grade-level performance on reading tests (see Foorman et al., 2010). We did not screen for word reading because: 1) middle school students in the U.S. with reading comprehension difficulties commonly have overlapping weaknesses in word reading accuracy and/or fluency, with few demonstrating isolated problems in reading comprehension (Cirino et al., 2013), suggesting that students low in reading comprehension with a range of word reading efficiency skills be included for reasons of external validity; and 2) variability is recommended for testing moderator effects even in low-performing samples (Preacher & Sterba, 2019).

Note that an additional five students who were nominated by their schools because they failed their state ELA test but scored above the 40th percentile on the standardized reading comprehension screener were also randomized to condition at the request of their schools. Because the population of interest in this study is students with reading comprehension difficulties, we did not analyze the data from these five cases in order to improve study external validity. Although the inclusion of these students in randomization might complicate the randomness of the allocation by changing the probabilities of assignment, we think that the impact of this potential risk is minimal to negligible.

Participants

Participants were 145 students in Grades 6, 7, and 8. In the year in which the study was conducted (fall and spring semesters of the 2018–2019 school year), the three schools were classified as economically disadvantaged based on percentages of students who qualified for free or reduced-price meals (75.3%, 78.5%, and 81.8%). Across grade levels, between 33% and 58% of students attending these schools attained grade-level proficiency in reading according to the state accountability literacy test.

As can be seen in Table 1, the majority of participants were female (53.8%) and White (71%). In addition, 6.9% of the students received special education services and 14.5% were classified as English learners. Attrition was low at 5.5% (8 students; 3 moved and 5 refused to return to intervention classes mid-intervention). Differential attrition was within acceptable bounds (ranging from 0.70% to 3%) using the WWC conservative standard (WWC, 2022). After attrition, 137 students had pretest and posttest data.
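As a quick arithmetic check, the overall attrition rate and the analyzed sample size follow directly from the counts reported above. Differential attrition is not recomputed here because per-condition counts are not given in this section.

```latex
\[
\text{overall attrition} = \frac{8}{145} \approx 0.055 = 5.5\%,
\qquad
n_{\text{analyzed}} = 145 - 8 = 137
\]
```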

Table 1. Participant demographics.

Connect-IT intervention

The Connect-IT intervention consists of 26 lessons – one introductory lesson followed by five modules with five lessons each. The computer and tutor versions are as similar as possible with respect to content and procedures and neither is adaptive. The differences between the two formats are provided after the description of the intervention. The intervention was created using an iterative design process in which both quantitative (student performance on questions within lessons) and qualitative (student questionnaires and focus groups) information was used in prior years to refine the intervention that was then tested in the current study. Information obtained through the iterative design process was used to inform key aspects of the final version of the intervention as follows: 1) student preference for reduced “teacher talk” resulted in the use of brief explanatory feedback during instruction and corrective feedback during practice (Butler et al., 2013) to increase engagement and reduce cognitive load (Sweller, 2010); 2) gamification components such as badges for lesson and module completion were added to the computer version to increase engagement and better parallel the types of reinforcement provided by tutors in the tutor-led version; and 3) design features were added to the computer version to reduce students’ ability to “click through” the program without reading passages and questions. All changes to texts, questions, instructional scripts, feedback, and general procedures (with the exception of the computer-specific design features in 2 and 3 above) were implemented identically across the two formats.

Instructional principles

The intervention provided instruction focused on several types of inferences (pronoun reference, text-connecting inference, inferring meaning from context, and knowledge-based inference), which are explained in greater detail in the description of each instructional module. Many instructional decisions were based on a review of the learning sciences literature as well as on studies of inference. For example, the lessons in Module 5, which was a practice module, employed interleaved practice in all inference types given the evidence for effects of interleaved practice on learning and retention (reviewed in Dunlosky et al., 2013). However, blocking of instruction for different types of inferences (Modules 1–4) was based on studies suggesting that low-knowledge middle-school students may benefit first from blocked instruction followed by interleaved practice (Rau et al., 2010).
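The difference between blocked and interleaved practice can be made concrete with a small sketch. The code below is only an illustration of the two scheduling ideas; the function names, the item counts, and the use of a simple random shuffle to mix item types are assumptions, not a description of how Connect-IT lessons were actually assembled.

```python
import random

# Inference types named in the text, one per instructional module (Modules 1-4).
INFERENCE_TYPES = [
    "pronoun reference",
    "text-connecting inference",
    "meaning from context",
    "knowledge-based inference",
]


def blocked_items(inference_type, n_items):
    """Blocked practice: every item in the lesson targets one inference type."""
    return [inference_type] * n_items


def interleaved_items(inference_types, n_per_type, seed=0):
    """Interleaved practice: equal numbers of each type, presented in mixed order."""
    items = [t for t in inference_types for _ in range(n_per_type)]
    random.Random(seed).shuffle(items)  # seeded so the order is reproducible
    return items


# Hypothetical item counts, chosen only for illustration.
module_1_lesson = blocked_items(INFERENCE_TYPES[0], n_items=8)
module_5_lesson = interleaved_items(INFERENCE_TYPES, n_per_type=2, seed=1)
```

In the blocked lesson the learner always knows which kind of inference is required, whereas in the interleaved lesson the learner must first identify the inference type for each item, which is the feature usually credited for the retention benefit of interleaving.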

Several other techniques were used to increase learning, retention, and transfer, including the provision of multiple opportunities to respond with explanatory affirmative feedback for the first half of each module, which transitioned to corrective feedback in later lessons (Butler et al., 2013), and extensive practice within each module (Butler, 2010; McCormick & Hill, 1984). The intervention provided modeling and opportunities for students to practice making inferences while reading authentic expository and narrative text of the types routinely encountered in middle school. Several visual techniques were also used to focus the reader on using information from the text to support inference-making and to fill in gaps in understanding. These techniques varied slightly depending on delivery format (e.g., hot spots, drag and drop, highlighting for computer delivery; arrows and manual highlighting for tutor delivery), but were as similar as possible across delivery formats. Most of the passages were read silently by students; however, the tutor or the computer read some of the passages aloud. Feedback was always provided aurally by the tutor or computer.

In terms of the passages, 55% were expository and 45% were narrative. Instructional design took into account sources of difficulties in making inferences in expository text (see Clinton et al., 2020). For example, explicit instruction in connecting impersonal pronouns with their referents was provided. Because the knowledge that is important for making knowledge-based inferences in expository text (e.g., topic-specific knowledge) may require more effort to retrieve than knowledge needed to make inferences in narratives (e.g., character motivations; script-based knowledge), students were taught to use the text to activate and retrieve background knowledge relevant for making an inference to understand expository text.

There was considerable variability in passage difficulty. Some of the passages, particularly those used to illustrate points during initial instruction, are at lower Lexile levels (as low as 400–500). For most of the texts, however, Lexile levels range between 700 and 1100, which corresponds roughly to the 4th to early 8th grades. Nineteen percent of the passages were read aloud and the rest were read silently. The decision to read some passages aloud was based on accuracy data (i.e., very low accuracy answering questions for some of the longer expository texts, particularly when they occurred in early lessons in a module) and qualitative feedback from students (i.e., about their perceived difficulties in reading some of these expository texts), not simply on Lexile level, given the greater difficulty in remembering and understanding expository vs. narrative text at similar Lexile levels (Clinton et al., 2020; Mar et al., 2021).

Connect-IT used a research-informed sequence of difficulty: Pronoun inferences transitioned from those having more concrete referents (e.g., referring to a person or thing) to more abstract referents (e.g., referring to an idea), and transitioned from a pronoun having one possible referent to multiple possible referents. Multiple potential referents may create contextual interference during learning, which increases difficulty (Battig, 1979); accuracy in the face of competing referents is uniquely related to reading comprehension, likely because the assignment of pronouns to their referents is necessary for constructing accurate text-based representations and situation models (Elbro et al., 2017). Within several modules, distance between information to be integrated increased across lessons given findings that less skilled comprehenders have difficulty making inferences across larger text distances and that these types of inferences require more cognitive effort (Barth et al., 2015; Cain et al., 2004).

Modules and lessons

In the introductory lesson, students made inferences from a video without dialogue, a video with dialogue, a text message, and then a narrative text. In the video without dialogue, students are interrupted during the film to answer inferential questions reinforcing what an inference is and underlining the fact that in their daily lives students routinely make inferences about what is happening to whom, when, and why. This approach is based on the use of non-text materials to motivate and engage inference-making in younger children in Kendeou et al. (2005).

Teacher and student avatars are used to illustrate and teach concepts in the computer version. These instructional and modeling techniques were replicated as closely as possible in the tutor version. After the introductory lesson, 25 lessons were provided in sequence in Modules 1–5 (each module included five lessons). The first three modules provided instruction and practice in making inferences that served to connect information within the text. The fourth module provided instruction and practice in making inferences requiring the integration of knowledge with text, and the fifth module provided interleaved practice in all of the inference types instructed in the previous four modules. Examples of each module are in Appendix A.

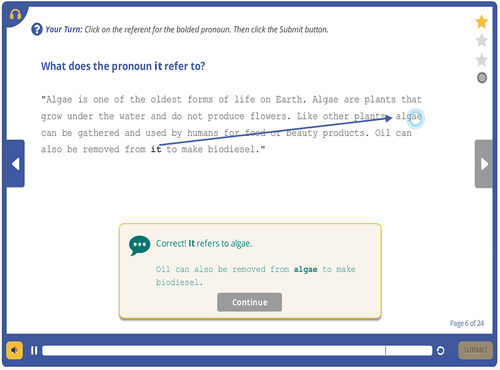

Module 1: pronoun reference

The first lesson of this module provides an overview of pronouns, pronoun references, and their importance in maintaining coherence. Students are taught how to connect personal pronouns to people or animals and provided with practice making these connections. Lesson 2 covers the pronouns “it,” “here,” and “there.” In Lesson 3, the focus is on connecting pronouns to phrases and also to more abstract referents such as actions, ideas, and feelings. Lesson 4 provides instruction and practice using tense (past/present) to provide clues to the referent. Lesson 5 reviews and provides practice linking pronouns with their referents, using referent types addressed during the previous four lessons but within new texts.

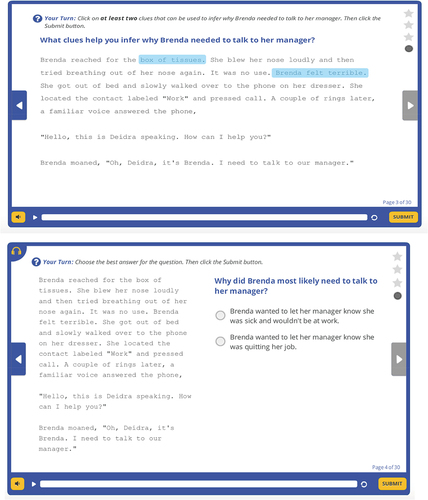

Module 2: text-connecting inferences

Students learn how to find and connect clues within the text to fill a gap or select the best ending for a passage. Lessons gradually increase the difficulty of making text-connecting inferences by requiring students to connect sentences/ideas across increasing distances in longer passages (Barth et al., 2015; Cain et al., 2004); reducing the number of within-text clues relevant to making the inference; and having the student gradually take more responsibility for finding clues in the text.

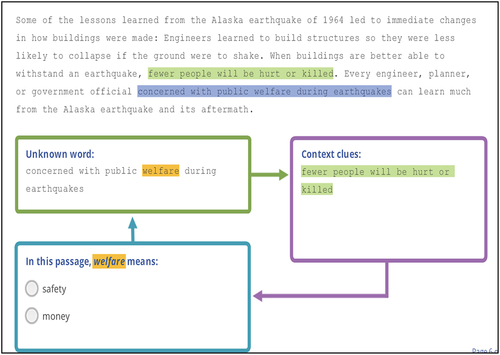

Module 3: inferring words from context

Students are taught to use strategies (e.g., using context clues; substituting a possible meaning) to infer the meanings of unknown words. In Lesson 1, passages are presented one phrase at a time to demonstrate how clues are encountered in text leading up to or following the unknown word (nonwords are used in this lesson). In Lesson 2, strategies taught in Lesson 1 are practiced with low-frequency real words. For part of this module, a graphic organizer is used to help students organize the clues found in the text, underlining the fact that clues to meaning can occur in sentences prior to the unknown word, in the same sentence, or after the sentence. In later lessons, the graphic organizer scaffold is removed; students find clues independently that support the meaning of the unknown word.

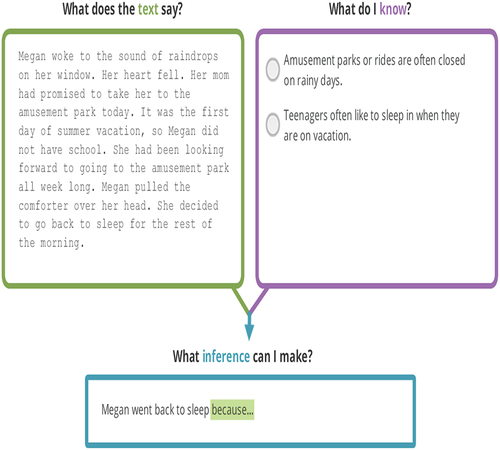

Module 4: knowledge-based inferences

Students are presented with why questions that cue the gap in the text before they read the passage; they learn to use their background knowledge to make an inference using a “because” statement that answers the question. This technique was adapted from an effective knowledge-based inference intervention for typically developing fourth-grade children (Elbro & Buch-Iversen, Citation2013). Teacher and student avatars use graphic organizers to model retrieving and integrating world knowledge to fill gaps in the text. In subsequent lessons, students read passages and answer inferential questions without the supporting graphic organizer.

Module 5: mixed practice

This module provides interleaved practice making all inference types taught and practiced during the previous four modules, such that students make multiple types of inferences while reading longer passages. This module also makes use of question types and text layouts that are similar to what students encounter during school assignments and on standardized reading accountability assessments and thereby provides opportunities for transfer of newly learned inference skills to reading in more authentic school contexts. All of the texts in Module 5 are texts that students have not seen in previous modules.

Similarities and differences in tutor and computer Connect-IT

The two versions of Connect-IT were designed to be as similar as possible in terms of content and procedures. All of the text content, instructional procedures, questions, and feedback rules and routines were the same across the two versions. However, some aspects of the two interventions necessarily varied. These similarities and differences are as follows: The tutor-led version was implemented in small groups of two to six students, with a median group size of three. In the computer-delivered version, each student accessed the lessons on a computer and instruction was self-paced; however, an interventionist was present to address technical issues and monitor on-task behaviors. This procedure (self-paced computer intervention with minimal teacher and peer interaction) best captures how computerized literacy interventions are being implemented in middle school. Tutor-led instruction was scripted to correspond to the instructional content, passage format, and question presentation of the computer-delivered version. However, the pacing of instruction was not completely student-driven; for example, the tutor was instructed to wait until everyone was finished reading and had answered the question in their booklet before providing feedback on accuracy. The teacher then asked students for their answer and provided explanatory or corrective feedback when an incorrect answer was given. To promote engagement, badges for completing lessons and trophies for completing modules were provided in the computer-led version, and some items answered incorrectly during practice were repeated to promote careful responding. In the tutor-led version, tutors were provided with group management strategies to promote engagement that included reinforcing on-task behaviors and providing emotional support such as encouragement and positive statements related to effort.
Tutors may have also provided scaffolding for word decoding and built knowledge required for comprehension; this additional, individualized support was neither encouraged nor discouraged as we wanted to capture potential naturally-occurring variation in the two types of delivery systems. We did not formally collect information related to such scaffolding on fidelity forms.

Tutor training

Nine tutors were trained to deliver both versions of the intervention. Tutors at one site were graduate students within a College of Education; at the other site, tutors were either graduate students in Education or were certified teachers hired by the research team. Both sets of tutors trained together through video-conferencing. After the cross-site training was conducted, a designated intervention specialist at each site certified the interventionists and conducted fidelity visits and additional coaching as needed. The tutor-led lessons were scripted and based on the computer-led lessons. Despite the scripting, we encouraged tutors to develop natural relationships and interactions with their students as would be typical in a small group teaching context.

Fidelity of implementation

Fidelity was recorded once for each tutor for an early lesson in each module. Fidelity for Connect-IT Computer was 100%. Fidelity for Connect-IT Tutor-Led was over 97%. Fidelity for the tutor-led version was measured as adherence to a series of procedures (e.g., whether passages were read aloud by the tutor or silently by students as per the script, whether the correct feedback was provided). Additions to the script that occurred in the tutor-led version (e.g., when the tutor provided the pronunciation and/or definition of a word or supplied background knowledge) were noted in the field notes during fidelity visits but were not scored for fidelity. Fidelity for the computer-led version was defined as adherence to a computer-led instruction protocol (e.g., whether the correct lesson was started for students, whether there were any technology issues, employment of redirection strategies if students were observably off-task, appropriate responses to student questions).

Business-as-usual condition

School-provided intervention classes varied widely in terms of the types of instruction. We were unable to conduct observations in BaU classes; however, we asked intervention teachers to fill out a survey of the programs and instructional materials they used, components of reading addressed during lessons, and the teaching techniques/strategies they employed. Four of the 7 BaU intervention teachers reported using computerized interventions: Read 180 (1), Achieve 3000 (1), Edmentum-Exact Path (1), Exact Path, Study Island (1). Three teachers reported using Fountas and Pinnell Leveled Literacy. The most common instructional foci reported by the teachers included teacher modeling using thinking aloud, increasing vocabulary and background knowledge, teaching students to slow down or re-read, summarization strategies, and asking students questions about texts they had read. Less commonly reported instructional foci included student think alouds, syntax, punctuation, and recall of facts or literal information from the text. With respect to question asking, teachers reported asking students to elaborate on responses (explain why something happened), and say what information they used to answer a question. Inferential questions were reported as being asked often by five teachers, sometimes by one teacher, and not at all by one teacher.

Measures

Word reading

Test of Word Reading Efficiency (TOWRE-2; Torgesen et al., Citation2012), Sight Word Efficiency (SWE) subtest. The TOWRE-2 SWE measures students’ fluency reading a list of real words that increase in difficulty. Alternate-forms and test-retest reliability exceed .89. The TOWRE-2 was given only at pretest.

Inferential and general reading comprehension

Connect-IT Inferential Reading Comprehension Assessment (CIRCA; Clemens & Barnes, Citation2018) was designed to measure students’ inference-making on the types of inferences targeted by the Connect-IT intervention. The CIRCA was tested during the development of the intervention on two cohorts of middle school students. The CIRCA is group-administered, includes 42 test items, and takes about 30 minutes to complete. Items are organized into subsections aligned with the four types of inferences instructed in Connect-IT. However, none of the passages from the Connect-IT lessons appeared in the CIRCA. Sections vary in response formats and reading demands for each type of inference tested. Approximately half of the texts are expository and half are narrative (see Appendix B). Correlations between the CIRCA and concurrent administrations of the Bridge-IT (described below) and the WIAT-III ranged from .52 to .68, which is consistent with commonly observed intercorrelations among standardized tests of reading comprehension (e.g., Keenan et al., Citation2008). Internal consistency (coefficient alpha) for the full measure (all 42 items) at pre- and posttest was .88 and .89, respectively.
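For readers less familiar with coefficient alpha, the following minimal Python sketch shows how the statistic is conventionally computed from an item-by-student score matrix. The function name and the small data set are hypothetical and for illustration only; they are not CIRCA data, and the sketch is not the software used in the study.

```python
from statistics import variance


def coefficient_alpha(scores):
    """Cronbach's coefficient alpha.

    scores: list of rows, one row per student, each row a list of item scores.
    """
    k = len(scores[0])  # number of items
    # Sample variance of each item across students.
    item_vars = [variance([row[i] for row in scores]) for i in range(k)]
    # Sample variance of the students' total scores.
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical 0/1 item scores for five students on four items.
example = [
    [1, 1, 1, 0],
    [1, 0, 1, 1],
    [0, 0, 0, 0],
    [1, 1, 1, 1],
    [0, 1, 0, 0],
]
print(round(coefficient_alpha(example), 2))
```

Alpha approaches 1 as items covary more strongly relative to their individual variances, which is why it is read as an index of internal consistency.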

Table 2. Pretest and posttest means with standard deviations for outcome measures.

Bridging Inferences Task (Bridge-IT). The Bridge-IT (Barth et al., Citation2015; M. Pike et al., Citation2013; M. A. Pike et al., Citation2010) uses a consistency detection paradigm to assess the ability to integrate information to make inferences between contiguous sentences (near condition) or between sentences that are separated by intervening text (far condition). Children read short stories, turn the page, and then choose the continuation sentence from amongst three choices that they think is consistent with the meaning of the story. The task assesses text-based inferences and is most closely aligned with the Text-Connecting Inference module in Connect-IT. However, none of the passages or content from the intervention appeared in the Bridge-IT. The task takes about 20 minutes. Within-sample reliability based on IRT analyses for near inference items is .93. Reliability is lower at .60 for far inference items, which were very difficult for this group of readers. The Bridge-IT total score was correlated with the CIRCA (r =.60) and with the WIAT at pretest (r = .54). The Bridge-IT was administered at pretest and posttest.

Wechsler Individual Achievement Test-Reading Comprehension Subtest (WIAT-III; Wechsler, Citation2009) requires students to silently read expository and narrative passages and answer literal and inferential questions. Questions are open-ended and are asked and answered orally. Students’ responses are recorded by the examiner and scored according to a standardized rubric. The reliability coefficient for this subtest is 0.86 for middle school. Students had to score at or below the 40th percentile on this test to be included in the analyses. The WIAT-III was administered at pretest and posttest and scaled scores, as well as separate proportion correct scores for Literal and Inferential questions (as coded in the WIAT-III manual), were used in analyses.

Assessor training

Assessors were co-trained across sites and then further training and certification was accomplished within-site through a designated assessment lead. Refresher training was provided by each site through the assessment leads prior to post-testing. Assessors were blind to condition.

Data analysis

We fit regression models to estimate the effects of the intervention. For all models, we included pretest scores (grand mean centered) as covariates. We considered three comparisons: 1) computer-led versus BaU, 2) tutor-led versus BaU, and 3) computer-led versus tutor-led. Students in the study were nested within schools. Because of the relatively limited number of schools (n = 3), we treated schools as fixed rather than random effects and included L-1 dummy codes (where L is the number of schools) for school membership (McNeish et al., Citation2017). False discovery rates associated with multiple comparisons (i.e., Type 1 error) were controlled using the Benjamini-Hochberg correction (Benjamini & Hochberg, Citation1995). Effect sizes were estimated as Hedges’ g, using the coefficient corresponding to the relevant parameter as the numerator and the posttest pooled standard deviation as the denominator, per WWC recommendations.
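The effect size and multiple-comparison steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the input values are invented, and the small-sample correction 1 − 3/(4N − 9) is the commonly used one, which we assume here rather than report from the original analysis.

```python
import math


def pooled_sd(sd_a, n_a, sd_b, n_b):
    """Pooled standard deviation of two groups' posttest scores."""
    num = (n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2
    return math.sqrt(num / (n_a + n_b - 2))


def hedges_g(coef, sd_a, n_a, sd_b, n_b):
    """Covariate-adjusted group difference (regression coefficient) divided by
    the pooled posttest SD, with the small-sample correction (an assumption)."""
    n_total = n_a + n_b
    omega = 1 - 3 / (4 * n_total - 9)
    return omega * coef / pooled_sd(sd_a, n_a, sd_b, n_b)


def benjamini_hochberg(p_values):
    """Return Benjamini-Hochberg adjusted p-values in the input order."""
    m = len(p_values)
    # Walk from the largest p-value down, enforcing monotonicity.
    order = sorted(range(m), key=lambda i: p_values[i], reverse=True)
    adjusted = [0.0] * m
    running_min = 1.0
    for position, idx in enumerate(order):
        rank = m - position  # 1-based rank in ascending order
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical inputs for illustration only (not values from this study).
g = hedges_g(coef=3.0, sd_a=7.0, n_a=50, sd_b=8.0, n_b=48)
adjusted_p = benjamini_hochberg([0.01, 0.04, 0.30])
print(round(g, 2), [round(p, 2) for p in adjusted_p])
```

Because the numerator is the regression coefficient rather than the raw mean difference, the resulting g reflects the group difference after adjusting for pretest scores and school membership.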

We also examined moderators of intervention effects. These regression models, based on a path analytic framework (Preacher & Hayes, Citation2008), are extensions of the previous analyses that estimated the effect of treatment on outcomes. The statistical model was expanded to include each moderator as a main effect predicting the outcome along with its interactions with condition. Two pretest skills, word reading (as measured by TOWRE-2 SWE) and reading comprehension (as measured by the WIAT-III), were evaluated as potential moderators. We also examined whether sex predicted differential response to intervention. For each potential moderator, we estimated a separate model. All continuous moderators were grand-mean centered. To follow up a significant interaction, we used the Johnson-Neyman technique (Preacher et al., Citation2006), which identifies the value along the full continuum of the moderator at which the effect of intervention transitions from statistically significant to non-significant. These values demarcate the regions of significance of the intervention effect.
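The logic of the Johnson-Neyman technique can be illustrated with a short Python sketch. For a model with a treatment coefficient b1 and a treatment-by-moderator coefficient b3, the conditional treatment effect at moderator value m is b1 + b3·m, and the boundaries of the region of significance are the values of m at which this effect divided by its standard error equals the critical value. The function names, the input values, and the use of 1.96 as the critical value (a normal approximation rather than a t value with the model's residual degrees of freedom) are assumptions made for illustration; this is not the code used in the study.

```python
import math


def johnson_neyman(b1, b3, var_b1, var_b3, cov_b1_b3, crit=1.96):
    """Moderator values at which the conditional effect b1 + b3*m sits exactly
    at the significance threshold. Returns a sorted list of 0, 1, or 2 values."""
    # (b1 + b3*m)^2 = crit^2 * (var_b1 + 2*m*cov + m^2*var_b3) as a quadratic in m.
    a = b3 ** 2 - crit ** 2 * var_b3
    b = 2 * (b1 * b3 - crit ** 2 * cov_b1_b3)
    c = b1 ** 2 - crit ** 2 * var_b1
    if a == 0:
        return [] if b == 0 else [-c / b]
    disc = b ** 2 - 4 * a * c
    if disc < 0:
        return []  # no boundary: significance does not change along the moderator
    root = math.sqrt(disc)
    return sorted([(-b - root) / (2 * a), (-b + root) / (2 * a)])


def conditional_ratio(m, b1, b3, var_b1, var_b3, cov_b1_b3):
    """Conditional effect divided by its standard error at moderator value m."""
    effect = b1 + b3 * m
    se = math.sqrt(var_b1 + 2 * m * cov_b1_b3 + m ** 2 * var_b3)
    return effect / se


# Hypothetical estimates for illustration only (not values from this study).
params = dict(b1=2.0, b3=0.4, var_b1=1.5, var_b3=0.02, cov_b1_b3=0.0)
bounds = johnson_neyman(**params)
print([round(m, 2) for m in bounds])
```

With a grand-mean-centered moderator, a boundary of 0 would correspond to the sample mean, so boundaries are read as distances above or below the mean on the moderator's scale.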

Results

Preliminary analyses

Table 2 displays the means and standard deviations for each measure. All variables were normally distributed based on estimates of skewness and kurtosis. We identified no outlying values. The groups were equivalent at baseline on demographic characteristics (e.g., special education status, gender, and race) and pretest scores (p-values ranged from .31 to .93).

Main treatment effects

On the CIRCA, students in tutor-led intervention outperformed students in computer-led intervention (β = 2.20, p = .03, p-adjusted = .04) and students in BaU (β = 2.9., p = .00, p-adjusted = .04). The effect sizes were 0.30 (95% CI [0.03, 0.57]) and 0.40 (95% CI [0.13, 0.66]), respectively. Students in computer-led did not differ from those in BaU (p = .46, p-adjusted = .46; ES = 0.09 (95% CI [−0.14, 0.32])). On the Bridge-IT Far, the difference between tutor-led intervention and BaU was sizable (see Swanson et al., Citation2017 for a discussion of sizable or non-trivial effects in the context of lack of statistical significance for reading interventions in older students) in favor of tutor-led, but not statistically significant (β = 0.62, p = .08, p-adjusted = .16, ES = 0.35 (95% CI [−0.04, 0.74])), and the difference between computer-led and BaU was also sizable in favor of computer-led, but not statistically significant (β = 0.56, p = .11, p-adjusted = .16, ES = 0.32 (95% CI [−0.07, 0.71])). On the inferential comprehension items from the WIAT, students in the tutor-led condition outperformed students who received the computer-led intervention with a sizable effect that was not statistically significant after adjusting for multiple group comparisons (β = 0.05, p = .07, p-adjusted = .20, ES = 0.32 (95% CI [−0.02, 0.67])). Groups did not differ on WIAT standard scores (p-adjusted ranged from 0.12 to 0.51; ES ranged from 0.09–0.24), the literal comprehension items from WIAT (p-adjusted ranged from 0.61 to 0.90; ES ranged from 0.02–0.16), or Bridge-IT Near (p-adjusted ranged from 0.43 to 0.62; ES ranged from 0.09–0.24; see Table 3 for additional details).

We also investigated whether effects differed across the types of inferences on the CIRCA test. As shown in Table 4, students in the tutor-led intervention outperformed students in BaU on pronoun, vocabulary, and knowledge-based inferences. The effect sizes were 0.41 (95% CI [0.08, 0.74]), 0.41 (95% CI [0.08, 0.74]) and 0.46 (95% CI [0.10, 0.83]), respectively. Students in the tutor-led intervention also outperformed students in computer-led intervention on vocabulary (0.48 (95% CI [0.16, 0.80])) and knowledge-based (0.37 (95% CI [0.02, 0.71])) inferences. Effect sizes and p-values for non-significant contrasts are in Table 4.

Moderation analysis

Table 5 presents the results of the moderation analyses for word reading efficiency as a moderator of intervention effects. Word reading efficiency moderated the difference between the tutor-led and computer-led interventions on WIAT standard scores (see Figure 1) and on the inferential comprehension items from WIAT. The path coefficient for the interaction was positive and statistically significant (β = 0.39, p = .00, p-adjusted = .01 for WIAT standard scores; β = 0.01, p = .01, p-adjusted = .03 for WIAT inferential items), indicating that the significant treatment effect favoring the tutor-led intervention compared to the computer-based version becomes significantly larger for each additional point increase in word reading. On both measures the region of statistical significance indicates that the effect favoring tutor-led intervention over the computer-led intervention was significant for students with standard scores at the sample mean of 90 or higher on TOWRE-2 SWE subtest. There were no significant moderating effects of pre-intervention WIAT-III reading comprehension level on any inference (p-adjusted ranged from 0.24 to 0.43) or reading comprehension outcome (p-adjusted ranged from 0.53 to 0.82; see Table 6 for additional details).

FIGURE 1. Visualization of word reading as a moderator of intervention effect between the computer-led and tutor-led interventions on WIAT reading comprehension standard scores at posttest.

Child sex interacted with condition on several outcome measures (Table 7). On Bridge-IT Near there was a significant interaction between the tutor-led versus computer-led intervention and child’s sex (p = .02; p-adjusted = 0.03) such that boys experienced greater benefit from participation in the tutor-led version of Connect-IT compared to the computer-led version. The effect size for boys in the tutor-led intervention was 0.73 (95% CI [0.24, 1.22]). For girls, the effect of tutor-led intervention did not differ statistically from 0 (ES = −0.15, 95% CI [−0.59, 0.28]). The pattern of findings was similar on WIAT standard scores. The effect of tutor-led intervention was greater and statistically significant (p = .01; p-adjusted = 0.02) for boys and smaller and statistically not significant for girls. The effect size for boys was 0.73 (95% CI [0.16, 1.30]). For girls, the effect of tutor-led intervention was −0.02 (95% CI [−0.37, 0.33]). Additionally, on the WIAT standard scores the two-way interaction between computer-led versus BaU and sex was positive and statistically significant (p = .01; p-adjusted = 0.02), indicating that the computer-led intervention affected boys and girls differently. The effect size for boys was −0.57 (95% CI [−1.04, −0.09]) in favor of BaU. For girls, the effect of computer-led intervention was 0.25 (95% CI [−0.10, 0.60]). Lastly, on the WIAT inferential items the two-way interaction between computer-led versus BaU and sex was positive and statistically significant (p = .00; p-adjusted = 0.00). The effect size for boys was −0.75 (95% CI [−1.24, −0.25]) in favor of BaU. For girls, the effect of the computer-led intervention was 0.38 (95% CI [−0.05, 0.80]). Sex did not moderate Bridge-IT Far or WIAT literal outcomes (p-adjusted ranged from 0.53 to 0.98).

Table 3. Main effects of intervention on student outcomes.

Table 4. Main effect of intervention on CIRCA inference types.

Table 5. Results for word reading efficiency as a moderator of intervention effects.

Table 6. Results for reading comprehension as a moderator of intervention effects.

Table 7. Results for sex as a moderator of intervention effects.

Discussion

This study tested the effects on reading comprehension of Connect-IT, an inference-making intervention designed for middle school students with reading difficulties. To address time and resource limitations of the middle school environment, we compared computer-led and small-group tutor-led versions of Connect-IT to each other and to the schools’ BaU interventions. We predicted that both versions of Connect-IT would show positive effects compared to BaU intervention on the researcher-designed assessment that measured skill in generating the four inference types instructed in the intervention while reading new passages (CIRCA) and on another researcher-created measure of text-connecting inference generation (Bridge-IT). We predicted smaller or negligible effects on a standardized measure of general reading comprehension. We also tested whether pre-intervention word reading fluency, reading comprehension, and sex moderated treatment effects.

Contrary to predictions, only the tutor-led version of Connect-IT showed statistically significant, medium-sized effects (Cohen, Citation1988) on the researcher-created measure (CIRCA) compared to both the computer version and BaU, and these effects were robust across three of the four types of inferences (pronoun reference, inferring words from context, knowledge-based inference). We do not have an explanation for the lack of effects on text-connecting inferences, but note that these types of inferences are measured by the Bridge-IT, on which there were also no significant main effects. Although sizable effects for both versions of Connect-IT compared to BaU were found for Bridge-IT Far inference items, and for the inferential comprehension items from the WIAT-III Reading Comprehension subtest, these effects were not significant after correcting for multiple comparisons.

The significant, medium sized effects for small-group, tutor-led Connect-IT are consistent with findings from other inference interventions with typically developing readers and students with reading difficulties in the earlier grades (Elleman, Citation2017; Hall, Citation2016) and in middle grade struggling readers (Barth & Elleman, Citation2017), and suggest that inference-making is also malleable in middle school students with comprehension difficulties. Previous studies have mostly used narrative texts to teach and measure inference-making even though comprehension of expository text is of high importance in the middle school grades, and is associated with more difficulty in making inferences (Clinton et al., Citation2020). The current findings suggest that an intervention using expository and narrative text improved students’ inference-making for new narrative and expository texts. Importantly, the intervention explicitly addressed some of the difficulties associated with inference-making in expository text by providing instruction on how to link impersonal pronouns with their referents and activating relevant topic knowledge, which may be less likely to be retrieved during reading of expository texts than the type of knowledge (e.g., character motivations) that is more commonly used to make inferences in narratives.

Why the two versions of Connect-IT produced different results despite the high degree of similarity in materials, instructional routines, and feedback is not well understood based on the data we have. One explanation has to do with potential differences in engagement and motivation in the two conditions, which is more fully discussed when interpreting the moderator effects for boys and girls below. A related hypothesis concerns the support for learning that tutors and peers might afford in the small-group context, including the provision of word meanings or background knowledge as well as emotional support. It will be important in future studies to collect observational data on tutor-student and peer-to-peer interactions and support (in addition to computer-process data) to better understand and contextualize these effects given that two versions of the same treatment produced different findings (Weiss et al., Citation2014).

Moderated treatment effects

Word reading

Although there was no significant main effect of either intervention on the standardized measure of general reading comprehension achievement, there was a moderated treatment effect such that students with relatively better word reading efficiency (at and above the sample mean of the 25th percentile) gained more from the tutor-led intervention compared to the computer-led version, as measured by the WIAT. This moderated treatment effect was also found for the inferential items from the WIAT. Similar findings were reported in a study of an intensive teacher-delivered reading comprehension intervention in which children with significant word reading difficulties showed minimal response compared to their peers with “near adequate” word reading (Vaughn et al., Citation2020). Reading comprehension interventions for middle school students, including Connect-IT, might be most effective for students who, while low in reading comprehension, have word reading that is sufficient (even if not strong) to allow them access to text-based instruction. In the current study, it is unclear what explains the benefit to comprehension of tutor-led small-group instruction compared to computerized instruction for better word readers.

In U.S. samples, the most common profile amongst older students with reading difficulties is one of multiple difficulties across word decoding, reading fluency, vocabulary knowledge, and reading comprehension (Brasseur-Hock et al., Citation2011; Cirino et al., Citation2013; Clemens et al., Citation2017; Hock et al., Citation2009; Lesaux & Kieffer, Citation2010). Given that there is a substantial subgroup of less skilled older readers with difficulties in both word reading and comprehension, reading comprehension interventions such as Connect-IT may benefit from the addition of a supplemental systematic word reading component that concurrently addresses word reading difficulties of older students (Lovett et al., Citation2022; Vaughn et al., Citation2020).

Sex

Sex was a significant moderator of effects for the computer-led version of Connect-IT across several inferential and reading comprehension measures. The pattern that emerged was that boys fared better in tutor-led Connect-IT and teacher-delivered BaU interventions compared to Connect-IT computer-led. Girls either performed similarly or in the opposite direction to boys when comparing the effects of the two versions of Connect-IT, and in the opposite direction to boys when comparing the effects of Connect-IT computer-led to BaU.

There are some potential explanations, as well as explanations we can rule out, for these robust effects in favor of tutor-delivered Connect-IT for boys in comparison to girls. First, simple speed-accuracy tradeoffs in the computer version do not seem to be a factor; with the exception of one module, post-processing of the computer response data did not reveal that boys spent less time overall on the computer lessons than girls. Second, although an advantage of computer-based delivery includes high adherence and quality of implementation, if the student is not engaged in the intervention (i.e., if they do not make the effort to read the passages, answer the questions, and listen to the feedback), then the intervention is not received as intended. We do not have data that can speak to potential differences in engagement and motivation between boys and girls in the different conditions such as could be obtained by investigating attentional allocation through measures of mind wandering or eyetracking, or the use of social validity measures such as self-reports of engagement and motivation. However, the literature on differences in engagement and motivation in boys and girls with respect to both assessment (e.g., Borgonovi, Citation2022) and learning (e.g., Jansen et al., Citation2022) may be informative. Although perceptions of self-competence and values placed on learning decrease in adolescence for both boys and girls, there are gender differences (Jacobs et al., Citation2002): girls tend to show higher motivation in verbal and literacy domains than boys (Jansen et al., Citation2022). Relative to adolescent girls, adolescent boys may exert less effort and demonstrate less engagement in school tasks that involve reading. The value of the tutor over the computer for boys could include supports for engagement and motivation, as well as demands on accountability when interacting with teachers and peers. 
Positive teacher-student relationships, teacher support, and the type of feedback provided to students have the strongest associations with student academic motivation (Jansen et al., Citation2022). Better understanding the mechanisms for these moderation effects might involve collecting information about tutor and peer behaviors and the supports they afford in small-group interventions, and the effects these variables have on student engagement and motivation for boys compared to girls. Finally, it should be noted that for several students, BaU involved a blend of computerized and teacher-led instruction through technology-based programs such as Read 180 and Achieve 3000, both of which are associated with positive effects on standardized measures of general reading comprehension in a WWC report (What Works Clearinghouse, Institute of Education Sciences, Citation2016) and a systematic review (Baye et al., Citation2019). Because both of these technology-based programs have design features that promote engagement and motivation, including the use of adaptive technology and a hybrid component involving some teacher-led sessions, BaU instruction may have provided a strong technology-based counterfactual (Lemons et al., Citation2014) compared to computer-led Connect-IT.

As noted earlier, the literature shows mixed effects of sex on technology-based literacy instruction. The contribution of the current study to this literature is the direct comparison of highly similar versions of a tutor- and computer-delivered intervention, rather than only comparing a computer-delivered intervention to BaU reading instruction or no additional reading instruction. Although we cannot determine what makes the tutor-led version more effective in the current study, we hope that our findings prompt other researchers to look at gender as a potential moderator of intervention response, particularly, but not only, when the intervention involves a technology-based component.

Limitations and future research directions

There are several limitations of the current study. First, although this study had far more participants than most studies analyzed in the Elleman (Citation2017) and Hall (Citation2016) systematic reviews, the trial was underpowered and several effects failed to reach statistical significance at the p = .05 level after controlling for multiple comparisons. An adequately powered randomized controlled trial comparing the two interventions to each other and to BaU intervention classes would be important to both replicate and extend the current findings. Such a trial is underway.

Second, although we attempted to make the tutor- and computer-delivered interventions as similar as possible, the findings differed across the two forms; unfortunately, our hypotheses for these differences could not be tested. We provided suggestions, earlier, about the types of data that would need to be collected to evaluate potential sources of differences in learning across the two delivery systems. Another direction for future research would be to test the effects of a hybrid form of the intervention that uses both human agents and computers in learning for complementary purposes, similar to technology-based programs such as Read 180 when they are implemented with fidelity. Given that middle schools must often provide supplemental literacy interventions to relatively large groups of students (10 or more) with one teacher, the effects of group size might be an ecologically valid and important factor to test.

Third, limitations on resources meant that we were unable to visit and collect fidelity data for BaU classrooms, relying instead on information provided on teacher questionnaires about the programs they used and the comprehension practices they engaged in. More detailed information about instruction in BaU classrooms would have provided a better understanding of elements of treatment contrast that explain intervention effects relative to the BaU condition. In particular, capturing what inference-related instructional elements are being used in BaU classrooms would be important in future studies. BaU was quite variable across classrooms, which poses issues for interpretation of effects; however, the BaU condition is very representative of the types of interventions that are being employed in middle schools.

Finally, the moderators tested in this study were limited. There are strong indirect effects of verbal knowledge on reading comprehension through inference-making for older students (Ahmed et al., 2016), and disciplinary vocabulary that is often encountered in informational text predicts reading comprehension over and above general vocabulary (Elleman et al., 2022). It would be of interest to test whether there are moderating effects of verbal knowledge, including academic and disciplinary vocabulary, on inference-making comprehension interventions such as Connect-IT. Attentional and emotional factors that affect information processing and engagement may also be important to study, given the negative effects of mind wandering on situation model construction (Mrazek et al., 2013) and findings that reading anxiety moderates effects of reading comprehension interventions (Martinez-Lincoln et al., 2021; Vaughn et al., 2022). Such factors may matter most in situations that heighten or reduce anxiety and engagement with text, and for older students with long histories of reading difficulties.

Conclusions

This study showed that older students with comprehension difficulties made gains in inferential comprehension when they received a novel inference-making intervention derived from theories and empirical work on inference and reading comprehension. Students in the tutor-led version with higher word reading efficiency relative to other students in the sample also made gains on a standardized measure of general reading comprehension and on the inferential items from that assessment. These findings suggest that inferential reading comprehension is malleable in older, less skilled comprehenders. This study was unique in allowing head-to-head comparisons of the effects of two delivery platforms for the same inferential comprehension intervention. The computer-led version was robustly associated with less positive effects for boys compared to both the tutor-led version and BaU. As computer-based instruction becomes increasingly common in schools, these findings raise important questions not just about the academic content of comprehension interventions for older students, but also about how other factors (e.g., components that impact motivation) may affect the uptake of that content during instruction and practice.

Disclosure statement

No potential conflict of interest was reported by the author(s).

Notes

1. We use the term sex rather than gender throughout this paper. Although we recognize the non-binary nature of this concept, for this sample we only know whether students were boys or girls.

References

- Ahmed, Y., Francis, D. J., York, M., Fletcher, J. M., Barnes, M., & Kulesz, P. (2016). Validation of the direct and inferential mediation (DIME) model of reading comprehension in grades 7 through 12. Contemporary Educational Psychology, 44, 68–82. https://doi.org/10.1016/j.cedpsych.2016.02.002

- Al Otaiba, S., Kim, Y. S., Wanzek, J., Petscher, Y., & Wagner, R. K. (2014). Long-term effects of first-grade multitier intervention. Journal of Research on Educational Effectiveness, 7(3), 250–267. https://doi.org/10.1080/19345747.2014.906692

- Balfanz, R., DePaoli, J. L., Bridgeland, J. M., Atwell, M., Ingram, E. S., & Byrnes, V. (2017). Grad nation: Building a grad nation. Progress and Challenge in Ending the High School Dropout Epidemic.

- Barnes, M. A., Ahmed, Y., Barth, A., & Francis, D. J. (2015). The relation of knowledge-text integration processes and reading comprehension in seventh to twelfth grade students. Scientific Studies of Reading, 19(4), 253–272. https://doi.org/10.1080/10888438.2015.1022650

- Barth, A. E., Barnes, M., Francis, D., Vaughn, S., & York, M. (2015). Inferential processing among adequate and struggling adolescent comprehenders and relations to reading comprehension. Reading and Writing, 28(5), 587–609. https://doi.org/10.1007/s11145-014-9540-1

- Barth, A. E., & Elleman, A. (2017). Evaluating the impact of a multistrategy inference intervention for middle-grade struggling readers. Language, Speech, and Hearing Services in Schools, 48(1), 31–41. https://doi.org/10.1044/2016_LSHSS-16-0041

- Battig, W. F. (1979). The flexibility of human memory. In L. S. Cermak & F. I. Craik (Eds.), Levels of processing in human memory (pp. 23–44). Erlbaum.

- Baye, A., Inns, A., Lake, C., & Slavin, R. E. (2019). A synthesis of quantitative research on reading programs for secondary students. Reading Research Quarterly, 54(2), 133–166. https://doi.org/10.1002/rrq.229

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300. https://doi.org/10.1111/j.2517-6161.1995.tb02031.x

- Borgonovi, F. (2016). Video gaming and gender differences in digital and printed reading performance among 15-year-olds students in 26 countries. Journal of Adolescence, 48(1), 45–61. https://doi.org/10.1016/j.adolescence.2016.01.004

- Borgonovi, F. (2022). Is the literacy achievement of teenage boys poorer than that of teenage girls, or do estimates of gender gaps depend on the test? A comparison of PISA and PIAAC. Journal of Educational Psychology, 114(2), 239. https://doi.org/10.1037/edu0000659

- Brasseur-Hock, I. F., Hock, M. F., Kieffer, M. J., Biancarosa, G., & Deshler, D. D. (2011). Adolescent struggling readers in urban schools: Results of a latent class analysis. Learning and Individual Differences, 21(4), 438–452. https://doi.org/10.1016/j.lindif.2011.01.008

- Butler, A. C. (2010). Repeated testing produces superior transfer of learning relative to repeated studying. Journal of Experimental Psychology: Learning, Memory, and Cognition, 36(5), 1118–1133. https://doi.org/10.1037/a0019902

- Butler, A. C., Godbole, N., & Marsh, E. J. (2013). Explanation feedback is better than correct answer feedback for promoting transfer of learning. Journal of Educational Psychology, 105(2), 290–298. https://doi.org/10.1037/a0031026

- Cain, K., Oakhill, J., & Lemmon, K. (2004). Individual differences in the inference of word meanings from context: The influence of reading comprehension, vocabulary knowledge, and memory capacity. Journal of Educational Psychology, 96(4), 671–681. https://doi.org/10.1037/0022-0663.96.4.671

- Cheung, A. C., & Slavin, R. E. (2012). How features of educational technology applications affect student reading outcomes: A meta-analysis. Educational Research Review, 7(3), 198–215. https://doi.org/10.1016/j.edurev.2012.05.002

- Cirino, P. T., Romain, M. A., Barth, A. E., Tolar, T. D., Fletcher, J. M., & Vaughn, S. (2013). Reading skill components and impairments in middle school struggling readers. Reading and Writing, 26(7), 1059–1086. https://doi.org/10.1007/s11145-012-9406-3

- Clemens, N. H., & Barnes, M. A. (2018). Connect-IT reading comprehension assessment (CIRCA). The University of Texas at Austin: Authors. Connect-IT.

- Clemens, N. H., Oslund, E., Kwok, O. M., Fogarty, M., Simmons, D., & Davis, J. L. (2019). Skill moderators of the effects of a reading comprehension intervention. Exceptional Children, 85(2), 197–211. https://doi.org/10.1177/0014402918787339

- Clemens, N. H., Simmons, D., Simmons, L. E., Wang, H., & Kwok, O. M. (2017). The prevalence of reading fluency and vocabulary difficulties among adolescents struggling with reading comprehension. Journal of Psychoeducational Assessment, 35(8), 785–798. https://doi.org/10.1177/0734282916662120

- Clinton, V. (2019). Reading from paper compared to screens: A systematic review and meta‐analysis. Journal of Research in Reading, 42(2), 288–325. https://doi.org/10.1111/1467-9817.12269

- Clinton, V., Taylor, T., Bajpayee, S., Davison, M. L., Carlson, S. E., & Seipel, B. (2020). Inferential comprehension differences between narrative and expository texts: A systematic review and meta-analysis. Reading and Writing, 33(9), 2223–2248. https://doi.org/10.1007/s11145-020-10044-2

- Cohen, J. (1988). Statistical power analysis for the behavioral sciences. Routledge Academic.

- Compton, D. L., Miller, A. C., Elleman, A. M., & Steacy, L. M. (2014). Have we forsaken reading theory in the name of “quick fix” interventions for children with reading disability? Scientific Studies of Reading, 18(1), 55–73. https://doi.org/10.1080/10888438.2013.836200

- Cromley, J. G., & Azevedo, R. (2007). Testing and refining the direct and inferential mediation model of reading comprehension. Journal of Educational Psychology, 99(2), 311–325. https://doi.org/10.1037/0022-0663.99.2.311

- Cromley, J. G., Snyder-Hogan, L. E., & Luciw-Dubas, U. A. (2010). Reading comprehension of scientific text: A domain-specific test of the direct and inferential mediation model of reading comprehension. Journal of Educational Psychology, 102(3), 687–700. https://doi.org/10.1037/a0019452

- Denton, C. A., Enos, M., York, M. J., Francis, D. J., Barnes, M. A., Kulesz, P. A., Fletcher, J. M., & Carter, S. (2015). Text processing differences in adolescent adequate and poor comprehenders reading accessible and challenging narrative and informational text. Reading Research Quarterly, 50(4), 393–416. https://doi.org/10.1002/rrq.105

- Dunlosky, J., Rawson, K. A., Marsh, E. J., Nathan, M. J., & Willingham, D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, 14(1), 4–58. https://doi.org/10.1177/1529100612453266

- Edyburn, D. (2011). Harnessing the potential of technology to support the academic success of diverse students. New Directions for Higher Education, 154(2011), 37–44. https://doi.org/10.1002/he.432

- Elbro, C., & Buch-Iversen, I. (2013). Activation of background knowledge for inference making: Effects on reading comprehension. Scientific Studies of Reading, 17(6), 435–452. https://doi.org/10.1080/10888438.2013.774005

- Elbro, C., Oakhill, J., Megherbi, H., & Seigneuric, A. (2017). Aspects of pronominal resolution as markers of reading comprehension: The role of antecedent variability. Reading and Writing, 30(4), 813–827. https://doi.org/10.1007/s11145-016-9702-4

- Elleman, A. M. (2017). Examining the impact of inference instruction on the literal and inferential comprehension of skilled and less skilled readers: A meta-analytic review. Journal of Educational Psychology, 109(6), 761–781. https://doi.org/10.1037/edu0000180

- Elleman, A. M., Steacy, L. M., Gilbert, J. K., Cho, E., Miller, A. C., Coyne-Green, A., Pritchard, P., Fields, R. S., Schaeffer, S., & Compton, D. L. (2022). Exploring the role of knowledge in predicting reading and listening comprehension in fifth grade students. Learning and Individual Differences, 98, 102182. https://doi.org/10.1016/j.lindif.2022.102182

- Foorman, B. R., Petscher, Y., Lefsky, E. B., & Toste, J. R. (2010). Reading first in Florida: Five years of improvement. Journal of Literacy Research, 42(1), 71–93. https://doi.org/10.1080/10862960903583202

- Fuchs, D., & Fuchs, L. S. (2019). On the importance of moderator analysis in intervention research: An introduction to the special issue. Exceptional Children, 85(2), 126–128. https://doi.org/10.1177/0014402918811924

- Gilmour, A. F., Fuchs, D., & Wehby, J. H. (2019). Are students with disabilities accessing the curriculum? A meta-analysis of the reading achievement gap between students with and without disabilities. Exceptional Children, 85(3), 329–346. https://doi.org/10.1177/0014402918795830

- Hall, C. S. (2016). Inference instruction for struggling readers: A synthesis of intervention research. Educational Psychology Review, 28(1), 1–22. https://doi.org/10.1007/s10648-014-9295-x

- Hock, M. F., Brasseur, I. F., Deshler, D. D., Catts, H. W., Marquis, J. G., Mark, C. A., & Stribling, J. W. (2009). What is the reading component skill profile of adolescent struggling readers in urban schools? Learning Disability Quarterly, 32(1), 21–38. https://doi.org/10.2307/25474660

- Hughes, J. A., Phillips, G., Reed, P., & Paterson, K. (2013). Brief exposure to a self-paced computer-based reading programme and how it impacts reading ability and behaviour problems. Public Library of Science ONE, 8(11), e77867. https://doi.org/10.1371/journal.pone.0077867

- Jacobs, J. E., Lanza, S., Osgood, D. W., Eccles, J. S., & Wigfield, A. (2002). Changes in children’s self‐competence and values: Gender and domain differences across grades one through twelve. Child Development, 73(2), 509–527. https://doi.org/10.1111/1467-8624.00421

- Jansen, T., Meyer, J., Wigfield, A., & Möller, J. (2022). Which student and instructional variables are most strongly related to academic motivation in K-12 education? A systematic review of meta-analyses. Psychological Bulletin, 148(1–2), 1–26. https://doi.org/10.1037/bul0000354

- Keenan, J. M., Betjemann, R. S., & Olson, R. K. (2008). Reading comprehension tests vary in the skills they assess: Differential dependence on decoding and oral comprehension. Scientific Studies of Reading, 12(3), 281–300. https://doi.org/10.1080/10888430802132279

- Kendeou, P., Lynch, J. S., Van Den Broek, P., Espin, C. A., White, M. J., & Kremer, K. E. (2005). Developing successful readers: Building early comprehension skills through television viewing and listening. Early Childhood Education Journal, 33(2), 91–98. https://doi.org/10.1007/s10643-005-0030-6

- Kim, Y. S. G. (2020). Hierarchical and dynamic relations of language and cognitive skills to reading comprehension: Testing the direct and indirect effects model of reading (DIER). Journal of Educational Psychology, 112(4), 667–. https://doi.org/10.1037/edu0000407

- Kintsch, W. (1988). The role of knowledge in discourse comprehension: A construction-integration model. Psychological Review, 95(2), 163–182. https://doi.org/10.1037/0033-295X.95.2.163

- Lemons, C. J., Fuchs, D., Gilbert, J. K., & Fuchs, L. S. (2014). Evidence-based practices in a changing world: Reconsidering the counterfactual in education research. Educational Researcher, 43(5), 242–252. https://doi.org/10.3102/0013189X14539189

- Lenth, R., & Lenth, M. R. (2018). Package ‘lsmeans’. American Statistician, 34(4), 216–221.

- Lesaux, N. K., & Kieffer, M. J. (2010). Exploring sources of reading comprehension difficulties among language minority learners and their classmates in early adolescence. American Educational Research Journal, 47(3), 596–632. https://doi.org/10.3102/0002831209355469

- Lovett, M. W., Frijters, J. C., Steinbach, K. A., De Palma, M., Lacerenza, L., Wolf, M., Sevcik, R. A., & Morris, R. D. (2022). Interpreting comprehension outcomes after multiple-component reading intervention for children and adolescents with reading disabilities. Learning and Individual Differences, 100, 102224. https://doi.org/10.1016/j.lindif.2022.102224