Abstract

Rock and mineral labs are fundamental in traditional introductory geology courses. Successful implementation of these lab activities provides students opportunities to apply content knowledge. Inquiry-based instruction may be one way to increase student success. Prior examination of published STEM labs indicates that geology labs, particularly rock and mineral activities, are often constructed at low levels of inquiry (i.e., students are provided context throughout each step of the scientific process rather than constructing their own knowledge). This could be related to instructor concerns about the time needed to implement inquiry-based labs. Here, 36 instructor-generated rock and mineral labs available through the Teach the Earth (TTE) collection are assessed using an inquiry rubric adapted from Ryker and McConnell and a newly developed utility rubric. For the activities within these labs (n = 55), inquiry levels ranged from confirmation (7%) to open (16%) with most identified as structured (58%). Lab activities from the TTE collection are generally more inquiry-based than previously published activities. Lab utility scores ranged from 18–29 on a 10–30 scale, where lower values indicate a greater difficulty of implementation. One particular challenge for these labs may be ease of grading, the category rated most often as having low utility. No significant correlations (p > 0.05) were identified between the inquiry and utility scores, contradicting the idea that increasing inquiry comes at the expense of utility. The rubrics utilized in and developed for this study provide researchers with beneficial tools for exploration of laboratory activities on other topics, or in different disciplines.

Introduction

Geoscience laboratory courses are often used to reinforce concepts taught in lecture courses and increase student learning (Forcino, 2013; Nelson et al., 2010). Rock and mineral labs are fundamental parts of geology courses and set the stage for discussions about more complex topics such as plate tectonics, volcanism, and geologic history (Egger, 2019). These labs generally include a suite of common rocks and minerals that students identify using observations and interpretations based on the unique properties these Earth materials possess (Grissom et al., 2015). Often these labs provide students a list of properties known to be associated with each rock or mineral and ask students to match these with samples, creating a “cookbook” style exercise where students are merely confirming that their observations match an expected answer (Grissom et al., 2015). This can devolve into a process of elimination, wherein samples with more distinct properties are identified by a single characteristic (e.g., sulfur’s smell) or because only one sample name remains on the list. This confirmation-based style of lab activity is at odds with the scientific process and may not effectively teach or inspire students (Bunterm et al., 2014; Kuo et al., 2020). However, it can still teach important identification skills, such as reading an identification key and assessing properties such as cleavage and streak.

Efficacy of rock and mineral lab instruction may be improved by increasing the lab activity’s level of inquiry, such that students take more ownership over the learning process. Inquiry is often recognized as both a teaching approach (Sandoval, 2005) and a learning approach in which the building of knowledge occurs through the process of doing scientific investigations (Justice et al., 2009). Due to the variable ways in which inquiry can be enacted in science teaching and learning (Domin, 1999; Schwab, 1958), it is commonly described on a scale ranging from confirmation, where the instructor provides all knowledge and the student confirms the information, to authentic (sometimes referred to as open) inquiry, where the student must independently discover and apply all information (Bell et al., 2005; Buck et al., 2008; Ryker & McConnell, 2017).

In a 2008 study, Buck et al. incorporated previous definitions of inquiry to quantify the role of students within the scientific process. They found that published geology labs consistently had low levels of inquiry, indicating that instructors provided content and decisions about how data should be collected, analyzed, presented, and interpreted while students followed directions to confirm current scientific understanding. All 46 labs examined were rated at the lowest level of inquiry: confirmation. Ryker and McConnell (2017) applied the same rubric to each activity within the labs of four published lab manuals and found that some topics (e.g., groundwater, plate tectonics) were routinely taught in a more inquiry-based way. This may indicate a discipline-level expression of pedagogical content knowledge: instructors feel as though these topics are most effectively taught in a more interactive, authentic way. Minerals was one of the six topics commonly included in published lab manuals that was evaluated as having a consistently low level of inquiry. While rock activities were also commonly included in published lab manuals, they were not consistently presented: some manuals included labs with all rock types, and others had specific labs for each rock type. Due to this inconsistency, rock lab activities were not examined by Ryker and McConnell (2017). Grissom et al. (2015) examined both rock and mineral labs and found that some contained higher levels of inquiry. It is possible that instructor-developed materials may contain higher levels of inquiry than their counterparts in published lab manuals, but this assertion has not been examined.

In collegiate lab courses, inquiry-based instruction has been shown to increase science literacy skills, course engagement, and student self-efficacy (Gormally et al., 2009; Gray, 2017; Ryker, 2014). Nevertheless, instructors still resist using inquiry, likely because of the perceived decrease in utility (i.e., ease of implementation) commonly associated with increased inquiry (Apedoe et al., 2006). Time is one of the most commonly cited factors impeding change, including the time needed to learn about new techniques and to implement them (Henderson et al., 2011; Henderson & Dancy, 2007, 2009). Ways to minimize this time barrier include discipline-specific professional development (Manduca et al., 2017) and sharing materials (McDaris et al., 2019) through collections like Teach the Earth (TTE), hosted by the Science Education Resource Center (SERC, 2023a; Teach the Earth, 2023).

This review seeks to explore the following: (1) the degree to which instructor-generated, publicly available introductory rock and mineral activities are inquiry-based, (2) the ease with which these activities can be implemented (i.e., their utility), and (3) the relationship between a lab’s level of inquiry and its utility. To address the first two questions, two rubrics are employed: (1) a modification of the Buck et al. (2008) inquiry rubric presented by Ryker and McConnell (2017), and (2) a newly developed utility rubric based loosely on that proposed by McConnell et al. (2017). To the authors’ knowledge, this study represents the first evaluation of utility within rock and mineral activities.

Theoretical framework

This study builds on research into the resistance associated with the implementation of inquiry-based instruction (Apedoe et al., 2006; Gormally et al., 2016; Spector et al., 2007). The work is grounded in the notion that instructors may struggle to implement new teaching practices in the classroom (Lazarides et al., 2020), a struggle that may be compounded by their perceptions of the effort implementation requires.

Locating the research

Due to the qualitative nature of the work described here, the researchers’ backgrounds have an impact on the interpretation of the data (Feig, 2011; Marshall & Rossman, 2014; Patton, 2002). At the time of data interpretation, five of the six researchers were graduate students from various geoscience disciplines, each with multiple semesters of experience teaching labs. The last author is a geoscience education researcher with interest in how introductory labs are taught, and with experience teaching and overseeing introductory geoscience lab instruction.

Methods

Conducting the search

Published rock and mineral labs were collected through the Teach the Earth collection (Teach the Earth, 2023) hosted by SERC (2023a) in November 2020. We opted to search TTE because it represents the largest single collection of freely available geoscience teaching activities online. The authors generated a list of search terms that might appear in rock and mineral labs and restricted results to those identified as a “Lab Activity” (Table 1). Next, a set of inclusion and exclusion criteria was developed with the intention of identifying the labs most likely to be used in teaching rocks or minerals at an introductory level. Labs needed to meet the following four inclusion criteria: (1) the activity is published in the SERC database, (2) the audience for the lab is an introductory college geology course, (3) all lab materials are readily available, and (4) the activity is adaptable to all standard teaching environments (e.g., if it is a field activity, other instructors should be able to replicate the environment on or near their own campus). Labs were excluded if any of the following criteria were met: (1) the activity was too site-specific (e.g., a field trip examining specific campus building stones), (2) nonstandard software had to be purchased to access the lab, or (3) the search result did not match the search term (e.g., a “modeling sea level” activity returned from a “sedimentary rocks” search). Of the 2,821 pages reviewed by the author team, 36 individual labs met all inclusion and no exclusion criteria for inquiry and utility coding (Table 1).

Table 1. The list of search results with the number of hits (labs returned), number of labs assessed (labs reviewed), and the number of labs that met the requirements for detailed evaluation (labs kept).

Inquiry rubric

Each independent portion of the lab was considered its own activity and was evaluated individually using the Ryker and McConnell (2017) inquiry rubric modeled after Buck et al. (2008). Buck et al. (2008) identified five levels of inquiry: confirmation, structured, guided, open, and authentic. These levels are evaluated based on how closely the lab activity aligns with the six steps of the scientific process: the problem/question, theory/background, procedures/design, results analysis, results communication, and conclusions. Each step is described as being either provided or not provided for the student (Table 2, with examples in Table 3).

Table 2. The inquiry rubric used to break down inquiry into specific items that can be asked of a student.

Table 3. Examples of inquiry or no inquiry for each item in a laboratory activity as dictated by the rubric in Table 2.

If the step is not provided by the instructor, the student has autonomy in how they engage in the decision-making process and the step is coded as inquiry (1). If a step is provided for a student, it is coded as not inquiry (0). The result is a total inquiry score for each part of the lab that ranges from 0 (confirmation, all steps provided) to 6 (authentic, no steps provided). Where possible, the activities of the lab were determined using headings provided (e.g., Part 1, Part 2). If headings were not used, the authors used natural breaks within the lab (e.g., transitions from grain size classifications to interpretations of sedimentary environments) to partition the labs. For labs with multiple activities (n = 9), the average number of steps provided across all activities was used in the analysis of the overall lab.
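The scoring rule above can be sketched in Python as a minimal illustration, assuming each activity is coded as a mapping from the six scientific-process steps to whether that step is provided to students. The step identifiers, function names, and example lab are hypothetical and are not part of the published rubric.

```python
# Hypothetical identifiers for the six steps of the scientific process
# assessed by the inquiry rubric (names chosen for illustration only).
STEPS = (
    "question",       # problem/question
    "background",     # theory/background
    "procedures",     # procedures/design
    "analysis",       # results analysis
    "communication",  # results communication
    "conclusions",    # conclusions
)


def activity_inquiry_score(provided: dict[str, bool]) -> int:
    """Score one activity: 1 point for every step NOT provided to students.

    Returns 0 (all steps provided) through 6 (no steps provided).
    """
    missing = [step for step in STEPS if step not in provided]
    if missing:
        raise ValueError(f"uncoded steps: {missing}")
    return sum(0 if provided[step] else 1 for step in STEPS)


def lab_inquiry_score(activities: list[dict[str, bool]]) -> float:
    """Average the activity scores for a lab with one or more activities.

    Averaging inquiry scores is equivalent to averaging the number of steps
    provided, because steps provided = 6 - inquiry score for each activity.
    """
    if not activities:
        raise ValueError("a lab needs at least one activity")
    scores = [activity_inquiry_score(a) for a in activities]
    return sum(scores) / len(scores)


# Hypothetical two-activity lab: part 1 leaves only analysis and conclusions
# to students (score 2); part 2 provides only the question and background (score 4).
part_1 = {s: s not in {"analysis", "conclusions"} for s in STEPS}
part_2 = {s: s in {"question", "background"} for s in STEPS}
print(activity_inquiry_score(part_1), activity_inquiry_score(part_2))  # 2 4
print(lab_inquiry_score([part_1, part_2]))  # 3.0
```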

In both the original Buck et al. (2008) rubric and the Ryker and McConnell (2017) adaptation, the same steps in the scientific process had to be provided or not provided in order for the lab (Buck et al., 2008) or activity within the lab (Ryker & McConnell, 2017) to be identified at a given level of inquiry. For example, a structured inquiry lab is one that does not provide conclusions or results communication steps for students; instead, students are expected to provide these. A preliminary analysis of lab activities for this study indicated that a lab might not provide, for example, the conclusions and the results analysis, but would indicate how to communicate the results. Since the original and adapted inquiry rubrics (Buck et al., 2008; Ryker & McConnell, 2017) required specific steps to not be provided (i.e., results communication and conclusions for structured inquiry; those plus results analysis for guided), we were unable to apply the rubric in its original form. In keeping with the intent of the original rubric, we retained the idea that the more steps provided for students, the lower the level of inquiry. For example, a structured lab was one scoring 1–2 and a guided lab one scoring 3, regardless of which steps were not provided ().
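The modified score-to-level assignment can be sketched as a small lookup, assuming integer activity scores from 0 to 6. The text fixes 0 as confirmation, 1–2 as structured, 3 as guided, 5 as open, and 6 as authentic; grouping a score of 4 with open inquiry is an assumption made here for illustration, and the function name is hypothetical.

```python
def inquiry_level(score: int) -> str:
    """Map an activity's inquiry score (number of steps NOT provided) to a level.

    Follows the modified rule that more provided steps means less inquiry.
    ASSUMPTION: a score of 4 is grouped with 5 as "open"; only 0, 1-2, 3, 5,
    and 6 are tied to a level in the text.
    """
    if not isinstance(score, int) or not 0 <= score <= 6:
        raise ValueError("score must be an integer from 0 to 6")
    if score == 0:
        return "confirmation"
    if score <= 2:
        return "structured"
    if score == 3:
        return "guided"
    if score <= 5:
        return "open"  # 4 is an assumed boundary; 5 is reported as open
    return "authentic"


print([inquiry_level(s) for s in range(7)])
```

Because the mapping depends only on how many steps are left to students, two activities that withhold different steps can land at the same level, which is the point of the modification.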

In order to establish interrater reliability, three authors (J.F., K.R., M.P.) co-coded three labs with seven activities. Prior to discussion, a Fleiss’ kappa value (κ) of 0.88 was calculated, suggesting very good interrater reliability (>0.81 considered very good; Fleiss, 1971; Landis & Koch, 1977). After brief discussion, the researchers obtained perfect agreement (κ = 1.0) and divided the remaining labs among themselves for individual coding.
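Fleiss’ kappa compares the observed agreement among a fixed number of raters with the agreement expected by chance. The following is a minimal Python sketch of the standard formula (Fleiss, 1971), assuming every coded item is rated by the same number of raters; the count table is hypothetical and does not reproduce the study’s coding data.

```python
def fleiss_kappa(counts: list[list[int]]) -> float:
    """Fleiss' kappa for N items, each rated by the same number of raters n.

    counts[i][j] is the number of raters who assigned item i to category j.
    """
    if not counts:
        raise ValueError("need at least one item")
    n_items = len(counts)
    n_raters = sum(counts[0])
    if n_raters < 2:
        raise ValueError("need at least two raters")
    if any(sum(row) != n_raters for row in counts):
        raise ValueError("every item must be rated by the same number of raters")

    # Observed agreement per item: share of rater pairs that agree.
    p_items = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in counts
    ]
    p_bar = sum(p_items) / n_items

    # Chance agreement from the overall share of ratings in each category.
    total = n_items * n_raters
    p_cats = [sum(row[j] for row in counts) / total for j in range(len(counts[0]))]
    p_e = sum(p * p for p in p_cats)
    if p_e == 1:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return (p_bar - p_e) / (1 - p_e)


# Hypothetical: three raters code four rubric items as inquiry (column 0)
# or not inquiry (column 1); they disagree on the last item only.
counts = [[3, 0], [0, 3], [3, 0], [2, 1]]
print(round(fleiss_kappa(counts), 3))  # 0.625
```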

Utility rubric

Each lab was evaluated against a laboratory utility rubric developed for this study by three of the authors (B.S., S.O., L.T.), based loosely on the utility rubric for active learning strategies created by McConnell et al. (2017; Table 4). The utility rubric includes two main categories: instructor time and material cost. The instructor time category includes six criteria: the perceived time required to (1) train instructors, (2) prepare the activities, (3) teach the background, (4) complete the activities, and (5 & 6) grade. Grading includes two separate criteria: (5) the time associated with the type of questioning used and (6) the number of individual assessments. The material cost category includes four criteria: (1) accessibility of supplies, (2) location of the lab, (3) initial cost, and (4) upkeep costs. Each of the authors applying the utility rubric had multiple semesters of experience teaching labs and drew on this knowledge for their evaluation. Costs of materials were assessed through common supply companies such as Ward’s Science or Fisher Scientific.

Table 4. The utility rubric with criteria descriptions and rankings.

Each rubric criterion was assigned a score of 1 (low utility), 2 (moderate utility), or 3 (high utility). A lower utility rating indicates that the activity requires a higher expenditure of cost, instructor time, and effort. Summing the 10 criteria gives a possible total utility score from 10 to 30, with higher scores indicating easier implementation of the lab. Labs with totals from 10 to 16 were considered low utility, 17–23 moderate, and 24–30 high.
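The arithmetic of the utility rubric can be sketched as follows, assuming each of the 10 criteria has already been scored 1, 2, or 3. This is a minimal Python illustration: the criterion identifiers are hypothetical shorthand for the criteria described above, and the time and monetary subtotals mirror the instructor time and material cost categories.

```python
# Hypothetical identifiers for the 10 utility criteria.
TIME_CRITERIA = (
    "instructor_training",
    "pre_class_prep",
    "instructional_time",   # teaching the background
    "lab_duration",         # completing the activities
    "ease_of_grading",      # type of questioning
    "group_vs_individual",  # number of individual assessments
)
COST_CRITERIA = ("accessibility", "lab_location", "initial_cost", "upkeep_cost")


def utility_category(total: int) -> str:
    """Bin a total score (10-30) into the low/moderate/high utility bands."""
    if not 10 <= total <= 30:
        raise ValueError("total utility must be between 10 and 30")
    if total <= 16:
        return "low"
    if total <= 23:
        return "moderate"
    return "high"


def utility_scores(scores: dict[str, int]) -> dict[str, object]:
    """Sum 1-3 criterion scores into time, monetary, and total utility."""
    expected = set(TIME_CRITERIA) | set(COST_CRITERIA)
    if set(scores) != expected:
        raise ValueError(f"expected exactly these criteria: {sorted(expected)}")
    if any(value not in (1, 2, 3) for value in scores.values()):
        raise ValueError("each criterion must be scored 1, 2, or 3")
    time_utility = sum(scores[c] for c in TIME_CRITERIA)      # range 6-18
    monetary_utility = sum(scores[c] for c in COST_CRITERIA)  # range 4-12
    total = time_utility + monetary_utility                   # range 10-30
    return {
        "time": time_utility,
        "monetary": monetary_utility,
        "total": total,
        "category": utility_category(total),
    }


# Hypothetical lab: high utility everywhere except a hard-to-grade format.
example = {c: 3 for c in TIME_CRITERIA + COST_CRITERIA}
example["ease_of_grading"] = 1
print(utility_scores(example))
# {'time': 16, 'monetary': 12, 'total': 28, 'category': 'high'}
```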

In order to establish interrater reliability, three authors (B.S., S.O., L.T.) co-coded ten labs independently and then compared scores. Rules were created to maintain consistency in evaluating the 10 utility criteria (see Notes, Table 4). For example, if the duration of the lab was not specified, it was assumed to be a normal duration of 1.5–2 h (moderate utility). Two authors reviewed this default assignment for each lab to confirm it seemed reasonable based on their own teaching experiences. Moreover, if a lab provided a time range (e.g., 1–3 h), the coder assumed the longest time (3 h) was required. This iterative process continued until the rubric was adequately refined, the researchers agreed on the criteria, and a good (>0.6) Fleiss’ kappa was established (Fleiss, 1971; Landis & Koch, 1977). Prior to discussion, Fleiss’ kappa for all sections was 0.81, with all but one category reaching a good Fleiss’ kappa. The ease of grading category had poor agreement (κ = −0.18), and the authors decided to revise the rules for this section completely. Following this revision, 10 new labs were scored by two of the authors (M.P., K.R.). These authors had perfect agreement (κ = 1.0) on all categories prior to discussion, including ease of grading. After this, one author (M.P.) coded the remaining 26 labs.

Statistical analysis

The proportions of labs at different levels of inquiry and utility are reported below. To test the relationship between inquiry and utility, we used Kendall’s tau-b. This test was selected because both variables are measured on an ordinal scale and we hypothesized a potential monotonic relationship between them (i.e., the variables tend to move in the same direction, though not necessarily at a constant rate). Statistical analyses were performed in R 4.2.3, and an alpha level of 0.05 was used to determine statistical significance.
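To make the statistic concrete, the sketch below computes Kendall’s tau-b directly from concordant, discordant, and tied pairs, which is why it suits ordinal scores with many ties. It is a minimal pure-Python illustration, not the R code used in the study; it computes only the coefficient and omits the significance test. The example inquiry and utility values are hypothetical.

```python
from itertools import combinations
from math import nan, sqrt


def kendall_tau_b(x: list[float], y: list[float]) -> float:
    """Kendall's tau-b rank correlation, which adjusts for ties in x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    concordant = discordant = tied_x_only = tied_y_only = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue  # tied on both variables: contributes to no term
        if dx == 0:
            tied_x_only += 1
        elif dy == 0:
            tied_y_only += 1
        elif (dx > 0) == (dy > 0):
            concordant += 1
        else:
            discordant += 1
    # Number of pairs not tied on x, and number not tied on y.
    untied_x = concordant + discordant + tied_y_only
    untied_y = concordant + discordant + tied_x_only
    if untied_x == 0 or untied_y == 0:
        return nan  # undefined when one variable is constant
    return (concordant - discordant) / sqrt(untied_x * untied_y)


# Hypothetical inquiry scores and total utility scores for four labs.
inquiry = [1, 1, 2, 3]
utility = [24, 26, 26, 28]
print(kendall_tau_b(inquiry, utility))  # 0.8
```

In this toy example four pairs are concordant, none are discordant, and one pair is tied on each variable, so tau-b = 4 / sqrt(5 × 5) = 0.8.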

Limitations

This review was limited to labs available through SERC. Although the SERC database is extensive, it does not include labs that may be published exclusively in other locations (e.g., university-specific “in house” labs and those from textbook publishers). Additionally, instructors are free to adapt the labs examined, for example by emphasizing or increasing the weight of certain activities or by eliminating activities entirely, which would alter the overall inquiry scores from those reported here. Rubric scores also depend on the backgrounds of the researchers, which are relatively consistent among the authors; perceptions of utility may differ for other instructors. Lastly, some of the utility criteria as defined in the rubric may be interrelated. For example, if the lab location requires the instructor to be shown where to go and how to best utilize the space, then both the instructor training and lab location categories will earn a moderate utility score.

Results

Rock and mineral laboratory characteristics

Labs were added to the Teach the Earth collection between 2004 and 2020, with the largest grouping added in 2008 (see Supplemental File 1 for years). More than half (55%) of the labs reviewed (n = 36) were “traditional” rock and mineral identification activities (similar to activities described in Grissom et al., 2015). These activities varied in scope, either combining minerals and rock types or focusing on a single aspect of identification (i.e., only minerals or a specific rock family). While the number of rock or mineral samples varied between labs (0 to 25), there was some consistency in the specific minerals and rocks examined (see Supplemental File 2) and in the student approach to identification (a road map leading to a mineral or rock name).

The rock and mineral labs included in the analysis encompassed multiple teaching modalities (i.e., in-person and virtual), methods, and materials. Although modality was not always specified in the lab description, four labs were identified as being designed for delivery in a virtual environment. Students engage with these activities via virtual samples (photos and sometimes videos) and tasks (often online quizzes). Three labs incorporated a field component to varying degrees. Two of these examined rates of chemical weathering using tombstones in cemeteries (all field-based), and the third required students to collect samples in the field for later identification and analysis. Two labs used less common approaches to deliver their content. One used samples common in traditional rock and mineral identification labs; however, students were quizzed on their knowledge during a game of Bingo, adding a gamification component (Hamari et al., 2014) to the experience. The other lab aimed to give students an overall understanding of rock types and the rock cycle, teaching by analogy (Gray & Holyoak, 2021) through the melting, solidifying, weathering, and “metamorphosing” of various types of candy.

Mineral identification activities frequently referenced common minerals with distinctive properties such as calcite, gypsum, and halite (see Supplemental File 2). Traditionally, the samples are numbered, and students are given a table with a row for each number. For each sample, students assess the mineral’s properties and record them in the appropriate cell of the table. Mineral properties typically assessed include hardness, streak, color, cleavage, crystal faces, magnetism, effervescence in the presence of hydrochloric acid (HCl), and sometimes other defining characteristics (e.g., smell or taste) that aid in mineral identification. Flow charts and mineral property tables are commonly used to help in the identification process.

The “traditional” rock identification activities are very similar to the “traditional” mineral labs in that they focus on systematic identification of a set of samples, though the properties used depend on the rock type. Sedimentary rocks are identified by grain size, grain shape, effervescence in response to HCl, and presence of fossils and minerals. These identification activities are often followed by questions about possible environments of deposition. Igneous rocks are traditionally taught by having students identify samples by texture and composition; these exercises are followed by questions regarding the location of these rocks in the crust and uppermost mantle. Metamorphic rocks are traditionally taught by having students identify samples based on foliation, effervescence in response to HCl, composition, and crystal size. Similar to mineral activities, identification tables and flow charts are commonly used to assist students through the sample identification process.

Inquiry results

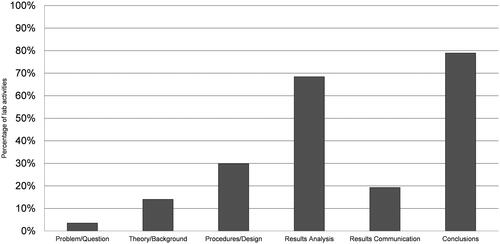

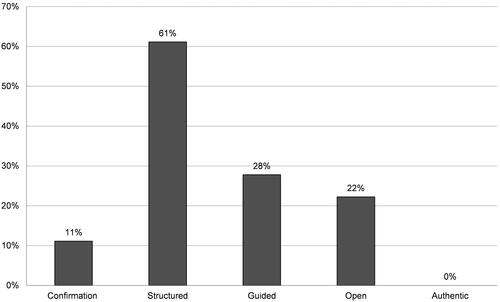

A total of 55 activities within the 36 labs were assessed for their level of inquiry. Activity scores ranged from 0 (confirmation) to 5 (open); there were no authentic inquiry activities. In total, 7% of activities were coded as confirmation, 58% as structured, 18% as guided, and 16% as open. Most labs incorporated structured inquiry activities (61%; Figure 1). Roughly 22% of the labs included one or more open inquiry activities. While most of the labs examined here (n = 27) had only one activity, nine included multiple activities. Some of the labs with multiple activities used only one type of inquiry (e.g., all activities were structured; n = 3), while others used activities at different inquiry levels (n = 6). All labs that included confirmation activities (n = 4) also included activities at a higher level of inquiry (structured to open).

Figure 1. Percentage of labs incorporating activities at each level of inquiry. Nine labs contained multiple activities, six of which included activities at different inquiry levels.

Students were most often given autonomy over the Conclusions (79%) and Results Analysis (68%) sections of activities (). Inquiry-based activities frequently include open-ended instructions like “using information you can derive from the map of the Daisen Volcano provided, describe the volcanic activity that would have been occurring at the different time periods” (Reid et al., 2011). It is up to the student to determine how to analyze the information they have been given. Additional examples of how instructions can be written to either provide or limit autonomy are provided in Table 3.

Utility results

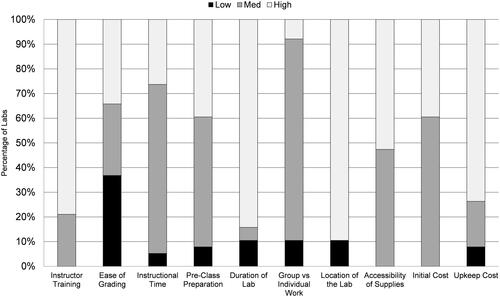

The 36 labs evaluated for utility (; ) had scores ranging from 18 to 29 with average and median values of 24.4 ± 2.6 and 24, respectively. Most of the labs evaluated (64%) fell into the high utility category (utility = 24–30). None of the labs had total scores in the low utility category (utility = 10–16).

Figure 3. Percent utility breakdown for high (light gray), medium (gray), and low (black) utility within each of the rubric categories.

Scores for the 10 individual utility criteria are illustrated in Figure 3, with a full breakdown in Supplemental File 1. The criterion with the lowest utility scores was “ease of grading,” with 37% of labs rated as low utility for this item. Four criteria were most likely to have medium utility: “instructional time” (68%), “pre-class prep” (53%), “group vs individual lab” (82%), and “initial cost” (61%). Lastly, high utility scores were most common for the “lab location” (89% of labs), “lab duration” (84% of labs), and “instructor training” (79% of labs) criteria. Overall, utility scores were comparable for the time and monetary utility subscales.

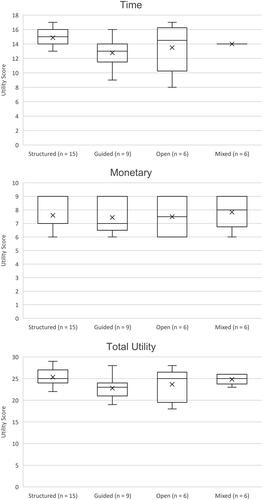

Inquiry and utility

Inquiry and utility scores were compared to identify possible trends (Figure 4). Labs with all open inquiry activities had the largest range of utility scores. However, no significant correlations (N = 36) were found between inquiry and time utility (τb = −0.112, p = .404), monetary utility (τb = 0.034, p = .811), or overall utility (τb = −0.133, p = .313). Total utility and time-based utility both had a slight, though not statistically significant, negative correlation with inquiry; monetary-based utility had almost no correlation with inquiry.

Figure 4. Plots of (A) time utility, (B) monetary utility, and (C) overall utility within each inquiry category. None of the labs examined during this study consisted of all confirmation or authentic activities.

Discussion

In an initial review of various STEM lab manuals, Buck et al. (2008) determined that geology labs tended to have the lowest levels of inquiry of all the STEM fields, with all 46 labs evaluated as confirmation labs. Upon further examination by Ryker and McConnell (2017), who analyzed geology lab activities in published lab manuals, rock and mineral activities seemed to have the lowest levels of inquiry of commonly taught geology labs. However, it is possible for these labs to be taught in more inquiry-oriented ways, based on analyses of unpublished or “in house” labs used by individual institutions (Grissom et al., 2015; Ryker & McConnell, 2014). Given that STEM professors tend to lean away from inquiry-based instruction (Apedoe et al., 2006), this review sought to determine how introductory rock and mineral activities published through TTE are taught, as well as their levels of inquiry and utility.

In our assessment, clear patterns were identified in the modality of instruction and materials used. Classroom labs often relied on physical samples, while online labs used images or videos of samples, and field labs identified rock types in situ. Regardless of modality, many included or referred to a common feature: an identification table with a sample on each row and characteristics for students to identify in columns. Some labs stopped at identification (e.g., Wiese, 2020), while others brought in additional information about rocks and minerals, such as tectonic settings (e.g., Reid et al., 2011) or formation history (e.g., Bickmore, 2009). The non-identification activities have the potential to enhance student interest by connecting to students’ real-world experiences or illustrating the relationships between the current lab and other topics covered (e.g., plate tectonics). Due to the nature of the rubric, modality of instruction directly affects the utility score related to accessibility and does not appear to affect any other subcategories. Online labs tended to be perceived as having greater accessibility, while labs including field components (e.g., Savina, 2004) rated lower in accessibility due to the added complexity of coordinating field activities (transportation, access, etc.). The samples featured in the activities tended to be similar, with some minerals and rocks featured more often, presumably due to their prevalence (e.g., quartz) or unique characteristics (e.g., galena for its density; see common minerals and rocks, Supplemental File 2). However, some activities incorporated additional materials (e.g., candy) or reference points (e.g., crystal shapes, ternary diagrams). These additions may be useful in making a lab more memorable.
If mineral and rock types are taught across multiple weeks of a lab course, the “traditional” format with an identification table could become repetitive and lead to student disinterest or burnout. Providing different materials or activities (e.g., Pound, 2005) could prevent or minimize this.

The majority (58%) of the lab activities in this study were structured, which is comparable to the proportion of activities identified as structured in a physical geology course (Ryker & McConnell, 2014; 43%) and in published geology lab manuals (Ryker & McConnell, 2017; 48%). Nonetheless, the instructor-developed labs examined here may contain higher levels of inquiry than their previously examined published counterparts. More specifically, the labs examined here were less likely to rely on confirmation-style activities: only 7% of activities were confirmation, compared with 15.3% of activities in a physical geology course (Ryker & McConnell, 2014) and 39.9% of activities in published geology lab manuals (Ryker & McConnell, 2017). Additionally, 16% of the examined instructor-made activities were open inquiry, compared with 6.5% and 1% reported by Ryker and McConnell (2014 and 2017, respectively). This supports the finding of Grissom et al. (2015) that rock and mineral labs can be taught in more inquiry-based ways. The higher levels of inquiry within the TTE collection may be related to how these activities are reviewed after submission. This review process includes the evaluation of the materials by other geoscientists for elements including pedagogical effectiveness, robustness (usability and dependability of all lesson components), and completeness of the materials provided (SERC, 2023b). An activity with exemplary pedagogical effectiveness promotes higher order thinking skills, which may be aligned with the use of higher-level inquiry activities that provide students with increased autonomy.

The most common places for students to be provided with autonomy over the scientific process were Conclusions and Results Analysis. As expected, the Question/Problem step was most often supplied to the students, with only 2 of the 55 activities allowing for student autonomy in this area (). In a review of how to scaffold an astronomy course, Slater et al. (2008) determined that surveyed instructors found the Question/Problem item to be the most difficult aspect of the scientific process to explain to students. Conversely, due to its inherent nature, the conclusion section most commonly allows for student autonomy with little to no prompting from the instructor. If this is the only portion of the scientific process that students control, then students may be missing out on opportunities to develop and apply their critical thinking skills through question formation, experimental design, data analysis, and communication. It may be that introductory labs are not perceived as a place for students to develop question-asking skills. Even so, there could still be places to develop transferable skills like deciding how results should be presented.

Labs used in this study were identified as having high (64%) or moderate (36%) utility. Lower utility scores came primarily from “ease of grading,” followed by “instructional time,” “pre-class prep,” “group vs. individual lab,” and “initial cost.” Introductory rock and mineral labs on the TTE site tend to be taught within a standard lab period (∼3 h or less) and generally incorporate some level of group activity, although instructions frequently indicate that students will complete individual assignments for assessment. Low utility on the “ease of grading” criterion was a notable outlier in this study, as it was the only criterion that consistently received a low utility score across labs. Collaborative projects with group lab reports or presentations (which are rare in this setting, appearing in only 2 of 36 labs) may reduce grading burdens while providing opportunities for higher inquiry levels (Barron & Darling-Hammond, 2008; Fall et al., 2000; Johnson & Johnson, 1999; Meijer et al., 2020; Strijbos, 2011). Instructional time and pre-class preparation time were, on average, among the lower-scoring utility criteria for the labs. Concerns about instructional time could be resolved (or at least reduced) by using lecture time to introduce content or by using similarly designed labs from week to week. Once students have experienced activities such as jigsaws or case studies, which may require more instructor prep time (Aronson, 1978; Fasse & Kolodner, 2013; Francek, 2006; McConnell et al., 2017), they are likely to need less instruction in later uses. While the utility of each lab in this study was assessed individually, consistently using similar techniques could improve ease and familiarity for both instructor and students.

Conversely, the minimal instructor training needed to teach these introductory labs was a strength in terms of utility, with nearly 80% of labs rated as high utility in this category. This is promising, as these labs do not appear to require training beyond basic content knowledge of rock and mineral formation and identification.
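The ranking of criteria by how often they receive low utility ratings can be reproduced with a simple tally. The sketch below assumes each criterion is scored 1 (low), 2 (moderate), or 3 (high), an assumption consistent with the 10–30 total scale if there are ten criteria; the function names are hypothetical and no study data are included.

```python
from collections import Counter

LOW, MODERATE, HIGH = 1, 2, 3  # assumed per-criterion scale


def low_rating_counts(labs: list[dict[str, int]]) -> list[tuple[str, int]]:
    """Count how many labs rate each criterion LOW, most frequent first.

    Each lab maps a criterion name (e.g. "ease of grading") to its score.
    Criteria that are never rated LOW do not appear in the result.
    """
    counts = Counter()
    for lab in labs:
        for criterion, score in lab.items():
            if score not in (LOW, MODERATE, HIGH):
                raise ValueError(f"{criterion!r} has out-of-range score {score}")
            if score == LOW:
                counts[criterion] += 1
    return counts.most_common()


def share_rated(labs: list[dict[str, int]], criterion: str, level: int = HIGH) -> float:
    """Fraction of labs that give `criterion` the rating `level`."""
    return sum(lab.get(criterion) == level for lab in labs) / len(labs)


def total_score(lab: dict[str, int]) -> int:
    """Overall utility: the sum of a lab's criterion scores."""
    return sum(lab.values())
```

Passing a list of per-lab dictionaries keyed by criterion name returns the criteria ordered by how many labs rated them low, and `share_rated(labs, "instructor training")` gives the fraction of labs rated high on that criterion, the kind of figure behind the “nearly 80%” above.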

As with inquiry level, the high utility scores of activities within the TTE collection may reflect the guidance authors receive when contributing activities, or how these activities are reviewed after submission. Authors submitting an activity to TTE presumably believe that the activity is usable by others, which would motivate the contribution in the first place. For this reason, TTE activities, and perhaps published activities generally, may receive higher utility scores than those developed in-house. The utility rubric developed for this study could be used to test this hypothesis.

The comparison of inquiry and utility found no significant relationship for rock and mineral labs (p > 0.05). Utility showed no significant trend as inquiry increased, challenging the expectation that raising the inquiry level of an activity comes at the cost of utility (Apedoe et al., 2006). This matters because these rock and mineral labs on TTE provide opportunities to engage students in more inquiry-based exposure to the scientific process while they learn important geologic content. Though rocks and minerals have historically been taught at low levels of inquiry (Grissom et al., 2015; Ryker & McConnell, 2017), resources available through TTE can support the incorporation of more student autonomy in the scientific process without being overly demanding of instructor time or other resources.
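The test behind the p > 0.05 result is not named in this section. For an ordinal inquiry level paired with a summed utility score, a rank-based statistic such as Spearman’s rho is one common choice, so the sketch below (standard-library Python, our assumption rather than the authors’ analysis) computes rho with tie-averaged ranks and an approximate two-sided permutation p-value.

```python
import math
import random


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of the ranks they span."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j across a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    var_a = sum((p - ma) ** 2 for p in a)
    var_b = sum((q - mb) ** 2 for q in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined when one variable is constant")
    return cov / math.sqrt(var_a * var_b)


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two paired sequences of length >= 3")
    return pearson(average_ranks(x), average_ranks(y))


def permutation_p_value(x, y, n_perm=10_000, seed=0):
    """Approximate two-sided permutation p-value for Spearman's rho."""
    observed = abs(spearman_rho(x, y))
    rx, ry = average_ranks(x), average_ranks(y)
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(ry)  # shuffling y's ranks breaks any pairing with x
        if abs(pearson(rx, ry)) >= observed - 1e-12:
            hits += 1
    return (hits + 1) / (n_perm + 1)  # add-one keeps the estimate above 0
```

With inquiry levels coded as ordinal integers (an assumed coding such as 0 = confirmation, 1 = structured, 2 = guided, 3 = open) and the matching utility totals in the same order, `permutation_p_value(levels, utility)` returns a p-value to compare against 0.05. A permutation test avoids leaning on large-sample approximations, which is helpful with only a few dozen labs, and the average ranks handle the many ties that ordinal codes produce.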

Conclusions and future work

Rock and mineral activities are integral components of introductory geology courses (Egger, 2019; Ryker & McConnell, 2017), providing critical opportunities for learning and forming the bedrock on which later geological concepts are built. Utility plays a leading role in instructors’ choices about what to implement in introductory labs (Henderson et al., 2011; Henderson & Dancy, 2007, 2009; McConnell et al., 2017). By necessity, an instructor selecting lab activities must balance their efficacy in generating desired outcomes (e.g., learning gains or interest) against the time and effort it will take to implement them. A rough measure of the perceived utility of a lab is therefore valuable to instructors deciding which laboratory activities to include. Perceived utility may affect whether these course materials are adopted, despite instructor interest in inquiry-based instruction.

While none of the labs evaluated in this study ranked as “low utility,” we expect the rubric itself to have applications in other studies or descriptions of lab activities. Additionally, examining the individual criteria that drive utility scores for rock and mineral labs may be instructive. Our study supports the idea that these labs also offer students opportunities to engage in authentic scientific processes without being overly cumbersome for an instructor. To our knowledge, this study is the first to present a dual analysis of inquiry and utility for published rock and mineral activities. Surprisingly, our results showed no significant relationship between inquiry level and utility. This finding counters the prevailing notion that increasing inquiry comes at the expense of utility (Apedoe et al., 2006).

Future work should examine the utility and inquiry levels of other topics within introductory geoscience to determine how readily more inquiry-based instruction could be implemented. Additionally, it would be interesting to investigate whether activities within TTE have higher overall inquiry and utility values because of its intake and peer review processes (as compared with published and “in-house” lab manuals). The high scores observed in this study may reflect the overall nature of the collection rather than just the rock and mineral labs published within it. Further investigation could include an examination of activities on other topics and a more detailed comparison between the inquiry rubric and TTE’s peer review rubric. Comparisons of inquiry and utility in additional activities could reveal other ways to better incorporate inquiry, not only into rock and mineral labs but also into other geoscience and STEM topics. They could also reveal ways in which more traditional “cookbook” labs in published lab manuals could provide opportunities for student autonomy. Though instructors may have different perceptions of utility, the utility rubric developed for this study serves as an additional tool for characterizing concerns and opportunities regarding laboratory activities on different topics.

Data File, JGE Supplement.xlsx (MS Excel, 16.1 KB)

Data File, JGE Supplement.pdf (PDF, 215.9 KB)

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- Apedoe, X. S., Walker, S. E., & Reeves, T. C. (2006). Integrating inquiry-based learning into undergraduate geology. Journal of Geoscience Education, 54(3), 414–421. https://doi.org/10.5408/1089-9995-54.3.414

- Aronson, E. (1978). The jigsaw classroom. SAGE Publications.

- Barron, B., & Darling-Hammond, L. (2008). Teaching for meaningful learning: A review of research on inquiry-based and cooperative learning. Book excerpt. George Lucas Educational Foundation.

- Bell, R. L., Smetana, L., & Binns, I. (2005). Simplifying inquiry instruction. The Science Teacher, 72(7), 30–33.

- Bickmore, B. (2009). Rock types lab. Examples. Retrieved December 15, 2020, from https://serc.carleton.edu/sp/library/indoorlabs/examples/35316.html

- Buck, L. B., Bretz, S. L., & Towns, M. H. (2008). Characterizing the level of inquiry in the undergraduate laboratory. Journal of College Science Teaching, 38(1), 52–58.

- Bunterm, T., Lee, K., Kong, J. N. L., Srikoon, S., Vangpoomyai, P., Rattanavongsa, J., & Rachahoon, G. (2014). Do different levels of inquiry lead to different learning outcomes? A comparison between guided and structured inquiry. International Journal of Science Education, 36(12), 1937–1959. https://doi.org/10.1080/09500693.2014.886347

- Domin, D. S. (1999). A review of laboratory instruction styles. Journal of Chemical Education, 76(4), 543. https://doi.org/10.1021/ed076p543

- Egger, A. (2019). The role of introductory geoscience courses in preparing teachers—and all students—for the future: Are we making the grade? GSA Today, 29(10), 4–10. https://doi.org/10.1130/GSATG393A.1

- Fall, R., Webb, N. M., & Chudowsky, N. (2000). Group discussion and large-scale language arts assessment: Effects on students’ comprehension. American Educational Research Journal, 37(4), 911–941. https://doi.org/10.3102/00028312037004911

- Fasse, B. B., & Kolodner, J. L. (2013). Evaluating classroom practices using qualitative research methods: Defining and refining the process. Fourth International Conference of the Learning Sciences, 4, 193–198.

- Feig, A. D. (2011). Methodology and location in the context of qualitative data and theoretical frameworks in geoscience education research. In A. D. Feig & A. Stokes (Eds.), Qualitative inquiry in geoscience education research. Geological Society of America.

- Fleiss, J. L. (1971). Measuring nominal scale agreement among many raters. Psychological Bulletin, 76(5), 378–382. https://doi.org/10.1037/h0031619

- Forcino, F. L. (2013). The importance of a laboratory section on student learning outcomes in a university introductory earth science course. Journal of Geoscience Education, 61(2), 213–221. https://doi.org/10.5408/12-412.1

- Francek, M. (2006). Promoting discussion in the science classroom using gallery walks. Journal of College Science Teaching, 36(1), 27–31.

- Gormally, C., Brickman, P., Hallar, B., & Armstrong, N. (2009). Effects of inquiry-based learning on students’ science literacy skills and confidence. International Journal for the Scholarship of Teaching and Learning, 3(2), n2. https://doi.org/10.20429/ijsotl.2009.030216

- Gormally, C., Sullivan, C. S., & Szeinbaum, N. (2016). Uncovering barriers to teaching assistants (TAs) implementing inquiry teaching: Inconsistent facilitation techniques, student resistance, and reluctance to share control over learning with students. Journal of Microbiology & Biology Education, 17(2), 215–224. https://doi.org/10.1128/jmbe.v17i2.1038

- Gray, K. (2017). Assessing gains in science teaching self-efficacy after completing an inquiry-based earth science course. Journal of Geoscience Education, 65(1), 60–71. https://doi.org/10.5408/14-022.1

- Gray, M. E., & Holyoak, K. J. (2021). Teaching by analogy: From theory to practice. Mind, Brain, and Education, 15(3), 250–263. https://doi.org/10.1111/mbe.12288

- Grissom, A. N., Czajka, C. D., & McConnell, D. A. (2015). Revisions of physical geology laboratory courses to increase the level of inquiry: Implications for teaching and learning. Journal of Geoscience Education, 63(4), 285–296. https://doi.org/10.5408/14-050.1

- Hamari, J., Koivisto, J., & Sarsa, H. (2014, January). Does gamification work? A literature review of empirical studies on gamification. In 2014 47th Hawaii International Conference on System Sciences (pp. 3025–3034). IEEE.

- Henderson, C., Beach, A., & Finkelstein, N. (2011). Facilitating change in undergraduate STEM instructional practices: An analytic review of the literature. Journal of Research in Science Teaching, 48(8), 952–984. https://doi.org/10.1002/tea.20439

- Henderson, C., & Dancy, M. H. (2007). Barriers to the use of research-based instructional strategies: The influence of both individual and situational characteristics. Physical Review Special Topics – Physics Education Research, 3(2), 020102. https://doi.org/10.1103/PhysRevSTPER.3.020102

- Henderson, C., & Dancy, M. H. (2009). Impact of physics education research on the teaching of introductory quantitative physics in the United States. Physical Review Special Topics – Physics Education Research, 5(2), 020107. https://doi.org/10.1103/PhysRevSTPER.5.020107

- Johnson, D. W., & Johnson, R. T. (1999). Making cooperative learning work. Theory into Practice, 38(2), 67–73. https://doi.org/10.1080/00405849909543834

- Justice, C., Rice, J., Roy, D., Hudspith, B., & Jenkins, H. (2009). Inquiry-based learning in higher education: Administrators’ perspectives on integrating inquiry pedagogy into the curriculum. Higher Education, 58(6), 841–855. https://doi.org/10.1007/s10734-009-9228-7

- Kuo, Y.-R., Tuan, H.-L., & Chin, C.-C. (2020). The influence of inquiry-based teaching on male and female students’ motivation and engagement. Research in Science Education, 50(2), 549–572. https://doi.org/10.1007/s11165-018-9701-3

- Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174.

- Lazarides, R., Watt, H. M. G., & Richardson, P. W. (2020). Teachers’ classroom management self-efficacy, perceived classroom management and teaching contexts from beginning until mid-career. Learning and Instruction, 69, 101346. https://doi.org/10.1016/j.learninstruc.2020.101346

- Manduca, C. A., Iverson, E. R., Luxenberg, M., Macdonald, R. H., McConnell, D. A., Mogk, D. W., & Tewksbury, B. J. (2017). Improving undergraduate STEM education: The efficacy of discipline-based professional development. Science Advances, 3(2), e1600193. https://doi.org/10.1126/sciadv.1600193

- Marshall, C., & Rossman, G. B. (2014). Designing qualitative research. SAGE Publications.

- McConnell, D. A., Chapman, L., Czajka, C. D., Jones, J. P., Ryker, K. D. A., & Wiggen, J. (2017). Instructional utility and learning efficacy of common active learning strategies. Journal of Geoscience Education, 65(4), 604–625. https://doi.org/10.5408/17-249.1

- McDaris, J. R., Iverson, E. R., Manduca, C. A., & Huyck Orr, C. (2019). Teach the Earth: Making the connection between research and practice in broadening participation. Journal of Geoscience Education, 67(4), 300–312. https://doi.org/10.1080/10899995.2019.1616272

- Meijer, H., Hoekstra, R., Brouwer, J., & Strijbos, J.-W. (2020). Unfolding collaborative learning assessment literacy: A reflection on current assessment methods in higher education. Assessment & Evaluation in Higher Education, 45(8), 1222–1240. https://doi.org/10.1080/02602938.2020.1729696

- Nelson, K. G., Huysken, K., & Kilibarda, Z. (2010). Assessing the impact of geoscience laboratories on student learning: Who benefits from introductory labs? Journal of Geoscience Education, 58(1), 43–50. https://doi.org/10.5408/1.3544293

- Patton, M. Q. (2002). Two decades of developments in qualitative inquiry: A personal, experiential perspective. Qualitative Social Work, 1(3), 261–283. https://doi.org/10.1177/1473325002001003636

- Pound, K. (2005). Rock & mineral bingo. Teaching materials collection. Retrieved December 15, 2020, from https://nagt.org/nagt/teaching_resources/teachingmaterials/9331.html

- Reid, L., Cowie, B., & Speta, M. (2011). Learning assessment #3—igneous & sedimentary rocks. Activities. https://serc.carleton.edu/NAGTWorkshops/intro/activities/66373.html

- Ryker, K. D. A. (2014). An evaluation of classroom practices, inquiry and teaching beliefs in introductory geoscience classrooms. North Carolina State University.

- Ryker, K. D. A., & McConnell, D. (2014). Can graduate teaching assistants teach inquiry-based geology labs effectively? Journal of College Science Teaching, 44(1), 56–63. https://doi.org/10.2505/4/jcst14_044_01_56

- Ryker, K. D. A., & McConnell, D. A. (2017). Assessing inquiry in physical geology laboratory manuals. Journal of Geoscience Education, 65(1), 35–47. https://doi.org/10.5408/14-036.1

- Sandoval, W. A. (2005). Understanding students’ practical epistemologies and their influence on learning through inquiry. Science Education, 89(4), 634–656. https://doi.org/10.1002/sce.20065

- Savina, M. (2004). Cemetery geology. Field lab examples. Retrieved December 15, 2020, from https://serc.carleton.edu/introgeo/field_lab/examples/cemetery.html

- Schwab, J. J. (1958). The teaching of science as inquiry. Bulletin of the Atomic Scientists, 14(9), 374–379. https://doi.org/10.1080/00963402.1958.11453895

- SERC. (2023a). SERC. https://serc.carleton.edu/index.html

- SERC. (2023b). Activity review. https://serc.carleton.edu/teachearth/activity_review.html

- Slater, S. J., Slater, T. F., & Shaner, A. (2008). Impact of backwards faded scaffolding in an astronomy course for pre-service elementary teachers based on inquiry. Journal of Geoscience Education, 56(5), 408–416. https://doi.org/10.5408/jge_nov2008_slater_408

- Spector, B., Burkett, R. S., & Leard, C. (2007). Mitigating resistance to teaching science through inquiry: Studying self. Journal of Science Teacher Education, 18(2), 185–208. https://doi.org/10.1007/s10972-006-9035-2

- Strijbos, J.-W. (2011). Assessment of (computer-supported) collaborative learning. IEEE Transactions on Learning Technologies, 4(1), 59–73. https://doi.org/10.1109/TLT.2010.37

- Teach the Earth. (2023). Teach the Earth. https://serc.carleton.edu/teachearth/index.html

- Wiese, K. (2020). Metamorphic rock identification. Teaching activities. Retrieved December 15, 2020, from https://serc.carleton.edu/teachearth/activities/236441.html