Abstract

Introduction

The ultimate objective of the metaverse is to use brain-computer interface (BCI) technology to create a new digital life system that integrates and even enhances reality and virtuality. Efficient and natural interaction between the virtual and real worlds is one of the key technologies of the metaverse. Augmented reality-based BCI (AR-BCI) technology provides a channel for direct interaction between the brain and the virtual world by decoding the human brain's response to AR stimuli in real time. The development of the metaverse therefore requires the advancement of AR-BCI technology.

Method

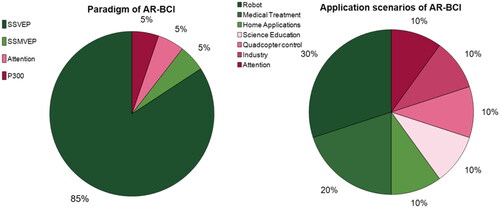

This paper began with the concepts of, and interrelationships between, the metaverse, BCI, and AR technologies. The technical characteristics and potential applications of AR-BCI were then introduced, and the research progress of three typical AR-BCI paradigms, namely steady-state visual evoked potential (SSVEP), P300, and motor imagery (MI), was summarized.

Conclusion

The survey results indicated that using optical see-through (OST) glasses to present the SSVEP paradigm has become the key breakthrough direction of current AR-BCI research. Medical care and robot control are currently the main application areas of AR-BCI. In the future, AR-BCI will be an important driver of the rapid evolution of the metaverse.

1. The development stage of the metaverse

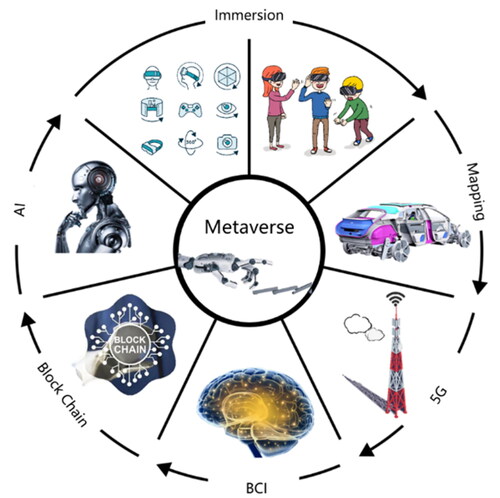

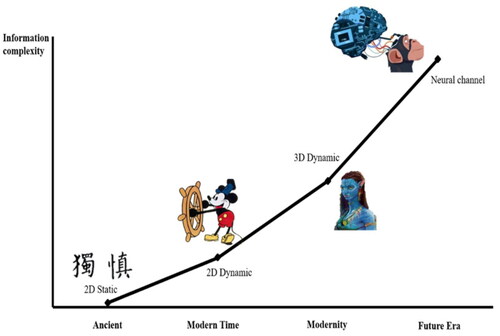

The metaverse is one of the most eye-catching technological innovations of recent years [Citation1]. With the help of augmented reality (AR) technology, the metaverse transcends the limitations of the traditional physical world by building an immersive data mirror world that uses the real world as a mapping template [Citation2,Citation3]. This virtual world can not only be self-contained, self-circulating, and self-renewing, but can also interact with the real world in a new type of time and space, building a brand-new digital life system that integrates and even enhances reality and virtuality [Citation4]. As shown in the accompanying figure, the metaverse integrates several key technologies, each with its specific role and function. Augmented Reality (AR) technology overlays virtual information onto the real world, providing a richer, immersive user experience. Virtual Reality (VR) technology creates entirely virtual environments, immersing users in a new digital world. Brain-Computer Interface (BCI) technology allows users to interact and control using brain signals, enabling direct communication between the brain and the external world. Blockchain ensures data security, transparency, and tamper-proof transactions, forming a secure foundation for interactions within the metaverse. The 5G communication system offers high-speed, low-latency network connectivity, facilitating instant communication and connectivity among various devices in the metaverse. The integration of these technologies creates a multidimensional, highly interactive, and feature-rich digital space, offering unprecedented experiences and possibilities for users [Citation1, Citation5]. At present, there is no established boundary for the metaverse, and its concept is constantly evolving. In particular, professionals from a variety of sectors are experimenting with new ideas in the fields of medicine, video games, urban planning [Citation6], and online education [Citation7].

The fundamental tenet of the metaverse is the hypothesis of virtual reality compensation: individuals want to make up in the virtual world for what they lack in the real one, which makes fiction a driving force behind human civilization. As seen in the accompanying figure, virtual objects range from historical 2D static objects such as calligraphy and paintings to contemporary 2D dynamic movies and well-known 3D dynamic virtual games. According to experts’ predictions, if the metaverse achieves its ultimate state, people will be freed from the restrictions of space and time, and human intelligence will evolve further with an efficiency that cannot yet be calculated [Citation8].

The concept of the metaverse evolves across three stages: the virtual metaverse, the brain-connected metaverse, and the consciousness metaverse [Citation2]. Initially, hardware limitations prevented immediate integration into the metaverse. Specific equipment could accurately simulate only a few visual or auditory sensations, making it challenging to replicate other sensory experiences. In the virtual metaverse, VR or AR devices are usually used as display carriers. BCI is the key technological advancement of the brain-connected stage, and with its help humans have started to enter the brain-connected metaverse. At the final stage, we will be able to experience sensory information from the outside environment directly through electrical signals instead of through external devices. We are currently in the transitional period between the first and second stages, in which the technical reserve is shifting from AR, the basic technology of the first stage, to BCI. At this point, the merging of AR and BCI technologies is inevitable, and it will have a favorable impact on how the metaverse develops.

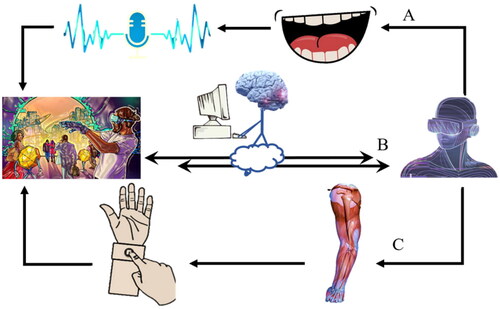

As shown in Figure 3(a) and (c), traditional modes of interaction in the metaverse include familiar tools such as keyboards, mice, gaze-based interactions, gesture controls, and voice commands. These methods are user-friendly and easily adaptable. However, they rely on external devices or input methods to mediate the interaction with the virtual environment, so the interaction is indirect. As shown in Figure 3(b), AR-BCI provides a direct connection between the user’s brain and the virtual environment by decoding brainwave signals. It brings a deeper sense of immersion and more personalized experiences, enabling users to interact more naturally with the virtual environment. For users with physical disabilities or communication barriers, AR-BCI could also offer expanded possibilities. Therefore, the emergence of AR-BCI holds significant potential for transforming human-machine interaction and user experiences.

Figure 3. Comparison between traditional modes of interaction in the metaverse and AR-BCI. (a) Depicts the process of interacting with the metaverse using voice; (b) illustrates the process of interacting with the metaverse using a brain-computer interface (BCI); (c) demonstrates the process of interacting with the metaverse using gesture controls.

In recent years, AR technology, which creates a new virtual environment, and BCI technology, which offers a new way for the human brain to communicate with the outside world, have developed particularly fast. A pioneering endeavor to integrate these two technologies is currently underway and holds great significance for the evolution of the metaverse. This review aims to provide an overview of the current state of combined BCI and AR systems. The second section briefly introduces the origin and development history of BCI. The third section presents the principle of AR technology and its applications. The fourth section then examines the potential applications arising from the fusion of AR and BCI technologies and outlines the research progress of three exemplary AR-BCI systems. Finally, our findings and insights are discussed in the concluding sections.

2. History of brain-computer interface

Professor Hawking, a renowned physicist who passed away in 2018, battled amyotrophic lateral sclerosis (ALS). This disease deteriorates peripheral and central motor systems but minimally impacts sensory or cognitive functions. Consequently, he could only generate computer output at a rate of one word per minute by utilizing eye movements. His remarkable research contributions were significantly hindered by his slow typing speed, posing a substantial loss to astrophysics. Apart from ALS, paralysis resulting from brain injury, cerebral infarction, epilepsy [Citation9], and traumatic car accidents can sever the connection between a patient’s brain or volition and their limbs or organs [Citation10]. Even after such illnesses, many patients retain healthy nerve cells and cerebral cortex activity. Their challenge lies in the disruption of signals sent by the cerebral cortex, which prevents instructions from reaching their limbs or organs. BCI technology stands as a promising solution to address these issues. It captures “human thought” from the brain using acquisition devices, decodes these signals via a computer, and subsequently transmits them to control external effectors such as spelling devices, manipulators, and intelligent wheelchairs [Citation11]. BCI was initially developed to aid individuals with physical impairments, with applications including robotic arms [Citation12], smart home appliances [Citation13], and mobile phone dialing [Citation14], and its use has since expanded. Non-invasive BCI technology now enables healthy individuals to operate drones and games. In the past few decades, the great potential of BCI has attracted a large number of researchers working in different directions. In 1988, Farwell et al. developed the first P300-BCI system, which communicated with a computer by means of the P300 potential and could perform word-spelling tasks [Citation15].
At the first international BCI meeting, held in 1999, the concept of BCI was clearly defined for the first time: a BCI creates a unique pathway for communication between brain activity and external devices, enabling direct brain control of devices without the use of muscles or peripheral nerves [Citation16,Citation17]. Since then, BCI technology based on the classical paradigms has developed rapidly [Citation18]. Gao et al. conducted a series of studies on the paradigm designs, recognition algorithms, and application scenarios of SSVEP-BCI [Citation19]. The Graz-BCI team explored the prospects of MI-BCI in clinical applications [Citation20]. Serby et al. improved the traditional P300-BCI system so that it achieved a high communication rate while maintaining a lower error rate [Citation21]. In 2004, Schalk et al. released a general BCI research platform, BCI2000, which greatly reduced the workload of building a BCI system and attracted researchers in related fields to BCI research [Citation22]. Hochberg et al. built a BCI-controlled robotic arm system in 2012, and their results showed that two people with long-standing tetraplegia could use it to feed themselves [Citation23]. In fact, the brain not only communicates with computers or equipment but also interacts with the external environment and organs in the body. To describe the interaction of the brain more comprehensively and accurately, Yao first proposed the definition of brain–apparatus interaction at the first Chinese brain-computer interface competition workshop in 2010, and in 2020 formally proposed the concept of brain–apparatus conversation (BAC) based on the development of interaction technology between the brain and apparatus [Citation24]. In 2011, Takano et al. first applied the P300 experimental paradigm to AR displays [Citation25].
Our study also showed that an AR-BCI system running on transparent head-mounted displays may help to build an advanced intelligent environment [Citation26,Citation27]. These prior studies paved the way for the combination of BCI and AR technologies.

3. Principle of AR technology

The fundamental concept of an AR display involves presenting users with translucent images that adjust as they move [Citation28]. In essence, an object’s reflected light generates a two-dimensional image on the observer’s retina. AR technology manipulates the light beam produced by the light source, modifying it based on image information following a specific path and sequence over time [Citation29]. The optical projection system projects the predetermined outgoing light onto the retina of the human eye [Citation30]. If we simultaneously project a suitable two-dimensional image and a real-world image onto the observer’s retina, then the two-dimensional virtual image will transform into a “three-dimensional object” in reality after the aberration processing of the human cerebral cortex [Citation31,Citation32]. We can create the illusion of seeing three-dimensional objects in the real world from the perspective of the observer [Citation33].

AR technology integrates many features that flat-panel display technology does not have. It can add virtual information that does not exist in reality [Citation34] and integrate real scenes and virtual information by building a new large-world coordinate system [Citation35], so that virtual and real information enter the human eye simultaneously without any delay. AR enhances human perception of the world. If AR technology is combined with 5G technology, ultra-low latency and an extremely high data communication rate can be achieved [Citation36]. In recent years, AR technology has developed rapidly, and some application scenarios have been realized in the fields of clinical medicine [Citation37], health monitoring [Citation38], navigation [Citation39], and engineering [Citation40]. Furthermore, with the explosive growth of powerful and affordable mobile devices and the construction of advanced communication infrastructure, wearable AR devices are becoming more and more popular [Citation41].

At this stage, the metaverse is still gradually migrating from traditional fixed devices to mobile and even wearable devices [Citation42]. Video game interaction devices have been upgraded from computers to mobile phones and are now evolving into AR/VR glasses. If AR technology achieves another breakthrough, it will greatly improve the efficiency and speed of human-computer interaction [Citation43]. Given that the metaverse is developing towards mobile display, AR equipment is its future entrance.

4. Current research progress of AR-BCI

Compared with traditional display technology, AR display has many advantages. Combining BCI technology with AR technology opens a new research field that could even revolutionize many industries, including healthcare and robotics [Citation44]. Users can issue neural commands to operate controls in AR and thereby improve their lives. Traditional BCI applications use bulky computer screens to present stimulation paradigms, but the large size and weight of the display are inconvenient, and the display obscures the user’s vision.

The eyes are an important window for human beings to express emotions and transmit information. Eye contact allows us to better express our own emotions and receive the emotions of others, which promotes effective communication [Citation45]. In traditional BCI systems based on visual evoked EEG signals, computer screens and VR glasses are usually used to present visual stimuli. These displays hinder direct communication between users and the outside world and cause some information to be lost, whereas AR glasses avoid this problem. On the other hand, BCI technology provides an efficient interaction channel for head-mounted AR devices. For example, in AR-assisted surgery, the doctor’s hands must be kept sterile and cannot operate AR glasses, so BCI technology is a well-suited hands-free alternative [Citation46]. Many research fields are being advanced by the combination of AR and BCI technology. Arquissandas et al. developed an AR-BCI system that can change the environment to perform exposure therapy for users, and experiments showed that it can adjust the mood of patients and contribute to recovery from depression [Citation47]. Barresi et al. employed AR-BCI technology to couple the user’s attention to the control of a surgical robot and used AR to give the surgeon a view of the real surgical environment. The length of the laser scalpel depends on the user’s level of attention, making it convenient to observe and receive feedback in real time [Citation48]. Zeng et al. proposed a technique that gives users visual feedback through AR devices and combines eye tracking with EEG-based BCI to enable intuitive and efficient control of a robot arm when performing object manipulation tasks while avoiding obstacles in the workspace [Citation49]. These studies indicate that AR-BCI has great advantages over traditional BCI.
Next, the existing AR-BCI research is summarized according to the category of stimulus paradigm.

4.1. AR-BCI based on P300

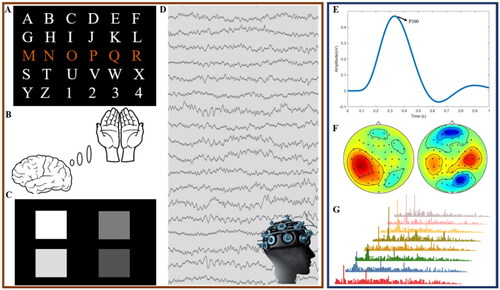

As shown in Figure 4(a), the oddball stimulation paradigm, which alternates two events with different probabilities to induce the P300 in the brain waves, is the most well-known experimental design in P300-BCI [Citation50]. As shown in Figure 4(e), the P300 is a type of event-related potential named for its positive peak, which arises about 300 ms after stimulation [Citation51]. It is highly responsive to the target stimulus, and the lower the probability of the target stimulus, the greater the amplitude of the evoked P300 [Citation52]. Using these generation rules, direct communication between the brain and external devices can be realized, as in the P300 speller [Citation53].
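
The decoding idea behind an oddball-based selector can be illustrated with a minimal sketch. It is not the method of any cited study: the function names, the 0.25–0.45 s scoring window, and the synthetic data generator are all assumptions made for illustration. Epochs time-locked to each stimulus are averaged to suppress background EEG, and the stimulus whose averaged response has the largest positive deflection around 300 ms is taken as the attended target.

```python
import numpy as np

def average_erp(epochs):
    """Average single-trial epochs (trials x samples) into one ERP waveform."""
    return np.asarray(epochs, dtype=float).mean(axis=0)

def p300_score(epochs, fs, window=(0.25, 0.45)):
    """Mean amplitude of the averaged ERP inside a post-stimulus window (seconds).

    The window is an illustrative assumption, not a value from the source.
    """
    erp = average_erp(epochs)
    start, stop = int(window[0] * fs), int(window[1] * fs)
    return erp[start:stop].mean()

def pick_target(epochs_by_stimulus, fs):
    """Return the stimulus label whose averaged response scores highest."""
    return max(epochs_by_stimulus,
               key=lambda label: p300_score(epochs_by_stimulus[label], fs))

def simulate_epochs(rng, n_trials, fs, with_p300, duration=0.8, noise_std=5.0):
    """Synthetic epochs: Gaussian noise, plus a positive bump near 300 ms for targets."""
    n_samples = int(round(duration * fs))
    t = np.arange(n_samples) / fs
    bump = 5.0 * np.exp(-((t - 0.3) ** 2) / (2 * 0.05 ** 2)) if with_p300 else 0.0
    return bump + rng.normal(0.0, noise_std, size=(n_trials, n_samples))

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    fs = 250
    data = {
        "A": simulate_epochs(rng, 30, fs, with_p300=True),   # attended stimulus
        "B": simulate_epochs(rng, 30, fs, with_p300=False),
        "C": simulate_epochs(rng, 30, fs, with_p300=False),
    }
    print("Selected stimulus:", pick_target(data, fs))
```

Practical P300 spellers typically replace the simple window average with a trained classifier, but the averaging step shows why several stimulus repetitions are usually needed before a selection can be made.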

Figure 4. Three common paradigms used in designing AR-BCI. (a–c) represent the experimental paradigms for P300, MI, and SSVEP, respectively. (d) Displays the collected EEG signals, (e) shows the time-domain waveform of P300, (f) exhibits the energy topography of imagined left and right-hand movements, and (g) depicts the frequency-domain waveform of SSVEP.

In the initial stage of applying AR technology to BCI systems, many studies were based on P300. In 2010, Lenhardt et al. combined AR and an asynchronous P300-based BCI to control a robotic actuator, and the experimental results showed that subjects could move a specific object among several objects on a table by concentrating on it [Citation54]. Takano et al. added AR to a P300-based BCI in 2011 and demonstrated that it could help build advanced intelligent environments [Citation25]. In 2017, Kerous designed an AR-BCI system based on P300, discussed the design options, and outlined directions for future work [Citation55]. To compare the performance of P300-BCI in AR and VR, Kim et al. created a BCI application in 2021 that can be used to control aircraft in VR and AR [Citation56]. Although P300-based AR-BCI started earlier, research and papers on it have declined in recent years with the gradual rise of other paradigms.

4.2. AR-BCI based on MI

As shown in Figure 4(b) and (f), the EEG rhythmic energy of the contralateral cortex decreases dramatically when the brain controls or imagines the movement of a limb on one side, a phenomenon called event-related desynchronization (ERD) [Citation57]. After the limb movement or imagination, the energy of the contralateral EEG rhythm returns to its previous level, a phenomenon called event-related synchronization (ERS). The different spatial and frequency band characteristics of the ERD/ERS associated with different body parts are the theoretical basis of MI-BCI decoding. On this basis, the human body’s motion intention can be decoded in real time by utilizing appropriate classification algorithms [Citation58].
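
As a rough illustration of how ERD can be quantified, the sketch below expresses the band power during a task interval as a percentage change relative to a reference (rest) interval, so that negative values indicate desynchronization. The sign convention, the 8–13 Hz band, and the toy two-channel rule in `decode_hand` are assumptions made for illustration; the source does not specify a formula, and practical MI-BCI decoders use trained spatial filters and classifiers instead.

```python
import numpy as np

def band_power(x, fs, band):
    """Power of signal x inside a frequency band (Hz), summed over FFT bins."""
    x = np.asarray(x, dtype=float)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    power = np.abs(np.fft.rfft(x)) ** 2 / x.size
    mask = (freqs >= band[0]) & (freqs <= band[1])
    return power[mask].sum()

def erd_percent(reference, task, fs, band=(8.0, 13.0)):
    """Relative band-power change in percent; negative values mean ERD."""
    p_ref = band_power(reference, fs, band)
    p_task = band_power(task, fs, band)
    return 100.0 * (p_task - p_ref) / p_ref

def decode_hand(erd_c3, erd_c4):
    """Toy rule: the hemisphere with the stronger (more negative) ERD is
    contralateral to the imagined hand (C3 lies over the left hemisphere)."""
    return "right" if erd_c3 < erd_c4 else "left"

if __name__ == "__main__":
    fs = 250.0
    t = np.arange(500) / fs                      # 2 s of data
    rest = 2.0 * np.sin(2 * np.pi * 10 * t)      # strong 10 Hz rhythm at rest
    imagery = 0.5 * rest                         # rhythm attenuated during imagery
    print("ERD (%):", erd_percent(rest, imagery, fs))
```

Halving the rhythm amplitude quarters its power, so the synthetic example corresponds to an ERD of about 75% below the reference level.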

In 2010, Chin et al. designed an AR 3D virtual hand that was superimposed on the real hand for MI-BCI feedback, so that subjects could receive continuous visual feedback from the MI-BCI through the movements of the virtual hand. After the experiment, subjects reported that the AR feedback was more engaging and motivating than conventional horizontal bar feedback [Citation59]. In 2020, Choi et al. combined MI- and SSVEP-based BCI with AR equipment and designed a new asynchronous hybrid AR-BCI system for navigating quadcopter flight [Citation60]. According to the 2021 survey by Al-Qaysi et al., MI-BCI applications are more commonly integrated with VR environments [Citation61].

4.3. AR-BCI based on SSVEP

As shown in Figure 4, when the retina is stimulated by flickering at a fixed frequency, the electrical activity of neurons in the human cerebral cortex is modulated, producing a continuous response at the stimulation frequency or its harmonics. This EEG signal, which has a periodic rhythm similar to that of the visual stimulus, is called SSVEP [Citation62]. Spectral peaks at the stimulus frequency and its harmonics are the primary characteristic of SSVEP [Citation63]. Although some noise is unavoidable, SSVEP is still widely used because it has a stable spectrum and a high signal-to-noise ratio (SNR), requires no training, and is less affected by EMG noise [Citation64].
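
Because the defining feature of SSVEP is a spectral peak at the stimulus frequency and its harmonics, the simplest possible decoder compares spectral amplitude at each candidate frequency. The sketch below is a minimal illustration under assumed parameters (a Hann window, two harmonics, nearest-bin lookup, and hypothetical function names); it is not the algorithm used in the cited studies, which commonly rely on more robust methods such as canonical correlation analysis.

```python
import numpy as np

def ssvep_score(x, fs, freq, n_harmonics=2):
    """Sum of spectral amplitudes at a candidate frequency and its harmonics."""
    x = np.asarray(x, dtype=float)
    spectrum = np.abs(np.fft.rfft(x * np.hanning(x.size)))
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    score = 0.0
    for h in range(1, n_harmonics + 1):
        nearest_bin = np.argmin(np.abs(freqs - h * freq))
        score += spectrum[nearest_bin]
    return score

def classify_ssvep(x, fs, candidates, n_harmonics=2):
    """Return the candidate flicker frequency with the largest spectral score."""
    return max(candidates, key=lambda f: ssvep_score(x, fs, f, n_harmonics))

if __name__ == "__main__":
    rng = np.random.default_rng(1)
    fs = 250.0
    t = np.arange(500) / fs                          # 2 s stimulus window
    eeg = (np.sin(2 * np.pi * 12 * t)                # response at 12 Hz
           + 0.5 * np.sin(2 * np.pi * 24 * t)        # and its second harmonic
           + rng.normal(0.0, 1.0, t.size))           # background noise
    print("Detected frequency:", classify_ssvep(eeg, fs, [8.0, 10.0, 12.0, 15.0]))
```

Longer windows sharpen the spectral peaks, which is consistent with the trade-off between stimulus presentation time and recognition accuracy discussed for AR-based SSVEP systems.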

Combining AR technology with SSVEP-BCI is a good choice to improve the practicability of the BCI system. Since 2013, Microsoft, Google and other companies have successively launched various types of AR glasses, which greatly increased the wearability of AR display devices. AR technology facilitates more natural information interaction and simplifies experimental equipment, offering a novel perspective on how to develop the SSVEP-BCI system. The extended virtual flickering stimuli and the real environment appear in the eyes at the same time, increasing the amount of information perceived by the human brain, and enabling users to perform SSVEP experimental tasks in a more realistic environment.

Horri et al. extended the SSVEP experiment to the AR display and verified that SSVEP can be induced in the subject’s brain by rhythmically altering the contrast and brightness of the stimulus in the AR setting [Citation65]. To make the interaction between the AR environment and the actual world more natural, Faller et al. employed four stimulus blocks of different frequencies in the AR environment as commands and achieved control of a robot’s route in the real world [Citation66]. Si-Mohammed et al. compared the AR-SSVEP experiment with the traditional computer screen-based (CS) SSVEP experiment. Their results showed no significant difference in recognition accuracy when the stimulus window was longer than 2 s, which indicates that it is feasible to conduct SSVEP-BCI experiments on AR devices and that SSVEP-BCI can be ported from computer screens to AR glasses [Citation67]. Ke et al. implemented an eight-class BCI in an optical see-through AR (OST-AR) headset and evaluated the accuracies, information transfer rates (ITRs), and SSVEP signal characteristics of the AR-BCI and CS-BCI, which provided methodological guidelines for developing more wearable BCIs in OST-AR environments [Citation68]. Recently, Zhang et al. explored the influence of the layout, number, color, and other parameters of SSVEP stimuli in AR glasses on the accuracy and ITR of the BCI system, and showed that the experience and rules obtained on computer screens cannot be copied directly when designing SSVEP-BCI systems in AR [Citation26,Citation27]. It is worth noting that when SSVEP-BCI is deployed in AR, the advantage of its large instruction set can still be preserved, but a longer stimulus presentation time and more efficient decoding algorithms are required [Citation26].
In addition, using AR devices to present flashing stimuli has the advantage of direct interaction with the real environment, but it also exposes the induced SSVEP signal to interference from ambient light, which requires special attention when designing and using SSVEP-based AR-BCI [Citation27].
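
The ITR figures reported in such comparisons are commonly computed with the Wolpaw definition, which combines the number of selectable targets, the recognition accuracy, and the time needed per selection. The sketch below assumes that definition; the source does not state which formula each cited study used, and the function names are hypothetical.

```python
import math

def itr_bits_per_selection(n_targets, accuracy):
    """Wolpaw information per selection (bits) for N targets and accuracy P:
    log2(N) + P*log2(P) + (1 - P)*log2((1 - P) / (N - 1))."""
    if n_targets < 2:
        raise ValueError("n_targets must be at least 2")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must lie in [0, 1]")
    bits = math.log2(n_targets)
    if accuracy > 0.0:                      # P*log2(P) tends to 0 as P -> 0
        bits += accuracy * math.log2(accuracy)
    if accuracy < 1.0:                      # error term vanishes when P = 1
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_targets - 1))
    return bits

def itr_bits_per_minute(n_targets, accuracy, seconds_per_selection):
    """Scale bits per selection by the number of selections made per minute."""
    return itr_bits_per_selection(n_targets, accuracy) * 60.0 / seconds_per_selection

if __name__ == "__main__":
    # Eight targets, perfect accuracy, one selection every 2 s.
    print(itr_bits_per_minute(8, 1.0, 2.0))
```

The formula makes the trade-off explicit: a longer stimulus window may raise accuracy, but it also lowers the number of selections per minute, so ITR does not necessarily improve.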

SSVEP-based AR-BCI has been explored in the fields of robot control and surgery. Zhang et al. developed a humanoid robot controlled by SSVEP-BCI with AR stimulation, and the experimental results showed that 12 subjects could successfully complete robot walking tasks in a maze, which verified the applicability of the AR-BCI system in complex environments and helped to advance robot control technology [Citation69]. Chen et al. proposed an asynchronous robot arm control system built on SSVEP-based AR-BCI, and all 8 subjects in the experiment could control the robot arm to complete a jigsaw puzzle [Citation70]. Arpaia et al. integrated medical detection equipment, an EEG acquisition device, and AR glasses to allow anesthesiologists to observe patients’ vital signs gathered from electronic medical equipment in real time and thus complete anesthetic work more efficiently [Citation38]. In medical practice, we look forward to the rapid deployment and progress of SSVEP-based AR-BCI technology, which can free doctors’ hands and eyes from the keyboard and screen [Citation71].

5. Future prospects of AR-BCI

Table 1 lists papers on AR-BCI published between 2019 and 2022. The following research trends emerge when it is compared with the review by Si-Mohammed et al. [Citation85]. As shown in Table 1, (1) in the early days, half of the studies used P300 and MI as the BCI paradigm, while most recent AR-BCI studies are based on the SSVEP paradigm. (2) Regarding the display mode of AR devices, 75% of the early studies chose the video see-through (VST) type, but in recent years OST-based AR devices have dominated. (3) Half of the early AR-BCI studies required computers or mobile phones to achieve the augmented display, while recent studies mainly choose head-mounted HoloLens glasses as the display device. This trend shows that AR-BCI is developing in the direction of immersion, efficient interaction, and portability, which is exactly what BCI technology needs in real application environments and is indispensable for the development of the metaverse.

Table 1. Overview of previous systems combining AR and BCIs.

AR-BCI technology is admired by a few people and feared by many, but it is destined to be integrated into everyone’s life in the future. Every revolutionary technological innovation follows the same historical pattern: a few people promote it, the majority gradually accept it, and in the end everyone adopts it, whether actively, passively, or reluctantly; this is the unstoppable movement of the wheel of history. The metaverse, which is based on BCI and AR technology, is still very much at the cutting edge. An ordinary person who does not deliberately follow the field may still picture it at the level of artificial exoskeletons helping paralyzed patients move their fingers. Yet brain-computer interconnection in the AR environment will eventually be a revolution that changes the definition of the human species and the organizational structure of human society.

While AR-BCI technology holds promise, it faces certain limitations and technical constraints. Presently, challenges persist in terms of accuracy and stability due to environmental interference, individual variability, and inherent noise during brain signal acquisition and processing. Additionally, the computational demand of processing extensive brain signal data in real time poses constraints, particularly in resource-constrained or mobile device applications. The need for personalization and adaptation also remains a hurdle, as broadly applicable AR-BCI systems still require tailored training and adaptation to individual brain signal patterns. Addressing these limitations may require advances in algorithmic sophistication and computational power. Furthermore, there is significant room for improvement in EEG acquisition equipment, such as more accurate and convenient dry-electrode EEG caps and in-ear electrodes, both of which can enhance the usability of EEG acquisition systems.

6. Conclusion

This review focuses on AR-BCI technology, which helps to promote the evolution of the metaverse. First, the composition of the metaverse and its future development direction are summarized. Then, on the basis of the characteristics and development history of BCI and AR technologies, AR-BCI, which combines the advantages of the two, is introduced. The principles and application scenarios of three important AR-BCI paradigms, namely P300, MI, and SSVEP, are reviewed next. Finally, future research directions for AR-BCI are proposed.

Acknowledgements

This work was supported by the STI 2030-Major Project (2022ZD0208500), and the Technology Project of Henan Province (222102310031).

Disclosure statement

No potential conflict of interest was reported by the author(s).

Correction Statement

This article has been corrected with minor changes. These changes do not impact the academic content of the article.

References

- Mystakidis S. Metaverse. Encyclopedia. 2022;2(1):486–497. doi: 10.3390/encyclopedia2010031.

- Lee S-H, Lee Y-E, Lee S-W. “Toward imagined speech based smart communication system: potential applications on metaverse conditions.” arXiv, Feb. 07, 2022. [cited 2022 Nov 2]. Available from: http://arxiv.org/abs/2112.08569.

- Wang H, Ning H, Lin Y, et al. A survey on the metaverse: the state-of-the-art, technologies, applications, and challenges. IEEE Internet Things J. 2023;10(16):14671–14688. doi: 10.1109/JIOT.2023.3278329.

- Dionisio JDN, Burns WG III, Gilbert R. 3D virtual worlds and the metaverse: current status and future possibilities. ACM Comput Surv. 2013;45(3):1–38. doi: 10.1145/2480741.2480751.

- Yang Q, Zhao Y, Huang H, et al. Fusing blockchain and AI with metaverse: a survey. IEEE Open J Comput Soc. 2022;3:122–136. doi: 10.1109/OJCS.2022.3188249.

- Bibri SE, Allam Z, Krogstie J. The metaverse as a virtual form of data-driven smart urbanism: platformization and its underlying processes, institutional dimensions, and disruptive impacts. Comput Urban Sci. 2022;2(1):24. doi: 10.1007/s43762-022-00051-0.

- Tlili A, Huang R, Shehata B, et al. Is metaverse in education a blessing or a curse: a combined content and bibliometric analysis. Smart Learn. Environ. 2022;9(1):24. doi: 10.1186/s40561-022-00205-x.

- van der Merwe DF. The metaverse as virtual heterotopia. Proceedings of the 3rd World Conference on Research in Social Sciences; Oct 2021. doi: 10.33422/3rd.socialsciencesconf.2021.10.61.

- Huang L, van G. Brain computer interface for epilepsy treatment. In: Fazel-Rezai, R, editor. Brain-computer interface systems - recent progress and future prospects. InTech; 2013. doi: 10.5772/55800.

- Birbaumer N. Breaking the silence: brain–computer interfaces (BCI) for communication and motor control. Psychophysiology. 2006;43(6):517–532. doi: 10.1111/j.1469-8986.2006.00456.x.

- Tang J, Liu Y, Hu D, et al. Towards BCI-actuated smart wheelchair system. Biomed Eng Online. 2018;17(1):111. doi: 10.1186/s12938-018-0545-x.

- Chen X, Zhao B, Wang Y, et al. Combination of high-frequency SSVEP-based BCI and computer vision for controlling a robotic arm. J Neural Eng. 2019;16(2):026012. doi: 10.1088/1741-2552/aaf594.

- Chai X, Zhang Z, Guan K, et al. A hybrid BCI-controlled smart home system combining SSVEP and EMG for individuals with paralysis. Biomed Signal Process Control. 2020;56:101687. doi: 10.1016/j.bspc.2019.101687.

- Lin JS, Wang M, Lia PY, et al. An SSVEP-based BCI system for SMS in a mobile phone. AMM. 2014;513-517:412–415. doi: 10.4028/www.scientific.net/AMM.513-517.412.

- Farwell LA, Donchin E. Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr Clin Neurophysiol. 1988;70(6):510–523. doi: 10.1016/0013-4694(88)90149-6.

- Wolpaw JR, Birbaumer N, McFarland DJ, et al. Brain–computer interfaces for communication and control. Clin Neurophysiol. 2002;113(6):767–791.

- Wolpaw JR, McFarland DJ, Vaughan TM. Brain-computer interface research at the Wadsworth Center. IEEE Trans Rehabil Eng. 2000;8(2):222–226. doi: 10.1109/86.847823.

- Wolpaw JR, Birbaumer N, Heetderks WJ, et al. Brain-computer interface technology: a review of the first international meeting. IEEE Trans Rehabil Eng. 2000;8(2):164–173. doi: 10.1109/TRE.2000.847807.

- Gao X, Xu D, Cheng M, et al. A BCI-based environmental controller for the motion-disabled. IEEE Trans Neural Syst Rehabil Eng. 2003;11(2):137–140. doi: 10.1109/TNSRE.2003.814449.

- Pfurtscheller G, Neuper C, Muller GR, et al. Graz-BCI: state of the art and clinical applications. IEEE Trans Neural Syst Rehabil Eng. 2003;11(2):1–4. doi: 10.1109/TNSRE.2003.814454.

- Serby H, Yom-Tov E, Inbar GF. An improved P300-based brain-computer interface. IEEE Trans Neural Syst Rehabil Eng. 2005;13(1):89–98. doi: 10.1109/TNSRE.2004.841878.

- Schalk G, McFarland DJ, Hinterberger T, et al. BCI2000: a general-purpose brain-computer interface (BCI) system. IEEE Trans Biomed Eng. 2004;51(6):1034–1043. doi: 10.1109/TBME.2004.827072.

- Hochberg LR, Bacher D, Jarosiewicz B, et al. Reach and grasp by people with tetraplegia using a neurally controlled robotic arm. Nature. 2012;485(7398):372–375. doi: 10.1038/nature11076.

- Yao D, Zhang Y, Liu T, et al. Bacomics: a comprehensive cross area originating in the studies of various brain–apparatus conversations. Cogn Neurodyn. 2020;14(4):425–442. doi: 10.1007/s11571-020-09577-7.

- Takano K, Hata N, Kansaku K. Towards intelligent environments: an augmented reality–brain–machine interface operated with a see-through head-mount display. Front Neurosci. 2011;5:60. doi: 10.3389/fnins.2011.00060.

- Zhang R, Xu Z, Zhang L, et al. The effect of stimulus number on the recognition accuracy and information transfer rate of SSVEP–BCI in augmented reality. J Neural Eng. 2022;19(3):036010. doi: 10.1088/1741-2552/ac6ae5.

- Zhang R, Cao L, Xu Z, et al. Improving AR-SSVEP recognition accuracy under high ambient brightness through iterative learning. IEEE Trans Neural Syst Rehabil Eng. 2023;31:1796–1806. doi: 10.1109/TNSRE.2023.3260842.

- Carmigniani J, Furht B. Augmented reality: an overview. In: Furht B, editor. Handbook of augmented reality. New York, NY: Springer New York; 2011. pp. 3–46. doi: 10.1007/978-1-4614-0064-6_1.

- Lin J, Cheng D, Yao C, et al. Retinal projection head-mounted display. Front Optoelectron. 2017;10(1):1–8. doi: 10.1007/s12200-016-0662-8.

- Jang C, Bang K, Moon S, et al. Retinal 3D: augmented reality near-eye display via pupil-tracked light field projection on retina. ACM Trans Graph. 2017;36(6):1–13. doi: 10.1145/3130800.3130889.

- Peillard E, Itoh Y, Moreau G, et al. Can retinal projection displays improve spatial perception in augmented reality? 2020 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), 2020, pp. 80–89. doi: 10.1109/ISMAR50242.2020.00028.

- Xia T, Lou Y, Hu J, et al. Simplified retinal 3D projection rendering method and system. Appl Opt. 2022;61(9):2382–2390. doi: 10.1364/AO.451482.

- Belcher D, Billinghurst M, Hayes SE, et al. Using augmented reality for visualizing complex graphs in three dimensions. Second IEEE and ACM International Symposium on Mixed and Augmented Reality, 2003. Proceedings, Tokyo, Japan, 2003, pp. 84–93. doi: 10.1109/ISMAR.2003.1240691.

- Carmigniani J, Furht B, Anisetti M, et al. Augmented reality technologies, systems and applications. Multimed Tools Appl. 2011;51(1):341–377. doi: 10.1007/s11042-010-0660-6.

- Lee B, Jo Y, Yoo D, et al. Recent progresses of near-eye display for AR and VR. Proc SPIE. Jun. 2021;11785:1178503. doi: 10.1117/12.2596128.

- Siriwardhana Y, Porambage P, Liyanage M, et al. A survey on mobile augmented reality with 5G mobile edge computing: architectures, applications, and technical aspects. IEEE Commun Surv Tutorials. 2021;23(2):1160–1192. doi: 10.1109/COMST.2021.3061981.

- Condino S, Montemurro N, Cattari N, et al. Evaluation of a wearable AR platform for guiding complex craniotomies in neurosurgery. Ann Biomed Eng. 2021;49(9):2590–2605. doi: 10.1007/s10439-021-02834-8.

- Arpaia P, De Benedetto E, Duraccio L. Design, implementation, and metrological characterization of a wearable, integrated AR-BCI hands-free system for health 4.0 monitoring. Measurement. 2021;177:109280. doi: 10.1016/j.measurement.2021.109280.

- Condino S, Fida B, Carbone M, et al. Wearable augmented reality platform for aiding complex 3D trajectory tracing. Sensors. 2020;20(6):1612. doi: 10.3390/s20061612.

- Mladenov B, Damiani L, Giribone P, et al. A short review of the SDKs and wearable devices to be used for AR application for industrial working environment. Proceedings of the World Congress on Engineering and Computer Science 2018 Vol I WCECS 2018; 2018. p. 6.

- Starner T, Mann S, Rhodes B, et al. Augmented reality through wearable computing. Presence: Teleoperators Virtual Environ. 1997;6(4):386–398. doi: 10.1162/pres.1997.6.4.386.

- Cascio WF, Montealegre R. How technology is changing work and organizations. Annu Rev Organ Psychol Organ Behav. 2016;3(1):349–375. doi: 10.1146/annurev-orgpsych-041015-062352.

- Billinghurst M, Clark A, Lee G. A survey of augmented reality. FNT Human–Computer Interact. 2015;8(2-3):73–272. doi: 10.1561/1100000049.

- Nikitenko MS, Zhuravlev SS, Rudometov SV, et al. Walking support control system algorithms testing with brain-computer interface (BCI) and augmented reality (AR) technology integration. IOP Conf Ser: Earth Environ Sci. 2018;206(1):012043. doi: 10.1088/1755-1315/206/1/012043.

- Bohannon LS, Herbert AM, Pelz JB, et al. Eye contact and video-mediated communication: a review. Displays. 2013;34(2):177–185. doi: 10.1016/j.displa.2012.10.009.

- Blum T, Stauder R, Euler E, et al. Superman-like X-ray vision: towards brain-computer interfaces for medical augmented reality. 2012 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Atlanta, GA, USA, Nov. 2012, pp. 271–272. doi: 10.1109/ISMAR.2012.6402569.

- Arquissandas P, Lamas D, Oliveira J. Augmented reality and sensory technology for treatment of anxiety disorders. 2019 14th Iberian Conference on Information Systems and Technologies (CISTI), Coimbra, Portugal, Jun. 2019, pp. 1–4. doi: 10.23919/CISTI.2019.8760859.

- Barresi G, Olivieri E, Caldwell DG, et al. Brain-controlled AR feedback design for user’s training in surgical HRI. 2015 IEEE International Conference on Systems, Man, and Cybernetics, Kowloon Tong, Hong Kong, Oct. 2015, pp. 1116–1121. doi: 10.1109/SMC.2015.200.

- Zeng H, Wang Y, Wu C, et al. Closed-loop hybrid gaze brain-machine interface based robotic arm control with augmented reality feedback. Front Neurorobot. 2017;11:60. doi: 10.3389/fnbot.2017.00060.

- Picton T. The P300 wave of the human event-related potential. J Clin Neurophysiol. 1992;9(4):456–479. doi: 10.1097/00004691-199210000-00002.

- Pritchard WS. Psychophysiology of P300. Psychol Bull. 1981;89(3):506–540. doi: 10.1037/0033-2909.89.3.506.

- Thomas E, Fruitet J, Clerc M. Investigating brief motor imagery for an ERD/ERS based BCI. Annu Int Conf IEEE Eng Med Biol Soc. 2012;2012:2929–2932. doi: 10.1109/EMBC.2012.6346577.

- Xiao X, Xu M, Han J, et al. Enhancement for P300-speller classification using multi-window discriminative canonical pattern matching. J Neural Eng. 2021;18(4):046079. doi: 10.1088/1741-2552/ac028b.

- Lenhardt A, Ritter H. An augmented-reality based brain-computer interface for robot control. In: Wong KW, Mendis BSU, Bouzerdoum A, editors. Neural information processing. Models and applications. Vol. 6444. Berlin, Heidelberg: Springer Berlin Heidelberg; 2010. pp. 58–65. doi: 10.1007/978-3-642-17534-3_8.

- Chen X, Huang X, Wang Y, et al. Combination of augmented reality based brain-computer interface and computer vision for high-level control of a robotic arm. IEEE Trans Neural Syst Rehabil Eng. 2020;28(12):3140–3147. doi: 10.1109/TNSRE.2020.3038209.

- Kim S, Lee S, Kang H, et al. P300 brain–computer interface-based drone control in virtual and augmented reality. Sensors. 2021;21(17):5765. doi: 10.3390/s21175765.

- Kosmyna N, Wu Q, Hu C-Y, et al. Assessing internal and external attention in AR using brain computer interfaces: a pilot study. 2021 IEEE 17th International Conference on Wearable and Implantable Body Sensor Networks (BSN), Athens, Greece, Jul. 2021, pp. 1–6. doi: 10.1109/BSN51625.2021.9507034.

- Li P, Gao X, Li C, et al. Granger causal inference based on dual Laplacian distribution and its application to MI-BCI classification. IEEE Trans Neural Netw Learn Syst. 2023:1–15. doi: 10.1109/TNNLS.2023.3292179.

- Chin ZY, Ang KK, Wang C, et al. Online performance evaluation of motor imagery BCI with augmented-reality virtual hand feedback. Annu Int Conf IEEE Eng Med Biol Soc. 2010;2010:3341–3344. doi: 10.1109/IEMBS.2010.5627911.

- Choi J, Jo S. Application of hybrid brain-computer interface with augmented reality on quadcopter control. 2020 8th International Winter Conference on Brain-Computer Interface (BCI), Feb. 2020, pp. 1–5. doi: 10.1109/BCI48061.2020.9061659.

- Al-Qaysi ZT, Ahmed MA, Hammash NM, et al. Systematic review of training environments with motor imagery brain–computer interface: coherent taxonomy, open issues and recommendation pathway solution. Health Technol. 2021;11(4):783–801. doi: 10.1007/s12553-021-00560-8.

- Gao S, Wang Y, Gao X, et al. Visual and auditory brain–computer interfaces. IEEE Trans Biomed Eng. 2014;61(5):1436–1447. doi: 10.1109/TBME.2014.2300164.

- Lin Z, Zhang C, Wu W, et al. Frequency recognition based on canonical correlation analysis for SSVEP-based BCIs. IEEE Trans Biomed Eng. 2006;53(12 Pt 2):2610–2614. doi: 10.1109/TBME.2006.886577.

- Müller-Putz GR, Pfurtscheller G. Control of an electrical prosthesis with an SSVEP-based BCI. IEEE Trans Biomed Eng. 2008;55(1):361–364. doi: 10.1109/TBME.2007.897815.

- Horii S, Nakauchi S, Kitazaki M. AR-SSVEP for brain-machine interface: estimating user’s gaze in head-mounted display with USB camera. 2015 IEEE Virtual Reality (VR), Mar. 2015, pp. 193–194. doi: 10.1109/VR.2015.7223361.

- Faller J, Allison BZ, Brunner C, Scherer R, Schmalstieg D, Pfurtscheller G, Neuper C. A feasibility study on SSVEP-based interaction with motivating and immersive virtual and augmented reality. arXiv preprint arXiv:1701.03981; 2017.

- Si-Mohammed H, Petit J, Jeunet C, et al. Towards BCI-based interfaces for augmented reality: feasibility, design and evaluation. IEEE Trans Vis Comput Graph. 2020;26(3):1608–1621. doi: 10.1109/TVCG.2018.2873737.

- Ke Y, Liu P, An X, et al. An online SSVEP-BCI system in an optical see-through augmented reality environment. J Neural Eng. 2020;17(1):016066. doi: 10.1088/1741-2552/ab4dc6.

- Zhang S, Gao X, Chen X. Humanoid robot walking in maze controlled by SSVEP-BCI based on augmented reality stimulus. Front Hum Neurosci. 2022;16:908050. doi: 10.3389/fnhum.2022.908050.

- Zhao X, Liu C, Xu Z, et al. SSVEP stimulus layout effect on accuracy of brain-computer interfaces in augmented reality glasses. IEEE Access. 2020;8:5990–5998. doi: 10.1109/ACCESS.2019.2963442.

- Wang J, Suenaga H, Hoshi K, et al. Augmented reality navigation with automatic marker-free image registration using 3-D image overlay for dental surgery. IEEE Trans Biomed Eng. 2014;61(4):1295–1304. doi: 10.1109/TBME.2014.2301191.

- Liu P, et al. An SSVEP-BCI in augmented reality. 41st Annu Int Conf IEEE Eng Med Biol Soc (EMBC), Oct. 2019. doi: 10.1109/embc.2019.8857859.

- Angrisani L, Arpaia P, Esposito A, et al. A wearable brain–computer interface instrument for augmented reality-based inspection in industry 4.0. IEEE Trans Instrum Meas. 2020;69(4):1530–1539. doi: 10.1109/TIM.2019.2914712.

- Kerous B, Liarokapis F. BrainChat - a collaborative augmented reality brain interface for message communication. 2017 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct), Nantes, France, Oct. 2017, pp. 279–283. doi: 10.1109/ISMAR-Adjunct.2017.91.

- Park S, Cha H-S, Im C-H. Development of an online home appliance control system using augmented reality and an SSVEP-based brain–computer interface. IEEE Access. 2019;7:163604–163614. doi: 10.1109/ACCESS.2019.2952613.

- Tang Z, Sun S, Zhang S, et al. A brain-machine interface based on ERD/ERS for an upper-limb exoskeleton control. Sensors. 2016;16(12):2050. doi: 10.3390/s16122050.

- Arpaia P, De Benedetto E, Donato N, et al. A wearable SSVEP BCI for AR-based, real-time monitoring applications. 2021 IEEE International Symposium on Medical Measurements and Applications (MeMeA), 2021, pp. 1–6. doi: 10.1109/MeMeA52024.2021.9478593.

- Ravi A, Lu J, Pearce S, et al. Asynchronous SSMVEP BCI and influence of dynamic background in augmented reality. 2021 27th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), Nov. 2021, pp. 340–343. doi: 10.1109/M2VIP49856.2021.9665078.

- Chen L, Chen P, Zhao S, et al. Adaptive asynchronous control system of robotic arm based on augmented reality-assisted brain–computer interface. J Neural Eng. 2021;18(6):066005. doi: 10.1088/1741-2552/ac3044.

- Zhao X, Du Y, Zhang R. A CNN-based multi-target fast classification method for AR-SSVEP. Comput Biol Med. 2022;141:105042. doi: 10.1016/j.compbiomed.2021.105042.

- Zhang S, Chen Y, Zhang L, et al. Study on robot grasping system of SSVEP-BCI based on augmented reality stimulus. Tsinghua Sci Technol. 2023;28(2):322–329. doi: 10.26599/TST.2021.9010085.

- Si-Mohammed H, Haumont C, Sanchez A. Designing functional prototypes combining BCI and AR for home automation. Virtual Real Mix Real. p. 3–21.

- Andrews A. Integration of augmented reality and brain-computer interface technologies for health care applications: exploratory and prototyping study. JMIR Form Res. 2022;6(4):e18222. doi: 10.2196/18222.

- Andrews A. Mind power: thought-controlled augmented reality for basic science education. Med Sci Educ. 2022;32(6):1571–1573. doi: 10.1007/s40670-022-01659-x.

- Si-Mohammed H, Argelaguet F, Casiez G, et al. Brain-computer interfaces and augmented reality: a state of the art. Graz Brain-Computer Interface Conference, 2017. Available from: https://api.semanticscholar.org/CorpusID:322089.